forked from apache/spark

sync #17

Merged

… task

### What changes were proposed in this pull request?

This PR is a follow-up of #26624. It cleans up MDC properties if the original value is empty. In addition, it logs a warning and ignores the value when the user tries to override the value of `taskName`.

### Why are the changes needed?

Before this PR, after running the following jobs:

```scala
sc.setLocalProperty("mdc.my", "ABC")
sc.parallelize(1 to 100).count()
sc.setLocalProperty("mdc.my", null)
sc.parallelize(1 to 100).count()
```

the MDC value "ABC" still appears in the log of the second count job, even though the value has been unset.

### Does this PR introduce _any_ user-facing change?

Yes. Users will 1) no longer see MDC values after unsetting them; 2) see a warning if they try to override the value of `taskName`.

### How was this patch tested?

Tested manually.

Closes #28756 from Ngone51/followup-8981.

Authored-by: yi.wu <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
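The cleanup behavior can be illustrated with a minimal sketch. It assumes a plain mutable map stands in for log4j's MDC and that local properties arrive as a `Map[String, String]` whose unset values are `null`. `MdcSketch` and `applyMdcProperties` are hypothetical names, and clearing the map before every task is only one simple way to avoid stale values, not necessarily what Spark's executor does.

```scala
import scala.collection.mutable

object MdcSketch {
  // Hypothetical prefix that marks MDC entries among the local properties.
  val MdcPrefix = "mdc."

  /** Copies `mdc.*` properties into `mdc`, skipping unset (null or empty) values
    * and refusing to override the reserved `taskName` key. Returns any warnings. */
  def applyMdcProperties(
      props: Map[String, String],
      mdc: mutable.Map[String, String],
      taskName: String): Seq[String] = {
    val warnings = mutable.ArrayBuffer.empty[String]
    // Start from a clean slate so values unset since the previous task do not leak.
    mdc.clear()
    mdc("taskName") = taskName
    props.foreach { case (key, value) =>
      if (key.startsWith(MdcPrefix)) {
        val name = key.stripPrefix(MdcPrefix)
        if (name == "taskName") {
          warnings += s"Ignoring user-provided value for reserved MDC key '$name'"
        } else if (value != null && value.nonEmpty) {
          mdc(name) = value
        }
      }
    }
    warnings.toSeq
  }
}
```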

… conversion

### What changes were proposed in this pull request?

This PR adds a new rule that supports pushing predicates through joins by rewriting the join condition to CNF (conjunctive normal form). The following example shows the steps of this rule:

1. Prepare tables:

```sql
CREATE TABLE x(a INT);
CREATE TABLE y(b INT);
...
SELECT * FROM x JOIN y ON ((a < 0 and a > b) or a > 10);
```

2. Convert the join condition to CNF:

```
(a < 0 or a > 10) and (a > b or a > 10)
```

3. Split the conjunctive predicates:

| Predicates |
| --- |
| (a < 0 or a > 10) |
| (a > b or a > 10) |

4. Push down the predicates. Only `(a < 0 or a > 10)` references columns of `x` alone, so only it can be pushed to `x`:

| Table | Predicate |
| --- | --- |
| x | (a < 0 or a > 10) |

### Why are the changes needed?

Improve query performance. PostgreSQL, [Impala](https://issues.apache.org/jira/browse/IMPALA-9183) and Hive support this feature.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test and benchmark test.

| SQL | Before this PR | After this PR |
| --- | --- | --- |
| TPCDS 5T Q13 | 84s | 21s |
| TPCDS 5T q85 | 66s | 34s |
| TPCH 1T q19 | 37s | 32s |

Closes #28733 from gengliangwang/cnf.

Lead-authored-by: Gengliang Wang <[email protected]>

Co-authored-by: Yuming Wang <[email protected]>

Signed-off-by: Gengliang Wang <[email protected]>
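The four steps can be sketched over a toy predicate tree. This is a minimal sketch assuming a hypothetical `Pred` ADT in place of Catalyst expressions, with each atom carrying the set of columns it references; it is not the rule added by this PR. Naive distribution of OR over AND can grow exponentially, so a production rule would also need a size limit.

```scala
object CnfSketch {
  // Hypothetical predicate tree; `refs` lists the columns an atom references.
  sealed trait Pred
  final case class Atom(text: String, refs: Set[String]) extends Pred
  final case class And(l: Pred, r: Pred) extends Pred
  final case class Or(l: Pred, r: Pred) extends Pred

  /** Converts to CNF by distributing OR over AND (step 2). */
  def toCnf(p: Pred): Pred = p match {
    case And(l, r) => And(toCnf(l), toCnf(r))
    case Or(l, r) =>
      (toCnf(l), toCnf(r)) match {
        case (And(a, b), c) => toCnf(And(Or(a, c), Or(b, c)))
        case (a, And(b, c)) => toCnf(And(Or(a, b), Or(a, c)))
        case (a, b)         => Or(a, b)
      }
    case atom => atom
  }

  /** Splits a conjunction into its conjuncts (step 3). */
  def conjuncts(p: Pred): Seq[Pred] = p match {
    case And(l, r) => conjuncts(l) ++ conjuncts(r)
    case other     => Seq(other)
  }

  def refs(p: Pred): Set[String] = p match {
    case Atom(_, r) => r
    case And(l, r)  => refs(l) ++ refs(r)
    case Or(l, r)   => refs(l) ++ refs(r)
  }

  /** Conjuncts that reference only `tableCols` can be pushed to that side (step 4). */
  def pushable(cond: Pred, tableCols: Set[String]): Seq[Pred] =
    conjuncts(toCnf(cond)).filter(c => refs(c).subsetOf(tableCols))
}
```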

### What changes were proposed in this pull request?

This PR intends to add a built-in SQL function, `WIDTH_BUCKET`. It is a rework of #18323.

Closes #18323

References for `WIDTH_BUCKET` in other RDBMSs:

- Oracle: https://docs.oracle.com/cd/B28359_01/olap.111/b28126/dml_functions_2137.htm#OLADM717
- PostgreSQL: https://www.postgresql.org/docs/current/functions-math.html
- Snowflake: https://docs.snowflake.com/en/sql-reference/functions/width_bucket.html
- Prestodb: https://prestodb.io/docs/current/functions/math.html
- Teradata: https://docs.teradata.com/reader/kmuOwjp1zEYg98JsB8fu_A/Wa8vw69cGzoRyNULHZeudg
- DB2: https://www.ibm.com/support/producthub/db2/docs/content/SSEPGG_11.5.0/com.ibm.db2.luw.sql.ref.doc/doc/r0061483.html?pos=2

### Why are the changes needed?

For better usability.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added unit tests.

Closes #28764 from maropu/SPARK-21117.

Lead-authored-by: Takeshi Yamamuro <[email protected]>

Co-authored-by: Yuming Wang <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>
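The bucketing semantics can be sketched as follows, following the behavior PostgreSQL documents: bucket 0 below the range, `numBucket + 1` at or above the upper bound, and buckets counted downward when `min > max`. `widthBucket` is a hypothetical name, and returning `None` for invalid arguments is this sketch's assumption; Spark's exact edge-case handling should be checked against its function reference.

```scala
object WidthBucketSketch {
  /** Equi-width bucket number for `value` over [min, max) split into `numBucket` buckets. */
  def widthBucket(value: Double, min: Double, max: Double, numBucket: Long): Option[Long] = {
    val invalid = numBucket <= 0 || numBucket == Long.MaxValue || value.isNaN ||
      min == max || min.isNaN || min.isInfinite || max.isNaN || max.isInfinite
    if (invalid) None
    else if (min < max) {
      if (value < min) Some(0L)
      else if (value >= max) Some(numBucket + 1)
      else Some((numBucket * (value - min) / (max - min)).toLong + 1)
    } else {
      // Reversed range: buckets are numbered downward starting from `min`.
      if (value > min) Some(0L)
      else if (value <= max) Some(numBucket + 1)
      else Some((numBucket * (min - value) / (min - max)).toLong + 1)
    }
  }
}
```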

### What changes were proposed in this pull request?

This PR upgrades HtmlUnit. Selenium and Jetty are also upgraded because of dependencies.

### Why are the changes needed?

Recently, a security issue affecting HtmlUnit was reported: https://nvd.nist.gov/vuln/detail/CVE-2020-5529

According to the report, malicious users can run arbitrary code. HtmlUnit is used only in tests, so the impact might not be large, but it's better to upgrade it just in case.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing test cases.

Closes #28585 from sarutak/upgrade-htmlunit.

Authored-by: Kousuke Saruta <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

…eption

### What changes were proposed in this pull request?

A minor fix to the `append` method of `StringConcat` that caps the length at `MAX_ROUNDED_ARRAY_LENGTH`, so that the length does not overflow and cause a `StringIndexOutOfBoundsException`.

Thanks to **Jeffrey Stokes** for reporting the issue and explaining the underlying problem in detail in the JIRA.

### Why are the changes needed?

This fixes a `StringIndexOutOfBoundsException` caused by an overflow.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added a test in StringsUtilSuite.

Closes #28750 from dilipbiswal/SPARK-31916.

Authored-by: Dilip Biswal <[email protected]>

Signed-off-by: Takeshi Yamamuro <[email protected]>
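A minimal sketch of the capping idea, using a hypothetical `CappedConcat` class rather than Spark's `StringConcat`. It assumes the cap is `Int.MaxValue - 15`, which is our understanding of `ByteArrayMethods.MAX_ROUNDED_ARRAY_LENGTH`; treat the exact value as illustrative. Without the cap, the running `Int` length can wrap negative, which makes `maxLength - length` overflow and the `substring` call throw.

```scala
import scala.collection.mutable.ArrayBuffer

object StringConcatSketch {
  // Assumed value of the cap; illustrative only.
  val LengthCap: Int = Int.MaxValue - 15

  /** Adds in Long arithmetic and then caps, so the result never wraps negative. */
  def cappedAdd(length: Int, extra: Int): Int =
    math.min(length.toLong + extra, LengthCap.toLong).toInt

  /** Hypothetical concatenator that keeps at most `maxLength` characters while
    * tracking the (capped) total length of everything appended. */
  final class CappedConcat(maxLength: Int = LengthCap) {
    private val parts = ArrayBuffer.empty[String]
    private var length = 0

    def atLimit: Boolean = length >= maxLength

    def append(s: String): Unit = if (s != null) {
      if (!atLimit) {
        val available = maxLength - length // positive because we are below the limit
        parts += (if (s.length <= available) s else s.substring(0, available))
      }
      length = cappedAdd(length, s.length)
    }

    def totalLength: Int = length
    override def toString: String = parts.mkString
  }
}
```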

### What changes were proposed in this pull request?

This PR normalizes all binary comparison expressions when comparing plans.

### Why are the changes needed?

To improve the test framework; otherwise, the following test fails:

```scala
test("SPARK-31912 Normalize all binary comparison expressions") {
  val original = testRelation
    .where('a === 'b && Literal(13) >= 'b).as("x")
  val optimized = testRelation
    .where(IsNotNull('a) && IsNotNull('b) && 'a === 'b && 'b <= 13 && 'a <= 13).as("x")
  comparePlans(Optimize.execute(original.analyze), optimized.analyze)
}
```
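The normalization idea can be sketched on a hypothetical mini expression tree (`Col`, `Lit`, `Cmp` are not Catalyst classes): order each comparison's operands by a stable key and flip the operator when the operands are swapped, so `13 >= b` and `b <= 13` become the same tree. The string key used for ordering is this sketch's assumption, not how Spark's plan comparison orders operands.

```scala
object NormalizeComparisonSketch {
  // Hypothetical mini expression tree.
  sealed trait Expr { def key: String }
  final case class Col(name: String) extends Expr { def key = s"col:$name" }
  final case class Lit(value: Int) extends Expr { def key = s"lit:$value" }
  final case class Cmp(op: String, left: Expr, right: Expr) extends Expr {
    def key = s"(${left.key} $op ${right.key})"
  }

  // Operator to use after swapping operands: a >= b is equivalent to b <= a.
  private val flipped = Map("=" -> "=", "<" -> ">", ">" -> "<", "<=" -> ">=", ">=" -> "<=")

  /** Puts the operand with the smaller key on the left, flipping the operator if needed. */
  def normalize(e: Expr): Expr = e match {
    case Cmp(op, l, r) =>
      val (nl, nr) = (normalize(l), normalize(r))
      if (nl.key.compareTo(nr.key) <= 0) Cmp(op, nl, nr) else Cmp(flipped(op), nr, nl)
    case other => other
  }
}
```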

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manual test.

Closes #28734 from wangyum/SPARK-31912.

Authored-by: Yuming Wang <[email protected]>

Signed-off-by: Yuming Wang <[email protected]>

…while switching standard time zone offset

### What changes were proposed in this pull request?

Fix the bug in microseconds rebasing during transitions from one standard time zone offset to another. In this PR, I propose to change the implementation of `rebaseGregorianToJulianMicros`, which performs rebasing via local timestamps. In the case of overlapping:

1. Check whether the original instant is the earlier or the later instant of the overlapped local timestamp.
2. If it is the earlier instant, take the zone and DST offsets from the previous day; otherwise, take them from the next day.
3. Set those time zone offsets on the Julian timestamp.

Note: The fix assumes that transitions cannot happen more often than once per 2 days.

### Why are the changes needed?

The current implementation handles overlapping timestamps only during daylight saving time transitions, but overlapping can also happen during a transition from one standard time zone offset to another. For example, in `Asia/Hong_Kong`, the time zone switched from `Japan Standard Time` (UTC+9) to `Hong Kong Time` (UTC+8) on _Sunday, 18 November, 1945 01:59:59 AM_. The changes handle this special case as well.

### Does this PR introduce _any_ user-facing change?

It might affect micros rebasing before the common era when the non-optimized version of `rebaseGregorianToJulianMicros()` is used directly.

### How was this patch tested?

1. By existing tests in `DateTimeUtilsSuite`, `RebaseDateTimeSuite`, `DateFunctionsSuite`, `DateExpressionsSuite` and `TimestampFormatterSuite`.
2. Added a new test to `RebaseDateTimeSuite`.
3. Regenerated `gregorian-julian-rebase-micros.json` with a step of 30 minutes and got the same JSON file. The JSON file isn't affected because it was previously generated with a step of 1 week, and the spike in diffs/switch points during the 1 hour of timestamp overlapping wasn't detected.

Closes #28787 from MaxGekk/HongKong-tz-1945.

Authored-by: Max Gekk <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
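Step 1 above (deciding whether an instant is the earlier or the later occurrence of an overlapped local timestamp) can be sketched with `java.time` alone. This is a minimal sketch, not Spark's `rebaseGregorianToJulianMicros`; `OverlapSketch` and `overlapSide` are hypothetical names. The usage check relies on the `America/Los_Angeles` DST overlap of 2019-11-03, because the 1945 `Asia/Hong_Kong` overlap depends on the tzdb version shipped with the JDK.

```scala
import java.time.{Instant, LocalDateTime, ZoneId}

object OverlapSketch {
  sealed trait Side
  case object Earlier extends Side
  case object Later extends Side

  /** If `instant` maps to a local timestamp that occurs twice in `zone`,
    * reports whether it is the earlier or the later occurrence. */
  def overlapSide(instant: Instant, zone: ZoneId): Option[Side] = {
    val local = LocalDateTime.ofInstant(instant, zone)
    // An overlapped local timestamp has two valid offsets.
    if (zone.getRules.getValidOffsets(local).size < 2) None
    else {
      val earlier = local.atZone(zone).withEarlierOffsetAtOverlap().toInstant
      Some(if (instant == earlier) Earlier else Later)
    }
  }
}
```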

…/Join

### What changes were proposed in this pull request?

Currently, nested column pruning is only pushed through a few operators, such as LIMIT and SAMPLE. This patch extends the feature to other operators, including RepartitionByExpression and Join.

### Why are the changes needed?

Nested column pruning is currently applied to only a few operators, which limits its benefit. Extending its coverage makes the feature apply more generally across different queries.

### Does this PR introduce _any_ user-facing change?

Yes. More SQL operators are covered by nested column pruning.

### How was this patch tested?

Added unit tests and end-to-end tests.

Closes #28556 from viirya/others-column-pruning.

Authored-by: Liang-Chi Hsieh <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>

This reverts commit 69ba9b6.

### What changes were proposed in this pull request?

#28747 reverted #28439 due to a flaky test case. This PR fixes the flaky test and adds pagination support.

### Why are the changes needed?

To support pagination for the Streaming tab.

### Does this PR introduce _any_ user-facing change?

Yes. Tables in the Streaming tab are now paginated.

### How was this patch tested?

Manually.

Closes #28748 from iRakson/fixstreamingpagination.

Authored-by: iRakson <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

… files

### What changes were proposed in this pull request?

Do not fail when the release script removes files that do not exist.

### Why are the changes needed?

This makes the release script more robust: we don't care whether the files exist before we remove them.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Tested when cutting the 3.0.0 RC.

Closes #28815 from cloud-fan/release.

Authored-by: Wenchen Fan <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request?

This PR intends to extract SQL reserved/non-reserved keywords directly from the ANTLR grammar file (`SqlBase.g4`). This approach is based on cloud-fan's suggestion: #28779 (comment)

### Why are the changes needed?

It is hard to maintain a full set of the keywords in `TableIdentifierParserSuite`, so it would be nice if we could extract them from the `SqlBase.g4` file directly.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #28802 from maropu/SPARK-31950-2.

Authored-by: Takeshi Yamamuro <[email protected]>

Signed-off-by: Takeshi Yamamuro <[email protected]>
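A rough sketch of extracting keyword literals from grammar text. The section markers and the `NAME: 'LITERAL';` rule shape below are assumptions for illustration, not a description of how `SqlBase.g4` is actually laid out or parsed by Spark's test suite; `KeywordExtractionSketch` is a hypothetical name.

```scala
object KeywordExtractionSketch {
  // Hypothetical markers delimiting the keyword section of a grammar file.
  val StartMarker = "//--KEYWORD-LIST-START"
  val EndMarker = "//--KEYWORD-LIST-END"

  // Matches lexer rules such as `ADD: 'ADD';` or `SETMINUS: 'MINUS';`
  // and captures the token name and its first literal.
  private val TokenRule = """^\s*([A-Z_]+)\s*:\s*'([A-Z_]+)'.*;\s*$""".r

  /** Returns the keyword literals declared between the two markers, in file order. */
  def extractKeywords(grammar: String): Seq[String] =
    grammar.linesIterator.toSeq
      .dropWhile(line => !line.trim.startsWith(StartMarker))
      .drop(1) // skip the start marker itself
      .takeWhile(line => !line.trim.startsWith(EndMarker))
      .collect { case TokenRule(_, literal) => literal }
}
```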

### What changes were proposed in this pull request?

This proposes a minor refactoring to match `NestedColumnAliasing` to `GeneratorNestedColumnAliasing` so it returns the pruned plan directly.

```scala
case p NestedColumnAliasing(nestedFieldToAlias, attrToAliases) =>
  NestedColumnAliasing.replaceToAliases(p, nestedFieldToAlias, attrToAliases)
```

vs

```scala
case GeneratorNestedColumnAliasing(p) => p
```
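The difference between the two extractor styles can be shown with a toy example. `Plan`, `PruningInfo` and `PrunedPlan` are hypothetical and unrelated to Catalyst; the point is only that an `unapply` which performs the rewrite lets the call site read `case PrunedPlan(pruned) => pruned`.

```scala
object ExtractorSketch {
  // Hypothetical stand-in for a logical plan node.
  final case class Plan(name: String, columns: Seq[String])

  // Old style: the extractor returns intermediate data and the caller rewrites.
  object PruningInfo {
    def unapply(p: Plan): Option[Seq[String]] = {
      val kept = p.columns.filterNot(_.startsWith("unused"))
      if (kept.size < p.columns.size) Some(kept) else None
    }
  }

  // New style: the extractor performs the rewrite and returns the pruned plan.
  object PrunedPlan {
    def unapply(p: Plan): Option[Plan] = p match {
      case PruningInfo(kept) => Some(p.copy(columns = kept))
      case _                 => None
    }
  }

  def rewrite(p: Plan): Plan = p match {
    case PrunedPlan(pruned) => pruned
    case other              => other
  }
}
```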

### Why are the changes needed?

Just for readability.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests should cover this.

Closes #28812 from HyukjinKwon/SPARK-31977.

Authored-by: HyukjinKwon <[email protected]>

Signed-off-by: Takeshi Yamamuro <[email protected]>

…ime regression

### What changes were proposed in this pull request?

After #28192, the job list page became very slow. For example, after the following operation, the UI can take more than 40 seconds to load:

```scala
(1 to 1000).foreach(_ => sc.parallelize(1 to 10).collect)
```

This is caused by a [performance issue of `vis-timeline`](visjs/vis-timeline#379). This serious issue affects both branch-3.0 and branch-2.4.

I tried a different version, 4.21.0, from https://cdnjs.com/libraries/vis. The infinite drawing issue also seems to be fixed if zoom is disabled by default.

### Why are the changes needed?

Fix the serious performance issue in the web UI by falling back to an earlier version of vis-timeline-graph2d.

### Does this PR introduce _any_ user-facing change?

Yes, it fixes the UI performance regression.

### How was this patch tested?

Manual test.

Closes #28806 from gengliangwang/downgradeVis.

Authored-by: Gengliang Wang <[email protected]>

Signed-off-by: Gengliang Wang <[email protected]>

### What changes were proposed in this pull request?

Add instance weight support in LinearRegressionSummary.

### Why are the changes needed?

LinearRegression and RegressionMetrics support instance weights. We should support instance weights in LinearRegressionSummary too.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added a new test.

Closes #28772 from huaxingao/lir_weight_summary.

Authored-by: Huaxin Gao <[email protected]>

Signed-off-by: Sean Owen <[email protected]>
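How instance weights enter a summary metric can be sketched with the weighted mean squared error, sum(w * (y - yhat)^2) / sum(w). This is a minimal sketch using a hypothetical `Obs` record; it is not Spark's `RegressionMetrics` code, and the summary exposes other metrics as well. With all weights equal to 1 it reduces to the unweighted MSE.

```scala
object WeightedMetricsSketch {
  // Hypothetical record: true label, model prediction, instance weight.
  final case class Obs(label: Double, prediction: Double, weight: Double)

  /** Weighted mean squared error: sum(w * (y - yhat)^2) / sum(w). */
  def weightedMse(obs: Seq[Obs]): Double = {
    val totalWeight = obs.map(_.weight).sum
    require(totalWeight > 0, "total weight must be positive")
    obs.map(o => o.weight * math.pow(o.label - o.prediction, 2)).sum / totalWeight
  }
}
```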

…e from the command line for sbt

### What changes were proposed in this pull request?

This PR proposes making the Guava version configurable from the command line for sbt.

### Why are the changes needed?

#28455 added this configurability for Maven but not for sbt. sbt is usually faster than Maven, so this is useful for developers.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I confirmed that the Guava version is changed with the following commands.

```
$ build/sbt "inspect tree clean" | grep guava
[info] +-spark/*:dependencyOverrides = Set(com.google.guava:guava:14.0.1, xerces:xercesImpl:2.12.0, jline:jline:2.14.6, org.apache.avro:avro:1.8.2)
```

```
$ build/sbt -Dguava.version=25.0-jre "inspect tree clean" | grep guava
[info] +-spark/*:dependencyOverrides = Set(com.google.guava:guava:25.0-jre, xerces:xercesImpl:2.12.0, jline:jline:2.14.6, org.apache.avro:avro:1.8.2)
```

Closes #28822 from sarutak/guava-version-for-sbt.

Authored-by: Kousuke Saruta <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…n application summary is unavailable

### What changes were proposed in this pull request?

This PR enriches the exception message when the application summary is not available. #28444 covers the case where application information is not available, but not the case where the application summary is not available.

### Why are the changes needed?

To complement #28444.

### Does this PR introduce _any_ user-facing change?

Yes. Before this change, accessing `/jobs` when the application summary is not available produced the following error message:

<img width="707" alt="no-such-element-exception-error-message" src="https://user-images.githubusercontent.com/4736016/84562182-6aadf200-ad8d-11ea-8980-d63edde6fad6.png">

After this change, we get the following error message, similar to what #28444 does:

<img width="1349" alt="enriched-errorm-message" src="https://user-images.githubusercontent.com/4736016/84562189-85806680-ad8d-11ea-8346-4da2ec11df2b.png">

### How was this patch tested?

I checked with the following procedure:

1. Set a breakpoint on the line `kvstore.write(appSummary)` in `AppStatusListener#onApplicationStart`. Only the thread reaching this line should be suspended.
2. Start spark-shell and wait a few seconds.
3. Access `/jobs`.

Closes #28820 from sarutak/fix-no-such-element.

Authored-by: Kousuke Saruta <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request?

A unit test is added.

A duplicate check for partition columns is added in `org.apache.spark.sql.execution.datasources.PartitioningUtils#validatePartitionColumn`.

### Why are the changes needed?

When users write data with duplicate partition columns, loading the written data later fails with `org.apache.spark.sql.AnalysisException: Found duplicate column ...`.

### Does this PR introduce _any_ user-facing change?

Yes.

It prevents users from writing data with duplicate partition columns.

1. Before the PR:

`df.write.partitionBy("b", "b").csv("file:///tmp/output")` appears to succeed,

but reading the output fails:

`spark.read.csv("file:///tmp/output").show()`

org.apache.spark.sql.AnalysisException: Found duplicate column(s) in the partition schema: `b`;

2. After the PR:

`df.write.partitionBy("b", "b").csv("file:///tmp/output")` fails immediately with:

org.apache.spark.sql.AnalysisException: Found duplicate column(s) b, b: `b`;
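The write-time check can be sketched as a plain function. This is a minimal sketch: `checkNoDuplicates` is a hypothetical name, `IllegalArgumentException` stands in for Spark's `AnalysisException`, the message format differs from Spark's, and case-insensitive comparison by default is an assumption based on `spark.sql.caseSensitive` defaulting to false.

```scala
import java.util.Locale

object PartitionColumnCheckSketch {
  /** Fails when a partition column name appears more than once. */
  def checkNoDuplicates(partitionColumns: Seq[String], caseSensitive: Boolean = false): Unit = {
    val names =
      if (caseSensitive) partitionColumns
      else partitionColumns.map(_.toLowerCase(Locale.ROOT))
    val duplicates = names.groupBy(identity).collect { case (name, hits) if hits.size > 1 => name }
    if (duplicates.nonEmpty) {
      throw new IllegalArgumentException(
        s"Found duplicate column(s) in the partition columns: ${duplicates.toSeq.sorted.mkString(", ")}")
    }
  }
}
```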

### How was this patch tested?

Unit test.

Closes #28814 from TJX2014/master-SPARK-31968.

Authored-by: TJX2014 <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

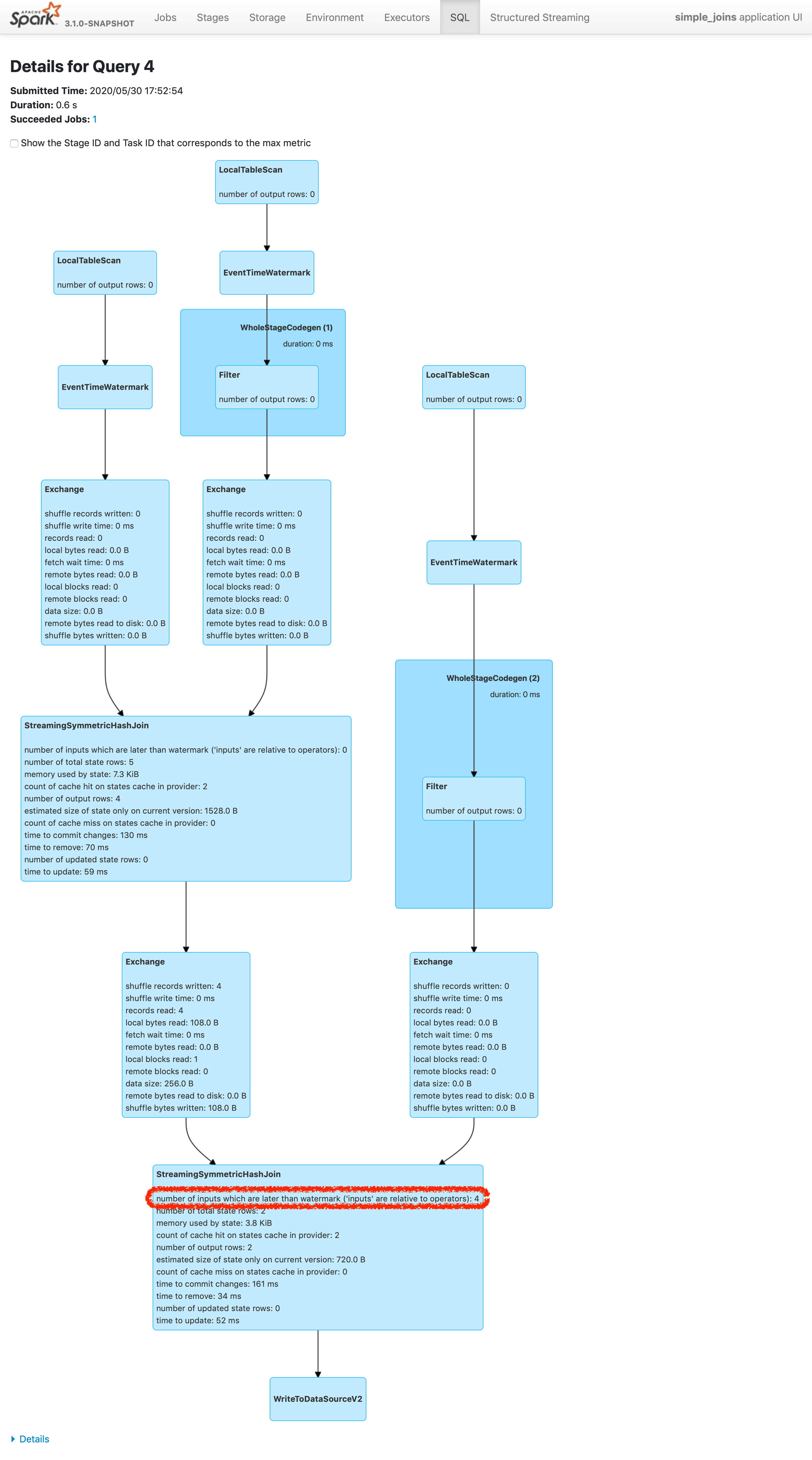

…han watermark plus allowed delay

### What changes were proposed in this pull request?

Please refer to https://issues.apache.org/jira/browse/SPARK-24634 for the rationale behind this issue.

This patch adds a new metric that counts the number of inputs that arrived later than the watermark plus the allowed delay. To keep the change simple, this patch doesn't count the exact number of input rows that are later than the watermark plus the allowed delay; instead, it counts the inputs that are dropped by the operator's logic. The two differ in streaming aggregation: to optimize the calculation, streaming aggregation "pre-aggregates" the input rows and later checks lateness against the "pre-aggregated" inputs, so the number might be smaller.

The new metric is exposed in two places:

1. Spark UI: check the metrics of stateful operator nodes on the query execution details page in the SQL tab.
2. Streaming Query Listener: check "numLateInputs" in "stateOperators" in `QueryProgressEvent`.

### Why are the changes needed?

Dropping late inputs means that end users might not get the expected outputs. Even if end users are aware of this and tolerate the result (that is what allowed lateness is for), they should be able to observe whether the current allowed lateness drops inputs, so that they can adjust the value.

Also, when a single query happens to contain multiple stateful operators and Spark drops late inputs "between" these operators, it becomes a "correctness" issue. Spark should disallow such a possibility, but given that this flexibility is already provided, it should at least offer a way to observe the correctness issue so users can decide whether to correct their query.

### Does this PR introduce _any_ user-facing change?

Yes. End users can retrieve information about late inputs in two ways:

1. SQL tab in Spark UI
2. Streaming Query Listener

### How was this patch tested?
New UTs were added, and existing UTs were modified to reflect the change.

I also ran a manual test reproducing SPARK-28094, picking the specific case "B outer C outer D", which is enough to represent the "intermediate late row" issue caused by the global watermark.

https://gist.github.com/jammann/b58bfbe0f4374b89ecea63c1e32c8f17

Spark logs a warning message on the query, which means SPARK-28074 is working correctly:

```
20/05/30 17:52:47 WARN UnsupportedOperationChecker: Detected pattern of possible 'correctness' issue due to global watermark. The query contains stateful operation which can emit rows older than the current watermark plus allowed late record delay, which are "late rows" in downstream stateful operations and these rows can be discarded. Please refer the programming guide doc for more details.;
Join LeftOuter, ((D_FK#28 = D_ID#87) AND (B_LAST_MOD#26-T30000ms = D_LAST_MOD#88-T30000ms))
:- Join LeftOuter, ((C_FK#27 = C_ID#58) AND (B_LAST_MOD#26-T30000ms = C_LAST_MOD#59-T30000ms))
:  :- EventTimeWatermark B_LAST_MOD#26: timestamp, 30 seconds
:  :  +- Project [v#23.B_ID AS B_ID#25, v#23.B_LAST_MOD AS B_LAST_MOD#26, v#23.C_FK AS C_FK#27, v#23.D_FK AS D_FK#28]
:  :     +- Project [from_json(StructField(B_ID,StringType,false), StructField(B_LAST_MOD,TimestampType,false), StructField(C_FK,StringType,true), StructField(D_FK,StringType,true), value#21, Some(UTC)) AS v#23]
:  :        +- Project [cast(value#8 as string) AS value#21]
:  :           +- StreamingRelationV2 org.apache.spark.sql.kafka010.KafkaSourceProvider3a7fd18c, kafka, org.apache.spark.sql.kafka010.KafkaSourceProvider$KafkaTable396d2958, org.apache.spark.sql.util.CaseInsensitiveStringMapa51ee61a, [key#7, value#8, topic#9, partition#10, offset#11L, timestamp#12, timestampType#13], StreamingRelation DataSource(org.apache.spark.sql.SparkSessiond221af8,kafka,List(),None,List(),None,Map(inferSchema -> true, startingOffsets -> earliest, subscribe -> B, kafka.bootstrap.servers -> localhost:9092),None), kafka, [key#0, value#1, topic#2, partition#3, offset#4L, timestamp#5, timestampType#6]
:  +- EventTimeWatermark C_LAST_MOD#59: timestamp, 30 seconds
:     +- Project [v#56.C_ID AS C_ID#58, v#56.C_LAST_MOD AS C_LAST_MOD#59]
:        +- Project [from_json(StructField(C_ID,StringType,false), StructField(C_LAST_MOD,TimestampType,false), value#54, Some(UTC)) AS v#56]
:           +- Project [cast(value#41 as string) AS value#54]
:              +- StreamingRelationV2 org.apache.spark.sql.kafka010.KafkaSourceProvider3f507373, kafka, org.apache.spark.sql.kafka010.KafkaSourceProvider$KafkaTable7b6736a4, org.apache.spark.sql.util.CaseInsensitiveStringMapa51ee61b, [key#40, value#41, topic#42, partition#43, offset#44L, timestamp#45, timestampType#46], StreamingRelation DataSource(org.apache.spark.sql.SparkSessiond221af8,kafka,List(),None,List(),None,Map(inferSchema -> true, startingOffsets -> earliest, subscribe -> C, kafka.bootstrap.servers -> localhost:9092),None), kafka, [key#33, value#34, topic#35, partition#36, offset#37L, timestamp#38, timestampType#39]
+- EventTimeWatermark D_LAST_MOD#88: timestamp, 30 seconds
   +- Project [v#85.D_ID AS D_ID#87, v#85.D_LAST_MOD AS D_LAST_MOD#88]
      +- Project [from_json(StructField(D_ID,StringType,false), StructField(D_LAST_MOD,TimestampType,false), value#83, Some(UTC)) AS v#85]
         +- Project [cast(value#70 as string) AS value#83]
            +- StreamingRelationV2 org.apache.spark.sql.kafka010.KafkaSourceProvider2b90e779, kafka, org.apache.spark.sql.kafka010.KafkaSourceProvider$KafkaTable36f8cd29, org.apache.spark.sql.util.CaseInsensitiveStringMapa51ee620, [key#69, value#70, topic#71, partition#72, offset#73L, timestamp#74, timestampType#75], StreamingRelation DataSource(org.apache.spark.sql.SparkSessiond221af8,kafka,List(),None,List(),None,Map(inferSchema -> true, startingOffsets -> earliest, subscribe -> D, kafka.bootstrap.servers -> localhost:9092),None), kafka, [key#62, value#63, topic#64, partition#65, offset#66L, timestamp#67, timestampType#68]
```

We can also find late inputs in batch 4, which shows that intermediate inputs are being lost, resulting in a correctness issue.

Closes #28607 from HeartSaVioR/SPARK-24634-v3.

Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>
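Why the metric "might be reduced" under streaming aggregation can be sketched with a toy model. The `Row` type and both functions are hypothetical, and treating an input as late when its window end is not after the watermark is this sketch's assumption. Counting raw rows and counting what remains after per-window pre-aggregation give different numbers for the same batch.

```scala
object LateInputSketch {
  // Hypothetical input row: the event-time window it belongs to, plus a value.
  final case class Row(windowEndMs: Long, value: Int)

  // Assumption: an input is late when its window end is not after the watermark.
  private def isLate(windowEndMs: Long, watermarkMs: Long): Boolean =
    windowEndMs <= watermarkMs

  /** Counts raw input rows that would be dropped as late. */
  def lateRawRows(rows: Seq[Row], watermarkMs: Long): Int =
    rows.count(r => isLate(r.windowEndMs, watermarkMs))

  /** Counts what an operator that pre-aggregates per window would drop:
    * one entry per late window, which can be smaller than the raw count. */
  def latePreAggregatedRows(rows: Seq[Row], watermarkMs: Long): Int =
    rows.groupBy(_.windowEndMs).keys.count(w => isLate(w, watermarkMs))
}
```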

### What changes were proposed in this pull request?

The Structured Streaming progress reporter always reports an `empty` progress when there is no new data. By design, it should provide progress updates every 10 seconds (by default) when there is no new data.

### Why are the changes needed?

Fixes a bug in the progress report.

### Does this PR introduce any user-facing change?

Yes, it fixes the incorrect progress report.

### How was this patch tested?

Existing UTs and a manual test.

Closes #28391 from uncleGen/SPARK-31593.

Authored-by: uncleGen <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>
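The intended reporting rule can be sketched as a small decision function. We assume the 10-second default corresponds to the `spark.sql.streaming.noDataProgressEventInterval` setting; `shouldReport` and its parameters are hypothetical, and this is not the actual `ProgressReporter` logic.

```scala
object ProgressReportSketch {
  /** Triggers that processed data always report progress; idle triggers report
    * at most once per `noDataIntervalMs`. */
  def shouldReport(
      hasNewData: Boolean,
      nowMs: Long,
      lastNoDataReportMs: Long,
      noDataIntervalMs: Long = 10000L): Boolean =
    hasNewData || nowMs - lastNoDataReportMs >= noDataIntervalMs
}
```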

… setLocalProperty and log4j.properties

### What changes were proposed in this pull request?

This PR proposes to use "mdc.XXX" as the consistent key for both `sc.setLocalProperty` and `log4j.properties` when setting up configurations for MDC.

### Why are the changes needed?

It's inconsistent that we use "mdc.XXX" as the key to set an MDC value via `sc.setLocalProperty`, while we use "XXX" as the key to set the MDC pattern in log4j.properties. This could also be an extra burden for users.

### Does this PR introduce _any_ user-facing change?

No, because the MDC feature was added in version 3.1, which hasn't been released yet.

### How was this patch tested?

Tested manually.

Closes #28801 from Ngone51/consistent-mdc.

Authored-by: yi.wu <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…us column sortable

### What changes were proposed in this pull request?

#28485 added pagination support for the tables of the Structured Streaming tab. It missed two things:

* The duration column was sorted as a `String`, which sometimes gives wrong results (consider `"3 ms"` and `"12 ms"`). Now we first sort the duration column and then convert it to a readable String.
* The Status column was not made sortable.

### Why are the changes needed?

To fix the wrong sorting results and to make the Status column sortable.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

After changes:

<img width="1677" alt="Screenshot 2020-06-08 at 2 18 48 PM" src="https://user-images.githubusercontent.com/15366835/84010992-153fa280-a993-11ea-9846-bf176f2ec5d7.png">

Closes #28752 from iRakson/ssTests.

Authored-by: iRakson <[email protected]>

Signed-off-by: Sean Owen <[email protected]>
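The `"3 ms"` versus `"12 ms"` problem is easy to reproduce. This minimal sketch uses a hypothetical `Row` type and `render` formatter (not the Spark UI code) to contrast sorting the rendered strings with sorting the numeric values first and formatting afterwards.

```scala
object DurationSortSketch {
  // Hypothetical table row with a numeric duration.
  final case class Row(name: String, durationMs: Long)

  // Human-readable rendering, applied only for display.
  def render(ms: Long): String = s"$ms ms"

  // Lexicographic order: "12 ms" sorts before "3 ms", which is wrong.
  def sortedByRenderedString(rows: Seq[Row]): Seq[String] =
    rows.map(r => render(r.durationMs)).sorted

  // Sort on the numeric value first, then convert to a readable string.
  def sortedByValue(rows: Seq[Row]): Seq[String] =
    rows.sortBy(_.durationMs).map(r => render(r.durationMs))
}
```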

## What changes were proposed in this pull request?

In the `NestedColumnAliasing` rule, we create aliases for nested field accesses in the project list. We already consider the case where a top-level parent field and nested fields under it are both accessed; in that case, we don't create the aliases because they are redundant.

There is another case, where a nested parent field and nested fields under it are both accessed, which we don't consider now. We don't need to create aliases in this case either.

## How was this patch tested?

Added test.

Closes #24525 from viirya/SPARK-27633.

Lead-authored-by: Liang-Chi Hsieh <[email protected]>

Co-authored-by: Liang-Chi Hsieh <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>
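The redundancy check can be sketched on plain field paths. This is a toy illustration under the assumption that accessed fields are represented as name paths such as `Seq("a", "b")` for `a.b`; `pathsNeedingAlias` is a hypothetical name, not part of the rule. A path needs no alias of its own when one of its ancestors is also accessed.

```scala
object RedundantAliasSketch {
  /** Keeps only accessed paths with no accessed ancestor; aliasing a descendant
    * of an accessed path (e.g. a.b.c when a.b is accessed) is redundant. */
  def pathsNeedingAlias(accessed: Seq[Seq[String]]): Seq[Seq[String]] =
    accessed.distinct.filterNot { path =>
      accessed.exists(other => other.length < path.length && path.startsWith(other))
    }
}
```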

…r ThriftCLIService to getPortNumber

### What changes were proposed in this pull request?

This PR brings back 02f32cf, which was reverted by 4a25200 because of a Maven test failure. The newly made diffs are:

1. Add a missing log4j file to the test resources.
2. Call `SessionState.detachSession()` to clean up the thread-local session in `afterAll`.
3. Don't use dedicated JVMs for the sbt test runner either.

### Why are the changes needed?

To fix the Maven test.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added new tests.

Closes #28797 from yaooqinn/SPARK-31926-NEW.

Authored-by: Kent Yao <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request?

- Modify `DateTimeRebaseBenchmark` to benchmark the default date-time rebasing mode, `EXCEPTION`, for saving/loading dates/timestamps to/from parquet files. The mode is benchmarked for modern timestamps after 1900-01-01 00:00:00Z and dates after 1582-10-15.
- Regenerate benchmark results in the following environment:

| Item | Description |
| ---- | ---- |
| Region | us-west-2 (Oregon) |
| Instance | r3.xlarge |
| AMI | ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1 (ami-06f2f779464715dc5) |
| Java | OpenJDK 64-Bit Server VM 1.8.0_252 and OpenJDK 64-Bit Server VM 11.0.7+10 |

### Why are the changes needed?

The `EXCEPTION` rebasing mode is the default mode of the SQL configs `spark.sql.legacy.parquet.datetimeRebaseModeInRead` and `spark.sql.legacy.parquet.datetimeRebaseModeInWrite`. The changes are needed to improve benchmark coverage for the default settings.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

By running the benchmark and checking the results manually.

Closes #28829 from MaxGekk/benchmark-exception-mode.

Authored-by: Max Gekk <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request?

In LogisticRegression and LinearRegression, if `maxIter=n` is set, `model.summary.totalIterations` returns n+1 when training does not stop early. This is because we use `objectiveHistory.length` as `totalIterations`, but `objectiveHistory` contains the initial state, so `objectiveHistory.length` is 1 larger than the number of training iterations.

### Why are the changes needed?

Correctness.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added new tests and modified existing tests.

Closes #28786 from huaxingao/summary_iter.

Authored-by: Huaxin Gao <[email protected]>

Signed-off-by: Sean Owen <[email protected]>
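The off-by-one can be made concrete in a couple of lines. This sketch uses a hypothetical helper, not Spark's summary code: because the first entry of the objective history is the value at the initial state, the number of completed iterations is the history length minus one.

```scala
object TotalIterationsSketch {
  /** The history starts with the objective at the initial state, so completed
    * iterations = length - 1 (0 for an empty history). */
  def totalIterations(objectiveHistory: Array[Double]): Int =
    if (objectiveHistory.isEmpty) 0 else objectiveHistory.length - 1
}
```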

### What changes were proposed in this pull request?

This PR partially reverts SPARK-31292 to provide a hot-fix for a bug in `Dataset.dropDuplicates`: we must preserve the input order of `colNames` for `groupCols`, because the streaming state store depends on the `groupCols` order.

### Why are the changes needed?

Bug fix.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added tests in `DataFrameSuite`.

Closes #28830 from maropu/SPARK-31990.

Authored-by: Takeshi Yamamuro <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>
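Order-preserving de-duplication of the column names is all the fix requires. In Scala, `Seq.distinct` keeps the first occurrence of each element in input order, whereas converting to a `Set` gives no general ordering guarantee. `groupCols` here is a hypothetical helper, not the `Dataset.dropDuplicates` code.

```scala
object GroupColsOrderSketch {
  /** De-duplicates column names while preserving their input order. */
  def groupCols(colNames: Seq[String]): Seq[String] = colNames.distinct
}
```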

…-> HKT at Asia/Hong_Kong in 1945" to outdated tzdb

### What changes were proposed in this pull request?

An old JDK can have an outdated time zone database in which `Asia/Hong_Kong` doesn't have timestamp overlapping in 1946 at all. This PR changes the test "SPARK-31959: JST -> HKT at Asia/Hong_Kong in 1945" in `RebaseDateTimeSuite` and makes it tolerant of this case.

### Why are the changes needed?

To fix test failures on old JDKs with an outdated tzdb, such as on the Jenkins machine `research-jenkins-worker-09`.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

By running the test on an old JDK.

Closes #28832 from MaxGekk/HongKong-tz-1945-followup.

Authored-by: Max Gekk <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request?

This PR intends to move the keywords `ANTI`, `SEMI`, and `MINUS` from reserved to non-reserved.

### Why are the changes needed?

To comply with the ANSI/SQL standard.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added tests.

Closes #28807 from maropu/SPARK-26905-2.

Authored-by: Takeshi Yamamuro <[email protected]>

Signed-off-by: Takeshi Yamamuro <[email protected]>

…debian.org mirrors ### What changes were proposed in this pull request? At the moment, we switch to `https` URLs for all the Debian mirrors, but it turns out some of the mirrors do not support HTTPS. In this patch, we turn on HTTPS only for the `deb.debian.org` mirror (as it supports SSL). ### Why are the changes needed? It appears that security.debian.org does not support HTTPS. ``` curl https://security.debian.org curl: (35) LibreSSL SSL_connect: SSL_ERROR_SYSCALL in connection to security.debian.org:443 ``` While building the image, it fails as follows. ``` MacBook-Pro:spark prashantsharma$ bin/docker-image-tool.sh -r scrapcodes -t v3.1.0-1 build Sending build context to Docker daemon 222.1MB Step 1/18 : ARG java_image_tag=8-jre-slim Step 2/18 : FROM openjdk:${java_image_tag} ---> 381b20190cf7 Step 3/18 : ARG spark_uid=185 ---> Using cache ---> 65c06f86753c Step 4/18 : RUN set -ex && sed -i 's/http:/https:/g' /etc/apt/sources.list && apt-get update && ln -s /lib /lib64 && apt install -y bash tini libc6 libpam-modules krb5-user libnss3 procps && mkdir -p /opt/spark && mkdir -p /opt/spark/examples && mkdir -p /opt/spark/work-dir && touch /opt/spark/RELEASE && rm /bin/sh && ln -sv /bin/bash /bin/sh && echo "auth required pam_wheel.so use_uid" >> /etc/pam.d/su && chgrp root /etc/passwd && chmod ug+rw /etc/passwd && rm -rf /var/cache/apt/* ---> Running in a3461dadd6eb + sed -i s/http:/https:/g /etc/apt/sources.list + apt-get update Ign:1 https://security.debian.org/debian-security buster/updates InRelease Err:2 https://security.debian.org/debian-security buster/updates Release Could not handshake: The TLS connection was non-properly terminated. 
[IP: 151.101.0.204 443] Get:3 https://deb.debian.org/debian buster InRelease [121 kB] Get:4 https://deb.debian.org/debian buster-updates InRelease [51.9 kB] Get:5 https://deb.debian.org/debian buster/main amd64 Packages [7905 kB] Get:6 https://deb.debian.org/debian buster-updates/main amd64 Packages [7868 B] Reading package lists... E: The repository 'https://security.debian.org/debian-security buster/updates Release' does not have a Release file. The command '/bin/sh -c set -ex && sed -i 's/http:/https:/g' /etc/apt/sources.list && apt-get update && ln -s /lib /lib64 && apt install -y bash tini libc6 libpam-modules krb5-user libnss3 procps && mkdir -p /opt/spark && mkdir -p /opt/spark/examples && mkdir -p /opt/spark/work-dir && touch /opt/spark/RELEASE && rm /bin/sh && ln -sv /bin/bash /bin/sh && echo "auth required pam_wheel.so use_uid" >> /etc/pam.d/su && chgrp root /etc/passwd && chmod ug+rw /etc/passwd && rm -rf /var/cache/apt/*' returned a non-zero code: 100 Failed to build Spark JVM Docker image, please refer to Docker build output for details. ``` Limiting `https` support to deb.debian.org alone does the trick. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Manually, by building an image and running the Spark shell against it locally on Kubernetes. Closes #28834 from ScrapCodes/spark-31994/debian_mirror_fix. Authored-by: Prashant Sharma <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>
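
The idea of switching the scheme for one mirror only can be sketched in Python. This is a hypothetical equivalent of a narrowed `sed` substitution, not the Dockerfile change itself, and the sample `sources.list` lines are illustrative:

```python
import re

def enable_https_for_deb_mirror(sources_list: str) -> str:
    # Upgrade only deb.debian.org URLs to https; other mirrors such as
    # security.debian.org keep plain http, since their TLS handshake failed.
    return re.sub(r"http://deb\.debian\.org/", "https://deb.debian.org/", sources_list)

sample = (
    "deb http://deb.debian.org/debian buster main\n"
    "deb http://security.debian.org/debian-security buster/updates main\n"
)
print(enable_https_for_deb_mirror(sample))
```

Matching on the full host name, rather than on the bare `http:` prefix as the original `sed` did, is what keeps the security mirror untouched.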

…rtWithContext ### What changes were proposed in this pull request? Compared to the long-running ThriftServer started via the start script, we are more likely to hit the issue https://issues.apache.org/jira/browse/HIVE-10415 / https://issues.apache.org/jira/browse/SPARK-31626 in the developer API `startWithContext`. This PR applies SPARK-31626 to the developer API `startWithContext`. ### Why are the changes needed? To fix the issue described in SPARK-31626. ### Does this PR introduce _any_ user-facing change? Yes, the Hive scratch dir will be deleted if cleanup is enabled when calling `startWithContext`. ### How was this patch tested? New test. Closes #28784 from yaooqinn/SPARK-31957. Authored-by: Kent Yao <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request? Add a generic ClassificationSummary trait ### Why are the changes needed? Add a generic ClassificationSummary trait so all the classification models can use it to implement summary. Currently in classification, we only have summary implemented in ```LogisticRegression```. There are requests to implement summary for ```LinearSVCModel``` in https://issues.apache.org/jira/browse/SPARK-20249 and to implement summary for ```RandomForestClassificationModel``` in https://issues.apache.org/jira/browse/SPARK-23631. If we add a generic ClassificationSummary trait and put all the common code there, we can easily add summary to ```LinearSVCModel``` and ```RandomForestClassificationModel```, and also add summary to all the other classification models. We can use the same approach to add a generic RegressionSummary trait to regression package and implement summary for all the regression models. ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? existing tests Closes #28710 from huaxingao/summary_trait. Authored-by: Huaxin Gao <[email protected]> Signed-off-by: Sean Owen <[email protected]>

### What changes were proposed in this pull request? Add a new config `spark.sql.files.minPartitionNum` to control the number of file split partitions in the current session. ### Why are the changes needed? To control file split partitions at the session level. For more details, see the discussion in [PR-28778](#28778). ### Does this PR introduce _any_ user-facing change? Yes, a new config. ### How was this patch tested? Added a unit test. Closes #28853 from ulysses-you/SPARK-32019. Authored-by: ulysses <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request? To allow alternatives to service accounts. ### Why are the changes needed? Although we provide some authentication configurations, such as spark.kubernetes.authenticate.driver.mounted.oauthTokenFile, spark.kubernetes.authenticate.driver.mounted.caCertFile, etc., there is a bug: because we force the service account, the driver still uses the KUBERNETES_SERVICE_ACCOUNT_TOKEN_PATH file even when one of them is set, and the error looks like this: the KUBERNETES_SERVICE_ACCOUNT_TOKEN_PATH serviceAccount not exists ### Does this PR introduce any user-facing change? Yes, users can now use a CA certificate via `spark.kubernetes.authenticate.driver.mounted.caCertFile` or a token file via `spark.kubernetes.authenticate.driver.mounted.oauthTokenFile`. ### How was this patch tested? Manually passed the certificates using `spark.kubernetes.authenticate.driver.mounted.caCertFile` or a token file using `spark.kubernetes.authenticate.driver.mounted.oauthTokenFile` when no default service account is available. Closes #24601 from Udbhav30/serviceaccount. Authored-by: Udbhav30 <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…utChecker thread properly upon shutdown ### What changes were proposed in this pull request? This PR ports https://issues.apache.org/jira/browse/HIVE-14817 to the Spark Thrift Server. ### Why are the changes needed? When stopping HiveServer2, the non-daemon thread keeps the server from terminating: ``` "HiveServer2-Background-Pool: Thread-79" #79 prio=5 os_prio=31 tid=0x00007fde26138800 nid=0x13713 waiting on condition [0x0000700010c32000] java.lang.Thread.State: TIMED_WAITING (sleeping) at java.lang.Thread.sleep(Native Method) at org.apache.hive.service.cli.session.SessionManager$1.sleepInterval(SessionManager.java:178) at org.apache.hive.service.cli.session.SessionManager$1.run(SessionManager.java:156) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) ``` Here is an example to reproduce: https://github.com/yaooqinn/kyuubi/blob/master/kyuubi-spark-sql-engine/src/main/scala/org/apache/kyuubi/spark/SparkSQLEngineApp.scala Also, it causes the issues described in HIVE-14817. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Passed Jenkins. Closes #28870 from yaooqinn/SPARK-32034. Authored-by: Kent Yao <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>
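
The pattern behind the fix, signalling a background checker thread to exit and joining it on shutdown, can be sketched in Python. `SessionTimeoutChecker` is a hypothetical class, not HiveServer2's `SessionManager`, and the real change is Java code:

```python
import threading

class SessionTimeoutChecker:
    """Hypothetical background checker that must not block process exit."""

    def __init__(self, interval_s: float = 0.01):
        self.interval_s = interval_s
        self.checks = 0
        self._shutdown = threading.Event()
        # A non-daemon thread: if nobody stops it, the interpreter waits for it
        # at exit, which is the kind of hang shown in the thread dump above.
        self._thread = threading.Thread(target=self._run, name="timeout-checker")

    def _run(self):
        # Event.wait returns False on timeout and True once shutdown is set,
        # so the loop sleeps between checks but exits promptly on stop().
        while not self._shutdown.wait(self.interval_s):
            self.checks += 1  # placeholder for "close idle sessions"

    def start(self):
        self._thread.start()

    def stop(self, timeout_s: float = 1.0) -> bool:
        # Signal the loop to exit, then wait for the thread to finish.
        self._shutdown.set()
        self._thread.join(timeout_s)
        return not self._thread.is_alive()
```

Waiting on an event instead of a plain sleep means shutdown does not have to wait out a full check interval.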

…om map output metadata Introduces the concept of a `MapOutputMetadata` opaque object that can be returned from map output writers. Note that this PR only proposes the API changes on the shuffle writer side. Following patches will be proposed for actually accepting the metadata on the driver and persisting it in the driver's shuffle metadata storage plugin. ### Why are the changes needed? For a more complete design discussion on this subject as a whole, refer to [this design document](https://docs.google.com/document/d/1Aj6IyMsbS2sdIfHxLvIbHUNjHIWHTabfknIPoxOrTjk/edit#). ### Does this PR introduce any user-facing change? Enables additional APIs for the shuffle storage plugin tree. Usage will become more apparent as the API evolves. ### How was this patch tested? No tests here, since this is only an API-side change that is not consumed by core Spark itself. Closes #28616 from mccheah/return-map-output-metadata. Authored-by: mcheah <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…ormat ### What changes were proposed in this pull request? Add compatibility tests for the streaming state store format. ### Why are the changes needed? After SPARK-31894, we have validation checks for the streaming state store. It's better to add integration tests to the PR builder so that breaking changes are caught as soon as they are introduced. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Test only. Closes #28725 from xuanyuanking/compatibility_check. Authored-by: Yuanjian Li <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

…nct aggregate ### What changes were proposed in this pull request? This patch applies `NormalizeFloatingNumbers` to distinct aggregates to fix a regression of distinct aggregates on NaNs. ### Why are the changes needed? We added the `NormalizeFloatingNumbers` optimization rule in 3.0.0 to normalize special floating numbers (NaN and -0.0), but it is missing from distinct aggregates, which causes a regression. We need to apply this rule to distinct aggregates to fix it. ### Does this PR introduce _any_ user-facing change? Yes, it fixes a regression of distinct aggregates on NaNs. ### How was this patch tested? Added unit test. Closes #28876 from viirya/SPARK-32038. Authored-by: Liang-Chi Hsieh <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>
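
A Python sketch of why distinct needs normalization, assuming (hypothetically) that distinct groups values by their raw 64-bit pattern, as a binary hash or comparison would. `normalize` and `distinct_by_bits` are illustrative names, not Spark's rule:

```python
import math
import struct

def normalize(x: float) -> float:
    # Map every NaN to one canonical NaN and -0.0 to 0.0.
    if math.isnan(x):
        return float("nan")
    if x == 0.0:
        return 0.0
    return x

def distinct_by_bits(values, normalize_first):
    # Group by the raw 64-bit pattern of each double; keep the first value seen.
    seen = {}
    for v in values:
        key_val = normalize(v) if normalize_first else v
        seen.setdefault(struct.pack("<d", key_val), key_val)
    return list(seen.values())
```

Two NaNs with different payloads, or 0.0 and -0.0, have different bit patterns, so without normalization they land in separate groups even though they should count as one distinct value each.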

### What changes were proposed in this pull request? A minor PR that adds a couple of usage examples for ArrayFilter and ArrayExists that show how to deal with NULL data. ### Why are the changes needed? Enhances the examples to show how to filter out null values from an array and how to test whether a null value exists in an array. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Tested manually. Closes #28890 from dilipbiswal/array_func_description. Authored-by: Dilip Biswal <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

… AggregateExpression ### What changes were proposed in this pull request? This PR changes the references of the `PartialMerge`/`Final` `AggregateExpression` from `aggBufferAttributes` to `inputAggBufferAttributes`. After this change, the tests of `SPARK-31620` can fail on the assertion of `QueryTest.assertEmptyMissingInput`, so this PR also fixes that by overriding the `inputAggBufferAttributes` of the Aggregate operators. ### Why are the changes needed? Based on my understanding of the Aggregate framework, especially the logic of `AggUtils.planAggXXX`, I think the right references for the `PartialMerge`/`Final` `AggregateExpression` should be `inputAggBufferAttributes`. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Before this patch, the input attributes of an Aggregate operator always include all of its referenced attributes (because it refers to its own attributes when it should refer to the attributes from the child). Therefore, its missing inputs are always empty and nothing breaks, so it's impossible to add a UT for this patch. However, once this PR corrects the references, the problem is exposed by `QueryTest.assertEmptyMissingInput` in the UT of SPARK-31620, since missing inputs are no longer always empty. This PR fixes that problem as well. Closes #28869 from Ngone51/fix-agg-reference. Authored-by: yi.wu <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request? Call the `replace()` method from `UTF8String` to remove the `GMT` string from the input of `DateTimeUtils.cleanLegacyTimestampStr`. It removes all `GMT` substrings. ### Why are the changes needed? Simpler code improves maintainability ### Does this PR introduce _any_ user-facing change? Should not ### How was this patch tested? By existing test suites `JsonSuite` and `UnivocityParserSuite`. Closes #28892 from MaxGekk/simplify-cleanLegacyTimestampStr. Authored-by: Max Gekk <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request? Extract common code from the expressions that get date or time fields from input dates/timestamps to new expressions `GetDateField` and `GetTimeField`, and re-use the common traits from the affected classes. ### Why are the changes needed? Code deduplication improves maintainability. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? By `DateExpressionsSuite` Closes #28894 from MaxGekk/get-date-time-field-expr. Authored-by: Max Gekk <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request? This PR aims to upgrade to Apache Commons Lang 3.10. ### Why are the changes needed? This will bring the latest bug fixes like [LANG-1453](https://issues.apache.org/jira/browse/LANG-1453). https://commons.apache.org/proper/commons-lang/release-notes/RELEASE-NOTES-3.10.txt ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the Jenkins. Closes #28889 from williamhyun/commons-lang-3.10. Authored-by: William Hyun <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request? `OracleIntegrationSuite` is not using the latest Oracle JDBC driver. In this PR I've upgraded the driver to the latest version, which supports JDK8, JDK9, and JDK11. ### Why are the changes needed? Old JDBC driver. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Existing unit tests. Existing integration tests (especially `OracleIntegrationSuite`) Closes #28893 from gaborgsomogyi/SPARK-32049. Authored-by: Gabor Somogyi <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…make_timestamp` ### What changes were proposed in this pull request? Replace Decimal operations with integer operations in the `MakeInterval` and `MakeTimestamp` expressions. For instance, `(secs * Decimal(MICROS_PER_SECOND)).toLong` can be replaced by the unscaled long value of `secs`, because `secs` already contains microseconds. ### Why are the changes needed? To improve performance. Before: ``` make_timestamp(): Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative ------------------------------------------------------------------------------------------------------------------------ ... make_timestamp(2019, 1, 2, 3, 4, 50.123456) 94 99 4 10.7 93.8 38.8X ``` After: ``` make_timestamp(2019, 1, 2, 3, 4, 50.123456) 76 92 15 13.1 76.5 48.1X ``` ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? - By existing test suites `IntervalExpressionsSuite`, `DateExpressionsSuite`, and others. - Re-generated the results of `MakeDateTimeBenchmark` in the environment: | Item | Description | | ---- | ----| | Region | us-west-2 (Oregon) | | Instance | r3.xlarge | | AMI | ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1 (ami-06f2f779464715dc5) | | Java | OpenJDK 64-Bit Server VM 1.8.0_252 and OpenJDK 64-Bit Server VM 11.0.7+10 | Closes #28886 from MaxGekk/make_interval-opt-decimal. Authored-by: Max Gekk <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>
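
A Python `decimal` sketch of the equivalence the optimization relies on, assuming the seconds value carries exactly six fractional digits (so its unscaled integer counts microseconds). Both function names are hypothetical:

```python
from decimal import Decimal

MICROS_PER_SECOND = 1_000_000

def micros_by_multiplying(secs: Decimal) -> int:
    # The original shape: decimal multiplication, then conversion to an integer.
    return int(secs * MICROS_PER_SECOND)

def micros_from_unscaled(secs: Decimal, scale: int = 6) -> int:
    # Fix the scale at 6 (assumed to match the seconds argument); the digits
    # of the decimal, read as one integer, are then the microseconds.
    sign, digits, _ = secs.quantize(Decimal(1).scaleb(-scale)).as_tuple()
    unscaled = int("".join(str(d) for d in digits))
    return -unscaled if sign else unscaled
```

The second form only reads digits that are already stored, which is why it can skip the decimal multiplication entirely.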

…closure cleaning to support R 4.0.0+ ### What changes were proposed in this pull request? This PR proposes to ignore S4 generic methods under the SparkR namespace in closure cleaning to support R 4.0.0+. Currently, running code that executes native R functions fails with R 4.0.0 as below: ```r df <- createDataFrame(lapply(seq(100), function (e) list(value=e))) count(dapply(df, function(x) as.data.frame(x[x$value < 50,]), schema(df))) ``` ``` org.apache.spark.SparkException: R unexpectedly exited. R worker produced errors: Error in lapply(part, FUN) : attempt to bind a variable to R_UnboundValue ``` The root cause seems to be related to an S4 generic method being manually included in the closure's environment via `SparkR:::cleanClosure`. For example, when an RRDD is created via `createDataFrame`, which calls `lapply` for the conversion, `lapply` itself: https://github.com/apache/spark/blob/f53d8c63e80172295e2fbc805c0c391bdececcaa/R/pkg/R/RDD.R#L484 is added to the environment of the cleaned closure because it is not in an exposed namespace; however, this breaks in R 4.0.0+ for an unknown reason, with an error message such as "attempt to bind a variable to R_UnboundValue". Actually, we don't need to add `lapply` to the environment of the closure because it is not supposed to be called on the worker side. In fact, from my understanding, no private generic methods in SparkR are supposed to be called on the worker side at all. Therefore, this PR takes a simpler workaround: it explicitly excludes the S4 generic methods under the SparkR namespace to support R 4.0.0 in SparkR. ### Why are the changes needed? To support R 4.0.0+ with SparkR, and unblock the releases on CRAN. CRAN requires the tests to pass with the latest R. ### Does this PR introduce _any_ user-facing change? Yes, end users will be able to use R 4.0.0. ### How was this patch tested? Manually tested. 
Both CRAN checks and tests with R 4.0.1: ``` ══ testthat results ═══════════════════════════════════════════════════════════ [ OK: 13 | SKIPPED: 0 | WARNINGS: 0 | FAILED: 0 ] ✔ | OK F W S | Context ✔ | 11 | binary functions [2.5 s] ✔ | 4 | functions on binary files [2.1 s] ✔ | 2 | broadcast variables [0.5 s] ✔ | 5 | functions in client.R ✔ | 46 | test functions in sparkR.R [6.3 s] ✔ | 2 | include R packages [0.3 s] ✔ | 2 | JVM API [0.2 s] ✔ | 75 | MLlib classification algorithms, except for tree-based algorithms [86.3 s] ✔ | 70 | MLlib clustering algorithms [44.5 s] ✔ | 6 | MLlib frequent pattern mining [3.0 s] ✔ | 8 | MLlib recommendation algorithms [9.6 s] ✔ | 136 | MLlib regression algorithms, except for tree-based algorithms [76.0 s] ✔ | 8 | MLlib statistics algorithms [0.6 s] ✔ | 94 | MLlib tree-based algorithms [85.2 s] ✔ | 29 | parallelize() and collect() [0.5 s] ✔ | 428 | basic RDD functions [25.3 s] ✔ | 39 | SerDe functionality [2.2 s] ✔ | 20 | partitionBy, groupByKey, reduceByKey etc. [3.9 s] ✔ | 4 | functions in sparkR.R ✔ | 16 | SparkSQL Arrow optimization [19.2 s] ✔ | 6 | test show SparkDataFrame when eager execution is enabled. [1.1 s] ✔ | 1175 | SparkSQL functions [134.8 s] ✔ | 42 | Structured Streaming [478.2 s] ✔ | 16 | tests RDD function take() [1.1 s] ✔ | 14 | the textFile() function [2.9 s] ✔ | 46 | functions in utils.R [0.7 s] ✔ | 0 1 | Windows-specific tests ──────────────────────────────────────────────────────────────────────────────── test_Windows.R:22: skip: sparkJars tag in SparkContext Reason: This test is only for Windows, skipped ──────────────────────────────────────────────────────────────────────────────── ══ Results ═════════════════════════════════════════════════════════════════════ Duration: 987.3 s OK: 2304 Failed: 0 Warnings: 0 Skipped: 1 ... Status: OK + popd Tests passed. ``` Note that I also built SparkR with R 4.0.0 and ran the tests with R 3.6.3; they all passed. 
See also [the comment in the JIRA](https://issues.apache.org/jira/browse/SPARK-31918?focusedCommentId=17142837&page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel#comment-17142837). Closes #28907 from HyukjinKwon/SPARK-31918. Authored-by: HyukjinKwon <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request? Spark 3.0 accidentally dropped R < 3.5. It is built with R 3.6.3, which does not support R < 3.5: ``` Error in readRDS(pfile) : cannot read workspace version 3 written by R 3.6.3; need R 3.5.0 or newer version. ``` In fact, with SPARK-31918, we will have to drop R < 3.5 entirely to support R 4.0.0. This is unavoidable for releasing on CRAN because CRAN requires the tests to pass with the latest R. ### Why are the changes needed? To show the supported versions correctly, and to support R 4.0.0 to unblock the releases. ### Does this PR introduce _any_ user-facing change? In fact, no, because Spark 3.0.0 already does not work with R < 3.5. Compared to Spark 2.4, yes: R < 3.5 will not work. ### How was this patch tested? Jenkins should test it out. Closes #28908 from HyukjinKwon/SPARK-32073. Authored-by: HyukjinKwon <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

…y summary page ### What changes were proposed in this pull request? Fix the app ID link for applications with multiple attempts on the history summary page. If an attempt ID is available (YARN), the app ID link URL will contain the correct attempt ID, like `/history/application_1561589317410_0002/1/jobs/`. If an attempt ID is not available (standalone), the app ID link URL will not contain a fake attempt ID, like `/history/app-20190404053606-0000/jobs/`. ### Why are the changes needed? This PR fixes [32028](https://issues.apache.org/jira/browse/SPARK-32028). The app ID link uses the application attempt count as the attempt ID, which makes the link URL wrong in the following cases: 1. When there are multiple attempts, all links point to the last attempt. 2. When there is only one attempt but its ID is not 1 (for example, an earlier attempt crashed or failed to generate an event file), the link URL points to the wrong attempt (1). ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Tested this manually. Closes #28867 from zhli1142015/fix-appid-link-in-history-page. Authored-by: Zhen Li <[email protected]> Signed-off-by: Sean Owen <[email protected]>
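
The link rule can be sketched as a small Python helper. `app_link` is hypothetical (the history page builds these links in its own UI code), and the IDs come from the examples above:

```python
from typing import Optional

def app_link(app_id: str, attempt_id: Optional[str]) -> str:
    # Use the attempt's own ID when one exists (e.g. on YARN); otherwise
    # (e.g. standalone) omit the attempt segment instead of inventing one.
    if attempt_id is not None:
        return f"/history/{app_id}/{attempt_id}/jobs/"
    return f"/history/{app_id}/jobs/"
```

Deriving the segment from each attempt's own ID, rather than from the number of attempts, is what avoids both failure cases listed above.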

### What changes were proposed in this pull request? Fix a few issues in the parameters table in the structured-streaming-kafka-integration doc. ### Why are the changes needed? To make the titles of the table consistent with the data. ### Does this PR introduce _any_ user-facing change? Yes. ### How was this patch tested? Manually built and checked. Closes #28910 from sidedoorleftroad/SPARK-32075. Authored-by: sidedoorleftroad <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request? Set the width of the column with benchmark names to the maximum of: 1. 40 (the fixed width before this PR), 2. the length of the benchmark name, or 3. the maximum length of the case names. ### Why are the changes needed? To improve the readability of benchmark results, for example, `MakeDateTimeBenchmark`. Before: ``` make_timestamp(): Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative ------------------------------------------------------------------------------------------------------------------------ prepare make_timestamp() 3636 3673 38 0.3 3635.7 1.0X make_timestamp(2019, 1, 2, 3, 4, 50.123456) 94 99 4 10.7 93.8 38.8X make_timestamp(2019, 1, 2, 3, 4, 60.000000) 68 80 13 14.6 68.3 53.2X make_timestamp(2019, 12, 31, 23, 59, 60.00) 65 79 19 15.3 65.3 55.7X make_timestamp(*, *, *, 3, 4, 50.123456) 271 280 14 3.7 270.7 13.4X ``` After: ``` make_timestamp(): Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative --------------------------------------------------------------------------------------------------------------------------- prepare make_timestamp() 3694 3745 82 0.3 3694.0 1.0X make_timestamp(2019, 1, 2, 3, 4, 50.123456) 82 90 9 12.2 82.3 44.9X make_timestamp(2019, 1, 2, 3, 4, 60.000000) 72 77 5 13.9 71.9 51.4X make_timestamp(2019, 12, 31, 23, 59, 60.00) 67 71 5 15.0 66.8 55.3X make_timestamp(*, *, *, 3, 4, 50.123456) 273 289 14 3.7 273.2 13.5X ``` ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? 
By re-generating benchmark results for `MakeDateTimeBenchmark`: ``` $ SPARK_GENERATE_BENCHMARK_FILES=1 build/sbt "sql/test:runMain org.apache.spark.sql.execution.benchmark.MakeDateTimeBenchmark" ``` in the environment: | Item | Description | | ---- | ----| | Region | us-west-2 (Oregon) | | Instance | r3.xlarge | | AMI | ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1 (ami-06f2f779464715dc5) | | Java | OpenJDK 64-Bit Server VM 1.8.0_252 and OpenJDK 64-Bit Server VM 11.0.7+10 | Closes #28906 from MaxGekk/benchmark-table-formatting. Authored-by: Max Gekk <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>
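
The width rule can be sketched in Python as follows. The function names are hypothetical; the actual change lives in Spark's Scala benchmark code:

```python
def name_column_width(benchmark_name: str, case_names, minimum: int = 40) -> int:
    # Widest of: the old fixed width, the benchmark title, and the longest case name.
    longest_case = max((len(name) for name in case_names), default=0)
    return max(minimum, len(benchmark_name), longest_case)

def format_name_cell(name: str, width: int) -> str:
    # Left-align each name in a cell of the computed width.
    return name.ljust(width)

cases = [
    "prepare make_timestamp()",
    "make_timestamp(2019, 1, 2, 3, 4, 50.123456)",
    "make_timestamp(2019, 12, 31, 23, 59, 60.00)",
]
width = name_column_width("make_timestamp():", cases)
```

Here the longest case names are 43 characters, so the column grows past the old 40-character default instead of letting the names push the numeric columns out of alignment.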

### What changes were proposed in this pull request? Reset `listenerRegistered` when the application ends. ### Why are the changes needed? Within a JVM, stopping and creating `SparkContext` multiple times triggers the bug. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Added a unit test. Closes #28899 from ulysses-you/SPARK-32062. Authored-by: ulysses <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request? R version 4.0.2 was released, see https://cran.r-project.org/doc/manuals/r-release/NEWS.html. This PR upgrades the R version in the AppVeyor CI environment. ### Why are the changes needed? To test the latest R versions before the release, and see if there are any regressions. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? AppVeyor will test. Closes #28909 from HyukjinKwon/SPARK-32074. Authored-by: HyukjinKwon <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

…accessor ### What changes were proposed in this pull request? This change simplifies the ArrowColumnVector ListArray accessor to use provided Arrow APIs available in v0.15.0 to calculate element indices. ### Why are the changes needed? This simplifies the code by avoiding manual calculations on the Arrow offset buffer and makes use of more stable APIs. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing tests Closes #28915 from BryanCutler/arrow-simplify-ArrowColumnVector-ListArray-SPARK-32080. Authored-by: Bryan Cutler <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>
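
A plain-Python model of the Arrow list layout that the accessor relies on: a list column stores all elements in one child vector plus an offsets buffer with one more entry than there are rows, and row `i` spans child positions `offsets[i]` up to `offsets[i + 1]`. The helper names are hypothetical, and this models the layout rather than the Java API the PR calls:

```python
def list_element_range(offsets, row):
    # Row i's elements occupy child positions [offsets[i], offsets[i + 1]).
    return offsets[row], offsets[row + 1]

def get_list(values, offsets, row):
    # Slice the shared child vector using the row's offset range.
    start, end = list_element_range(offsets, row)
    return values[start:end]

# Three rows: [1, 2], [], [3, 4, 5, 6]
child_values = [1, 2, 3, 4, 5, 6]
offsets = [0, 2, 2, 6]
```

An empty list simply has equal start and end offsets, which is one of the cases manual offset arithmetic has to get right.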

…e rows in ScalaUDF as well ### What changes were proposed in this pull request? This PR tries to address the comment: #28645 (comment) It changes `canUpCast/canCast` to allow casting from sub UDT to base UDT, in order to allow UserDefinedType to use `ExpressionEncoder` to deserialize rows in ScalaUDF as well. Note that even though we allow casting from sub UDT to base UDT, `Cast` doesn't actually perform the cast, because sub UDT and base UDT are still considered the same type (because of #16660), see: https://github.com/apache/spark/blob/5264164a67df498b73facae207eda12ee133be7d/sql/catalyst/src/main/scala/org/apache/spark/sql/types/UserDefinedType.scala#L81-L86 https://github.com/apache/spark/blob/5264164a67df498b73facae207eda12ee133be7d/sql/catalyst/src/main/scala/org/apache/spark/sql/types/UserDefinedType.scala#L92-L95 Therefore, the optimizer rule `SimplifyCast` will eliminate the cast in the end. ### Why are the changes needed? Reduce the special cases caused by `UserDefinedType` in `ResolveEncodersInUDF` and `ScalaUDF`. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? It should be covered by the test of `SPARK-19311`, which is also slightly updated in this PR. Closes #28920 from Ngone51/fix-udf-udt. Authored-by: yi.wu <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

…kerFile ### What changes were proposed in this pull request? This PR proposes to upgrade the R version to 4.0.2 in the release Docker image. As of SPARK-31918, we should make a release with R 4.0.0+, which works with R 3.5+ too. ### Why are the changes needed? To unblock releases on CRAN. ### Does this PR introduce _any_ user-facing change? No, dev-only. ### How was this patch tested? Manually tested via scripts under `dev/create-release`, manually attaching to the container and checking the R version. Closes #28922 from HyukjinKwon/SPARK-32089. Authored-by: HyukjinKwon <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request? This PR adds a redirect to sql-ref.html. ### Why are the changes needed? Before the Spark 3.0 release, we used sql-reference.md, which has been replaced by sql-ref.md. A number of Google searches I’ve done today have turned up https://spark.apache.org/docs/latest/sql-reference.html, which no longer exists. Thus, we should add a redirect to sql-ref.html. ### Does this PR introduce _any_ user-facing change? https://spark.apache.org/docs/latest/sql-reference.html will be redirected to https://spark.apache.org/docs/latest/sql-ref.html ### How was this patch tested? Built it in my local environment; it works well. The sql-reference.html file was generated, with contents like: ``` <!DOCTYPE html> <html lang="en-US"> <meta charset="utf-8"> <title>Redirecting…</title> <link rel="canonical" href="http://localhost:4000/sql-ref.html"> <script>location="http://localhost:4000/sql-ref.html"</script> <meta http-equiv="refresh" content="0; url=http://localhost:4000/sql-ref.html"> <meta name="robots" content="noindex"> <h1>Redirecting…</h1> <a href="http://localhost:4000/sql-ref.html">Click here if you are not redirected.</a> </html> ``` Closes #28914 from gatorsmile/addRedirectSQLRef. Authored-by: gatorsmile <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…ct slicing in createDataFrame with Arrow

### What changes were proposed in this pull request?

When you use floats as the index of a pandas DataFrame, `createDataFrame` produces a Spark DataFrame with wrong results, as below, when Arrow is enabled:

```bash
./bin/pyspark --conf spark.sql.execution.arrow.pyspark.enabled=true
```

```python
>>> import pandas as pd
>>> spark.createDataFrame(pd.DataFrame({'a': [1,2,3]}, index=[2., 3., 4.])).show()
+---+
|  a|
+---+
|  1|
|  1|
|  2|
+---+
```

This is because direct slicing uses the value as index when the index contains floats:

```python
>>> pd.DataFrame({'a': [1,2,3]}, index=[2., 3., 4.])[2:]
     a
2.0  1
3.0  2
4.0  3
>>> pd.DataFrame({'a': [1,2,3]}, index=[2., 3., 4.]).iloc[2:]
     a
4.0  3
>>> pd.DataFrame({'a': [1,2,3]}, index=[2, 3, 4])[2:]
   a
4  3
```

This PR proposes to explicitly use `iloc` to slice positionally when we create a DataFrame from a pandas DataFrame with Arrow enabled.

FWIW, I tried to investigate why direct slicing sometimes refers to the index value and sometimes to the positional index, but I stopped investigating further after reading this: https://pandas.pydata.org/pandas-docs/stable/getting_started/10min.html#selection

> While standard Python / Numpy expressions for selecting and setting are intuitive and come in handy for interactive work, for production code, we recommend the optimized pandas data access methods, `.at`, `.iat`, `.loc` and `.iloc`.

### Why are the changes needed?

To create the correct Spark DataFrame from a pandas DataFrame without data loss.

### Does this PR introduce _any_ user-facing change?

Yes, it is a bug fix.

```bash
./bin/pyspark --conf spark.sql.execution.arrow.pyspark.enabled=true
```

```python
import pandas as pd
spark.createDataFrame(pd.DataFrame({'a': [1,2,3]}, index=[2., 3., 4.])).show()
```

Before:

```
+---+
|  a|
+---+
|  1|
|  1|
|  2|
+---+
```

After:

```
+---+
|  a|
+---+
|  1|
|  2|
|  3|
+---+
```

### How was this patch tested?

Manually tested, and unit tests were added.

Closes #28928 from HyukjinKwon/SPARK-32098.

Authored-by: HyukjinKwon <[email protected]>

Signed-off-by: Bryan Cutler <[email protected]>

### What changes were proposed in this pull request?

This PR aims to add `WorkerDecomissionExtendedSuite` for various worker decommission combinations.

### Why are the changes needed?

This will improve the test coverage.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Passed Jenkins.

Closes #28929 from dongjoon-hyun/SPARK-WD-TEST.

Authored-by: Dongjoon Hyun <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

… in the same column

### What changes were proposed in this pull request?

This pull request fixes a bug in CSV type inference that shows up when a column contains values of different types.

**Previously:**

```
$ cat /example/f1.csv
col1
43200000
true

spark.read.csv(path="file:///example/*.csv", header=True, inferSchema=True).show()
+----+
|col1|
+----+
|null|
|true|
+----+

root
 |-- col1: boolean (nullable = true)
```

**Now:**

```
spark.read.csv(path="file:///example/*.csv", header=True, inferSchema=True).show()
+-------------+
|col1         |
+-------------+
|43200000     |
|true         |
+-------------+

root
 |-- col1: string (nullable = true)
```

Previously, the type inference hierarchy was the following:

> IntegerType
> > LongType
> > > DecimalType
> > > > DoubleType
> > > > > TimestampType
> > > > > > BooleanType
> > > > > > > StringType

So when, for example, a column contains integers and its last element is a boolean, the whole column is incorrectly inferred as boolean, and all the numbers are shown as null when you look at the data.

We need the following hierarchy. Different numeric types in the same column are then resolved correctly, and any other mix of types is resolved as a StringType column:

> IntegerType
> > LongType
> > > DecimalType
> > > > DoubleType
> > > > > StringType

> TimestampType
> > StringType

> BooleanType
> > StringType

> StringType

### Why are the changes needed?

To fix the bug explained above.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit tests and manual tests.

Closes #28896 from planga82/feature/SPARK-32025_csv_inference.

Authored-by: Pablo Langa <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>
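A toy model of the new merging rule may make the CSV inference hierarchy easier to follow. This is a simplified sketch under stated assumptions, not Spark's actual inference code: timestamps are omitted, types are plain strings, field parsing uses Python's `int`/`float` instead of Spark's parsers, and the function names are hypothetical. Numeric types widen along their own chain, while any other mix collapses to string.

```python
from functools import reduce
from typing import Iterable, Optional

# Simplified widening chain for numeric types; timestamps are omitted in this sketch.
NUMERIC = ["integer", "long", "decimal", "double"]


def infer_field(field: str) -> Optional[str]:
    """Return the narrowest type name for one CSV field, or None for an empty field."""
    if field == "":
        return None
    try:
        value = int(field)
        if -2**31 <= value < 2**31:
            return "integer"
        if -2**63 <= value < 2**63:
            return "long"
        return "decimal"  # integers too large for a 64-bit long
    except ValueError:
        pass
    try:
        float(field)
        return "double"
    except ValueError:
        pass
    if field.lower() in ("true", "false"):
        return "boolean"
    return "string"


def merge(current: Optional[str], new: Optional[str]) -> Optional[str]:
    """Merge two field types following the new hierarchy."""
    if current is None:
        return new
    if new is None or current == new:
        return current
    if current in NUMERIC and new in NUMERIC:
        # Numeric types widen along the chain instead of falling through to boolean.
        return NUMERIC[max(NUMERIC.index(current), NUMERIC.index(new))]
    # Any other mix (e.g. integer and boolean) is resolved as string.
    return "string"


def infer_column(fields: Iterable[str]) -> str:
    """Fold the per-field types of a column; an all-empty column falls back to string."""
    return reduce(merge, map(infer_field, fields), None) or "string"


print(infer_column(["43200000", "true"]))  # string
print(infer_column(["1", "3000000000"]))  # long
```

Under the old hierarchy the first column would have ended up as boolean with a null in place of 43200000; in this model the integer/boolean mix becomes string, matching the "Now" output.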

