Branch 2.4 #22811

Closed

## What changes were proposed in this pull request? SPARK-10399 introduced a performance regression on the hash computation for UTF8String. The regression can be evaluated with the code attached in the JIRA. That code runs in about 120 us per method on my laptop (MacBook Pro 2.5 GHz Intel Core i7, RAM 16 GB 1600 MHz DDR3), while the code from branch 2.3 takes about 45 us on the same machine. After the PR, the code takes about 45 us on the master branch too. ## How was this patch tested? Running the perf test from the JIRA. Closes #22338 from mgaido91/SPARK-25317. Authored-by: Marco Gaido <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit 64c314e) Signed-off-by: Wenchen Fan <[email protected]>

…lization Exception ## What changes were proposed in this pull request? `mapValues` in Scala is currently not serializable. To avoid the serialization issue while running PageRank, we need to use `map` instead of `mapValues`. Closes #22271 from shahidki31/master_latest. Authored-by: Shahid <[email protected]> Signed-off-by: Joseph K. Bradley <[email protected]> (cherry picked from commit 3b6591b) Signed-off-by: Joseph K. Bradley <[email protected]>
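The substitution can be sketched in plain Scala, outside of Spark. This is a minimal illustration rather than the GraphX code: `MapValuesSketch`, `roundTrip`, and the sample data are hypothetical names. `mapValues` returns a lazy wrapper around the original map instead of a new strict collection, which is presumably where the serialization problem comes from, while `map` builds a strict `Map` that Java serialization handles.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, ObjectInputStream, ObjectOutputStream}

object MapValuesSketch {
  // Serialize and deserialize a value with plain Java serialization.
  def roundTrip[T <: AnyRef](value: T): T = {
    val bytes = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(bytes)
    out.writeObject(value)
    out.close()
    val in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray))
    try in.readObject().asInstanceOf[T] finally in.close()
  }

  // Hypothetical sample data: vertex id -> rank.
  val ranks: Map[Long, Double] = Map(1L -> 0.5, 2L -> 1.5)

  // Strict alternative to ranks.mapValues(_ * 2): builds a new Map eagerly.
  val doubled: Map[Long, Double] = ranks.map { case (id, rank) => id -> rank * 2 }

  def main(args: Array[String]): Unit =
    println(roundTrip(doubled))
}
```

The cost of `map` is that it copies the whole map up front, which is usually acceptable for the small maps shipped inside closures.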

…ouping key in group aggregate pandas UDF ## What changes were proposed in this pull request? This PR proposes to add another example for multiple grouping keys in group aggregate pandas UDF, since this feature could still confuse users. ## How was this patch tested? Manually tested and documentation built. Closes #22329 from HyukjinKwon/SPARK-25328. Authored-by: hyukjinkwon <[email protected]> Signed-off-by: Bryan Cutler <[email protected]> (cherry picked from commit 7ef6d1d) Signed-off-by: Bryan Cutler <[email protected]>

## What changes were proposed in this pull request? Add value length check in `_create_row`, forbid extra value for custom Row in PySpark. ## How was this patch tested? New UT in pyspark-sql Closes #22140 from xuanyuanking/SPARK-25072. Lead-authored-by: liyuanjian <[email protected]> Co-authored-by: Yuanjian Li <[email protected]> Signed-off-by: Bryan Cutler <[email protected]> (cherry picked from commit c84bc40) Signed-off-by: Bryan Cutler <[email protected]>

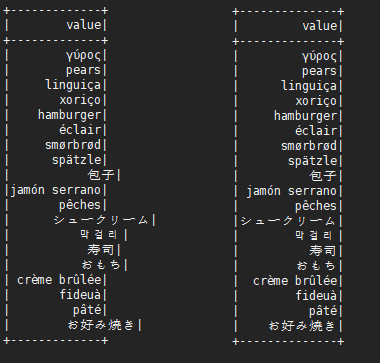

…alignment problem

This is not a perfect solution. It is designed to solve the problem with minimal complexity. It is effective for English, Chinese characters, Japanese, Korean and so on.

```scala
before:
+---+---------------------------+-------------+
|id |中国 |s2 |
+---+---------------------------+-------------+
|1 |ab |[a] |
|2 |null |[中国, abc] |
|3 |ab1 |[hello world]|
|4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] |
|5 |中国(你好)a |[“中(国), 312] |
|6 |中国山(东)服务区 |[“中(国)] |
|7 |中国山东服务区 |[中(国)] |
|8 | |[中国] |
+---+---------------------------+-------------+
after:
+---+-----------------------------------+----------------+
|id |中国 |s2 |
+---+-----------------------------------+----------------+
|1 |ab |[a] |
|2 |null |[中国, abc] |
|3 |ab1 |[hello world] |
|4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] |
|5 |中国(你好)a |[“中(国), 312]|
|6 |中国山(东)服务区 |[“中(国)] |
|7 |中国山东服务区 |[中(国)] |
|8 | |[中国] |
+---+-----------------------------------+----------------+
```

## What changes were proposed in this pull request?

When there are wide characters such as Chinese or Japanese characters in the data, the `show` method has an alignment problem. This PR tries to fix that problem.

Closes #22048 from xuejianbest/master.

Authored-by: xuejianbest <[email protected]>

Signed-off-by: Sean Owen <[email protected]>
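One way to approach this kind of alignment is to count wide characters as two columns when padding a cell. The sketch below is only an illustration of that idea: the object name, the helper names, and the list of Unicode ranges are assumptions and may differ from what the patch actually uses.

```scala
object DisplayWidthSketch {
  // Assumed set of "wide" ranges: Hangul Jamo, CJK radicals through Yi,
  // Hangul syllables, CJK compatibility ideographs, and full-width forms.
  // The list is illustrative and is not taken from the patch.
  private def isWide(c: Char): Boolean =
    (c >= '\u1100' && c <= '\u115F') ||
    (c >= '\u2E80' && c <= '\uA4CF') ||
    (c >= '\uAC00' && c <= '\uD7A3') ||
    (c >= '\uF900' && c <= '\uFAFF') ||
    (c >= '\uFF00' && c <= '\uFF60')

  // Number of terminal columns the string is expected to occupy.
  def displayWidth(s: String): Int = s.map(c => if (isWide(c)) 2 else 1).sum

  // Pad with spaces on the right up to the requested display width.
  def padRight(s: String, width: Int): String =
    s + " " * math.max(0, width - displayWidth(s))
}
```

Padding by display width instead of `String.length` keeps the column borders aligned in a monospaced terminal, as long as the font renders the assumed ranges at double width.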

## What changes were proposed in this pull request? This is a follow-up pr of #22200. When casting to decimal type, if `Cast.canNullSafeCastToDecimal()`, overflow won't happen, so we don't need to check the result of `Decimal.changePrecision()`. ## How was this patch tested? Existing tests. Closes #22352 from ueshin/issues/SPARK-25208/reduce_code_size. Authored-by: Takuya UESHIN <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit 1b1711e) Signed-off-by: Wenchen Fan <[email protected]>

## What changes were proposed in this pull request?

How to reproduce the permission issue:

```sh
# build spark
./dev/make-distribution.sh --name SPARK-25330 --tgz -Phadoop-2.7 -Phive -Phive-thriftserver -Pyarn

tar -zxf spark-2.4.0-SNAPSHOT-bin-SPARK-25330.tar && cd spark-2.4.0-SNAPSHOT-bin-SPARK-25330
export HADOOP_PROXY_USER=user_a
bin/spark-sql

export HADOOP_PROXY_USER=user_b
bin/spark-sql
```

```java
Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.security.AccessControlException: Permission denied: user=user_b, access=EXECUTE, inode="/tmp/hive-$%7Buser.name%7D/user_b/668748f2-f6c5-4325-a797-fd0a7ee7f4d4":user_b:hadoop:drwx------
at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:319)
at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:259)
at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:205)
at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)
```

The issue was introduced by this commit: apache/hadoop@feb886f. This PR reverts Hadoop 2.7 to 2.7.3 to avoid this issue.

## How was this patch tested?

Unit tests and manual tests.

Closes #22327 from wangyum/SPARK-25330.

Authored-by: Yuming Wang <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

(cherry picked from commit b0ada7d)

Signed-off-by: Sean Owen <[email protected]>

…itions parameter ## What changes were proposed in this pull request? This adds a test following #21638 ## How was this patch tested? Existing tests and new test. Closes #22356 from srowen/SPARK-22357.2. Authored-by: Sean Owen <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 4e3365b) Signed-off-by: Sean Owen <[email protected]>

## What changes were proposed in this pull request? This PR removes the method `updateBytesReadWithFileSize` in `FileScanRDD` because it computes input metrics by file size, an approach meant for Hadoop 2.5 and earlier. Current Spark does not support those versions, so the method causes wrong input metric numbers. This is a rework of #22232. Closes #22232 ## How was this patch tested? Added tests in `FileBasedDataSourceSuite`. Closes #22324 from maropu/pr22232-2. Lead-authored-by: dujunling <[email protected]> Co-authored-by: Takeshi Yamamuro <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit ed249db) Signed-off-by: Sean Owen <[email protected]>

…ases of sql/core and sql/hive ## What changes were proposed in this pull request? In SharedSparkSession and TestHive, we need to disable the rule ConvertToLocalRelation for better test case coverage. ## How was this patch tested? Identify the failures after excluding "ConvertToLocalRelation" rule. Closes #22270 from dilipbiswal/SPARK-25267-final. Authored-by: Dilip Biswal <[email protected]> Signed-off-by: gatorsmile <[email protected]> (cherry picked from commit 6d7bc5a) Signed-off-by: gatorsmile <[email protected]>

## What changes were proposed in this pull request? Before Apache Spark 2.3, table properties were ignored when writing data to a Hive table (created with STORED AS PARQUET/ORC syntax), because the compression configurations were not passed to the FileFormatWriter in hadoopConf. That was fixed in #20087. However, for CTAS with USING PARQUET/ORC syntax, table properties were also ignored when convertMetastore was in effect, so the test case for CTAS was not supported. Now that this has been fixed in #20522, the test case should be enabled too. ## How was this patch tested? This only re-enables the test cases of the previous PR. Closes #22302 from fjh100456/compressionCodec. Authored-by: fjh100456 <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit 473f2fb) Signed-off-by: Dongjoon Hyun <[email protected]>

## What changes were proposed in this pull request? Fix unused imports & outdated comments on `kafka-0-10-sql` module. (Found while I was working on [SPARK-23539](#22282)) ## How was this patch tested? Existing unit tests. Closes #22342 from dongjinleekr/feature/fix-kafka-sql-trivials. Authored-by: Lee Dongjin <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 458f501) Signed-off-by: Sean Owen <[email protected]>

## What changes were proposed in this pull request? Deprecate public APIs from ImageSchema. ## How was this patch tested? N/A Closes #22349 from WeichenXu123/image_api_deprecate. Authored-by: WeichenXu <[email protected]> Signed-off-by: Xiangrui Meng <[email protected]> (cherry picked from commit 08c02e6) Signed-off-by: Xiangrui Meng <[email protected]>

…UDFSuite ## What changes were proposed in this pull request? At Spark 2.0.0, SPARK-14335 adds some [commented-out test coverages](https://github.com/apache/spark/pull/12117/files#diff-dd4b39a56fac28b1ced6184453a47358R177 ). This PR enables them because it's supported since 2.0.0. ## How was this patch tested? Pass the Jenkins with re-enabled test coverage. Closes #22363 from dongjoon-hyun/SPARK-25375. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: gatorsmile <[email protected]> (cherry picked from commit 26f74b7) Signed-off-by: gatorsmile <[email protected]>

## What changes were proposed in this pull request? When running TPC-DS benchmarks on the 2.4 release, npoggi and winglungngai saw more than 10% performance regression on the following queries: q67, q24a and q24b. After applying PR #22338, the performance regression still exists. When the changes in #19222 were reverted, npoggi and winglungngai found that the performance regression was resolved. Thus, this PR reverts the related changes to unblock the 2.4 release. In a future release, we can continue the investigation and find the root cause of the regression. ## How was this patch tested? The existing test cases. Closes #22361 from gatorsmile/revertMemoryBlock. Authored-by: gatorsmile <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit 0b9ccd5) Signed-off-by: Wenchen Fan <[email protected]>

## What changes were proposed in this pull request? Remove the `BisectingKMeansModel.setDistanceMeasure` method. Setting this param on a `BisectingKMeansModel` is meaningless. ## How was this patch tested? N/A Closes #22360 from WeichenXu123/bkmeans_update. Authored-by: WeichenXu <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 88a930d) Signed-off-by: Sean Owen <[email protected]>

## What changes were proposed in this pull request?

How to reproduce:

```scala
val df1 = spark.createDataFrame(Seq(
  (1, 1)
)).toDF("a", "b").withColumn("c", lit(null).cast("int"))
val df2 = df1.union(df1).withColumn("d", spark_partition_id).filter($"c".isNotNull)
df2.show
+---+---+----+---+
|  a|  b|   c|  d|
+---+---+----+---+
|  1|  1|null|  0|
|  1|  1|null|  1|
+---+---+----+---+
```

`filter($"c".isNotNull)` was transformed to `(null <=> c#10)` before #19201, but it is transformed to `(c#10 = null)` since #20155. This PR reverts it to `(null <=> c#10)` to fix this issue.
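The difference between `=` and `<=>` comes from SQL's three-valued logic. The following sketch models it with `Option` in plain Scala; it is not Catalyst code, and the object and method names are made up for illustration.

```scala
object NullSafeEqualitySketch {
  // SQL `a = b`: the result is unknown (None) when either side is NULL,
  // so a filter on it never keeps the row.
  def sqlEquals(a: Option[Int], b: Option[Int]): Option[Boolean] =
    for (x <- a; y <- b) yield x == y

  // SQL `a <=> b`: always true or false; two NULLs compare as equal.
  def nullSafeEquals(a: Option[Int], b: Option[Int]): Boolean = a == b
}
```

Under this model `sqlEquals(c, None)` is never `Some(true)`, whereas `nullSafeEquals(None, c)` is true exactly when `c` is NULL, which is why the two rewritten forms of the predicate are not interchangeable.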

## How was this patch tested?

unit tests

Closes #22368 from wangyum/SPARK-25368.

Authored-by: Yuming Wang <[email protected]>

Signed-off-by: gatorsmile <[email protected]>

(cherry picked from commit 77c9964)

Signed-off-by: gatorsmile <[email protected]>

… for ORC native data source table persisted in metastore

## What changes were proposed in this pull request?

Apache Spark doesn't create a Hive table with duplicated fields in either case-sensitive or case-insensitive mode. However, Spark can first create ORC files in case-sensitive mode and then create a Hive table on that location. In this situation, field resolution should fail in case-insensitive mode. Otherwise, we don't know which columns will be returned or filtered. Previously, SPARK-25132 fixed the same issue in Parquet.

Here is a simple example:

```
val data = spark.range(5).selectExpr("id as a", "id * 2 as A")
spark.conf.set("spark.sql.caseSensitive", true)
data.write.format("orc").mode("overwrite").save("/user/hive/warehouse/orc_data")

sql("CREATE TABLE orc_data_source (A LONG) USING orc LOCATION '/user/hive/warehouse/orc_data'")
spark.conf.set("spark.sql.caseSensitive", false)
sql("select A from orc_data_source").show
+---+
|  A|
+---+
|  3|
|  2|
|  4|
|  1|
|  0|
+---+
```

See #22148 for more details about the Parquet data source reader.

## How was this patch tested?

Unit tests added.

Closes #22262 from seancxmao/SPARK-25175.

Authored-by: seancxmao <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

(cherry picked from commit a0aed47)

Signed-off-by: Dongjoon Hyun <[email protected]>
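The intended resolution rule can be sketched in plain Scala as follows. This is an assumption-laden illustration, not the ORC reader's code: `FieldResolutionSketch`, `resolve`, and the error messages are hypothetical.

```scala
object FieldResolutionSketch {
  // Resolve `requested` against the physical field names found in a file.
  // Returns Right(name) on a unique match and Left(message) otherwise.
  def resolve(
      fileFields: Seq[String],
      requested: String,
      caseSensitive: Boolean): Either[String, String] = {
    val matches =
      if (caseSensitive) fileFields.filter(_ == requested)
      else fileFields.filter(_.equalsIgnoreCase(requested))
    matches match {
      case Seq(single) => Right(single)
      case Seq() => Left(s"Field '$requested' not found")
      case many => Left(s"Found duplicate field(s) '$requested': " + many.mkString(", "))
    }
  }
}
```

With file fields `a` and `A`, a case-sensitive lookup of `A` succeeds, while a case-insensitive lookup is ambiguous and fails instead of silently picking one column.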

…ema in Parquet issue

## What changes were proposed in this pull request?

How to reproduce:

```scala
spark.sql("CREATE TABLE tbl(id long)")
spark.sql("INSERT OVERWRITE TABLE tbl VALUES 4")
spark.sql("CREATE VIEW view1 AS SELECT id FROM tbl")
spark.sql(s"INSERT OVERWRITE LOCAL DIRECTORY '/tmp/spark/parquet' " +
  "STORED AS PARQUET SELECT ID FROM view1")
spark.read.parquet("/tmp/spark/parquet").schema
scala> spark.read.parquet("/tmp/spark/parquet").schema
res10: org.apache.spark.sql.types.StructType = StructType(StructField(id,LongType,true))
```

The schema should be `StructType(StructField(ID,LongType,true))` as we `SELECT ID FROM view1`.

This PR fixes this issue.

## How was this patch tested?

unit tests

Closes #22359 from wangyum/SPARK-25313-FOLLOW-UP.

Authored-by: Yuming Wang <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

(cherry picked from commit f8b4d5a)

Signed-off-by: Wenchen Fan <[email protected]>

…ctType to a DDL string ## What changes were proposed in this pull request? Add the version number for the new APIs. ## How was this patch tested? N/A Closes #22377 from gatorsmile/followup24849. Authored-by: gatorsmile <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit 6f65178) Signed-off-by: Wenchen Fan <[email protected]>

…plan appears in the query ## What changes were proposed in this pull request? In the Planner, we collect the placeholders which need to be substituted in the query execution plan and, once we plan them, we substitute each placeholder with the effective plan. In this second phase, we rely on the `==` comparison, i.e. the `equals` method. This means that if two placeholder plans, which are different instances, have the same attributes (so that they are equal according to the `equals` method), they are both substituted with their corresponding new physical plans. So, in such a situation, the first time we substitute both of them with the first of the two newly generated plans, and the second time we substitute nothing. This is usually harmless for the execution of the query itself, as the two plans are identical. But since they are now the same instance, the local variables are shared (which is unexpected). This causes issues for the metrics collected: the same node is executed twice, so the metrics are wrongly accumulated twice. The PR proposes to use the `eq` method when checking which placeholder needs to be substituted; thus, in the previous situation, both of the different physical nodes which are created (one for each time the logical plan appears in the query plan) are used, and the metrics are collected properly for each of them. ## How was this patch tested? Added UT. Closes #22284 from mgaido91/SPARK-25278. Authored-by: Marco Gaido <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit 12e3e9f) Signed-off-by: Wenchen Fan <[email protected]>
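The difference between `==` and `eq` can be shown with a small plain-Scala sketch. `Placeholder` and `substitute` are hypothetical stand-ins, not the planner's classes; the point is only that structurally equal instances match under `==` but not under `eq`.

```scala
object PlanSubstitutionSketch {
  // Hypothetical stand-in for a placeholder plan node.
  // Case classes get a structural equals, like many plan nodes.
  final case class Placeholder(output: Seq[String])

  // Mark which nodes would be replaced when substituting `target`,
  // using either reference comparison (eq) or structural comparison (==).
  def substitute(
      nodes: Seq[Placeholder],
      target: Placeholder,
      byReference: Boolean): Seq[String] =
    nodes.map { n =>
      val hit = if (byReference) n eq target else n == target
      if (hit) "planned" else "placeholder"
    }
}
```

Given two distinct but equal placeholders `a` and `b`, substituting `a` with `==` replaces both positions, whereas `eq` replaces only the position that actually holds `a`.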

…lar with arrow udfs ## What changes were proposed in this pull request? Clarify docstring for Scalar functions ## How was this patch tested? Adds a unit test showing use similar to wordcount, there's existing unit test for array of floats as well. Closes #20908 from holdenk/SPARK-23672-document-support-for-nested-return-types-in-scalar-with-arrow-udfs. Authored-by: Holden Karau <[email protected]> Signed-off-by: Bryan Cutler <[email protected]> (cherry picked from commit da5685b) Signed-off-by: Bryan Cutler <[email protected]>

## What changes were proposed in this pull request? SPARK-21281 introduced a check for the inputs of `CreateStructLike` to be non-empty. This means that `struct()`, which was previously considered valid, now throws an Exception. This behavior change was introduced in 2.3.0. The change may break users' application on upgrade and it causes `VectorAssembler` to fail when an empty `inputCols` is defined. The PR removes the added check making `struct()` valid again. ## How was this patch tested? added UT Closes #22373 from mgaido91/SPARK-25371. Authored-by: Marco Gaido <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit 0736e72) Signed-off-by: Wenchen Fan <[email protected]>

…sed as null when nullValue is set. ## What changes were proposed in this pull request? In the PR, I propose new CSV option `emptyValue` and an update in the SQL Migration Guide which describes how to revert previous behavior when empty strings were not written at all. Since Spark 2.4, empty strings are saved as `""` to distinguish them from saved `null`s. Closes #22234 Closes #22367 ## How was this patch tested? It was tested by `CSVSuite` and new tests added in the PR #22234 Closes #22389 from MaxGekk/csv-empty-value-master. Lead-authored-by: Mario Molina <[email protected]> Co-authored-by: Maxim Gekk <[email protected]> Signed-off-by: hyukjinkwon <[email protected]> (cherry picked from commit c9cb393) Signed-off-by: hyukjinkwon <[email protected]>

…t duplicate fields

## What changes were proposed in this pull request?

Like `INSERT OVERWRITE DIRECTORY USING` syntax, `INSERT OVERWRITE DIRECTORY STORED AS` should not generate files with duplicate fields because Spark cannot read those files back.

**INSERT OVERWRITE DIRECTORY USING**

```scala
scala> sql("INSERT OVERWRITE DIRECTORY 'file:///tmp/parquet' USING parquet SELECT 'id', 'id2' id")
... ERROR InsertIntoDataSourceDirCommand: Failed to write to directory ...
org.apache.spark.sql.AnalysisException: Found duplicate column(s) when inserting into file:/tmp/parquet: `id`;
```

**INSERT OVERWRITE DIRECTORY STORED AS**

```scala
scala> sql("INSERT OVERWRITE DIRECTORY 'file:///tmp/parquet' STORED AS parquet SELECT 'id', 'id2' id")
// It generates corrupted files
scala> spark.read.parquet("/tmp/parquet").show
18/09/09 22:09:57 WARN DataSource: Found duplicate column(s) in the data schema and the partition schema: `id`;
```
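A duplicate-column check of this kind can be sketched in a few lines of plain Scala. The helper below is hypothetical and compares names case-insensitively, which is an assumption; the real check depends on `spark.sql.caseSensitive`.

```scala
object DuplicateColumnSketch {
  // Return the column names that occur more than once.
  // Names are lowercased first (assumed case-insensitive comparison).
  def duplicates(columns: Seq[String]): Seq[String] =
    columns.map(_.toLowerCase)
      .groupBy(identity)
      .collect { case (name, occurrences) if occurrences.size > 1 => name }
      .toSeq
      .sorted
}
```

Running such a check before writing lets the command fail early with a clear message instead of producing files that cannot be read back.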

## How was this patch tested?

Pass the Jenkins with newly added test cases.

Closes #22378 from dongjoon-hyun/SPARK-25389.

Authored-by: Dongjoon Hyun <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

(cherry picked from commit 77579aa)

Signed-off-by: Dongjoon Hyun <[email protected]>

…f values ## What changes were proposed in this pull request? Stop trimming values of properties loaded from a file ## How was this patch tested? Added unit test demonstrating the issue hit in production. Closes #22213 from gerashegalov/gera/SPARK-25221. Authored-by: Gera Shegalov <[email protected]> Signed-off-by: Marcelo Vanzin <[email protected]> (cherry picked from commit bcb9a8c) Signed-off-by: Marcelo Vanzin <[email protected]>
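To see why trimming can matter, the sketch below loads properties with `java.util.Properties` and optionally trims the values. The object name, the `load` helper, and the property keys are hypothetical; only the `Properties` behavior (trailing whitespace and escaped characters are kept in the value) is standard.

```scala
import java.io.StringReader
import java.util.Properties

object PropertiesTrimSketch {
  // Load properties from text, optionally trimming each value afterwards.
  def load(text: String, trimValues: Boolean): Map[String, String] = {
    val props = new Properties()
    props.load(new StringReader(text))
    var result = Map.empty[String, String]
    val keys = props.stringPropertyNames().iterator()
    while (keys.hasNext) {
      val key = keys.next()
      val value = props.getProperty(key)
      result += key -> (if (trimValues) value.trim else value)
    }
    result
  }

  // Hypothetical file content: one value with trailing spaces,
  // one value that is an escaped newline character.
  val sample: String = "example.padded=ab  \nexample.newline=\\n\n"
}
```

With trimming enabled, `example.newline` collapses to an empty string and `example.padded` loses its trailing spaces, so a value that is deliberately whitespace cannot survive.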

## What changes were proposed in this pull request?

We update block info coming from executors at certain times, such as when caching an RDD. However, when removing RDDs by unpersisting, we don't ask to update block info, so the block info is not updated.

We can fix this with a few options:

1. Ask to update block info when unpersisting. This is simplest but changes driver-executor communication a bit.
2. Update block info when processing the event of unpersisting an RDD. We send a `SparkListenerUnpersistRDD` event when unpersisting an RDD. When processing this event, we can update the block info of the RDD. This only changes event processing code, so the risk seems to be lower.

Currently this patch takes option 2 for lower risk. If we agree the first option has no risk, we can change to it.

## How was this patch tested?

Unit tests.

Closes #22341 from viirya/SPARK-24889.

Authored-by: Liang-Chi Hsieh <[email protected]>

Signed-off-by: Marcelo Vanzin <[email protected]>

(cherry picked from commit 14f3ad2)

Signed-off-by: Marcelo Vanzin <[email protected]>

## What changes were proposed in this pull request? Correct some comparisons between unrelated types to what they seem to have been trying to do ## How was this patch tested? Existing tests. Closes #22384 from srowen/SPARK-25398. Authored-by: Sean Owen <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit cfbdd6a) Signed-off-by: Sean Owen <[email protected]>

This reverts commit e58dadb.

…batch execution jobs ## What changes were proposed in this pull request? The leftover state from running a continuous processing streaming job should not affect later microbatch execution jobs. If a continuous processing job runs and the same thread gets reused for a microbatch execution job in the same environment, the microbatch job could get wrong answers because it can attempt to load the wrong version of the state. ## How was this patch tested? New and existing unit tests Closes #22386 from mukulmurthy/25399-streamthread. Authored-by: Mukul Murthy <[email protected]> Signed-off-by: Tathagata Das <[email protected]> (cherry picked from commit 9f5c5b4) Signed-off-by: Tathagata Das <[email protected]>

## What changes were proposed in this pull request? Currently when we open our doc site: https://spark.apache.org/docs/latest/index.html , there is one warning  This PR is to change the CDN as per the migration tips: https://www.mathjax.org/cdn-shutting-down/ This is very very trivial. But it would be good to follow the suggestion from MathJax team and remove the warning, in case one day the original CDN is no longer available. ## How was this patch tested? Manual check. Closes #22753 from gengliangwang/migrateMathJax. Authored-by: Gengliang Wang <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 2ab4473) Signed-off-by: Sean Owen <[email protected]>

When deserializing values of ArrayType with struct elements in Java beans, fields of structs get mixed up. I suggest using struct data types retrieved from resolved input data instead of inferring them from Java beans. ## What changes were proposed in this pull request? The MapObjects expression is used to map array elements to Java beans. The struct type of the elements is inferred from the Java bean structure and ends up with a mixed-up field order. I used UnresolvedMapObjects instead of MapObjects, which allows providing the element type for MapObjects during analysis based on the resolved input data, not on the Java bean. ## How was this patch tested? Added a test case. Built complete project on travis. michalsenkyr cloud-fan marmbrus liancheng Closes #22708 from vofque/SPARK-21402. Lead-authored-by: Vladimir Kuriatkov <[email protected]> Co-authored-by: Vladimir Kuriatkov <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit e5b8136) Signed-off-by: Wenchen Fan <[email protected]>

## What changes were proposed in this pull request? When the URL for description column in the table of job/stage page is long, WebUI doesn't render it properly.  Both job and stage page are using the class `name-link` for the description URL, so change the style of `a.name-link` to fix it. ## How was this patch tested? Manual test on my local:  Closes #22744 from gengliangwang/fixUILink. Authored-by: Gengliang Wang <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 1901f06) Signed-off-by: Sean Owen <[email protected]>

## What changes were proposed in this pull request? The PR proposes to deprecate the `computeCost` method on `BisectingKMeans` in favor of the adoption of `ClusteringEvaluator` in order to evaluate the clustering. ## How was this patch tested? NA Closes #22756 from mgaido91/SPARK-25758. Authored-by: Marco Gaido <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit c296254) Signed-off-by: Dongjoon Hyun <[email protected]>

…to multiple separate pages

1. Split the main page of sql-programming-guide into 7 parts:
   - Getting Started
   - Data Sources
   - Performance Turing
   - Distributed SQL Engine
   - PySpark Usage Guide for Pandas with Apache Arrow
   - Migration Guide
   - Reference
2. Add left menu for sql-programming-guide, keep first level index for each part in the menu.

Local test with jekyll build/serve.

Closes #22746 from xuanyuanking/SPARK-24499.

Authored-by: Yuanjian Li <[email protected]>

Signed-off-by: gatorsmile <[email protected]>

(cherry picked from commit 987f386)

Signed-off-by: gatorsmile <[email protected]>

….html to multiple separate pages ## What changes were proposed in this pull request? Forgot to remove the link for `Upgrading From Spark SQL 2.4 to 3.0` when merging to 2.4. ## How was this patch tested? N/A Closes #22769 from gatorsmile/test2.4. Authored-by: gatorsmile <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…steringEvaluator ## What changes were proposed in this pull request? The PR updates the examples for `BisectingKMeans` so that they don't use the deprecated method `computeCost` (see SPARK-25758). ## How was this patch tested? running examples Closes #22763 from mgaido91/SPARK-25764. Authored-by: Marco Gaido <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit d0ecff2) Signed-off-by: Wenchen Fan <[email protected]>

…sistency ## What changes were proposed in this pull request? Currently, the migration guide has no space between items, which looks too compact and is hard to read. Some items in the migration guide already had spaces between them. This PR suggests formatting them consistently for readability. ## How was this patch tested? Manually tested. Closes #22761 from HyukjinKwon/minor-migration-doc. Authored-by: hyukjinkwon <[email protected]> Signed-off-by: hyukjinkwon <[email protected]> (cherry picked from commit c8f7691) Signed-off-by: hyukjinkwon <[email protected]>

## What changes were proposed in this pull request? This is a follow-up PR for #22259. The extra field added in `ScalaUDF` with the original PR was declared optional, but should indeed be required, otherwise callers of `ScalaUDF`'s constructor could ignore this new field and cause the result to be incorrect. This PR makes the new field required and changes its name to `handleNullForInputs`. #22259 breaks the previous behavior for null-handling of primitive-type input parameters. For example, for `val f = udf({(x: Int, y: Any) => x})`, `f(null, "str")` should return `null` but would return `0` after #22259. In this PR, all UDF methods except `def udf(f: AnyRef, dataType: DataType): UserDefinedFunction` have been restored to the original behavior. The only exception is documented in the Spark SQL migration guide. In addition, now that we have this extra field indicating whether a null-test should be applied on the corresponding input value, we can also make use of this flag to avoid the rule `HandleNullInputsForUDF` being applied infinitely. ## How was this patch tested? Added UT in UDFSuite. Passed affected existing UTs: AnalysisSuite, UDFSuite. Closes #22732 from maryannxue/spark-25044-followup. Lead-authored-by: maryannxue <[email protected]> Co-authored-by: Wenchen Fan <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit e816776) Signed-off-by: Wenchen Fan <[email protected]>
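The `0` in the example above comes from how Scala unboxes `null` into a primitive. The plain-Scala sketch below shows that behavior and one hypothetical way to guard against it; `withNullCheck` is an illustrative helper, not Spark's API or the mechanism used by `ScalaUDF`.

```scala
object UdfNullHandlingSketch {
  // Unboxing a null reference into a primitive Int silently yields 0 in Scala.
  val unboxedNull: Int = null.asInstanceOf[Int]

  // Hypothetical wrapper: test the boxed input for null before handing it
  // to a function that takes a primitive Int. None stands for SQL NULL.
  def withNullCheck(f: Int => Int): Integer => Option[Int] =
    boxed => Option(boxed).map(i => f(i.intValue()))
}
```

Without the null test, a function over primitives has no way to tell a real `0` from a missing value, which is why the flag for applying the test needs to be explicit.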

## What changes were proposed in this pull request? Without this PR, some UDAFs like `GenericUDAFPercentileApprox` can throw an exception because they expect a constant parameter (object inspector) as a particular argument. The exception is thrown because the `toPrettySQL` call in the `ResolveAliases` analyzer rule transforms a `Literal` parameter to a `PrettyAttribute`, which is then transformed to an `ObjectInspector` instead of a `ConstantObjectInspector`. The exception comes from the `getEvaluator` method of `GenericUDAFPercentileApprox`, which actually shouldn't be called during the `toPrettySQL` transformation. The reason it is called is the non-lazy fields in `HiveUDAFFunction`. This PR makes all fields of `HiveUDAFFunction` lazy. ## How was this patch tested? Added new UT. Closes #22766 from peter-toth/SPARK-25768. Authored-by: Peter Toth <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit f38594f) Signed-off-by: Wenchen Fan <[email protected]>
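The effect of making fields lazy can be illustrated with a plain-Scala sketch. The classes and the `evaluatorFor` helper are hypothetical and only mimic the shape of the problem: an eager field runs its initializer in the constructor, a `lazy val` runs it on first access.

```scala
object LazyFieldSketch {
  // Hypothetical evaluator lookup that fails for non-constant arguments.
  def evaluatorFor(constantArg: Boolean): String =
    if (constantArg) "evaluator"
    else throw new IllegalArgumentException("constant argument expected")

  // Eager field: the lookup runs in the constructor, even if never used.
  class EagerFunction(constantArg: Boolean) {
    val evaluator: String = evaluatorFor(constantArg)
  }

  // Lazy field: the lookup runs only when the field is first accessed.
  class LazyFunction(constantArg: Boolean) {
    lazy val evaluator: String = evaluatorFor(constantArg)
  }
}
```

Constructing `new EagerFunction(false)` throws immediately, while `new LazyFunction(false)` succeeds and only fails if `evaluator` is actually read, so code paths that merely copy or print the object are unaffected.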

JVMs don't let you allocate arrays of length exactly Int.MaxValue, so leave a little extra room. This is necessary when reading blocks >2GB off the network (for remote reads or for cache replication). Tested with unit tests via Jenkins, and by running a test with blocks over 2GB on a cluster. Closes #22705 from squito/SPARK-25704. Authored-by: Imran Rashid <[email protected]> Signed-off-by: Imran Rashid <[email protected]>
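A minimal sketch of the idea, in plain Scala: pick a maximum array length slightly below `Int.MaxValue` and split larger blocks into chunks of at most that size. The margin of 15 and the names `MaxArrayLength` and `chunkSizes` are assumptions made for illustration; the exact limit is JVM-specific and the patch may use a different value.

```scala
object ChunkSizeSketch {
  // Assumed safety margin below Int.MaxValue; the real JVM limit varies by VM.
  val MaxArrayLength: Int = Int.MaxValue - 15

  // Split a total byte count into chunk sizes that each fit in one array.
  def chunkSizes(totalBytes: Long, maxChunk: Int = MaxArrayLength): Seq[Int] = {
    require(totalBytes >= 0 && maxChunk > 0)
    val fullChunks = (totalBytes / maxChunk).toInt
    val remainder = (totalBytes % maxChunk).toInt
    Seq.fill(fullChunks)(maxChunk) ++ (if (remainder > 0) Seq(remainder) else Seq.empty[Int])
  }
}
```

For example, a 3 GB block ends up as one full-size chunk plus a smaller remainder, and no single allocation asks the JVM for `Int.MaxValue` elements.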

This reverts commit c296254.

… use ClusteringEvaluator" This reverts commit 36307b1.

## What changes were proposed in this pull request? Fix some broken links in the new document. I have clicked through all the links. Hopefully i haven't missed any :-) ## How was this patch tested? Built using jekyll and verified the links. Closes #22772 from dilipbiswal/doc_check. Authored-by: Dilip Biswal <[email protected]> Signed-off-by: gatorsmile <[email protected]> (cherry picked from commit ed9d0aa) Signed-off-by: gatorsmile <[email protected]>

## What changes were proposed in this pull request? Since we didn't test Java 9 ~ 11 up to now in the community, fix the document to describe Java 8 only. ## How was this patch tested? N/A (This is a document only change.) Closes #22781 from dongjoon-hyun/SPARK-JDK-DOC. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit fc9ba9d) Signed-off-by: Dongjoon Hyun <[email protected]>

## What changes were proposed in this pull request? Fix minor error in the code "sketch of pregel implementation" of GraphX guide. This fixed error relates to `[SPARK-12995][GraphX] Remove deprecate APIs from Pregel` ## How was this patch tested? N/A Closes #22780 from WeichenXu123/minor_doc_update1. Authored-by: WeichenXu <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit 3b4f35f) Signed-off-by: Dongjoon Hyun <[email protected]>

## What changes were proposed in this pull request?

This PR aims to fix the following SparkR example in Spark 2.3.0 ~ 2.4.0.

```r
> df <- read.df("examples/src/main/resources/people.csv", "csv")
> namesAndAges <- select(df, "name", "age")
...
Caused by: org.apache.spark.sql.AnalysisException: cannot resolve '`name`' given input columns: [_c0];;
'Project ['name, 'age]
+- AnalysisBarrier
+- Relation[_c0#97] csv
```

- https://dist.apache.org/repos/dist/dev/spark/v2.4.0-rc3-docs/_site/sql-programming-guide.html#manually-specifying-options

- http://spark.apache.org/docs/2.3.2/sql-programming-guide.html#manually-specifying-options

- http://spark.apache.org/docs/2.3.1/sql-programming-guide.html#manually-specifying-options

- http://spark.apache.org/docs/2.3.0/sql-programming-guide.html#manually-specifying-options

## How was this patch tested?

Manual test in SparkR. (Please note that `RSparkSQLExample.R` fails at the last JDBC example)

```r
> df <- read.df("examples/src/main/resources/people.csv", "csv", sep=";", inferSchema=T, header=T)
> namesAndAges <- select(df, "name", "age")
```
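The failure and the fix both depend on how the file is split into columns. As a rough illustration outside Spark, the sketch below uses Python's standard `csv` module on a small sample that is assumed to mirror the semicolon-separated layout of `people.csv`. The sample text and the `parse` helper are hypothetical and are not part of the patch.

```python
import csv
import io

# Sample assumed to mirror the semicolon-separated layout of people.csv.
SAMPLE = "name;age;job\nJorge;30;Developer\nBob;32;Developer\n"


def parse(text, delimiter=","):
    """Parse CSV text into a list of rows using the given delimiter."""
    return list(csv.reader(io.StringIO(text), delimiter=delimiter))


# With the default comma delimiter every line is a single field,
# which corresponds to the lone `_c0` column in the error above.
default_rows = parse(SAMPLE)

# With ";" as the separator the first row supplies the column names.
fixed_rows = parse(SAMPLE, delimiter=";")
header, *records = fixed_rows
```

With the separator set, `header` holds the `name` and `age` columns that `select` needs, which is what `sep=";"` and `header=T` achieve in the SparkR call.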

Closes #22791 from dongjoon-hyun/SPARK-25795.

Authored-by: Dongjoon Hyun <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

(cherry picked from commit 3b45567)

Signed-off-by: Dongjoon Hyun <[email protected]>

## What changes were proposed in this pull request? This PR replaces `turing` with `tuning` in files and a file name. Currently, in the left side menu, `Turing` is shown. [This page](https://dist.apache.org/repos/dist/dev/spark/v2.4.0-rc4-docs/_site/sql-performance-turing.html) is one example. ## How was this patch tested? `grep -rin turing docs` && `find docs -name "*turing*"` Closes #22800 from kiszk/SPARK-24499-follow. Authored-by: Kazuaki Ishizaki <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit c391dc6) Signed-off-by: Wenchen Fan <[email protected]>

The original test would sometimes fail if the listener bus did not keep up, so just wait till the listener bus is empty. Tested by adding a sleep in the listener, which made the test consistently fail without the fix, but pass consistently after the fix. Closes #22799 from squito/SPARK-25805. Authored-by: Imran Rashid <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit 78c8bd2) Signed-off-by: Wenchen Fan <[email protected]>
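The idea of waiting until the bus is empty before asserting can be shown with a toy event bus. This is a sketch under stated assumptions, not Spark's listener bus: the `ListenerBus` class, its `wait_until_empty` method, and `slow_listener` are hypothetical names, and the sleep stands in for the lagging listener used to reproduce the failure.

```python
import queue
import threading
import time


class ListenerBus:
    """Toy asynchronous bus: post() enqueues, a worker thread delivers."""

    def __init__(self, listener):
        self._events = queue.Queue()
        self._listener = listener
        threading.Thread(target=self._run, daemon=True).start()

    def _run(self):
        while True:
            event = self._events.get()
            try:
                self._listener(event)
            finally:
                # Mark the event as handled so join() can return.
                self._events.task_done()

    def post(self, event):
        self._events.put(event)

    def wait_until_empty(self):
        # Blocks until every posted event has been delivered.
        self._events.join()


seen = []


def slow_listener(event):
    time.sleep(0.01)  # simulate a listener that lags behind the poster
    seen.append(event)


bus = ListenerBus(slow_listener)
for i in range(5):
    bus.post(i)

# Without this wait, a check on `seen` could run before delivery finishes.
bus.wait_until_empty()
```

After `wait_until_empty()` returns, all five events are in `seen`, so an assertion placed after it no longer races with the delivery thread.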

Member

Hi, @un-knower. Could you close this?

Can one of the admins verify this patch?

zifeif2 pushed a commit to zifeif2/spark that referenced this pull request Nov 22, 2025

Closes apache#22567 Closes apache#18457 Closes apache#21517 Closes apache#21858 Closes apache#22383 Closes apache#19219 Closes apache#22401 Closes apache#22811 Closes apache#20405 Closes apache#21933 Closes apache#22819 from srowen/ClosePRs. Authored-by: Sean Owen <[email protected]> Signed-off-by: Sean Owen <[email protected]>


What changes were proposed in this pull request?

(Please fill in changes proposed in this fix)

How was this patch tested?

(Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)

(If this patch involves UI changes, please attach a screenshot; otherwise, remove this)

Please review http://spark.apache.org/contributing.html before opening a pull request.