
HDDS-4043. allow deletion from Trash directory without -skipTrash option #2110


…tems WITHOUT need of -skipTrash flag. Ozone specific trash policy affected. Integration test, testDeleteTrashNoSkipTrash, created to test and validate ozone trash policy changes for FsShell rm command deleting Trash items without -skipTrash flag.

… Trash items WITHOUT need of -skipTrash flag. Ozone specific trash policy affected. Integration test, testDeleteTrashNoSkipTrash, created to test and validate ozone trash policy changes for FsShell rm command deleting Trash items without -skipTrash flag." This reverts commit ebfa5ea.

…tems WITHOUT need of -skipTrash flag. Ozone specific trash policy affected. Integration test, testDeleteTrashNoSkipTrash, created to test and validate ozone trash policy changes for FsShell rm command deleting Trash items without -skipTrash flag.

sadanand48

left a comment


Thanks @neils-dev for the good work. I have left a few minor comments. Apart from that, the patch looks good to me.

    public boolean moveToTrash(Path path) throws IOException {
      this.fs.getFileStatus(path);
      Path trashRoot = this.fs.getTrashRoot(path);
      Path trashCurrent = new Path(trashRoot, CURRENT);


Please remove the trashCurrent variable, as it is unused.


Removed unused variable.

hadoop-ozone/ozone-manager/src/main/java/org/apache/hadoop/ozone/om/TrashPolicyOzone.java


      throw new InvalidPathException("Invalid path Name " + key);
    }

    if (trashRootKey.startsWith(key) || key.startsWith(trashRootKey)) {


I think the key.startsWith(trashRootKey) check alone would suffice. Do we need the OR condition?


Thanks for asking to clarify. Added comments in the code to explain. In the process, I also improved the conditional to handle an edge case where key = .Tra (and the trash prefix is .Trash), which would otherwise cause an unintended action: deleting the key instead of moving it to trash.

    // first condition tests when length key is <= length trash
    // and second when length key > length trash
    if ((key.contains(this.fs.TRASH_PREFIX)) && (trashRootKey.startsWith(key))
        || key.startsWith(trashRootKey)) {


Thanks for explaining it in the comment. However, when the length of key < length of trashroot, the key would not be inside the .Trash directory.

Suppose key = vol/buck/.Trash/user/randomKey; the trashroot here would be vol/buck/.Trash

So the length of key is always greater than or equal to the length of trashroot, and the first condition would not be required.


Thanks @sadanand48, yes, for the case you described that is true. However, the trashroot is derived from the bucket under the key and includes the username: trashroot = /vol/bucket/.Trash/username. One case where length key < length trashroot is key = /vol/bucket/.Trash; here trashroot is /vol/bucket/.Trash/username.


Thanks for clarifying this @neils-dev. I forgot to consider the username in trashroot. It makes sense now. Thanks
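The edge cases discussed in this thread can be checked with a small standalone sketch. It works on plain strings rather than the real TrashPolicyOzone internals; the class name, the helper name shouldDeleteDirectly, and the TRASH_PREFIX constant defined here are assumptions made for illustration only.

```java
public class TrashKeyCheck {
  // Assumed to match the ".Trash" prefix mentioned in the thread.
  static final String TRASH_PREFIX = ".Trash";

  // Hypothetical helper: true when rm should delete the key directly
  // instead of moving it to trash.
  static boolean shouldDeleteDirectly(String key, String trashRootKey) {
    // First clause: key is the trash root or one of its ancestors that
    // still contains the trash prefix (key no longer than trash root).
    // Second clause: key lies under the trash root (key longer).
    return (key.contains(TRASH_PREFIX) && trashRootKey.startsWith(key))
        || key.startsWith(trashRootKey);
  }

  public static void main(String[] args) {
    String trashRoot = "/vol/bucket/.Trash/username";
    // Key inside the user's trash root.
    System.out.println(shouldDeleteDirectly(
        "/vol/bucket/.Trash/username/Current/key1", trashRoot)); // true
    // Key shorter than the trash root: the .Trash directory itself.
    System.out.println(shouldDeleteDirectly(
        "/vol/bucket/.Trash", trashRoot)); // true
    // Partial prefix ".Tra" must not be treated as trash.
    System.out.println(shouldDeleteDirectly(
        "/vol/bucket/.Tra", trashRoot)); // false
    // Ordinary key outside trash.
    System.out.println(shouldDeleteDirectly(
        "/vol/bucket/key1", trashRoot)); // false
  }
}
```

Without the contains(TRASH_PREFIX) guard, the key /vol/bucket/.Tra would satisfy trashRootKey.startsWith(key) and be deleted outright, which is the unintended action described above.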

…olicyOzone.java; added additional log messages to integration test TestOzoneShellHA; added small modification to conditional statement in TrashPolicyOzone to clarify statement and satisfy edge conditions for trash removal.

Thanks @sadanand48 for reviewing the PR and for the great comments! The latest commit contains changes addressing the comments.

    // Test delete from Trash directory removes item from filesystem

    // setup configuration to use TrashPolicyOzone
    // (default is TrashPolicyDefault)


@neils-dev Please use getClientConfForOFS(hostPrefix, conf) for initialising clientConf here. The integration test is failing because of this.

    // Test delete from Trash directory removes item from filesystem

    // setup configuration to use TrashPolicyOzone
    // (default is TrashPolicyDefault)
    final String hostPrefix = OZONE_OFS_URI_SCHEME + "://" + omServiceId;
    OzoneConfiguration clientConf = getClientConfForOFS(hostPrefix, conf);
    clientConf.setInt(FS_TRASH_INTERVAL_KEY, 60);
    clientConf.setClass("fs.trash.classname", TrashPolicyOzone.class,
        TrashPolicy.class);
    OzoneFsShell shell = new OzoneFsShell(clientConf);


Thanks for the comment @sadanand48. Committed a change that sets the configuration in a function which applies TrashPolicyOzone after calling getClientConfForOFS, as suggested.


Thanks @neils-dev for making the change. Sorry, I wasn't clear. I meant to use the same URI as testDeleteToTrashOrSkipTrash, i.e. to include the serviceId as part of the URI. Since this is an HA setup, it is required to pass the serviceId. Please use

    final String hostPrefix = OZONE_OFS_URI_SCHEME + "://" + omServiceId;
    OzoneConfiguration clientConf = getClientConfForOFS(hostPrefix, conf);

instead of

    OzoneConfiguration clientConf = getClientConfForOzoneTrashPolicy("ofs://localhost", conf);

I tried running the individual test in the IDE and it fails. The test only passes when the entire test file is run, which is why the integration test passed.


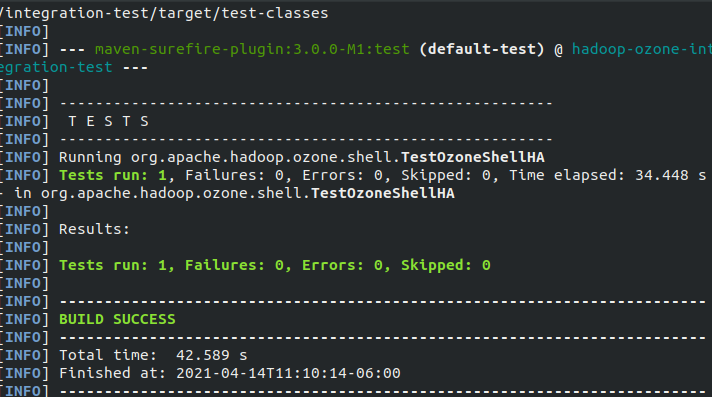

Thanks for your comment @sadanand48. Committed a change to use the service id as suggested. Thanks for following up. BTW, setting the host to localhost, as was previously done for the clientConf, should not be a problem for the integration test. It was tested both individually and as part of the entire suite. See attached image.

    hadoop-ozone/integration-test$ mvn -Dtest=TestOzoneShellHA#testDeleteTrashNoSkipTrash test
    hadoop-ozone/integration-test$ mvn -Dtest=TestOzoneShellHA test

… conf with TrashPolicyOzone - fixes for CI integration test run.

…nging conf to use test suite serviceid omserviceId.

sadanand48

left a comment


Thanks @neils-dev for addressing the comments. +1. LGTM

Thanks for the patch @neils-dev. It looks good to me, but I tried to run the unit test locally before the commit. When I try to debug it, the unit test frequently fails. So I tried to run the unit test with the IntelliJ "repeat until fail" functionality, but it always failed on the second run. Do you see the same behavior? Can you run it multiple times without any error? I would like to be sure that it's not an intermittent test.

Thanks for looking into potential problems with the integration test @elek. I can confirm the error you see in your local test environment; however, the messages I see associated with running the test with 'repeat until fail' are timeout errors - … In addition, I tried the same test setup with the "repeat until failure" option in IntelliJ on the … It does not appear to be intermittent, but rather a problem with running the tests continuously. It may be from parts of the test initialized globally for …

The problem does indeed involve running the TestOzoneShellHA trash tests repeatedly. The test cluster is initialized at the start of the test suite with the OM RPC server and OM RPC client in sync. When the test is repeated, as in this case, the RPC client falls out of sync and uses the wrong port to access the OM RPC server. This occurs with shell RPC submitRequest calls. The problem is identified; I will investigate further.

…NoSkipTrash correcting shutdown and initializing error with OmTransport RPC for shell. FileSystem needs to be closed on shutdown of testcases to properly close OmTransport between shell and om (close() method for instantiated fs for cleanup).

The recent commit contains a fix for the problem of running the trash service integration tests consecutively (repeat until failure). The OmTransport RPC connection between the shell and OM was not closed at the end of the test, so cleanup was incomplete before the next test started. Fixed by calling the close() method of the filesystem class implementation.
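The cleanup pattern behind this fix can be illustrated in isolation. The sketch below does not use the Ozone or Hadoop APIs; FakeFs is a hypothetical stand-in for a filesystem client that holds an RPC transport, and the counter stands in for connections left open between test runs.

```java
import java.io.Closeable;
import java.util.concurrent.atomic.AtomicInteger;

public class CloseOnTeardown {
  // Counts clients that are still open; stands in for leaked transports.
  static final AtomicInteger OPEN = new AtomicInteger();

  // Hypothetical stand-in for a filesystem client holding an RPC connection.
  static class FakeFs implements Closeable {
    FakeFs() {
      OPEN.incrementAndGet();
    }

    @Override
    public void close() {
      OPEN.decrementAndGet();
    }
  }

  // One "test run": try-with-resources calls close() even if the body throws.
  static void runOnce() {
    try (FakeFs fs = new FakeFs()) {
      // The test body would use fs here.
    }
  }

  public static void main(String[] args) {
    for (int i = 0; i < 3; i++) {
      runOnce();
    }
    // No client is left open, so a repeated run starts from a clean state.
    System.out.println("open clients after 3 runs: " + OPEN.get());
  }
}
```

In a test class the same effect is usually achieved by closing the client in the teardown method, so that each repetition creates and releases its own connection.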

Thanks @neils-dev for identifying the issue. @elek Can you please take a look and merge the change?

Thanks for the review @sadanand48 and the patch @neils-dev. Merging it now.


…ion (apache#2110) (cherry picked from commit 8586815) Change-Id: I6ea9af9ab59b5384e991dd3c6e2245ba1bf245b7

What changes were proposed in this pull request?

To have FsShell rm commands (e.g. bin/ozone fs -rm o3fs://bucket.volume/key) delete items from the filesystem Trash without the -skipTrash flag, changes are made to the Ozone filesystem-specific TrashPolicy. This is implemented by overriding the TrashPolicy moveToTrash method in TrashPolicyOzone. If the item to be deleted with the FsShell rm command is located in the key root trash directory, it is deleted from the filesystem. Before this patch, items removed through the rm command were moved to the root Trash directory when trash was enabled. For items in Trash, the patch gives the same behavior as the FsShell rm command with the -skipTrash flag specified. Behavior with the -skipTrash flag is unaffected.
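The behavior described above can be pictured with a toy in-memory model. This is only a sketch under simplifying assumptions: the key space is a plain set of strings, the trash root path and the Current subdirectory layout are hypothetical, and only the "key lies under the trash root" case is modeled, not the full conditional used in TrashPolicyOzone.

```java
import java.util.Set;
import java.util.TreeSet;

public class TrashRmSketch {
  // Hypothetical trash root for one user; real roots come from the filesystem.
  static final String TRASH_ROOT = "/vol1/bucket1/.Trash/user";

  // In-memory stand-in for the bucket's key space.
  static final Set<String> keys = new TreeSet<>();

  // Mimics "rm" without -skipTrash under the patched policy.
  static void rm(String key) {
    if (!keys.remove(key)) {
      throw new IllegalArgumentException("No such key: " + key);
    }
    if (!key.startsWith(TRASH_ROOT)) {
      // Outside trash: keep the data, relocated under the trash root.
      keys.add(TRASH_ROOT + "/Current" + key);
    }
    // Inside trash: nothing is re-added, so the key is deleted.
  }

  public static void main(String[] args) {
    keys.add("/vol1/bucket1/key1");
    rm("/vol1/bucket1/key1");
    System.out.println(keys); // key1 now lives under the trash root
    rm(TRASH_ROOT + "/Current/vol1/bucket1/key1");
    System.out.println(keys); // empty: removed from trash without -skipTrash
  }
}
```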

This patch requires configuring the Hadoop filesystem to use the Ozone-specific TrashPolicyOzone through core-site.xml, as in:

    <property>
      <name>fs.trash.classname</name>
      <value>org.apache.hadoop.ozone.om.TrashPolicyOzone</value>
    </property>

What is the link to the Apache JIRA

https://issues.apache.org/jira/browse/HDDS-4043

How was this patch tested?

Patch was tested through both integration tests and manual tests.

The integration test is implemented in the Ozone shell integration test TestOzoneShellHA.java. Tested through

    hadoop-ozone/integration-test$ mvn -Dtest=TestOzoneShellHA#testDeleteTrashNoSkipTrash test

    Test set: org.apache.hadoop.ozone.shell.TestOzoneShellHA
    Tests run: 1, Failures: 0, Errors: 0, Skipped: 0, Time elapsed: 28.099 s - in org.apache.hadoop.ozone.shell.TestOzoneShellHA

Manual test through docker cluster deployment:

before patch ->

    <property>
      <name>fs.trash.classname</name>
      <value>org.apache.hadoop.fs.TrashPolicyDefault</value>
    </property>

OR leave unchanged (default behavior)

enable Trash

    <property>
      <name>fs.trash.interval</name>
      <value>60</value>
    </property>

    bin/ozone fs -rm -R o3fs://bucket1.vol1/key1
    bin/ozone fs -rm -R o3fs://bucket1.vol1/.Trash

Trash directory remains.

with patch ->

    <property>
      <name>fs.trash.classname</name>
      <value>org.apache.hadoop.ozone.om.TrashPolicyOzone</value>
    </property>

enable Trash

    <property>
      <name>fs.trash.interval</name>
      <value>60</value>
    </property>

    bin/ozone fs -rm -R o3fs://bucket1.vol1/key1
    bin/ozone fs -rm -R o3fs://bucket1.vol1/.Trash

Trash directory is deleted.