[SITE] Document cache.expiration-interval-ms in Spark Configuration #3787

9601361 to f4bb4ef

cc @rdblue @nastra @samredai @jackye1995 @RussellSpitzer I've added some documentation for this config key. I still need to plumb it through Flink, which I'm working on today, and I will update the Flink docs afterwards.

site/docs/spark-configuration.md

Outdated

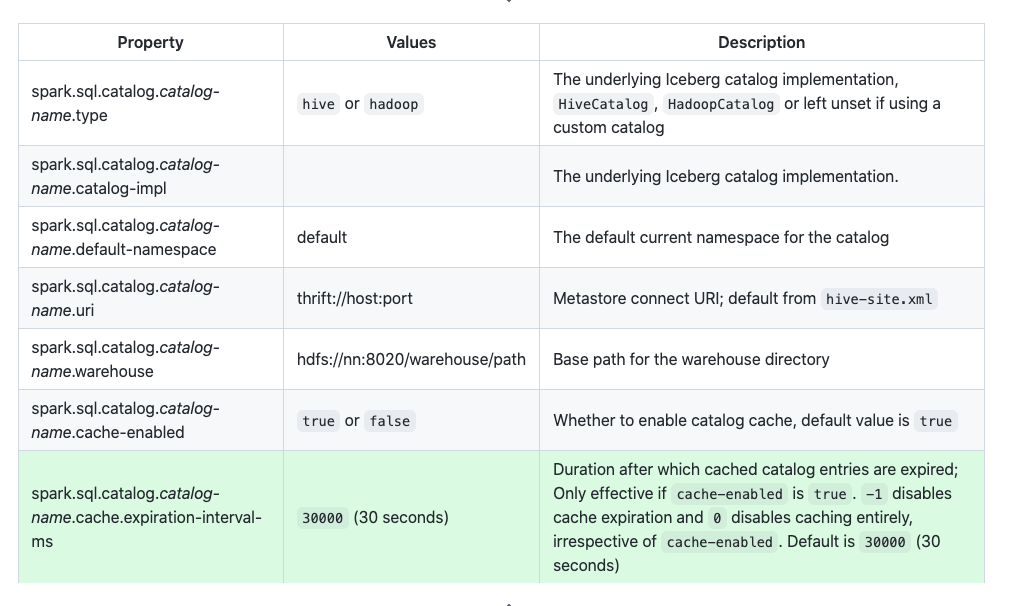

| | spark.sql.catalog._catalog-name_.warehouse | hdfs://nn:8020/warehouse/path | Base path for the warehouse directory | | ||

| | spark.sql.catalog._catalog-name_.cache-enabled | `true` or `false` | Whether to enable catalog cache, default value is `true` | | ||

| | Property | Values | Description | | ||

| |---------------------------------------------------------------|-------------------------------|----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------| |

There is no need to change all the other lines so that their description blocks have the same size; the website will format them correctly anyway. Could you remove the unrelated changes?

Sure.

Updated to just one line change.

Looks good! Thanks for updating this.

f4bb4ef to 3ea3a09

Thanks, @kbendick!

nastra left a comment

belated +1

* apache/iceberg#3723
* apache/iceberg#3732
* apache/iceberg#3749
* apache/iceberg#3766
* apache/iceberg#3787
* apache/iceberg#3796
* apache/iceberg#3809
* apache/iceberg#3820
* apache/iceberg#3878
* apache/iceberg#3890
* apache/iceberg#3892
* apache/iceberg#3944
* apache/iceberg#3976
* apache/iceberg#3993
* apache/iceberg#3996
* apache/iceberg#4008
* apache/iceberg#3758 and 3856
* apache/iceberg#3761
* apache/iceberg#2062
* apache/iceberg#3422
* remove restriction related to legacy parquet file list

Adds documentation to the site for the catalog config key `cache.expiration-interval-ms` for Spark.

This has not been implemented for Flink yet, so I've not added it to the Flink documentation (working on that in a separate PR).

Here's a reference to the JavaDoc for this configuration value:

iceberg/core/src/main/java/org/apache/iceberg/CatalogProperties.java, lines 42 to 52 in 9c3e340
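For illustration, here is a minimal sketch of how the key might be passed to Spark, assuming it takes the same `spark.sql.catalog._catalog-name_.` prefix as the other catalog properties in the table. The helper `catalog_cache_conf`, the catalog name `my_catalog`, and the 60-second value are hypothetical and are not part of this PR.

```python
def catalog_cache_conf(catalog_name: str, expiration_ms: int) -> dict[str, str]:
    """Build Spark conf entries for a catalog's cache settings.

    Hypothetical helper for illustration only; assumes the key is set
    under the usual spark.sql.catalog.<catalog-name>. prefix.
    """
    prefix = f"spark.sql.catalog.{catalog_name}"
    return {
        # Existing documented property: turn the catalog cache on.
        f"{prefix}.cache-enabled": "true",
        # Property documented by this PR: expiration interval in milliseconds.
        f"{prefix}.cache.expiration-interval-ms": str(expiration_ms),
    }


# Example values (assumptions): catalog "my_catalog", 60 000 ms interval.
conf = catalog_cache_conf("my_catalog", 60_000)

# Print the entries in the form accepted by spark-submit / spark-sql.
for key, value in sorted(conf.items()):
    print(f"--conf {key}={value}")
```

The same key/value pairs could also be applied one by one with `SparkSession.builder.config(key, value)` when the session is built in code.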