[HUDI-1180] Upgrade HBase to 2.4.9 #5004

cfd58a7 to 1b354cf

@hudi-bot run azure

//LOG.error("\n\n ###### Stdout #######\n" + callback.getStdout().toString());
}
callback.getStderr().flush();
callback.getStdout().flush();
LOG.error("\n\n ###### Stdout #######\n" + callback.getStdout().toString());

This debugging code will be removed once the PR is near landing.

@danny0405 @xiarixiaoyao @alexeykudinkin @umehrot2 PTAL after more changes to make the HBase upgrade work with Spark 2.4.4, Hadoop 2.8.4, and Hive 2.3.3 (tested with Docker setup). @danny0405 could you help verify whether the changes to hudi-flink-bundle are fine? I don't see any ITs using Flink in the Docker setup, though the hudi-flink-bundle changes are similar to those in other bundles, which are verified. Maybe we should add Flink ITs to the Docker setup as well.

fe2f135 to 70c1029

Can we test the reverse? For example, writers may be upgraded while query engines are not. So can we write a Hudi table with this change and then try to read it with older code?

Took a pass. Wanted to clarify a few things. Also, make sure we handle upgrade and downgrade.

private final Configuration hadoopConf;
private final BloomFilter bloomFilter;
private final KeyValue.KVComparator hfileComparator;
private final CellComparator hfileComparator;

Are these backward and forward compatible? I.e., is KVComparator written into the HFile footer?

Per discussion, there is a potential issue of 0.11 queries not being able to read 0.9 tables: the org.apache.hadoop.hbase.KeyValue.KVComparator class name is written into HFile 1.x files (0.9 tables), and we now shade HBase in 0.11, which relocates the class to org.apache.hudi.org.apache.hadoop.hbase.KeyValue.KVComparator.

If we ship the KVComparator class along with our jar, then we will be good.

it can just extend CellComparatorImpl

Actually, I took another approach here. In HBase, there is already backward compatibility to transform the old comparator. When we package the Hudi bundles, we need to make sure that org.apache.hadoop.hbase.KeyValue$KeyComparator (written to the metadata table HFiles in Hudi 0.9.0) is not shaded, not to break the backward compatibility logic in HBase. The new rule is added for this. More details can be found in the PR description.

<relocation>
  <pattern>org.apache.hadoop.hbase.</pattern>
  <shadedPattern>org.apache.hudi.org.apache.hadoop.hbase.</shadedPattern>
  <excludes>
    <exclude>org.apache.hadoop.hbase.KeyValue$KeyComparator</exclude>
  </excludes>
</relocation>

In this way, we don't need to ship our own comparator in org.apache.hadoop.hbase and exclude that from shading.
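To illustrate why the exclusion matters, here is a minimal, self-contained sketch. It is not HBase's actual code. It assumes, as described in this thread, that the reader maps the legacy comparator class name stored in the HFile trailer onto the new comparator by comparing strings, and that shading would relocate the legacy name known to the reader along with the package. `resolve` and all constant names are hypothetical.

```java
import java.util.Objects;

/** Hypothetical sketch of legacy comparator-name resolution; not HBase's actual API. */
final class ComparatorNameSketch {
    // Name written into metadata table HFile trailers by Hudi 0.9.0 (per the discussion above).
    static final String LEGACY_NAME = "org.apache.hadoop.hbase.KeyValue$KeyComparator";
    // Assumption: what the reader's copy of the name becomes if the class is relocated.
    static final String SHADED_LEGACY_NAME = "org.apache.hudi." + LEGACY_NAME;
    // Stand-in label for the comparator the legacy name is translated to.
    static final String NEW_COMPARATOR = "CellComparatorImpl";

    /** Translates the name found in the trailer, given the legacy name the reader knows. */
    static String resolve(String nameInTrailer, String legacyNameKnownToReader) {
        Objects.requireNonNull(nameInTrailer, "nameInTrailer");
        if (nameInTrailer.equals(legacyNameKnownToReader)) {
            return NEW_COMPARATOR;
        }
        throw new IllegalStateException("Unknown comparator class: " + nameInTrailer);
    }

    public static void main(String[] args) {
        // Unshaded legacy name: the old file resolves to the new comparator.
        System.out.println(resolve(LEGACY_NAME, LEGACY_NAME));
        // Relocated legacy name: the string in the old file no longer matches.
        try {
            resolve(LEGACY_NAME, SHADED_LEGACY_NAME);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Under these assumptions, the trailer of an old file always carries the unshaded name, so the reader's copy of that name has to stay unshaded for the match to succeed.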

hudi-common/src/main/java/org/apache/hudi/io/storage/HoodieHFileReader.java

} catch (IOException e) {
  throw new HoodieException("Could not read min/max record key out of file information block correctly from path", e);
}
HFileInfo fileInfo = reader.getHFileInfo();

Can we UT this?

I added more unit tests around the HFile reader.

Map<byte[], byte[]> fileInfo = reader.loadFileInfo();
return new String[] { new String(fileInfo.get(KEY_MIN_RECORD.getBytes())),
    new String(fileInfo.get(KEY_MAX_RECORD.getBytes()))};
} catch (IOException e) {

Good practice to wrap this in a HoodieException, no?

IOException is a checked exception, so if the new HFile API doesn't throw it, we can't catch it here.

Yeah, the new API getHFileInfo() returns the stored file info directly and there is no exception we can catch here now.

HFileReaderImpl

@Override
public HFileInfo getHFileInfo() {
  return this.fileInfo;
}

Generally, we should wrap it with HoodieException.
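A minimal sketch of the wrapping pattern discussed here, using stand-in types only: `SketchException` plays the role of an unchecked project exception such as HoodieException, `FileInfoLoader` plays the role of an API that still declares `IOException`, and the map keys are hypothetical. If the called method does not declare `IOException`, the Java compiler rejects the `catch (IOException e)` clause, which is the point made above.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

final class WrapCheckedExceptionSketch {
    /** Stand-in for an unchecked project exception (hypothetical). */
    static final class SketchException extends RuntimeException {
        SketchException(String message, Throwable cause) {
            super(message, cause);
        }
    }

    /** Stand-in for a reader API that declares a checked IOException. */
    interface FileInfoLoader {
        Map<String, byte[]> load() throws IOException;
    }

    /** Reads the min/max keys and rethrows any IOException as an unchecked exception. */
    static String[] readMinMax(FileInfoLoader loader) {
        try {
            Map<String, byte[]> info = loader.load();
            return new String[] {
                new String(info.get("minRecordKey"), StandardCharsets.UTF_8),
                new String(info.get("maxRecordKey"), StandardCharsets.UTF_8)};
        } catch (IOException e) {
            // Keep the original exception as the cause so the stack trace is not lost.
            throw new SketchException("Could not read min/max record key", e);
        }
    }

    public static void main(String[] args) {
        Map<String, byte[]> info = new HashMap<>();
        info.put("minRecordKey", "a".getBytes(StandardCharsets.UTF_8));
        info.put("maxRecordKey", "z".getBytes(StandardCharsets.UTF_8));
        String[] minMax = readMinMax(() -> info);
        System.out.println(minMax[0] + " " + minMax[1]);
    }
}
```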

<include>org.apache.hbase:hbase-common</include>
<include>org.apache.hbase:hbase-client</include>
<include>org.apache.hbase:hbase-hadoop-compat</include>

Is this the absolute minimal set of artifacts needed?

No need to take this up as part of this PR, but I actually want to suggest going one step further:

Since we're mostly reliant on HFile and the classes it depends on, can we try to filter out the packages whose removal won't break it?

My hunch is that we can greatly reduce the 16MB overhead by just cleaning up all the stuff that is bolted onto HBase.

That's a good idea. In fact, I've tried it, but verifying is a very manual, time-consuming process, and I gave up after a few failures. We also need to keep future upgrades in mind. Still, I would be very happy to reduce the bundle size in any way we can, and we should take another stab at this idea in the future.

Yeah, that's good to have. The problem, as @codope pointed out, is that such a process is time-consuming. For now, what I can say is that the newly added artifacts are necessary: I started with the old pom and incrementally added new artifacts whenever I saw a NoClassDef exception, until every test passed.

One thing we may try later is to add and trim hudi-hbase-shaded by excluding transitives and only depend on hudi-hbase-shaded here.

Yeah, it's a tedious manual process for sure, but I think we can do it pretty fast: we just look at the packages imported by HFile, then look at the files imported by those, and so on. After that, we can run the tests to check whether we collected everything properly.

The hypothesis is that this set should be reasonably bounded (why wouldn't it?) so this iteration should be pretty fast.

Can you please create a task and link it here to follow-up?
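The iteration described above amounts to a transitive closure over an import graph. Below is a minimal sketch of that idea as a breadth-first traversal. The graph is made-up sample data: `DepA`, `DepB`, `DepC`, and `Unrelated` are hypothetical names, not real HBase classes, and how the import map is obtained is left open.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

final class ImportClosureSketch {
    /** Returns the root plus everything reachable from it through the import map. */
    static Set<String> closure(String root, Map<String, List<String>> imports) {
        Set<String> seen = new LinkedHashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        queue.add(root);
        while (!queue.isEmpty()) {
            String current = queue.poll();
            if (!seen.add(current)) {
                continue; // already visited; this also guards against import cycles
            }
            for (String dep : imports.getOrDefault(current, Collections.<String>emptyList())) {
                if (!seen.contains(dep)) {
                    queue.add(dep);
                }
            }
        }
        return seen;
    }

    /** Hypothetical sample graph with a cycle and one unreachable node. */
    static Map<String, List<String>> sampleGraph() {
        Map<String, List<String>> graph = new HashMap<>();
        graph.put("HFile", Arrays.asList("DepA", "DepB"));
        graph.put("DepA", Arrays.asList("DepC"));
        graph.put("DepB", Arrays.asList("DepC", "HFile"));
        graph.put("Unrelated", Arrays.asList("DepA"));
        return graph;
    }

    public static void main(String[] args) {
        // Anything outside the closure is a candidate for exclusion from the bundle.
        System.out.println(closure("HFile", sampleGraph()));
    }
}
```

Classes loaded reflectively would not show up in such a graph, which is one reason running the tests afterwards is still needed.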

HUDI-3674 is created as a follow-up.

<shadedPattern>${flink.bundle.shade.prefix}org.apache.avro.</shadedPattern>
</relocation>
<relocation>
<pattern>org.apache.commons.io.</pattern>

This reminds me: older bootstrap index files may have an unshaded key comparator class saved within the HFile. Does that cause any issues, i.e., can we read such files?

I need to check that. This is another compatibility test.

Actually, bootstrap index HFiles always use our own comparator class under org.apache.hudi package so we're good here. The problem is with the metadata table HFiles in Hudi 0.9.0.

<pattern>com.fasterxml.jackson.</pattern>
<shadedPattern>${flink.bundle.shade.prefix}com.fasterxml.jackson.</shadedPattern>
</relocation>
<!-- The classes below in org.apache.hadoop.metrics2 package come from

Why?

There are classes from hbase-hadoop2-compat and hbase-hadoop-compat that implement org.apache.hadoop.metrics2 classes in hadoop-common. We cannot shade all classes in org.apache.hadoop.metrics2, since that would cause NoClassDef errors for Hadoop classes. So we have to pick and choose the HBase-specific ones here.

<pattern>com.google.common.</pattern>
<shadedPattern>org.apache.hudi.com.google.common.</shadedPattern>
</relocation>
<!-- The classes below in org.apache.hadoop.metrics2 package come from

I assume all of this is repeated? Does Maven offer a way to reuse the include? I am wondering if we can build a hudi-hbase-shaded package and simply include that everywhere. Would that be easier to maintain?

+1

Yes, we can have hudi-hbase-shaded as a separate module. I'll see if this can be done soon; if not, I'll take it as a follow-up.

HUDI-3673 is created as a follow-up.

hudi-common/src/main/java/org/apache/hudi/io/storage/HoodieHFileReader.java

HBaseConfiguration::addHbaseResources(). To get around this issue,
since HBase loads "hbase-site.xml" after "hbase-default.xml", we
provide hbase-site.xml from the bundle so that HBaseConfiguration
can pick it up and ensure the right defaults.

Thanks for adding explainer!
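The load-order argument in that explainer can be sketched with plain `java.util.Properties` standing in for the configuration object. This is an analogy only, not how HBaseConfiguration is implemented, and the keys `example.defaults.version` and `example.other` are hypothetical.

```java
import java.util.Properties;

final class ConfigOverrideSketch {
    /** Merges resources in load order; a later resource overrides earlier values. */
    static Properties loadInOrder(Properties... resources) {
        Properties merged = new Properties();
        for (Properties resource : resources) {
            merged.putAll(resource);
        }
        return merged;
    }

    public static void main(String[] args) {
        // Stand-in for defaults picked up from an older jar on the classpath.
        Properties defaults = new Properties();
        defaults.setProperty("example.defaults.version", "1.1.1");
        defaults.setProperty("example.other", "kept");

        // Stand-in for the site file shipped in the bundle, loaded afterwards.
        Properties site = new Properties();
        site.setProperty("example.defaults.version", "2.4.9");

        Properties merged = loadInOrder(defaults, site);
        System.out.println(merged.getProperty("example.defaults.version"));
        System.out.println(merged.getProperty("example.other"));
    }
}
```

Because the site resource is applied last, it wins for every key it defines, while keys it leaves out keep their default values.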

codope

left a comment

Great effort @yihua and ❤️ the PR description.

</exclusions>
</dependency>
<dependency>
<groupId>org.apache.zookeeper</groupId>

Why is this needed?

This is needed for TestSparkHoodieHBaseIndex.

70c1029 to 29100bd

Good point. I'll take this up as well.

9f05eb9 to 8ebfdf9

schema = new Schema.Parser().parse(new String(reader.loadFileInfo().get("schema".getBytes())));
schema = new Schema.Parser().parse(new String(reader.getHFileInfo().get(KEY_SCHEMA.getBytes())));
}
HFileScanner scanner = reader.getScanner(false, false);

@cuibo01 Thanks for raising this. Do you mean that we should use the close() method to properly close the reader when done reading?

Yes, we should close the scanner.

This class is for the test only. Regarding the production code, HoodieHFileReader properly closes the HFile reader:

@Override
public synchronized void close() {
  try {
    reader.close();
    reader = null;
    if (fsDataInputStream != null) {
      fsDataInputStream.close();
    }
    keyScanner = null;
  } catch (IOException e) {
    throw new HoodieIOException("Error closing the hfile reader", e);
  }
}
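For test code, one way to guarantee that a reader and its scanner are released is try-with-resources. The sketch below uses a hypothetical `TrackedResource` stand-in rather than the real HBase reader and scanner types, and only demonstrates that resources are closed automatically, in reverse order of declaration.

```java
import java.util.ArrayList;
import java.util.List;

final class CloseSketch {
    /** Hypothetical stand-in for a reader or scanner; records when it is closed. */
    static final class TrackedResource implements AutoCloseable {
        private final String name;
        private final List<String> log;

        TrackedResource(String name, List<String> log) {
            this.name = name;
            this.log = log;
        }

        @Override
        public void close() {
            log.add("closed " + name);
        }
    }

    /** Opens two resources, "reads", and lets try-with-resources close both. */
    static List<String> readAndClose() {
        List<String> log = new ArrayList<>();
        try (TrackedResource reader = new TrackedResource("reader", log);
             TrackedResource scanner = new TrackedResource("scanner", log)) {
            log.add("read");
        }
        return log;
    }

    public static void main(String[] args) {
        // Prints [read, closed scanner, closed reader]
        System.out.println(readAndClose());
    }
}
```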

codope

left a comment

Looks in good shape. Few minor comments/clarifications.

@Override
@Test
public void testWriteReadWithEvolvedSchema() throws Exception {
  // Disable the test with evolved schema for HFile since it's not supported

Let's remove it if it's not needed or track it in a JIRA if you plan to add support later.

Tracked here: HUDI-3683

We need the override here to disable the test.

@ParameterizedTest
@ValueSource(strings = {
    "/hudi_0_9_hbase_1_2_3", "/hudi_0_10_hbase_1_2_3", "/hudi_0_11_hbase_2_4_9"})
public void testHoodieHFileCompatibility(String hfilePrefix) throws IOException {

I like the way it's being tested but we will need to update fixtures as and when there're changes to the sources from which they were generated. They wouldn't be too frequent but just something to keep in mind.

Makes sense. The fixtures for older releases should not be changed given they are generated from public releases and should be used as is for compatibility tests.

}
@Override
public void testReaderFilterRowKeys() {
  // TODO: fix filterRowKeys test for ORC due to a bug in ORC logic

What's the bug here? Are we tracking it?

Tracked here: HUDI-3682

LOG.info("Exit code for command : " + exitCode);
if (exitCode != 0) {
LOG.error("\n\n ###### Stdout #######\n" + callback.getStdout().toString());
//LOG.error("\n\n ###### Stdout #######\n" + callback.getStdout().toString());

Let's remove this condition, or maybe bring L243-L245 inside this conditional?

This is debugging code for showing logs when there are IT failures. I'll remove these changes.

…ore unit tests in TestHoodieHFileReaderWriter

…or backward compatibility

2b54590 to 0733dcc

Co-authored-by: Sagar Sumit <[email protected]>

What is the purpose of the pull request

This PR upgrades the HBase version from 1.2.3 to 2.4.9, addresses issues in Hudi's HFile usage caused by HBase API changes, and shades HBase-related dependencies in Hudi bundles.

Brief change log

HoodieHBaseKVComparatorfromCellComparatorImplwhich is aCellComparatorimplementation, instead ofKeyValue.KVComparatorsince it is deprecated in 2.x.HoodieHFileUtilsto constructHFile.Readerin different ways.HoodieHFileReaderconstructor with byte array, the file system and dummy path are passed in. The dummy path can be either the log file path or base path of the table, which is not actually used for reading content, only serving the purpose of reference.hudi-common/src/main/resources/hbase-site.xmlcontaining HBase default configs from thehbase-common 2.4.9we use, to override the default configs loaded fromhbase-default.xmlfrom an older HBase version on the classpath and to ensure correct default configs for Hudi HBase usage. In Hive, the Hive server loads all lib jars including HBase jars with its correspondinghbase-default.xmlinto class path (e.g., HBase 1.1.1), and that can cause conflict with thehbase-default.xmlin Hudi bundles (HBase 2.4.9). The exception is thrown as follows:Caused by: java.lang.RuntimeException: hbase-default.xml file seems to be for an older version of HBase (1.1.1), this version is 2.4.9. Relevant logic causing such exception can be found in HBaseConfiguration::addHbaseResources(). To get around this issue, since HBase loadshbase-site.xmlafterhbase-default.xml, we provide hbase-site.xml from the bundle so that HBaseConfiguration can pick it up and ensure the right defaults.maven-shade-pluginfor packaging hudi bundlesincludes for HBase 2.x related dependenciesrelocationfororg.apache.commons.io.,org.apache.hadoop.hbase.,org.apache.hbase., andorg.apache.htrace.relocationfor classes fromhbase-hadoop2-compatandhbase-hadoop-compat. There are classes from these two artifacts which implementorg.apache.hadoop.metrics2classes inhadoop-common. We cannot shade all classes inorg.apache.hadoop.metrics2which would causeNoClassDefof hadoop classes. 
So we have to pick and choose here for HBase-specific ones.**/*.protoandhbase-webapps/**from bundleshudi-commonin the bundle pomshbase-shaded-serveris discontinued andhbase-shadedis maintained afterwards, which bundles all HBase related libs, most of which are not needed by us. So the originalhbase-*dependencies are used and we control the shading instead.HFileBootstrapIndexandHoodieHFileReader/HoodieHFileWriter. Note that theHFileBootstrapIndexdoes not use theHoodieHFileReader/HoodieHFileWriter. There are nuances here that affect the backward compatibility, which is considered in this PR. As already mentioned, HBase 1.x usesKeyValue.KVComparatorwhile HBase 2.x usesCellComparator, although the conceptual functionality is the same. The comparator class name is written to the trailer of the HFile. Below shows the comparator class name written to the HFile trailer in different Hudi + HBase versions:HFileBootstrapIndexHoodieHFileWriterHFileBootstrapIndex$HoodieKVComparatorhbase.KeyValue$KeyComparatorHFileBootstrapIndex$HoodieKVComparatorHoodieHBaseKVComparatorHFileBootstrapIndex$HoodieKVComparatorHoodieHBaseKVComparatorFull class name:

HFileBootstrapIndex$HoodieKVComparator:org.apache.hudi.common.bootstrap.index.HFileBootstrapIndex$HoodieKVComparatorhbase.KeyValue$KeyComparator:org.apache.hadoop.hbase.KeyValue$KeyComparatorHoodieHBaseKVComparator:org.apache.hudi.io.storage.HoodieHBaseKVComparatorThere are no compatibility issues with

HoodieKVComparatorandHoodieHBaseKVComparatorgiven that we control the implementation. Fororg.apache.hadoop.hbase.KeyValue$KeyComparator, HBase already provides the conversion when retrieving the comparator class in FixedFileTrailer:The

KeyValue.COMPARATOR.getLegacyKeyComparatorName()returnsorg.apache.hadoop.hbase.KeyValue$KeyComparatorin KeyValue class. In the unit test, it is verified that the HFile written by Hudi 0.9.0HoodieHFileWritercan be read with the comparator transformed toCellComparatorImpl.When we package the Hudi bundles, we need to make sure that

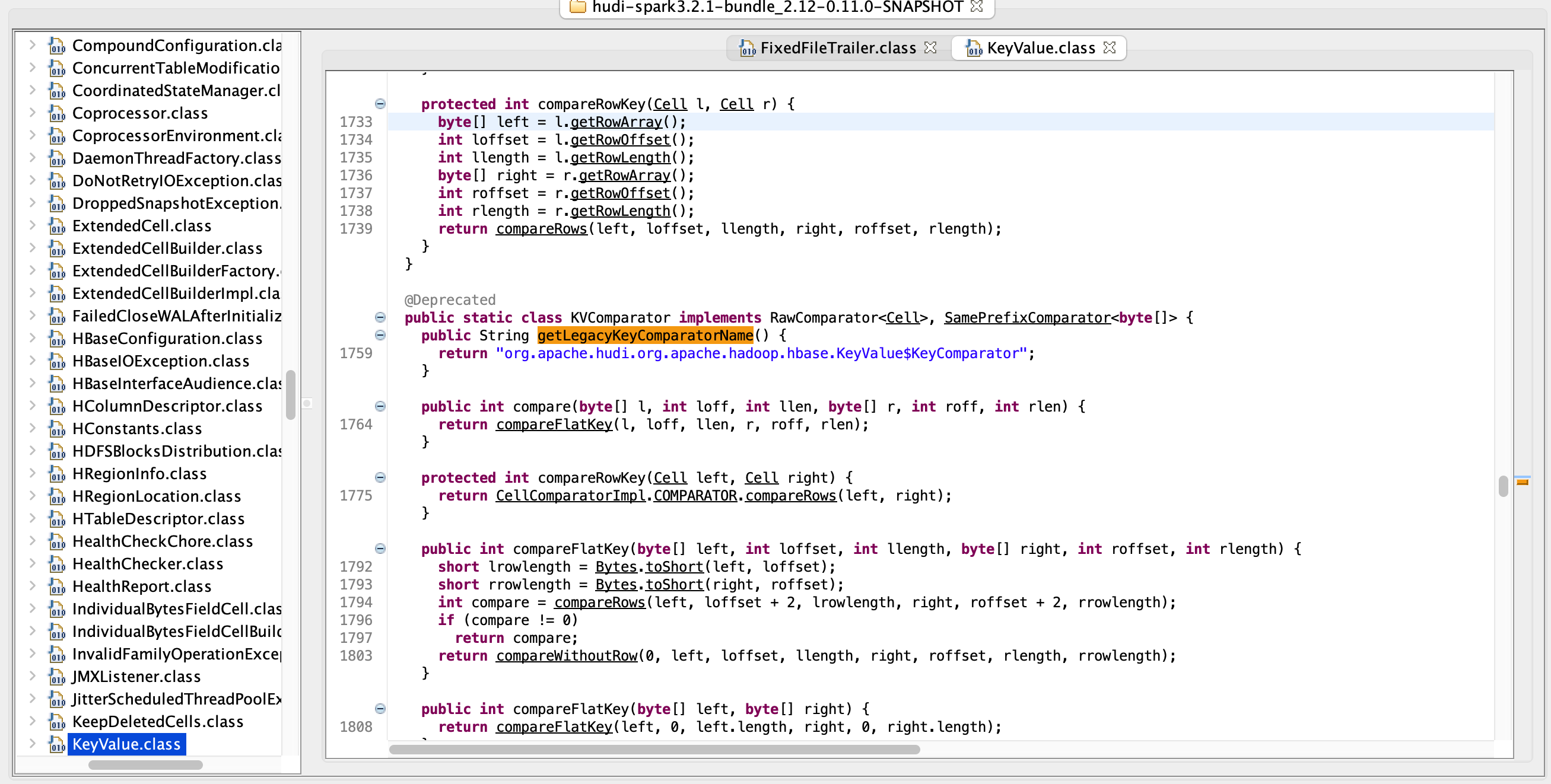

org.apache.hadoop.hbase.KeyValue$KeyComparatoris not shaded, not to break the backward compatibility logic in HBase. That's why this relocation rule is added:The bundle BC logic is verified by using Java Decompiler. Before excluding the class in shading, the new bundle cannot read Hudi metadata table written by 0.9.0:

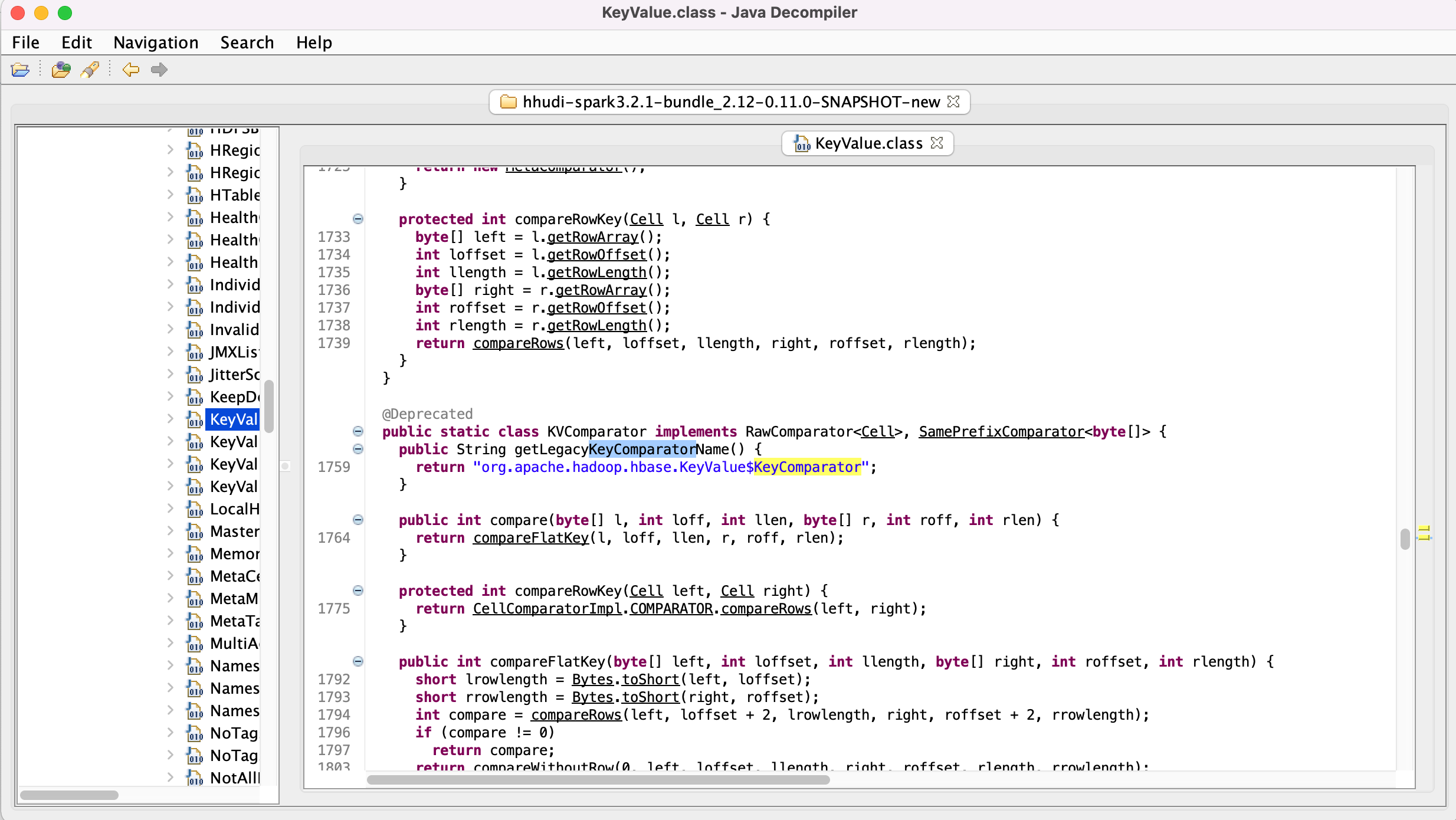

After excluding the class in shading, the new bundle can read Hudi metadata table written by 0.9.0:

HoodieHBaseKVComparatoris not present in Hudi 0.9.0 and before. However, the metadata table is forward compatible since 0.10.0.HoodieHFileReader.Verify this pull request

This PR adds new tests:

- `HoodieFileReader` and `HoodieFileWriter` by adding an abstract class `TestHoodieReaderWriterBase` for common tests for all file formats. Adding more verification `TestHoodieReaderWriterBase` to cover all APIs. Makes `TestHoodieHFileReaderWriter` and `TestHoodieOrcReaderWriter` extend `TestHoodieReaderWriterBase`.
- `TestHoodieHFileReaderWriter` to cover HFile specific read and write logic.
- `TestHoodieHFileReaderWriter` and test fixtures in resources. Three types of HFiles are added for all three Hudi versions (9 HFile fixtures in total). These are all cases where HFile is used. `TestHoodieReaderWriterBase#testWriteReadPrimitiveRecord()`; (2) HFile based on complex schema generated from `TestHoodieReaderWriterBase#testWriteReadComplexRecord()`; (3) HFile based on bootstrap index generated from `TestBootstrapIndex#testBootstrapIndex()`.

This PR is covered by existing unit, functional and CI tests for core write/read flows, compatibility with docker setup of Spark 2.4.4, Hadoop 2.8.4, Hive 2.3.3.

Further compatibility testing:

Bundle jar:

The bundle jars are verified so that HBase related classes are properly shaded (`jar tf | grep "hbase\|htrace\|metrics2\|commons.io"`) and Google guava is not present (`jar tf | grep guava`).

Unfortunately, the size of bundle jars is significantly increased due to new necessary packages (~16MB additional size for most of the bundles).

Committer checklist

Has a corresponding JIRA in PR title & commit

Commit message is descriptive of the change

CI is green

Necessary doc changes done or have another open PR

For large changes, please consider breaking it into sub-tasks under an umbrella JIRA.