
[HUDI 1308] Harden RFC-15 Implementation based on production testing #2441


vinothchandar left a comment

@prashantwason @rmpifer @umehrot2 Kindly take a pass. This is still WIP, but I want to run it by you in case there are major blockers.

Resolved review threads (outdated) on:

- ...-client/hudi-client-common/src/main/java/org/apache/hudi/table/HoodieTimelineArchiveLog.java
- ...client/hudi-client-common/src/main/java/org/apache/hudi/table/action/clean/CleanPlanner.java
- ...park-client/src/main/java/org/apache/hudi/metadata/SparkHoodieBackedTableMetadataWriter.java
- ...lient/hudi-spark-client/src/test/java/org/apache/hudi/metadata/TestHoodieBackedMetadata.java (2 threads)
- hudi-common/src/main/java/org/apache/hudi/metadata/BaseTableMetadata.java
- hudi-common/src/main/java/org/apache/hudi/metadata/HoodieBackedTableMetadata.java (4 threads)
- hudi-common/src/main/java/org/apache/hudi/metadata/HoodieMetadataFileSystemView.java

prashantwason left a comment

Looks fine. But it is getting complicated now, and it is difficult to trace where it is opened and closed. Please add liberal function comments, at least to signify the lifetime of the metadata reader/writer.

Resolved review threads (outdated) on:

- ...di-client-common/src/main/java/org/apache/hudi/metadata/HoodieBackedTableMetadataWriter.java
- hudi-common/src/main/java/org/apache/hudi/common/fs/FSUtils.java

Codecov Report

Coverage Diff

| Metric | master | #2441 | +/- |
|---|---|---|---|
| Coverage | 50.69% | 9.68% | -41.01% |
| Complexity | 3059 | 48 | -3011 |
| Files | 419 | 53 | -366 |
| Lines | 18810 | 1930 | -16880 |
| Branches | 1924 | 230 | -1694 |
| Hits | 9535 | 187 | -9348 |
| Misses | 8498 | 1730 | -6768 |
| Partials | 777 | 13 | -764 |

@prashantwason IMO we should always have opened and closed with each fetch call. That way, even if the object is GC-ed afterwards, the resources are properly closed. If we need to amortize the open/close/merge cost, then we can just add a batch API, e.g.
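The following is not the example from the comment above. It is only a minimal illustration of the idea, with every name in it (`PerCallMetadataLookup`, `SliceReader`, `getFiles`, `getFilesBatch`) hypothetical rather than taken from Hudi. It shows a reader that is opened and closed inside each fetch call via try-with-resources, plus a batch call that pays for one open/close across many lookups.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch; none of these names are actual Hudi APIs. */
final class PerCallMetadataLookup {
  private final Map<String, List<String>> filesByPartition;
  private int opens;   // how many times the simulated file slice was opened
  private int closes;  // how many times it was closed

  PerCallMetadataLookup(Map<String, List<String>> filesByPartition) {
    this.filesByPartition = filesByPartition;
  }

  /** Stand-in for a reader that would hold file handles while it is open. */
  private final class SliceReader implements AutoCloseable {
    SliceReader() {
      opens++;
    }

    List<String> lookup(String partition) {
      return filesByPartition.getOrDefault(partition, List.of());
    }

    @Override
    public void close() {
      closes++;
    }
  }

  /** Single fetch: the reader is opened and closed within this call. */
  List<String> getFiles(String partition) {
    try (SliceReader reader = new SliceReader()) {
      return reader.lookup(partition);
    }
  }

  /** Batch fetch: one open/close is amortized over all requested partitions. */
  Map<String, List<String>> getFilesBatch(List<String> partitions) {
    Map<String, List<String>> result = new LinkedHashMap<>();
    try (SliceReader reader = new SliceReader()) {
      for (String partition : partitions) {
        result.put(partition, reader.lookup(partition));
      }
    }
    return result;
  }

  int openCount() {
    return opens;
  }

  int closeCount() {
    return closes;
  }

  public static void main(String[] args) {
    PerCallMetadataLookup lookup = new PerCallMetadataLookup(Map.of(
        "2021/01/01", List.of("a.parquet", "b.parquet"),
        "2021/01/02", List.of("c.parquet")));

    lookup.getFiles("2021/01/01");
    lookup.getFiles("2021/01/02");
    System.out.println("opens after two single fetches: " + lookup.openCount());

    lookup.getFilesBatch(List.of("2021/01/01", "2021/01/02"));
    System.out.println("opens after one batch fetch: " + lookup.openCount());
  }
}
```

Because nothing stays open between calls, a caller that drops the object without calling any cleanup method leaks nothing. The batch call is the only place where the open/close cost is shared.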


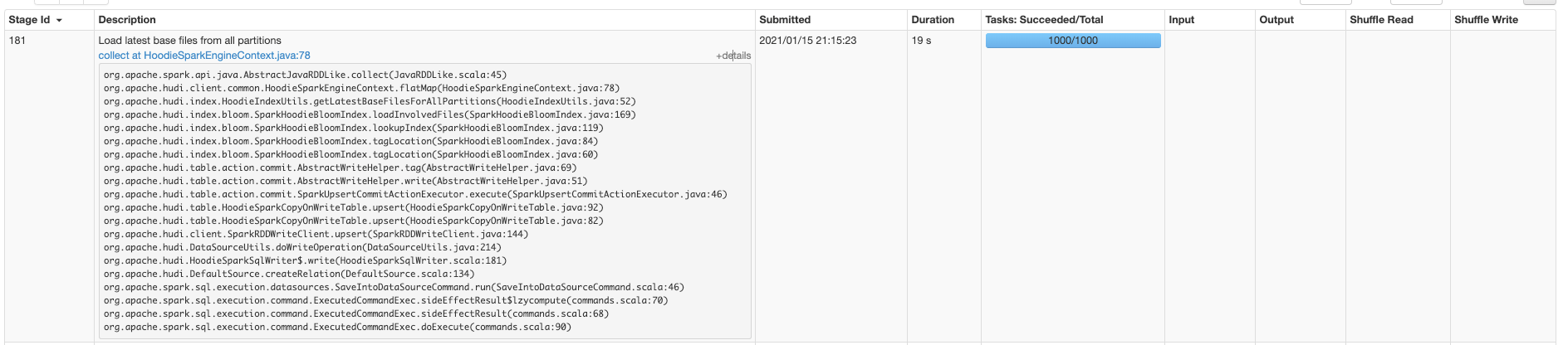

On a 250K file table, with 1000 partitions:

- Baseline single-threaded listing: 2 mins
- Parallelized listing from Spark (which is what most of Hudi already does): 18 seconds
- Metadata table is ~2x faster!! (timeline server=on)
- Metadata table is fast, even with parallel access from executors!! (timeline server=off)

cc @bvaradar @n3nash @umehrot2 @yanghua @leesf @nsivabalan @bhasudha @prashantwason @satishkotha

awesome, thanks for your effort!

ccd1183 to 8708799

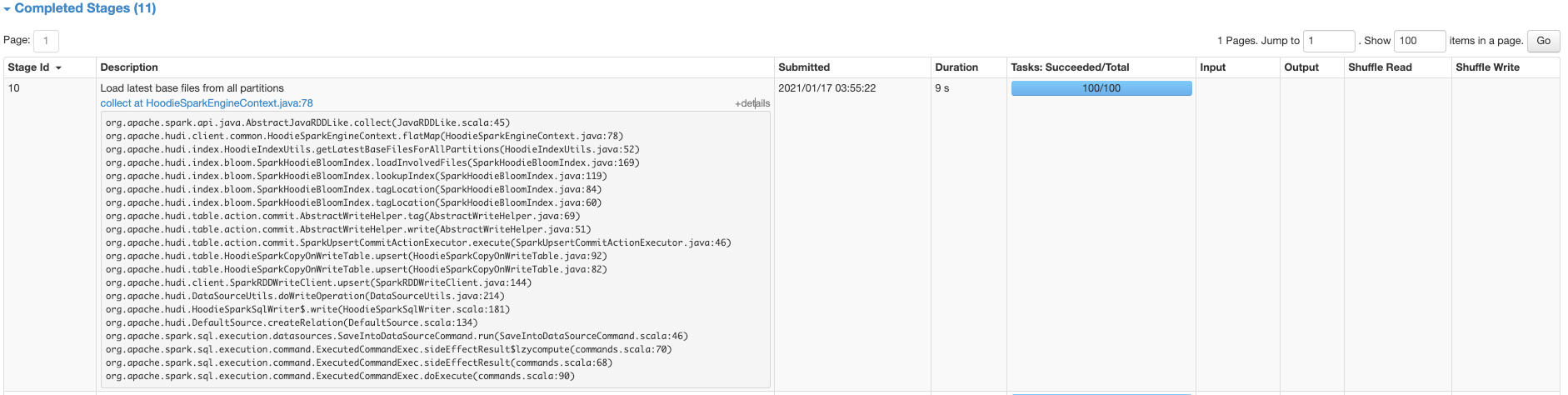

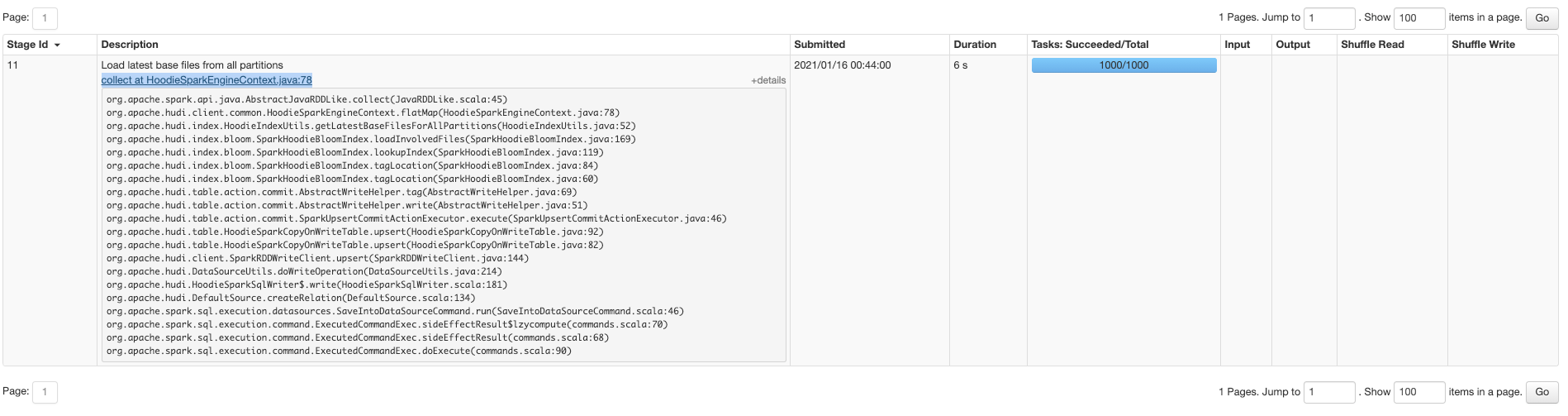

Folks, I have tested this again with 250K files. The Spark datasource does not yield as much gain; that needs to be investigated separately. But I resolved all the exceptions, leaks and degradations that we saw in the last week of testing.

I also ran 20+ rounds of upserts back to back. It seems stable with metadata turned on.

cc @prashantwason @umehrot2 this is an important piece of code

0900ab0 to 05e8910

@prashantwason @umehrot2 If you could review quickly, that would be great. Otherwise, if CI passes, I'll land this and cut the RC branch.

@nsivabalan Can we kick off some tests on this PR branch?

The failing tests succeed 20 times for me, running locally back-to-back.

- Removed special casing of assumeDatePartitioning inside FSUtils#getAllPartitionPaths()
- Consequently, IOException is never thrown and many files had to be adjusted

- Added config for controlling reuse of connections
- Added config for turning off fallback to listing, so we can see tests fail
- Changed all ipf listing code to cache/amortize the open/close for better performance
- Timelineserver also reuses connections, for better performance
- Without timelineserver, when metadata table is opened from executors, reuse is not allowed
- HoodieMetadataConfig passed into HoodieTableMetadata#create as argument.
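The two switches in this change log can be pictured roughly as follows. This is a sketch under stated assumptions, not Hudi's implementation: `MetadataConfigSketch`, `Config`, `Lister`, `mayReuse` and `listFiles` are all hypothetical names, and the real config keys are not shown in this thread. The rule that reuse is disallowed on executors is simplified to a single boolean argument.

```java
import java.io.IOException;
import java.util.List;

/** Hypothetical sketch; the names below are illustrative, not Hudi's configs or classes. */
final class MetadataConfigSketch {

  /** A source of file listings for one partition. */
  interface Lister {
    List<String> list(String partition) throws IOException;
  }

  /** Two illustrative switches mirroring the change log entries. */
  static final class Config {
    final boolean reuseEnabled;
    final boolean fallbackToListing;

    Config(boolean reuseEnabled, boolean fallbackToListing) {
      this.reuseEnabled = reuseEnabled;
      this.fallbackToListing = fallbackToListing;
    }
  }

  /** Reuse is honored only when configured and when not opened from an executor. */
  static boolean mayReuse(Config config, boolean openedFromExecutor) {
    return config.reuseEnabled && !openedFromExecutor;
  }

  /**
   * Lists files from the metadata table. On failure, either falls back to a
   * direct file system listing or rethrows, depending on the config.
   */
  static List<String> listFiles(Config config, Lister metadataTable, Lister fileSystem,
                                String partition) throws IOException {
    try {
      return metadataTable.list(partition);
    } catch (IOException e) {
      if (!config.fallbackToListing) {
        throw e; // surface the failure, e.g. so a test fails instead of silently passing
      }
      return fileSystem.list(partition);
    }
  }

  public static void main(String[] args) {
    Lister broken = partition -> {
      throw new IOException("metadata table unavailable");
    };
    Lister fs = partition -> List.of(partition + "/file1.parquet");

    try {
      System.out.println(listFiles(new Config(true, true), broken, fs, "2021/01/01"));
      listFiles(new Config(true, false), broken, fs, "2021/01/01");
    } catch (IOException e) {
      System.out.println("surfaced: " + e.getMessage());
    }
  }
}
```

With fallback enabled, a broken metadata table is masked by the direct listing. With it disabled, the same failure propagates, which is what makes the switch useful in tests.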

922a6be to 7486e2d

7486e2d to 12550c6

https://travis-ci.com/github/apache/hudi/jobs/473273386 finally passed.

…pache#2441)

Addresses leaks, perf degradation observed during testing. These were regressions from the original rfc-15 PoC implementation.

* Pass a single instance of HoodieTableMetadata everywhere
* Fix tests and add config for enabling metrics
- Removed special casing of assumeDatePartitioning inside FSUtils#getAllPartitionPaths()
- Consequently, IOException is never thrown and many files had to be adjusted
- More diligent handling of open file handles in metadata table
- Added config for controlling reuse of connections
- Added config for turning off fallback to listing, so we can see tests fail
- Changed all ipf listing code to cache/amortize the open/close for better performance
- Timelineserver also reuses connections, for better performance
- Without timelineserver, when metadata table is opened from executors, reuse is not allowed
- HoodieMetadataConfig passed into HoodieTableMetadata#create as argument.
- Fix TestHoodieBackedTableMetadata#testSync

…OSS master Summary: [HUDI-1509]: Reverting LinkedHashSet changes to combine fields from oldSchema and newSchema in favor of using only new schema for record rewriting (apache#2424) [MINOR] Bumping snapshot version to 0.7.0 (apache#2435) [HUDI-1533] Make SerializableSchema work for large schemas and add ability to sortBy numeric values (apache#2453) [HUDI-1529] Add block size to the FileStatus objects returned from metadata table to avoid too many file splits (apache#2451) [HUDI-1532] Fixed suboptimal implementation of a magic sequence search (apache#2440) [HUDI-1535] Fix 0.7.0 snapshot (apache#2456) [MINOR] Fixing setting defaults for index config (apache#2457) [HUDI-1540] Fixing commons codec shading in spark bundle (apache#2460) [HUDI 1308] Harden RFC-15 Implementation based on production testing (apache#2441) [MINOR] Remove redundant judgments (apache#2466) [MINOR] Fix dataSource cannot use hoodie.datasource.hive_sync.auto_create_database (apache#2444) [MINOR] Disabling problematic tests temporarily to stabilize CI (apache#2468) [MINOR] Make a separate travis CI job for hudi-utilities (apache#2469) [HUDI-1512] Fix spark 2 unit tests failure with Spark 3 (apache#2412) [HUDI-1511] InstantGenerateOperator support multiple parallelism (apache#2434) [HUDI-1332] Introduce FlinkHoodieBloomIndex to hudi-flink-client (apache#2375) [HUDI] Add bloom index for hudi-flink-client [MINOR] Remove InstantGeneratorOperator parallelism limit in HoodieFlinkStreamer and update docs (apache#2471) [MINOR] Improve code readability,remove the continue keyword (apache#2459) [HOTFIX] Revert upgrade flink verison to 1.12.0 (apache#2473) [HUDI-1453] Fix NPE using HoodieFlinkStreamer to etl data from kafka to hudi (apache#2474) [MINOR] Use skipTests flag for skip.hudi-spark2.unit.tests property (apache#2477) [HUDI-1476] Introduce unit test infra for java client (apache#2478) [MINOR] Update doap with 0.7.0 release (apache#2491) [MINOR]Fix NPE when using HoodieFlinkStreamer with multi 
parallelism (apache#2492) [HUDI-1234] Insert new records to data files without merging for "Insert" operation. (apache#2111) [MINOR] Add Jira URL and Mailing List (apache#2404) [HUDI-1522] Add a new pipeline for Flink writer (apache#2430) [HUDI-1522] Add a new pipeline for Flink writer [HUDI-623] Remove UpgradePayloadFromUberToApache (apache#2455) [HUDI-1555] Remove isEmpty to improve clustering execution performance (apache#2502) [HUDI-1266] Add unit test for validating replacecommit rollback (apache#2418) [MINOR] Quickstart.generateUpdates method add check (apache#2505) [HUDI-1519] Improve minKey/maxKey computation in HoodieHFileWriter (apache#2427) [HUDI-1550] Honor ordering field for MOR Spark datasource reader (apache#2497) [MINOR] Fix method comment typo (apache#2518) [MINOR] Rename FileSystemViewHandler to RequestHandler and corrected the class comment (apache#2458) [HUDI-1335] Introduce FlinkHoodieSimpleIndex to hudi-flink-client (apache#2271) [HUDI-1523] Call mkdir(partition) only if not exists (apache#2501) [HUDI-1538] Try to init class trying different signatures instead of checking its name (apache#2476) [HUDI-1538] Try to init class trying different signatures instead of checking its name. 
[HUDI-1547] CI intermittent failure: TestJsonStringToHoodieRecordMapF… (apache#2521) [MINOR] Fixing the default value for source ordering field for payload config (apache#2516) [HUDI-1420] HoodieTableMetaClient.getMarkerFolderPath works incorrectly on windows client with hdfs server for wrong file seperator (apache#2526) [HUDI-1571] Adding commit_show_records_info to display record sizes for commit (apache#2514) [HUDI-1589] Fix Rollback Metadata AVRO backwards incompatiblity (apache#2543) [MINOR] Fix wrong logic for checking state condition (apache#2524) [HUDI-1557] Make Flink write pipeline write task scalable (apache#2506) [HUDI-1545] Add test cases for INSERT_OVERWRITE Operation (apache#2483) [HUDI-1603] fix DefaultHoodieRecordPayload serialization failure (apache#2556) [MINOR] Fix the wrong comment for HoodieJavaWriteClientExample (apache#2559) [HUDI-1526] Translate the api partitionBy in spark datasource to hoodie.datasource.write.partitionpath.field (apache#2431) [HUDI-1612] Fix write test flakiness in StreamWriteITCase (apache#2567) [HUDI-1612] Fix write test flakiness in StreamWriteITCase [MINOR] Default to empty list for unset datadog tags property (apache#2574) [MINOR] Add clustering to feature list (apache#2568) [HUDI-1598] Write as minor batches during one checkpoint interval for the new writer (apache#2553) [HUDI-1109] Support Spark Structured Streaming read from Hudi table (apache#2485) [HUDI-1621] Gets the parallelism from context when init StreamWriteOperatorCoordinator (apache#2579) [HUDI-1381] Schedule compaction based on time elapsed (apache#2260) [HUDI-1582] Throw an exception when syncHoodieTable() fails, with RuntimeException (apache#2536) [HUDI-1539] Fix bug in HoodieCombineRealtimeRecordReader with reading empty iterators (apache#2583) [HUDI-1315] Adding builder for HoodieTableMetaClient initialization (apache#2534) [HUDI-1486] Remove inline inflight rollback in hoodie writer (apache#2359) [HUDI-1586] [Common Core] [Flink Integration] Reduce 
the coupling of hadoop. (apache#2540) [HUDI-1624] The state based index should bootstrap from existing base files (apache#2581) [HUDI-1477] Support copyOnWriteTable in java client (apache#2382) [MINOR] Ensure directory exists before listing all marker files. (apache#2594) [MINOR] hive sync checks for table after creating db if auto create is true (apache#2591) [HUDI-1620] Add azure pipelines configs (apache#2582) [HUDI-1347] Fix Hbase index to make rollback synchronous (via config) (apache#2188) [HUDI-1637] Avoid to rename for bucket update when there is only one flush action during a checkpoint (apache#2599) [HUDI-1638] Some improvements to BucketAssignFunction (apache#2600) [HUDI-1367] Make deltaStreamer transition from dfsSouce to kafkasouce (apache#2227) [HUDI-1269] Make whether the failure of connect hive affects hudi ingest process configurable (apache#2443) [HUDI-1611] Added a configuration to allow specific directories to be filtered out during Metadata Table bootstrap. (apache#2565) [Hudi-1583]: Fix bug that Hudi will skip remaining log files if there is logFile with zero size in logFileList when merge on read. (apache#2584) [HUDI-1632] Supports merge on read write mode for Flink writer (apache#2593) [HUDI-1540] Fixing commons codec dependency in bundle jars (apache#2562) [HUDI-1644] Do not delete older rollback instants as part of rollback. Archival can take care of removing old instants cleanly (apache#2610) [HUDI-1634] Re-bootstrap metadata table when un-synced instants have been archived. (apache#2595) [HUDI-1584] Modify maker file path, which should start with the target base path. (apache#2539) [MINOR] Fix default value for hoodie.deltastreamer.source.kafka.auto.reset.offsets (apache#2617) [HUDI-1553] Configuration and metrics for the TimelineService. 
(apache#2495) [HUDI-1587] Add latency and freshness support (apache#2541) [HUDI-1647] Supports snapshot read for Flink (apache#2613) [HUDI-1646] Provide mechanism to read uncommitted data through InputFormat (apache#2611) [HUDI-1655] Support custom date format and fix unsupported exception in DatePartitionPathSelector (apache#2621) [HUDI-1636] Support Builder Pattern To Build Table Properties For HoodieTableConfig (apache#2596) [HUDI-1660] Excluding compaction and clustering instants from inflight rollback (apache#2631) [HUDI-1661] Exclude clustering commits from getExtraMetadataFromLatest API (apache#2632) [MINOR] Fix import in StreamerUtil.java (apache#2638) [HUDI-1618] Fixing NPE with Parquet src in multi table delta streamer (apache#2577) [HUDI-1662] Fix hive date type conversion for mor table (apache#2634) [HUDI-1673] Replace scala.Tule2 to Pair in FlinkHoodieBloomIndex (apache#2642) [MINOR] HoodieClientTestHarness close resources in AfterAll phase (apache#2646) [HUDI-1635] Improvements to Hudi Test Suite (apache#2628) [HUDI-1651] Fix archival of requested replacecommit (apache#2622) [HUDI-1663] Streaming read for Flink MOR table (apache#2640) [HUDI-1678] Row level delete for Flink sink (apache#2659) [HUDI-1664] Avro schema inference for Flink SQL table (apache#2658) [HUDI-1681] Support object storage for Flink writer (apache#2662) [HUDI-1685] keep updating current date for every batch (apache#2671) [HUDI-1496] Fixing input stream detection of GCS FileSystem (apache#2500) [HUDI-1684] Tweak hudi-flink-bundle module pom and reorganize the pacakges for hudi-flink module (apache#2669) [HUDI-1692] Bounded source for stream writer (apache#2674) [HUDI-1552] Improve performance of key lookups from base file in Metadata Table. (apache#2494) [HUDI-1552] Improve performance of key lookups from base file in Metadata Table. 
[HUDI-1695] Fixed the error messaging (apache#2679) [HUDI 1615] Fixing null schema in bulk_insert row writer path (apache#2653) [HUDI-845] Added locking capability to allow multiple writers (apache#2374) [HUDI-1701] Implement HoodieTableSource.explainSource for all kinds of pushing down (apache#2690) [HUDI-1704] Use PRIMARY KEY syntax to define record keys for Flink Hudi table (apache#2694) [HUDI-1688]hudi write should uncache rdd, when the write operation is finnished (apache#2673) [MINOR] Remove unused var in AbstractHoodieWriteClient (apache#2693) [HUDI-1653] Add support for composite keys in NonpartitionedKeyGenerator (apache#2627) [HUDI-1705] Flush as per data bucket for mini-batch write (apache#2695) [1568] Fixing spark3 bundles (apache#2625) [HUDI-1650] Custom avro kafka deserializer. (apache#2619) [HUDI-1667]: Fix a null value related bug for spark vectorized reader. (apache#2636) [HUDI-1709] Improving config names and adding hive metastore uri config (apache#2699) [MINOR][DOCUMENT] Update README doc for integ test (apache#2703) [HUDI-1710] Read optimized query type for Flink batch reader (apache#2702) [HUDI-1712] Rename & standardize config to match other configs (apache#2708) [hotfix] Log the error message for creating table source first (apache#2711) [HUDI-1495] Bump Flink version to 1.12.2 (apache#2718) [HUDI-1728] Fix MethodNotFound for HiveMetastore Locks (apache#2731) [HUDI-1478] Introduce HoodieBloomIndex to hudi-java-client (apache#2608) [HUDI-1729] Asynchronous Hive sync and commits cleaning for Flink writer (apache#2732) [HOTFIX] close spark session in functional test suite and disable spark3 test for spark2 (apache#2727) [HOTFIX] Disable ITs for Spark3 and scala2.12 (apache#2733) [HOTFIX] fix deploy staging jars script [MINOR] Add Missing Apache License to test files (apache#2736) [UBER] Fixed creation of HoodieMetadataClient which now uses a Builder pattern instead of a constructor. 
Reviewers: balajee, O955 Project Hoodie Project Reviewer: Add blocking reviewers!, PHID-PROJ-pxfpotkfgkanblb3detq! JIRA Issues: HUDI-593 Differential Revision: https://code.uberinternal.com/D5867129

Reviewers: balajee Reviewed By: balajee JIRA Issues: HUDI-593 Differential Revision: https://code.uberinternal.com/D5867129


What is the purpose of the pull request

HoodieTableMetadata initialization, and either held in the driver or serialized to executors. So none of the metadata table listing happens from executors, and not more than once during a commit.

HoodieTableMetadata object that is held in the driver, each call will open/close the file slice. Results are cached in-memory like before, further improving performance.

Brief change log

(for example:)

Verify this pull request

(Please pick either of the following options)

This pull request is a trivial rework / code cleanup without any test coverage.

(or)

This pull request is already covered by existing tests, such as (please describe tests).

(or)

This change added tests and can be verified as follows:

(example:)

Committer checklist

Has a corresponding JIRA in PR title & commit

Commit message is descriptive of the change

CI is green

Necessary doc changes done or have another open PR

For large changes, please consider breaking it into sub-tasks under an umbrella JIRA.