-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-39470][SQL] Support cast of ANSI intervals to decimals #36857

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

cc @srielau |

|

@cloud-fan @gengliangwang @HyukjinKwon @srielau Could you review this PR, please. |

| buildCast[Long](_, dt => changePrecision(dayTimeIntervalToDecimal(dt, x.endField), target)) | ||

| case x: YearMonthIntervalType => | ||

| buildCast[Int](_, ym => | ||

| changePrecision(Decimal(yearMonthIntervalToInt(ym, x.startField, x.endField)), target)) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

shall we consider ansi mode here? what if the decimal precision is too small, e.g. decimal(1, 0)?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

As we did before, we don't consider non-ANSI mode for ANSI intervals. So, we are in ANSI mode always for ANSI intervals.

what if the decimal precision is too small, e.g. decimal(1, 0)

I think changePrecision() should output an error. Let me double check this.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yea let's add some negative tests

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Added a test

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I will take confusion on what is scale and what is precision to my grave....

We can round/truncate, but not modulo/wrap.

So I see:

CAST(INTERVAL '1.234' SECOND AS DECIMAL(1,0)) as perfectly fine.

But:

CAST(INTERVAL('123.4' SECOND AS DECIMAL(4, 2)) is an overflow.

On trunc vs round the semantic is given in DECIMAL to DECIMAL:

select cast(1.5 AS DECIMAL(1, 0));

2

PS: I loathe that cannot-change-decimal-precision error. In most cases we should just call it an overflow.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

PS: I loathe that cannot-change-decimal-precision error. In most cases we should just call it an overflow.

I plan to do that.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Though, changing of scale/precision while keeping the same value is different thing from changing the value and get overflow.

In any case, I am not going to change decimal logic (errors) here in the PR.

| } | ||

|

|

||

| test("cast ANSI intervals to decimals") { | ||

| Seq( |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We need examples with rounding:

INTERVAL '12.123' SECOND AS DECIMAL(3, 1) => 12.1

INTERVAL '12.005' SECOND AS DECIMAL(4, 2) => 12.01

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No, we don't round and don't loose info. See the last check that I added:

select cast(interval '10.123' second as decimal(1, 0))

-- !query schema

struct<>

-- !query output

org.apache.spark.SparkArithmeticException

[CANNOT_CHANGE_DECIMAL_PRECISION] Decimal(compact, 10, 18, 6) cannot be represented as Decimal(1, 0). If necessary set "spark.sql.ansi.enabled" to "false" to bypass this error.

== SQL(line 1, position 8) ==

select cast(interval '10.123' second as decimal(1, 0))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

Both of your cases are the same, actually - total number of digits is greater than 3 or 4.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is different. My examples test sucess. Yours tests a failure. Obviously any number>= 10 and <100 cannot be even approximated in a single digit.

But any number between 0 and 9 can be approximated in a digit.

I’m curious why you see truncation rather than rounding given that decimal to decimal rounds.

| case _: NumberFormatException => null | ||

| } | ||

| case x: DayTimeIntervalType => | ||

| buildCast[Long](_, dt => changePrecision(dayTimeIntervalToDecimal(dt, x.endField), target)) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@MaxGekk as you said I think it makes sense to always go with ANSI behavior in new features. Does changePrecision take a bool flag like nullOnOverflow?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Does changePrecision take a bool flag like nullOnOverflow?

No, it checks the SQL config actually, passed to the Cast expression:

private[this] def changePrecision(value: Decimal, decimalType: DecimalType): Decimal = {

if (value.changePrecision(decimalType.precision, decimalType.scale)) {

value

} else {

if (!ansiEnabled) {

null

} else {

throw QueryExecutionErrors.cannotChangeDecimalPrecisionError(

value, decimalType.precision, decimalType.scale, queryContext)

}

}

}Probably, it makes sense to incapsulate the logic:

spark/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/Cast.scala

Lines 993 to 1000 in a4f96af

| case StringType if !ansiEnabled => | |

| buildCast[UTF8String](_, s => { | |

| val d = Decimal.fromString(s) | |

| if (d == null) null else changePrecision(d, target) | |

| }) | |

| case StringType if ansiEnabled => | |

| buildCast[UTF8String](_, | |

| s => changePrecision(Decimal.fromStringANSI(s, target, queryContext), target)) |

Also cc @gengliangwang

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah always go with ANSI behavior in new features. Either adding a new parameter on changePrecision or adding a new method are fine.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

let's add a new parameter then.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have added new parameter already.

| select cast(interval '08:11:10.001' hour to second as decimal(10, 4)); | ||

| select cast(interval '1 01:02:03.1' day to second as decimal(8, 1)); | ||

|

|

||

| select cast(interval '10.123' second as decimal(1, 0)); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

how about decimal(4, 2)? will we fail or truncate?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I added a check. The result is truncated. Please, take a look.

|

@cloud-fan @srielau I added more checks, please, take a look at this PR one more time. |

| -- !query schema | ||

| struct<CAST(INTERVAL '10.005' SECOND AS DECIMAL(4,2)):decimal(4,2)> | ||

| -- !query output | ||

| 10.01 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@srielau I think the rounding behavior is correct.

|

Merging to master. Thank you, @cloud-fan and @srielau for review. |

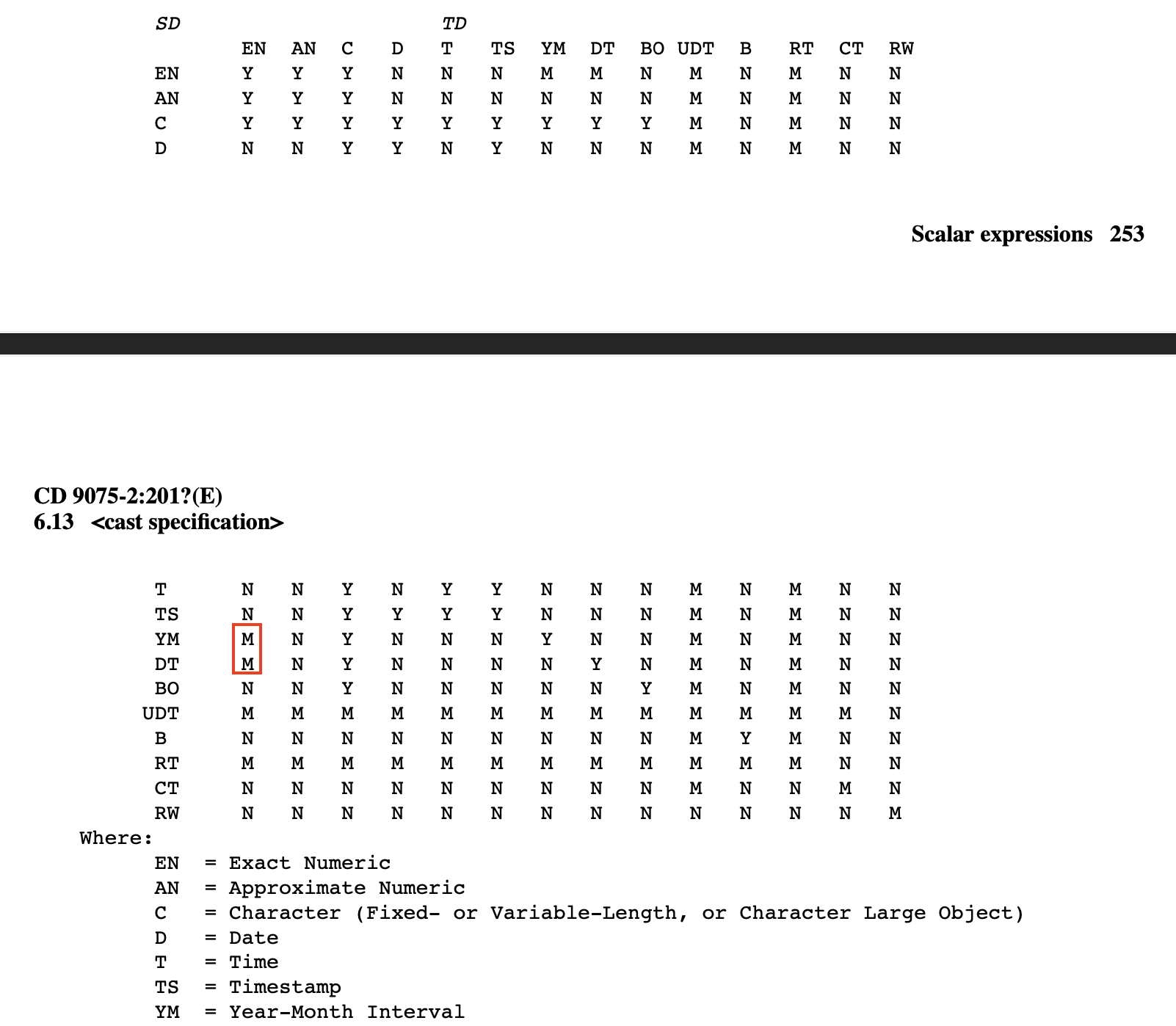

### What changes were proposed in this pull request? In the PR, I propose to update the doc page https://spark.apache.org/docs/latest/sql-ref-ansi-compliance.html#cast about new behaviour of CAST introduced by the following PRs: 1. [[SPARK-40008][SQL] Support casting of integrals to ANSI intervals](#37442) 2. [[SPARK-39451][SQL] Support casting intervals to integrals in ANSI mode](#36811) 3. [[SPARK-39470][SQL] Support cast of ANSI intervals to decimals](#36857) 4. [[SPARK-40014][SQL] Support cast of decimals to ANSI intervals](#37466) <img width="1004" alt="Screenshot 2022-08-12 at 15 00 10" src="https://user-images.githubusercontent.com/1580697/184349887-700c64f6-e9c8-40c4-85cc-a77072506cf5.png"> ### Why are the changes needed? To inform Spark SQL about new behaviour of `CAST`. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? By building Spark SQL docs and check them manually: ``` $ SKIP_SCALADOC=1 SKIP_PYTHONDOC=1 SKIP_RDOC=1 bundle exec jekyll build ``` Closes #37495 from MaxGekk/doc-interval-cast. Authored-by: Max Gekk <[email protected]> Signed-off-by: Max Gekk <[email protected]>

What changes were proposed in this pull request?

In the PR, I propose to support casts of ANSI intervals to decimals, and follow the SQL standard:

Why are the changes needed?

To improve user experience with Spark SQL, and to conform to the SQL standard.

Does this PR introduce any user-facing change?

No, it just extends existing behavior of casts.

Before:

After:

How was this patch tested?

By running new tests: