-

Notifications

You must be signed in to change notification settings - Fork 593

HDDS-5360. DN failed to process all delete block commands in one heartbeat interval #2420

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

jojochuang

left a comment

jojochuang

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

So basically make delete block operation asynchronous, moving it to another thread.

From a very quick look at the PR, looks reasonable to me.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this is hard to read. Can you move out this Callable?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure. I refactored the code.

|

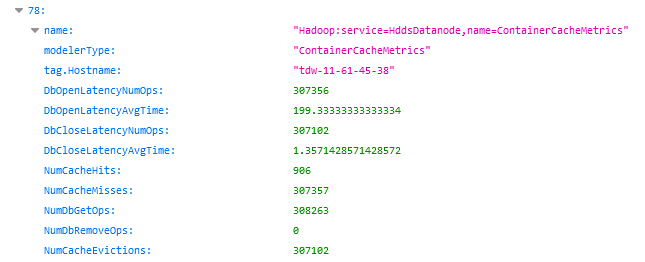

This is the rocksDB cache metrics data captured from one DN. It costs about 200ms for open a rocksdb to persist the block deletion trasaction command. Becased on current default configuration, one DN can handle at most 60s / 200ms = 300 rocksdb opens if cache missed in 60s which is SCM block deleting command generation interval. |

This is yet another reason why we should reconsider the 1-db-per-container design :) |

|

@jojochuang , do you mean 1-db-per-volume design? We think the same way. |

jojochuang

left a comment

jojochuang

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

just one comment. The rest looks good to me.

...he/hadoop/ozone/container/common/statemachine/commandhandler/DeleteBlocksCommandHandler.java

Outdated

Show resolved

Hide resolved

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Oh one more thing. We should do

Thread.currentThread().interrupt();

|

Hi @adoroszlai , do we need to do something to free disk space if "Warning: ForkStarter IOException: java.io.IOException: No space left on device" is found? |

jojochuang

left a comment

jojochuang

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

+1 from me. Waiting for Attila's review.

| while (!deleteCommandQueues.isEmpty()) { | ||

| DeleteCmdInfo cmd = deleteCommandQueues.poll(); | ||

| try { | ||

| processCmd(cmd.getCmd(), cmd.getContainer(), cmd.getContext(), |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

thanks for the work ! NIT, can we use DeleteCmdInfo as the only one parameter of processCmd

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure.

|

Thanks @jojochuang and @JacksonYao287 for the code review. Also thanks @adoroszlai for taking care of the "no space left" issue. |

|

|

||

| cluster.shutdownHddsDatanode(keyLocation.getPipeline().getFirstNode()); | ||

|

|

||

| waitForReplicaCount(containerID, 2, cluster); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why was this removed? Without this, the test may give false negative: replica count may be 3 both initially and after re-replication, and we need to distinguish between the two.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Because delete thread pool shutdown takes time, when it finishes, the replication manager already have the container replicated. So the wait for 2 replicas randomly fails because of there are already 3 replicas.

Sure. I will take a look of timeout issue. |

* master: HDDS-5358. Incorrect cache entry invalidation causes intermittent failure in testGetS3SecretAndRevokeS3Secret (apache#2518) HDDS-5608. Fix wrong command in ugrade doc (apache#2524) HDDS-5000. Run CI checks selectively (apache#2479) HDDS-4929. Select target datanodes and containers to move for Container Balancer (apache#2441) HDDS-5283. getStorageSize cast to int can cause issue (apache#2303) HDDS-5449 Recon namespace summary 'du' information should return replicated size of a key (apache#2489) HDDS-5558. vUnit invocation unit() may produce NPE (apache#2513) HDDS-5531. For Link Buckets avoid showing metadata. (apache#2502) HDDS-5549. Add 1.1 to supported versions in security policy (apache#2519) HDDS-5555. remove pipeline manager v1 code (apache#2511) HDDS-5546.OM Service ID change causes OM startup failure. (apache#2512) HDDS-5360. DN failed to process all delete block commands in one heartbeat interval (apache#2420) HDDS-5021. dev-support Dockerfile is badly outdated (apache#2480)

* master: HDDS-5358. Incorrect cache entry invalidation causes intermittent failure in testGetS3SecretAndRevokeS3Secret (apache#2518) HDDS-5608. Fix wrong command in ugrade doc (apache#2524) HDDS-5000. Run CI checks selectively (apache#2479) HDDS-4929. Select target datanodes and containers to move for Container Balancer (apache#2441) HDDS-5283. getStorageSize cast to int can cause issue (apache#2303) HDDS-5449 Recon namespace summary 'du' information should return replicated size of a key (apache#2489) HDDS-5558. vUnit invocation unit() may produce NPE (apache#2513) HDDS-5531. For Link Buckets avoid showing metadata. (apache#2502) HDDS-5549. Add 1.1 to supported versions in security policy (apache#2519) HDDS-5555. remove pipeline manager v1 code (apache#2511) HDDS-5546.OM Service ID change causes OM startup failure. (apache#2512) HDDS-5360. DN failed to process all delete block commands in one heartbeat interval (apache#2420) HDDS-5021. dev-support Dockerfile is badly outdated (apache#2480)

…one heartbeat interval (apache#2420)" This reverts commit f9ec7e9. Conflicts: hadoop-hdds/container-service/src/main/java/org/apache/hadoop/ozone/container/common/statemachine/DatanodeConfiguration.java hadoop-hdds/container-service/src/main/java/org/apache/hadoop/ozone/container/common/statemachine/commandhandler/DeleteBlocksCommandHandler.java

https://issues.apache.org/jira/browse/HDDS-5360