PyTorch Implementation of AnimeGANv2

Updates

-

2021-10-17Add weights for FacePortraitV2. -

2021-11-07Thanks to ak92501, a web demo is integrated to Huggingface Spaces with Gradio. -

2021-11-07Thanks to xhlulu, thetorch.hubmodel is now available. See Torch Hub Usage.

Inference

python test.py --input_dir [image_folder_path] --device [cpu/cuda]

You can load the model via torch.hub:

import torch

model = torch.hub.load("bryandlee/animegan2-pytorch", "generator").eval()

out = model(img_tensor) # BCHW tensorCurrently, the following pretrained shorthands are available:

model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="celeba_distill")

model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v1")

model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v2")

model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="paprika")You can also load the face2paint util function:

from PIL import Image

face2paint = torch.hub.load("bryandlee/animegan2-pytorch:main", "face2paint", size=512)

img = Image.open(...).convert("RGB")

out = face2paint(model, img)More details about torch.hub is in the torch docs

- Install the original repo's dependencies: python 3.6, tensorflow 1.15.0-gpu

- Install torch >= 1.7.1

- Clone the original repo & run

git clone https://github.com/TachibanaYoshino/AnimeGANv2

python convert_weights.py

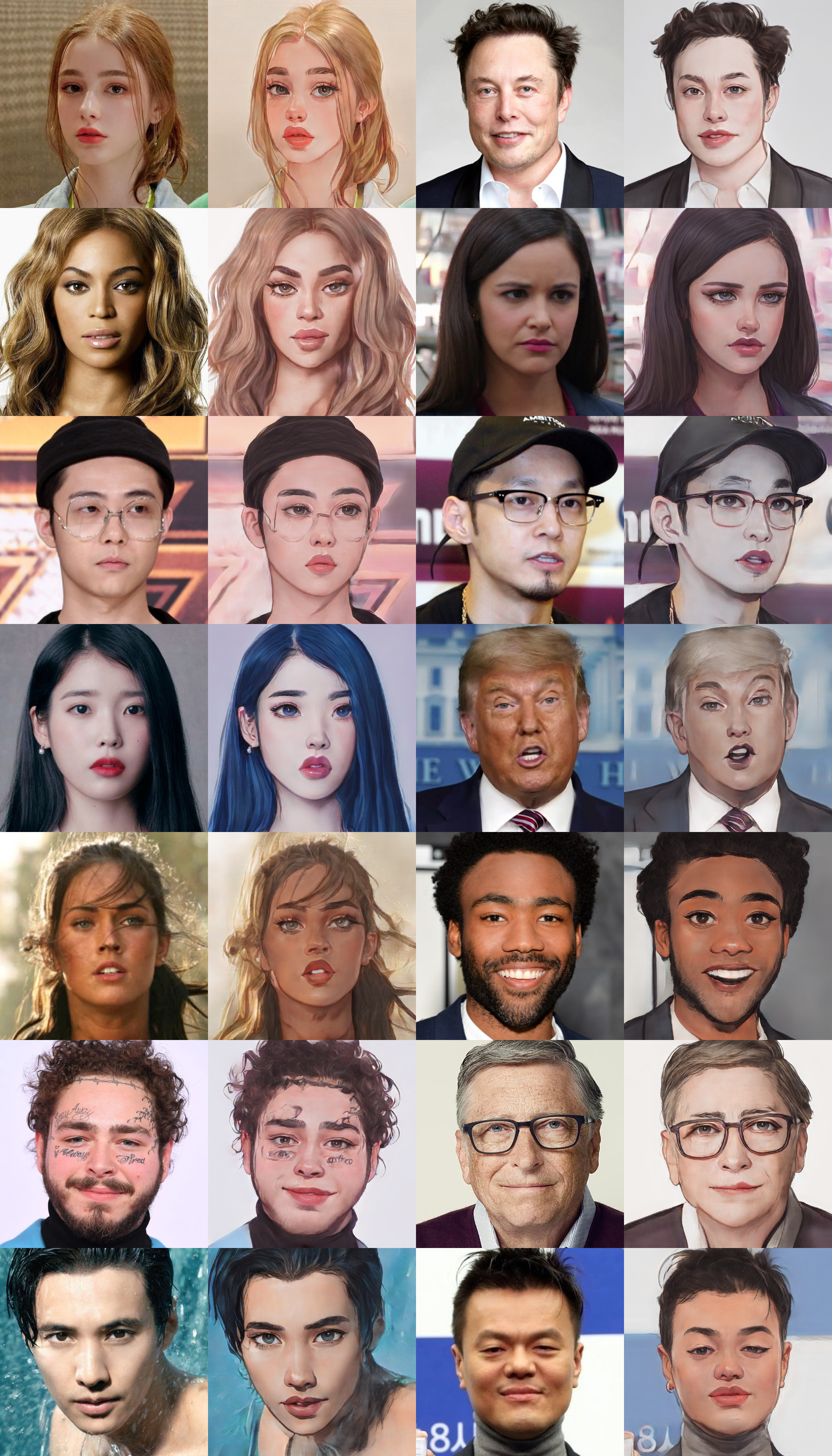

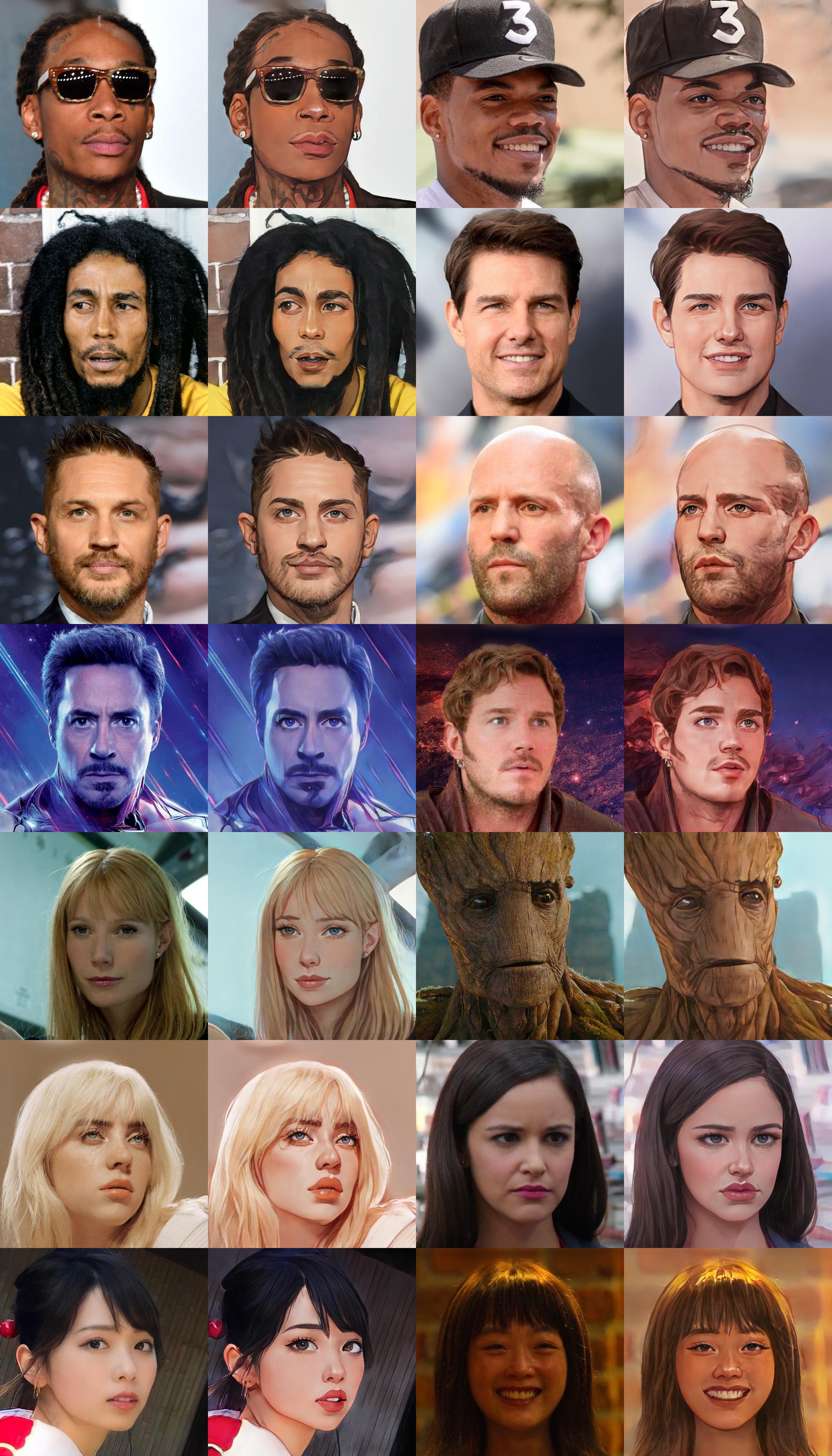

samples

Results from converted `Paprika` style model (input image, original tensorflow result, pytorch result from left to right)

Note: Results from converted weights slightly different due to the bilinear upsample issue

Webtoon Face [ckpt]

samples

Trained on 256x256 face images. Distilled from webtoon face model with L2 + VGG + GAN Loss and CelebA-HQ images.

Face Portrait v1 [ckpt]

Face Portrait v2 [ckpt]