-

Notifications

You must be signed in to change notification settings - Fork 5.5k

Support internal communication with thrift #13894

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

62b3ce0 to

ec44775

Compare

presto-main/src/main/java/com/facebook/presto/server/PrestoServer.java

Outdated

Show resolved

Hide resolved

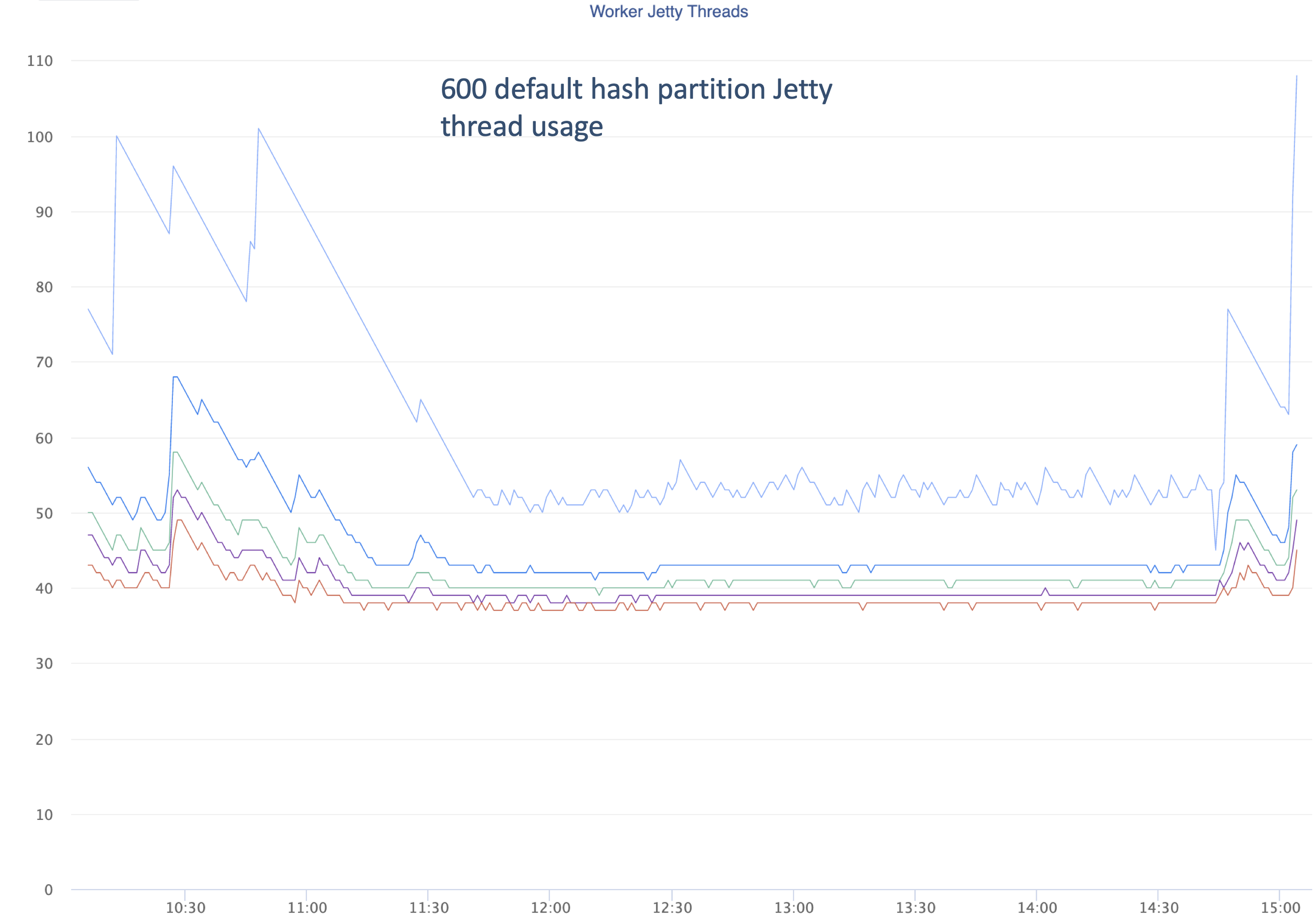

@highker Nice win for latency! -- So I assume this improve wall time for light queries with little data for exchange? Not sure how to interpret the first image though? Why still see "Worker Jetty Threads" even with Thrift PRC? |

Ya, I think so.

It just shows we used very few Jetty threads given Jetty thread pool is always full in prod. I didn't touch the task update/status part. So that tunnel still goes through HTTP. That should be easy to change as well on top of the framework. |

Yeah. Especially if we only want to migrate the RPC part (independent of migrating the encoding part, as there are recursive fields which Drift doesn't support well). Here is an POC of migrating |

|

Nice! One note: Drift uses native memory to buffer the entire request / response. This is needed to run blocking Thrift encoding / decoding. We should be tracking native memory utilization very closely when switching to Thrift. |

|

I didn't completely understand the graphs uploaded above. Can you add more context around those graphs? |

|

@mayankgarg1990, the figure is to show the cluster is running healthy and fast with 600 fanout. The cluster is not blocked by jetty threads anymore. @arhimondr, that is a very good point. We will monitor that for sure! |

3e59787 to

acb46e5

Compare

|

I will start to take a look at the PR. @tdcmeehan I am wondering if you are also interested in taking a look into this ? 😃 |

wenleix

left a comment

wenleix

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The first 3 commits LGTM. One question do we want to use "*" from artifacts id? Looks like we only use it when it's too cumbersome to list all artifacts. How many artifacts does netty have? :)

wenleix

left a comment

wenleix

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

"Initial support with Thrift RPC" LGTM. but definitely need someone else to review :P .

IIRC the announcer based broadcast mechanism works well for worker node, but somehow doesn't work for coordinator (thinking about "TableFinishOperator" which has COOORDINATOR_ONLY partitioning). Maybe want to add a comment in TestingPrestoServer.java when doing thrift server port announcement ? :)

presto-main/src/main/java/com/facebook/presto/server/testing/TestingPrestoServer.java

Outdated

Show resolved

Hide resolved

wenleix

left a comment

wenleix

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

"Add thrift support for exchange server". LGTM. minor comments.

presto-main/src/main/java/com/facebook/presto/server/ForAsyncTask.java

Outdated

Show resolved

Hide resolved

presto-main/src/main/java/com/facebook/presto/execution/buffer/SerializedPage.java

Outdated

Show resolved

Hide resolved

presto-main/src/main/java/com/facebook/presto/server/thrift/ThriftTaskClient.java

Outdated

Show resolved

Hide resolved

presto-main/src/main/java/com/facebook/presto/server/thrift/ThriftTaskService.java

Outdated

Show resolved

Hide resolved

presto-main/src/main/java/com/facebook/presto/metadata/ThriftRemoteNodeState.java

Outdated

Show resolved

Hide resolved

8a86533 to

bdaf87e

Compare

SyncMemoryBackend::handleCommand does not override parent method. Remove the implementation to avoid netty dependency.

Introduce RpcShuffleClient that allows using different RPC for shuffle. Refactor HttpPageBufferClient into PageBufferClient and HttpRpcShuffleClient that implements RpcShuffleClient. PageBufferClient now only handles page buffering and scheduling logic. The actual RPC detail is handled in HttpRpcShuffleClient

The return result of acknowledgeResults does not need handling. Use a future to make the call non-blocking instead of feeding it to a thread pool.

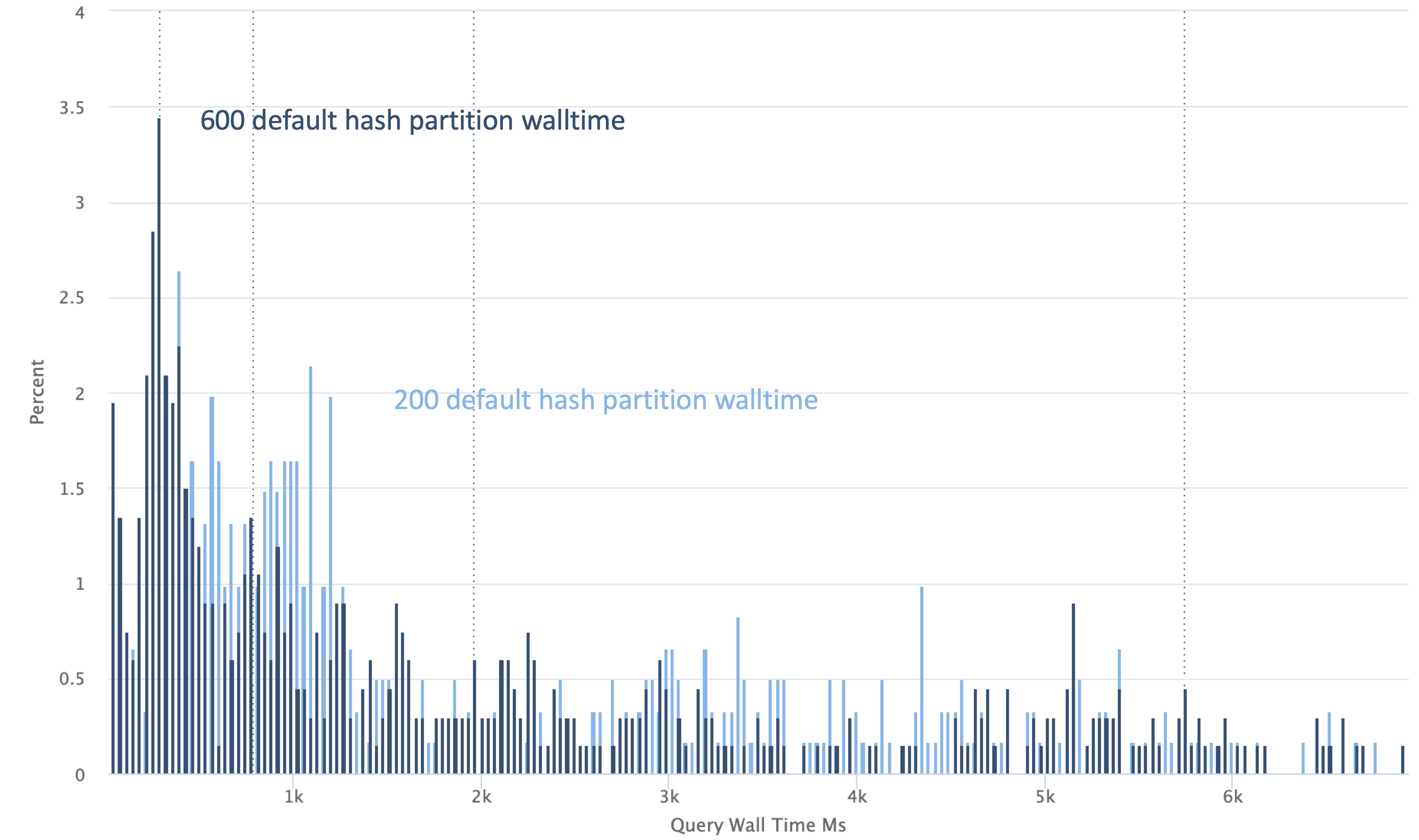

@highker Could you explain the second pic? |

|

@guhanjie my guess is the Y axis is percent of the workload, and we're seeing a subsection of the X axis which might explain why it doesn't sum to 100. FWIW, we later discovered that while this change did improve performance, it also increased native memory usage. This means clusters needed to be restarted sooner than previously. This is because the Thrift communication library under the hood was allocating more and ever increasing native memory to copy the shuffle output. This is because to copy the shuffle data over Thrift, we needed to copy the data one more time than before. After a certain point, we decided upon the following:

|

HTTP is too unreliable to use for internal communication. Add thrift support.