deploy etcd as static pod #148

|

Testing locally:

[core@adahiya-0-master-0 ~]$ cat /etc/os-release

NAME="Red Hat CoreOS"

VERSION="4.0"

ID="rhcos"

ID_LIKE="rhel fedora"

VERSION_ID="4.0"

PRETTY_NAME="Red Hat CoreOS 4.0"

ANSI_COLOR="0;31"

HOME_URL="https://www.redhat.com/"

BUG_REPORT_URL="https://bugzilla.redhat.com/"

REDHAT_BUGZILLA_PRODUCT="Red Hat 7"

REDHAT_BUGZILLA_PRODUCT_VERSION="4.0"

REDHAT_SUPPORT_PRODUCT="Red Hat"

REDHAT_SUPPORT_PRODUCT_VERSION="4.0"

OSTREE_VERSION=47.29

etcd is running as static pod: oc -n kube-system get pods etcd-member-adahiya-0-master-0

NAME                             READY   STATUS    RESTARTS   AGE
etcd-member-adahiya-0-master-0   1/1     Running   0          8m

apiVersion: v1

kind: Pod
metadata:
  annotations:
    kubernetes.io/config.hash: c26521395dbc1da7d5ef74aca0694d11
    kubernetes.io/config.mirror: c26521395dbc1da7d5ef74aca0694d11
    kubernetes.io/config.seen: 2018-10-29T23:56:49.011541332Z
    kubernetes.io/config.source: file
  creationTimestamp: 2018-10-29T23:59:58Z
  labels:
    k8s-app: etcd
  name: etcd-member-adahiya-0-master-0
  namespace: kube-system
  resourceVersion: "524"
  selfLink: /api/v1/namespaces/kube-system/pods/etcd-member-adahiya-0-master-0
  uid: bd5393bf-dbd6-11e8-8085-62c04d92e4a1
spec:
  containers:
  - command:
    - /bin/sh
    - -c
    - |
      #!/bin/sh
      set -euo pipefail
      source /run/etcd/environment
      /usr/local/bin/etcd \
        --discovery-srv tt.testing \
        --initial-advertise-peer-urls=https://${ETCD_IPV4_ADDRESS}:2380 \
        --cert-file=/etc/ssl/etcd/system:etcd-server:${ETCD_DNS_NAME}.crt \
        --key-file=/etc/ssl/etcd/system:etcd-server:${ETCD_DNS_NAME}.key \
        --trusted-ca-file=/etc/ssl/etcd/ca.crt \
        --client-cert-auth=true \
        --peer-cert-file=/etc/ssl/etcd/system:etcd-peer:${ETCD_DNS_NAME}.crt \
        --peer-key-file=/etc/ssl/etcd/system:etcd-peer:${ETCD_DNS_NAME}.key \
        --peer-trusted-ca-file=/etc/ssl/etcd/ca.crt \
        --peer-client-cert-auth=true \
        --advertise-client-urls=https://${ETCD_IPV4_ADDRESS}:2379 \
        --listen-client-urls=https://0.0.0.0:2379 \
        --listen-peer-urls=https://0.0.0.0:2380 \
    env:
    - name: ETCD_DATA_DIR
      value: /var/lib/etcd
    - name: ETCD_NAME
      valueFrom:
        fieldRef:
          apiVersion: v1
          fieldPath: metadata.name
    image: quay.io/coreos/etcd:v3.2.14
    imagePullPolicy: IfNotPresent
    name: etcd-member
    ports:
    - containerPort: 2380
      hostPort: 2380
      name: peer
      protocol: TCP
    - containerPort: 2379
      hostPort: 2379
      name: server
      protocol: TCP
    resources: {}
    securityContext: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /run/etcd/
      name: discovery
    - mountPath: /etc/ssl/etcd
      name: certs
    - mountPath: /var/lib/etcd
      name: data-dir
  dnsPolicy: ClusterFirst
  hostNetwork: true
  initContainers:
  - args:
    - --discovery-srv=tt.testing
    - --output-file=/run/etcd/environment
    - --v=4
    image: docker.io/abhinavdahiya/origin-setup-etcd-environment
    imagePullPolicy: Always
    name: discovery
    resources: {}
    securityContext: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /run/etcd/
      name: discovery
  - command:
    - /bin/sh
    - -c
    - |
      #!/bin/sh
      set -euo pipefail
      source /run/etcd/environment
      [ -e /etc/ssl/etcd/system:etcd-server:${ETCD_DNS_NAME}.crt -a \
        -e /etc/ssl/etcd/system:etcd-server:${ETCD_DNS_NAME}.key ] || \
        /usr/local/bin/kube-client-agent \
          request \
            --orgname=system:etcd-servers \
            --cacrt=/etc/ssl/etcd/root-ca.crt \
            --assetsdir=/etc/ssl/etcd \
            --address=https://adahiya-0-api.tt.testing:6443 \
            --dnsnames=localhost,etcd.kube-system.svc,etcd.kube-system.svc.cluster.local,${ETCD_DNS_NAME} \
            --commonname=system:etcd-server:${ETCD_DNS_NAME} \
            --ipaddrs=${ETCD_IPV4_ADDRESS},127.0.0.1 \

      [ -e /etc/ssl/etcd/system:etcd-peer:${ETCD_DNS_NAME}.crt -a \
        -e /etc/ssl/etcd/system:etcd-peer:${ETCD_DNS_NAME}.key ] || \
        /usr/local/bin/kube-client-agent \
          request \
            --orgname=system:etcd-peers \
            --cacrt=/etc/ssl/etcd/root-ca.crt \
            --assetsdir=/etc/ssl/etcd \
            --address=https://adahiya-0-api.tt.testing:6443 \
            --dnsnames=${ETCD_DNS_NAME},tt.testing \
            --commonname=system:etcd-peer:${ETCD_DNS_NAME} \
            --ipaddrs=${ETCD_IPV4_ADDRESS} \
    image: quay.io/coreos/kube-client-agent:678cc8e6841e2121ebfdb6e2db568fce290b67d6
    imagePullPolicy: IfNotPresent
    name: certs
    resources: {}
    securityContext: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /run/etcd/
      name: discovery
    - mountPath: /etc/ssl/etcd
      name: certs
  nodeName: adahiya-0-master-0
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    operator: Exists
  volumes:
  - emptyDir: {}
    name: discovery
  - hostPath:
      path: /etc/ssl/etcd
      type: ""
    name: certs
  - hostPath:
      path: /var/lib/etcd
      type: ""
    name: data-dir
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: 2018-10-29T23:57:17Z
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: 2018-10-29T23:57:26Z
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: null
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: 2018-10-29T23:56:49Z
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: cri-o://93397f616d48322fbd1130d0921543f1971fa88f42e1ec25f078cd9c78937deb
    image: quay.io/coreos/etcd:v3.2.14
    imageID: quay.io/coreos/etcd@sha256:688e6c102955fe927c34db97e6352d0e0962554735b2db5f2f66f3f94cfe8fd1
    lastState: {}
    name: etcd-member
    ready: true
    restartCount: 0
    state:
      running:
        startedAt: 2018-10-29T23:57:25Z
  hostIP: 192.168.126.11
  initContainerStatuses:
  - containerID: cri-o://d562d128a0c6f1f7933358d8cf8428f97497959aeffda587de3725e0bb0ef15e
    image: docker.io/abhinavdahiya/origin-setup-etcd-environment:latest
    imageID: docker.io/abhinavdahiya/origin-setup-etcd-environment@sha256:1535b9373527ded931114a497bcd61c3ebe5385e4eda05c3abcbc638366023cd
    lastState: {}
    name: discovery
    ready: true
    restartCount: 0
    state:
      terminated:
        containerID: cri-o://d562d128a0c6f1f7933358d8cf8428f97497959aeffda587de3725e0bb0ef15e
        exitCode: 0
        finishedAt: 2018-10-29T23:57:09Z
        reason: Completed
        startedAt: 2018-10-29T23:57:09Z
  - containerID: cri-o://c1788ae91c7ebc348e9fd3905091b2b853bfe8544efd5aee66c0bbd007ed9a34
    image: quay.io/coreos/kube-client-agent:678cc8e6841e2121ebfdb6e2db568fce290b67d6
    imageID: quay.io/coreos/kube-client-agent@sha256:8564ab65bcb1064006d2fc9c6e32a5ca3f4326cdd2da9a2efc4fb7cc0e0b6041
    lastState: {}
    name: certs
    ready: true
    restartCount: 0
    state:
      terminated:
        containerID: cri-o://c1788ae91c7ebc348e9fd3905091b2b853bfe8544efd5aee66c0bbd007ed9a34
        exitCode: 0
        finishedAt: 2018-10-29T23:57:17Z
        reason: Completed
        startedAt: 2018-10-29T23:57:16Z
  phase: Running
  podIP: 192.168.126.11
  qosClass: BestEffort
  startTime: 2018-10-29T23:56:49Z

|
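
The `certs` init container in the pod spec above only requests a certificate when the `.crt`/`.key` pair is not already on the host path, so a restarted pod reuses what is in `/etc/ssl/etcd`. A minimal sketch of that guard, pulled out into functions so it can be tried in isolation. The function names `have_cert_pair` and `ensure_cert` are hypothetical, and the sketch only prints the decision instead of calling `kube-client-agent`:

```shell
#!/bin/sh
set -eu

# have_cert_pair DIR NAME
# Succeeds only if both DIR/NAME.crt and DIR/NAME.key exist.
# (Hypothetical helper; the pod spec inlines this as `[ -e ... -a -e ... ]`.)
have_cert_pair() {
    [ -e "$1/$2.crt" ] && [ -e "$1/$2.key" ]
}

# ensure_cert DIR NAME
# Prints "skip NAME" when the pair is present, "request NAME" otherwise.
# In the real init container the "request" branch would run
# /usr/local/bin/kube-client-agent request ...; this sketch only reports.
ensure_cert() {
    if have_cert_pair "$1" "$2"; then
        echo "skip $2"
    else
        echo "request $2"
    fi
}
```

For example, `ensure_cert /etc/ssl/etcd "system:etcd-peer:${ETCD_DNS_NAME}"` would report `request ...` on a fresh master and `skip ...` once both files are present. A lone `.crt` without its `.key` still counts as missing, matching the `-a` test in the pod spec.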

830a1df to 66360f8

|

/hold cancel |

|

From one of the masters installed by CI. This does not have the updated kubelet from openshift/origin#21274. |

|

/test e2e-aws |

|

@abhinavdahiya I think this was brought up in chat, but RHCOS pipeline v1 is in a frozen state (though still producing images), while RHCOS pipeline v2 is picking up the latest kubelet changes a bit faster. |

|

Requires the installer to move to the new RHCOS pipeline :( openshift/installer#554 |

|

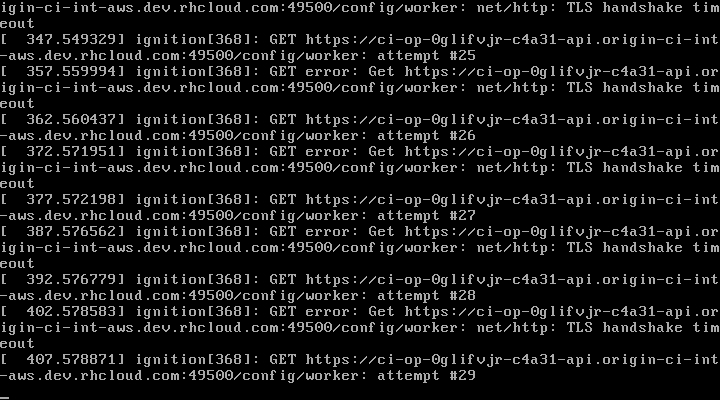

From the bootstrap node in CI: etcd running as static pods. |

|

Looks like the same issue we keep hitting with #126 |

|

Yeah, we're hitting the same thing here with e2e. Mine was able to get through after ~7 retries. |

|

/test e2e-aws |

aaronlevy left a comment

Couple questions and some minor nits about follow-up tasks.

nit: Is there a location this can be reviewed? Somewhat opaque here. Feel like this should be in final location / reviewed as well to land this. If we merge as-is please open issues tracking that we need to fix this.

It's in this repo: https://github.com/openshift/machine-config-operator/tree/master/cmd/setup-etcd-environment

openshift/release#2036 will allow us to use the official release image.

Sorry for the late nit, but can we change the name to something that indicates it’s scoped to either bootstrap or installer or machine? “installer-bootstrap-etcd” or “machine-master-etcd”? Would just prefer a bit of scoping

@smarterclayton I opened an issue to track the rename: #152

I will fix this 😇

nit: we can't release with this, so should be tracked as an open issue.

Does this need to be privileged?

Does this need to be privileged?

nit: open issue to fix this.

Do we ever want ETCD_DNS_NAME to differ from the node name? The node name already has to resolve to a routable address.

ETCD_DNS_NAME is the name in the SRV record that resolves to this machine's IP.

ack
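
To make the `ETCD_DNS_NAME` exchange above concrete: the value is picked by matching the SRV targets against the machine's own address, not by reading the node name. A minimal sketch of that matching step, assuming the SRV targets have already been resolved into `target ip` pairs on stdin. The function name `match_dns_name` and the `etcd-N.tt.testing` host names are hypothetical, and the actual logic in `setup-etcd-environment` is not shown in this thread:

```shell
#!/bin/sh
set -eu

# match_dns_name LOCAL_IP
# Reads "target ip" pairs on stdin (one per line) and prints the first
# target whose ip equals LOCAL_IP. Returns non-zero if nothing matches.
# (Hypothetical helper; assumes the SRV lookup and A-record resolution
# have already produced the pairs.)
match_dns_name() {
    local_ip=$1
    while read -r target ip; do
        if [ "$ip" = "$local_ip" ]; then
            # DNS tools usually print a trailing dot on FQDNs; strip it.
            echo "${target%.}"
            return 0
        fi
    done
    return 1
}
```

Fed the lines `etcd-0.tt.testing. 192.168.126.11` and `etcd-1.tt.testing. 192.168.126.12` with a local IP of `192.168.126.11`, it selects the first target. That name would then be written as `ETCD_DNS_NAME` to `/run/etcd/environment` for the later containers to source.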

|

I'll give my unofficial lgtm (not in OWNER). Would be nice if we could reduce need for privileged containers, but don't need to block on that. /lgtm |

|

/hold need to wait for approval from @crawford and/or @smarterclayton |

|

Was not aware that a rando (me) could lgtm and would merge without another approver... |

|

/retest |

66360f8 to 0d51b76

0d51b76 to 7a33492

7a33492 to ee546e9

|

/lgtm |

|

[APPROVALNOTIFIER] This PR is APPROVED. This pull-request has been approved by: aaronlevy, abhinavdahiya, crawford |

|

/refresh |

…anup Bug 1705753: some godoc changes on the config CRD type for better oc explain behavior

openshift/origin#21274 has merged; it allows the kubelet to run static pods even while the kubelet is bootstrapping its certificates with the apiserver.

/hold