IBM Public Cloud Support #202

/assign @derekwaynecarr

sudhaponnaganti left a comment

FYI @jupierce

> authors:
> - "@csrwng"
> reviewers:
> - "@derekwaynecarr"

expecting the owners of the control plane operators here, compare https://github.com/openshift/enhancements/pull/202/files#diff-8d49ecd990d312a72c0ffcdd1784ad05R92.

@sttts did you mean a different pr? the link above is for this one

No, meant this one. Just expected the owners of the mentioned operators to be informed about these plans by being reviewers of the enhancement.

Ack, will add more reviewers

> - kubernetes apiserver
> - kubernetes controller manager
> - kubernetes scheduler
> - openshift apiserver

has it been discussed what is needed to move that into the customer cluster?

It hasn't... When we first looked at this, however, we were in a catch-22 situation: in order to be able to schedule pods, we needed the OpenShift CRDs and controller to be functional.

> code should include config observers that assemble a new configuration for their
> respective control plane components. This will ensure that drift in future versions
> is kept under control and that a single code base is used to manage control plane
> configuration.

where is this beta control plane operator?

we're working on it, will be added to the current hypershift-toolkit repo. For the second phase we will create separate repos for each of the control plane controllers.

> Public Cloud team the necessary tools to generate manifests needed for a hosted
> control plane.
> - Ensure that this deployment model remains functional through regular e2e testing
>   on IBM Public Cloud.

"regular" means we get a normal CI job in the openshift org making sure our control plane code changes don't break it?

Will the CI job be blocking for OpenShift PRs? E.g. if the deployment topology changes and some apiserver suddenly does not serve certain APIs because they moved, their CI will break.

> "regular" means we get a normal CI job in the openshift org making sure our control plane code changes don't break it?

That is the plan. We currently have a periodic job that creates clusters based on the 4.3 branches. One will be added for the master/4.4 branch.

> Will the CI job be blocking for OpenShift PRs? E.g. if the deployment topology changes and some apiserver suddenly does not serve certain APIs because they moved, their CI will break.

That will be harder. We would require capacity on the IBM Cloud to run that many jobs. Not sure that is feasible right now. The periodic job should block a release, but not individual PRs.

We describe those apiserver changes (they are happening, now for oauth) in enhancements. The IBM team has to watch that repo to be informed.

@deads2k @mfojtik @ironcladlou @spadgett @abhinavdahiya @crawford @miabbott Please let me know if I should include other reviewers.

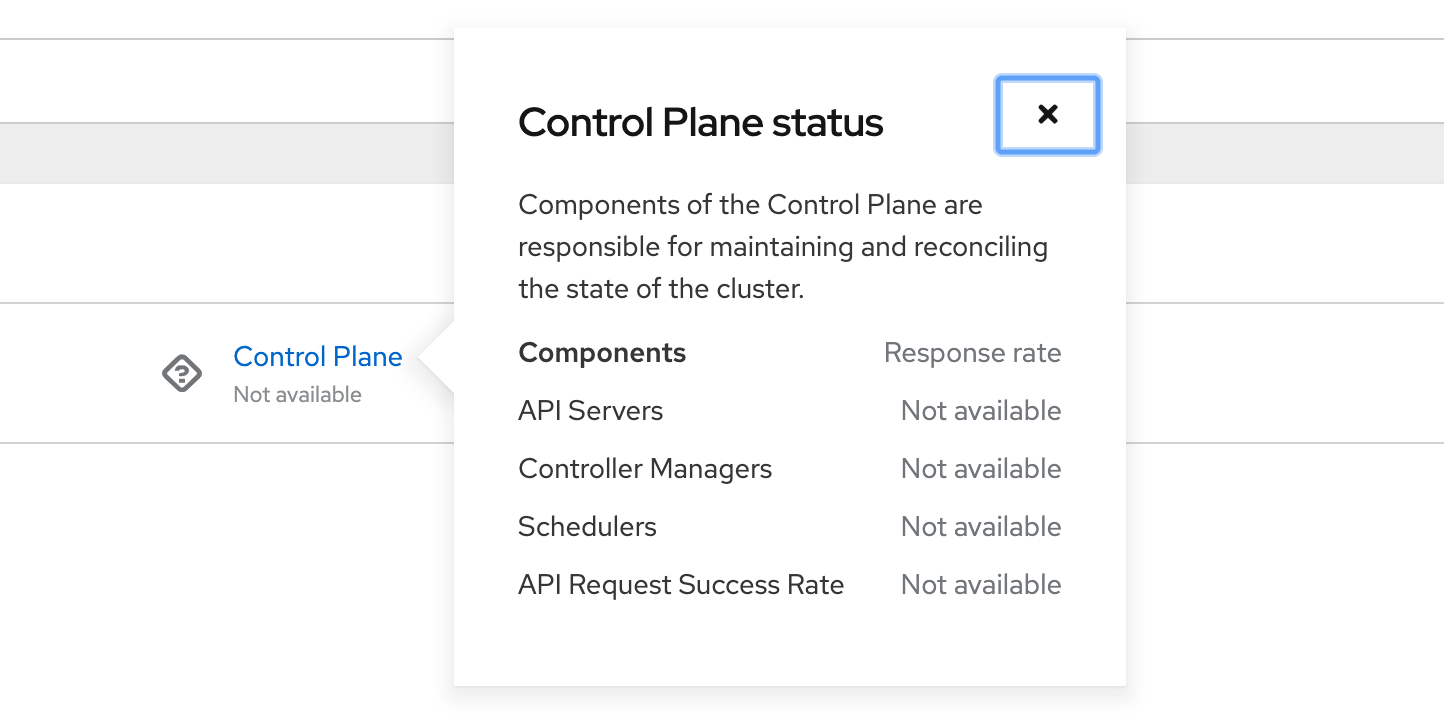

> #### Console Changes
> The console should not report the control plane as being down if no metrics
> datapoints exist for control plane components in this configuration.

Ack, this shouldn't be an issue.

@andybraren We probably need to remove that since we never expect to have control plane metrics. It's misleading to say not available.

Agreed, created an issue to track this for 4.5 Dashboards https://issues.redhat.com/browse/MGMT-438
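The console requirement in this thread can be read as a three-way status instead of a binary up/down. The following is only a minimal sketch under that reading: `control_plane_status`, the `Status` enum, and the convention that the input is a list of `up` samples are all hypothetical and are not taken from the console code base.

```python
from enum import Enum
from typing import Optional, Sequence


class Status(Enum):
    HEALTHY = "healthy"
    DOWN = "down"
    NOT_MONITORED = "not monitored"


def control_plane_status(up_samples: Optional[Sequence[float]]) -> Status:
    """Classify a control plane component from its `up` samples.

    Hypothetical helper: a sample of 1.0 means the target was reachable.
    A missing or empty series means the component is not scraped from this
    cluster (as with a hosted control plane), which is not the same as down.
    """
    if not up_samples:
        return Status.NOT_MONITORED
    if any(sample == 1.0 for sample in up_samples):
        return Status.HEALTHY
    return Status.DOWN
```

With this split, a dashboard could hide or relabel the control plane card for `NOT_MONITORED` rather than showing "not available", which is the concern raised above.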

> - openshift controller manager
> - cluster version operator
> - control plane operator(s)\*
> - oauth server\+

@csrwng Are there any lingering issues where the console backend rejects the OAuth server certificate in this deployment?

@spadgett no issue at the moment. Thx!

cc: @lucab

From an OS point of view, there are at least two things in this proposal that look uncomfortably hairy to me:

In short, the post-GA story is under-specified, so it's quite hard to judge it. The rest of the document seems to hint at a heavy UPI+RHEL environment, which offers a lot of escape hatches and is more likely to result in a "pet nodes" provisioning flow. If that's indeed the priority, then it would be better to descope RHCOS and leave it for "future exploration" (with the risk that it may be very hard or impossible to retrofit). If RHCOS workers are instead a requirement, then clarifying the points above may result in vastly different design and required work.

> ### Non-Goals
>
> - Make hosted control planes a supported deployment model outside of IBM Public Cloud.

I find this confusing...IBM Public Cloud means a lot of different things, and a sort of baseline obvious one is having OpenShift support the default "self driving" path in their existing IaaS. But I guess we're doing hosted control plane first?

Maybe the enhancement should be called: "IBM Public Cloud Hosted Control Plane" ?

And one thing I would say here is that we should think of this "fairly" - if some other IaaS showed up and was willing to commit significant resources to maintaining a similar thing... clearly vast amounts of the design would likely be shared. But that can come later.

Absolutely, I think the things we learn from this work are something we can likely reuse in other cases. And perhaps, at the proper time, we can make the pattern something standalone that's configured per provider. So definitely, this non-goal is a point-in-time statement.

> I find this confusing...IBM Public Cloud means a lot of different things, and a sort of baseline obvious one is having OpenShift support the default "self driving" path in their existing IaaS. But I guess we're doing hosted control plane first?

At least in the foreseeable future, supporting the self-hosted path is not a priority afaik, but @derekwaynecarr can likely provide more insight into that.

cgwalters left a comment

Thanks for writing this enhancement BTW!

I will say I have trouble keeping in my head the fundamental impacts this makes to the default OpenShift 4 "self-driving" ("non-hosted"? Need a term...) mode. Particularly given the other fundamental changes going on, like the etcd operator, that affect how we think of the control plane too.

Maybe we can use "hostedCP" as a shorthand term when discussing this? (HCP is obvious but three letter acronyms are too common etc.)

@lucab thank you for the feedback. Yes, this proposal is definitely under-specified when it comes to RHCOS. It's more a statement that we don't expect to continue supporting IBM cloud without RHCOS forever. We should have a separate enhancement proposal/design specifically for RHCOS, given that, as I understand it, the RHCOS team has already done some initial investigations around this.

> Enables an OpenShift cluster to be hosted on top of a Kubernetes/OpenShift cluster.
>
> Given a release image, a CLI tool generates manifests that instantiate the control plane

OS upgrades for the worker nodes are owned by the customer? Or does IBM provide tooling for that? Are they using openshift-ansible?

In the "Post-GA" world with RHCOS...do we foresee trying to enable the MCO to manage upgrades for the workers w/RHCOS?

Isn't this covered in the Managed Workers section below?

The second half is yes, thanks!

> #### Managed Workers
> RHCOS adds support for bootstrapping on IBM Public Cloud. The MCO is added to
> the components that get installed on the management cluster. This enables upgrading
> of RHCOS nodes using the same mechanisms as in self-hosted OpenShift.

So compute nodes still run machine-config daemons and cluster admins can write MachineConfig entries, create MachineSets, and all that good stuff? They just don't have any objects representing or control over the control-plane machines?

The service is now GA and running 4.4.11, so let's merge this and then make updates to explain usage of Cluster Profile(s).

/approve

[APPROVALNOTIFIER] This PR is APPROVED

This pull-request has been approved by: csrwng, derekwaynecarr, sudhaponnaganti

> minimum set of manifests to allow skipping the component should be annotated.
> However, in the case of the Machine API and Machine Configuration operators,
> the CRDs that represent machines, machinesets and autoscalers should also be
> skipped. Monitoring alerts for components that do not get installed in the user

This part won't be that easy unless we are provided with a list of them. Also, the alerts will not fire if there are no metrics for those components, so I don't think it's a problem.

This is what we addressed with openshift/cluster-monitoring-operator#705

No other alerts related to control plane components have surfaced.
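The annotation-based skipping described in the quoted proposal text can be pictured as a simple filter over release manifests. This is a rough sketch, not the cluster version operator's implementation: the annotation key `example.com/skip-on-hosted-control-plane` and the function names are made up for illustration, and manifests are assumed to be already parsed into dictionaries.

```python
from typing import Any, Dict, Iterable, List

# Hypothetical annotation key, used only for this illustration.
SKIP_ANNOTATION = "example.com/skip-on-hosted-control-plane"

Manifest = Dict[str, Any]


def should_apply(manifest: Manifest, hosted: bool) -> bool:
    """Return False for manifests marked as skippable on a hosted control plane."""
    if not hosted:
        # Self-hosted clusters apply every manifest in the payload.
        return True
    annotations = (manifest.get("metadata") or {}).get("annotations") or {}
    return annotations.get(SKIP_ANNOTATION) != "true"


def filter_manifests(manifests: Iterable[Manifest], hosted: bool) -> List[Manifest]:
    """Keep only the manifests that should be applied in this deployment mode."""
    return [m for m in manifests if should_apply(m, hosted)]
```

Under this scheme a CRD for machines or machinesets would carry the same annotation as its operator's deployment, so both are dropped together in the hosted case.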

> Changes are required in different areas of the product in order to make clusters deployed
> using this method viable. These include changes to the cluster version operator (CVO), web
> console, second level operators (SLOs) deployed by the CVO, and RHCOS.

please don't use SLO as an acronym here. It is very commonly used for "Service Level Objectives".

ack, will do a follow-up to remove.

> the new cluster. Minting of kubelet certificates for these worker nodes is handled
> by IBM automation.
>
> Components that run on the management cluster include:

for cluster-monitoring: as apiserver monitoring is effectively being disabled when running in "ROKS mode" what is monitoring control plane components on the management cluster?

IBM is running their own monitoring solution on their management/tugboat clusters.

> cluster should also be skipped where possible.
>
> #### Console Changes
> The console should not report the control plane as being down if no metrics

Are all edge cases for disabling monitoring of control plane components on the worker clusters covered in openshift/cluster-monitoring-operator#705 ?

So far yes
