
Enable GL backend via Surfman #3375


kvark left a comment:

Thank you for trying these ideas out!

Fortunately, I found a few places that would explain why it doesn't work yet :)

zicklag left a comment:

Sweet. I'll come back and fix those probably later tonight. Thanks!

Branch updated from 02e90d1 to 6f77195.

Review thread on `src/backend/gl/src/queue.rs` (outdated):

```rust
// Wait for rendering to finish
unsafe {
    let wait_result = gl.client_wait_sync(
```


This actually needs to be `wait_sync`, not `client_wait_sync`.
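For context on this suggestion: `glClientWaitSync` blocks the calling CPU thread until a fence signals, while `glWaitSync` is a server-side wait that returns immediately and only orders subsequent GL commands after the fence. The sketch below assumes a hypothetical `SyncOps` trait standing in for glow's `HasContext` (whose real signatures may differ) and shows the intended split: the rendering context creates and flushes a fence, and the presenting context waits on it with `glWaitSync`, which the GL specification requires to be called with `flags = 0` and `GL_TIMEOUT_IGNORED`. It also assumes the two contexts are in the same share group, since that is what makes a sync object visible to both. `Recorder` is a test double that only logs calls, so the sequence can be checked without a driver.

```rust
use std::cell::RefCell;

// Enum values from the OpenGL specification.
const SYNC_GPU_COMMANDS_COMPLETE: u32 = 0x9117;
const TIMEOUT_IGNORED: u64 = 0xFFFF_FFFF_FFFF_FFFF;

/// Hypothetical stand-in for the GL sync entry points (not glow's real trait).
pub trait SyncOps {
    type Fence: Copy;
    fn fence_sync(&self, condition: u32, flags: u32) -> Self::Fence;
    fn flush(&self);
    fn wait_sync(&self, fence: Self::Fence, flags: u32, timeout: u64);
}

/// Rendering (producer) context: fence the submitted work and flush, so the
/// fence command is submitted before another context waits on it.
pub fn signal_render_done<G: SyncOps>(render_ctx: &G) -> G::Fence {
    let fence = render_ctx.fence_sync(SYNC_GPU_COMMANDS_COMPLETE, 0);
    render_ctx.flush();
    fence
}

/// Presenting (consumer) context: a server-side wait. The CPU call returns
/// immediately; GL commands issued after it are ordered after the fence.
pub fn gpu_wait_before_present<G: SyncOps>(present_ctx: &G, fence: G::Fence) {
    // glWaitSync requires flags == 0 and timeout == GL_TIMEOUT_IGNORED.
    present_ctx.wait_sync(fence, 0, TIMEOUT_IGNORED);
}

/// Test double that records calls instead of talking to a driver.
#[derive(Default)]
pub struct Recorder {
    pub calls: RefCell<Vec<String>>,
}

impl SyncOps for Recorder {
    type Fence = u32;
    fn fence_sync(&self, condition: u32, flags: u32) -> u32 {
        self.calls
            .borrow_mut()
            .push(format!("fence_sync({:#x}, {})", condition, flags));
        1 // fake fence handle
    }
    fn flush(&self) {
        self.calls.borrow_mut().push("flush".to_string());
    }
    fn wait_sync(&self, fence: u32, flags: u32, timeout: u64) {
        self.calls
            .borrow_mut()
            .push(format!("wait_sync({}, {}, {:#x})", fence, flags, timeout));
    }
}
```

With `client_wait_sync` in the same place, the CPU thread would stall until the GPU finished; `wait_sync` keeps that dependency on the GPU timeline instead.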


Interesting, glow doesn't actually have a `wait_sync`. 😲

https://docs.rs/glow/0.6.0/glow/?search=wait_sync

Should I open an issue for glow on that? Maybe that is just a simple PR to glow I could make?


Yes, I recommend implementing it quickly in a fork, making a PR (it's a non-breaking change), and using your fork for the dependency here in the meantime.


OK, I added the extra binding to glow, submitted a PR, and updated this branch to use it, but there's still no change in rendering.


Looks like this still needs to be done?

127: Add glWaitSync Binding r=grovesNL a=zicklag Needed this for `gfx-backend-gl`: gfx-rs/gfx#3375 (comment). Co-authored-by: Zicklag <[email protected]>

What if, after we render to the renderbuffer on the main context (in `submit`), we bind it for reading to a framebuffer and actually issue a blit to some other FBO? That would replicate the scenario we have on the surface context (in `present`) and ensure that the image goes through the necessary memory barriers and layout changes under the hood. Of course we don't want to ship that, but it would at least confirm the theory that a workaround exists.

Branch updated from 1ebbe01 to c077a2f.

I'll try that next, but I just found something interesting: I made a new commit that gets rid of context sharing completely, and I'm still getting the same rendering artifacts. Also, I'm trying to figure out why we need separate contexts for the surface and the root context. Is that because you can't use the same context across different threads? Being that we can't share anything across extra threads right now anyway because of Not that it is helping in this case, it seems. Edit: I'm not actually sure how to try what you suggested in

That's an interesting experiment! We should double-check if the sharing is actually requested at the low level (by debugging through context creation).

Let's do it in `present()` then. To clarify: this operation is purely artificial and makes no practical sense; it's just to convince the driver to transition the renderbuffer into a "readable" state. So, in `present()`, before switching the current context, you could create an FBO, attach the renderbuffer to it, and bind the FBO for reading. Then maybe create another temporary RBO and another temporary FBO, attach the RBO to that FBO, and bind it for drawing. Then do a blit. After that, we can proceed as usual by switching the contexts and doing the real blit.
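A rough sketch of the throwaway blit described in this comment, assuming it runs on the rendering context inside `present()` before the context switch, and that the source renderbuffer is RGBA8 with known `width` and `height`. `FboOps`, `dummy_blit`, and `Log` are hypothetical names: the trait methods loosely follow glow's naming but with simplified signatures, and only the enum values are the standard GL ones. `Log` records call names so the order of operations can be checked without a GL context.

```rust
use std::cell::{Cell, RefCell};

// Standard GL enum values.
const READ_FRAMEBUFFER: u32 = 0x8CA8;
const DRAW_FRAMEBUFFER: u32 = 0x8CA9;
const RENDERBUFFER: u32 = 0x8D41;
const COLOR_ATTACHMENT0: u32 = 0x8CE0;
const RGBA8: u32 = 0x8058;
const COLOR_BUFFER_BIT: u32 = 0x0000_4000;
const NEAREST: u32 = 0x2600;

/// Hypothetical subset of GL calls (names loosely follow glow, signatures simplified).
pub trait FboOps {
    fn create_framebuffer(&self) -> u32;
    fn create_renderbuffer(&self) -> u32;
    fn bind_framebuffer(&self, target: u32, fbo: Option<u32>);
    fn bind_renderbuffer(&self, target: u32, rbo: Option<u32>);
    fn renderbuffer_storage(&self, target: u32, format: u32, width: i32, height: i32);
    fn framebuffer_renderbuffer(&self, target: u32, attachment: u32, rb_target: u32, rbo: Option<u32>);
    fn blit_framebuffer(&self, src: [i32; 4], dst: [i32; 4], mask: u32, filter: u32);
    fn delete_framebuffer(&self, fbo: u32);
    fn delete_renderbuffer(&self, rbo: u32);
}

/// Blits `src_rbo` into a temporary renderbuffer and throws the result away.
/// The only purpose is to make the driver treat `src_rbo` as a blit source.
pub fn dummy_blit<G: FboOps>(gl: &G, src_rbo: u32, width: i32, height: i32) {
    // Source: attach the rendered image to a temporary read FBO.
    let read_fbo = gl.create_framebuffer();
    gl.bind_framebuffer(READ_FRAMEBUFFER, Some(read_fbo));
    gl.framebuffer_renderbuffer(READ_FRAMEBUFFER, COLOR_ATTACHMENT0, RENDERBUFFER, Some(src_rbo));

    // Destination: a same-sized temporary RBO on a temporary draw FBO.
    let tmp_rbo = gl.create_renderbuffer();
    gl.bind_renderbuffer(RENDERBUFFER, Some(tmp_rbo));
    gl.renderbuffer_storage(RENDERBUFFER, RGBA8, width, height);
    let draw_fbo = gl.create_framebuffer();
    gl.bind_framebuffer(DRAW_FRAMEBUFFER, Some(draw_fbo));
    gl.framebuffer_renderbuffer(DRAW_FRAMEBUFFER, COLOR_ATTACHMENT0, RENDERBUFFER, Some(tmp_rbo));

    // The artificial blit itself.
    let rect = [0, 0, width, height];
    gl.blit_framebuffer(rect, rect, COLOR_BUFFER_BIT, NEAREST);

    // Unbind and delete the temporaries before the real present path runs.
    gl.bind_framebuffer(READ_FRAMEBUFFER, None);
    gl.bind_framebuffer(DRAW_FRAMEBUFFER, None);
    gl.bind_renderbuffer(RENDERBUFFER, None);
    gl.delete_framebuffer(draw_fbo);
    gl.delete_framebuffer(read_fbo);
    gl.delete_renderbuffer(tmp_rbo);
}

/// Test double: records call names and hands out increasing fake ids.
#[derive(Default)]
pub struct Log {
    next_id: Cell<u32>,
    pub calls: RefCell<Vec<&'static str>>,
}

impl Log {
    fn rec(&self, name: &'static str) {
        self.calls.borrow_mut().push(name);
    }
    fn new_id(&self) -> u32 {
        self.next_id.set(self.next_id.get() + 1);
        self.next_id.get()
    }
}

impl FboOps for Log {
    fn create_framebuffer(&self) -> u32 { self.rec("create_framebuffer"); self.new_id() }
    fn create_renderbuffer(&self) -> u32 { self.rec("create_renderbuffer"); self.new_id() }
    fn bind_framebuffer(&self, _: u32, _: Option<u32>) { self.rec("bind_framebuffer") }
    fn bind_renderbuffer(&self, _: u32, _: Option<u32>) { self.rec("bind_renderbuffer") }
    fn renderbuffer_storage(&self, _: u32, _: u32, _: i32, _: i32) { self.rec("renderbuffer_storage") }
    fn framebuffer_renderbuffer(&self, _: u32, _: u32, _: u32, _: Option<u32>) { self.rec("framebuffer_renderbuffer") }
    fn blit_framebuffer(&self, _: [i32; 4], _: [i32; 4], _: u32, _: u32) { self.rec("blit_framebuffer") }
    fn delete_framebuffer(&self, _: u32) { self.rec("delete_framebuffer") }
    fn delete_renderbuffer(&self, _: u32) { self.rec("delete_renderbuffer") }
}
```

The temporaries are deleted immediately because, as the comment says, the blit's output has no use; only the read from the source renderbuffer matters for the experiment.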

@zicklag, have you made any further progress here?

Haven't had the chance to try anything else yet.

What platform are you on? Can you reproduce the rendering issue on the quad example?

I tested this PR without the last commit on both macOS and Linux with a modified quad example and saw no issues...

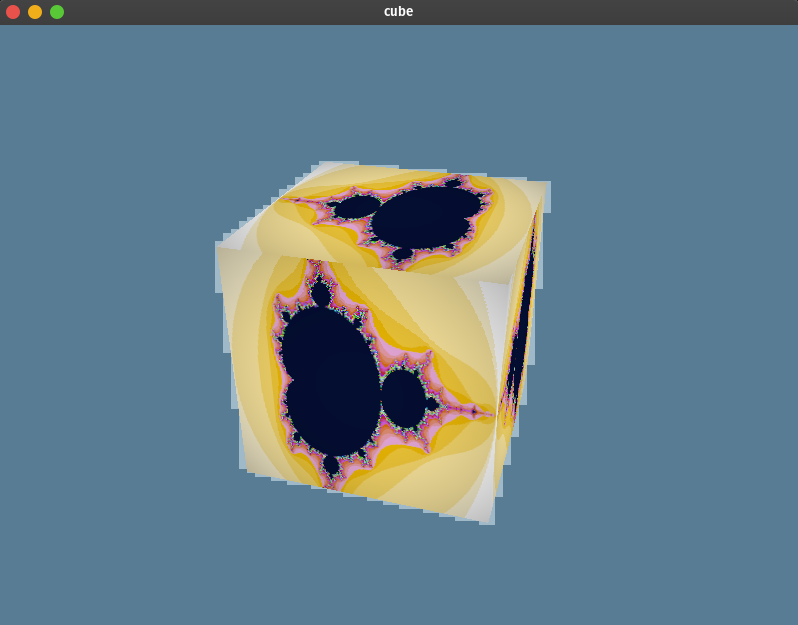

I'm on Linux, but the quad example worked before this PR; only the WGPU cube example actually exhibits the problem so far. Because of other priorities, this is on the back burner for me, probably for a while depending on how things go, especially with Bevy getting an OpenGL renderer backend, which would have been one of my larger use cases. I'd still love for this to work! I just probably won't have time to spend on it for a while.

I think the difference with the

I'd expect them to happily throw it out if gfx-rs had a working GL backend.

Hmm, that would be good to do.

Ahgh, I know. You're going to convince me to keep trying this when I have time. LOL 😃 OK, well, I'll try to get back to this eventually, then. Right now all my time is going toward getting out a 0.2 release of my Arsenal game engine (which is built on Bevy). GL support is definitely something we really want for Arsenal, so this will probably take priority at some point, but the first goal is to get Arsenal working at all.

Sorry, didn't mean to try convincing you. Looks like you've got enough on your plate at the moment :) I just wanted to explain why a GL backend would still be a strategic goal for us, even if Bevy doesn't use it.

Yeah, no problem, man. :D I really do want to get this figured out. We'll figure it out sometime. 👍 It would be amazing to support all of the graphics APIs through one interface.

Bummer. I added wireframe rendering to the quad example, and it still doesn't exhibit the issue.

Do you see artifacts in the quad example with https://github.com/kvark/gfx/tree/gl-test ?

Just ran it and it looks fine.

Oh, interesting. I'll try it again.

I should be able to get it rebased. I'll see if I can do that now; I've got ~20 minutes. It shouldn't be too hard.

Branch updated from c077a2f to bf32c5e.

Branch updated from 8845f60 to 98c6da9.


Unable to test wgpu's cube example on macOS, getting

This is unrelated to your PR, but unfortunate. I'll look into it; it's not a blocker.

Branch updated from 98c6da9 to ca21330.

Branch updated from ca21330 to 75658d8.

kvark left a comment:

Alright, looks like everything is in place!

bors r+

Sorry, it won't let me reply to your comment inline. What still needs to be done?

3375: [ Failed ( so far ) ] Attempt to Fix Rendering Artifacts r=kvark a=zicklag

( Will, hopefully ) fix issue #3353

PR checklist:
- [ ] `make` succeeds (on *nix)
- [ ] `make reftests` succeeds
- [ ] tested examples with the following backends:

Co-authored-by: Zicklag <[email protected]>

It was using

Could you make a similar PR against the hal-0.6 branch?

Build failed:

Ah, cool.

Sweet! 😃 I haven't tested the WGPU examples yet, but I'll try those out in a sec (if there are no major conflicts to resolve). If those don't work for me, it might just be that my driver's goofy. Also, it's running on the Mesa driver, but I've got a hybrid GPU setup on my machine, so I can choose which GPU to run it on. I didn't realize that earlier, so I'm not sure if that is the reason for the weirdness. I'll have to try both.

Sounds good. I'll do that. 👍

Oh, yeah, I forgot that the extra framebuffer was only added for

But we don't need Surfman (and the GL backend) on Windows at all, since it's not able to support WGL in the long run anyway.

Ah, then there's no reason to pollute the code with extra

Yes, rip out GL on Windows completely :)
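One common way to compile a backend out of Windows builds entirely in Rust is to gate the module, and every item that references it, on `cfg(not(windows))`; on the Cargo side, the Surfman dependency can likewise be moved under a `[target.'cfg(not(windows))'.dependencies]` table so it is not even built there. The snippet below is a minimal illustration with a hypothetical `gl_backend` module and `gl_backend_name` function; it is not the layout gfx-rs actually uses.

```rust
// Hypothetical module layout, for illustration only.
#[cfg(not(windows))]
mod gl_backend {
    /// Stand-in for the real backend, which would create its GL context through Surfman.
    pub fn name() -> &'static str {
        "gl"
    }
}

/// Returns the GL backend's name on targets where it is compiled in.
#[cfg(not(windows))]
pub fn gl_backend_name() -> Option<&'static str> {
    Some(gl_backend::name())
}

/// On Windows the module does not exist at all, so nothing can reference it.
#[cfg(windows)]
pub fn gl_backend_name() -> Option<&'static str> {
    None
}
```

Gating at the module level, rather than sprinkling runtime `cfg!` checks, is what keeps the Windows build from needing any of the GL-only code paths.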

There, that should make CI pass now.

I was worried that the GL error I'm seeing on macOS was really caused by something broken in presentation and was just surfacing on the next command. But it turns out to be a genuine error in buffer copying. Not sure what's going on there, but we can proceed.
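GL errors are sticky: a command records an error flag, and the flag is only reported the next time `glGetError` is called, so an error observed after one command may have been raised by an earlier one. A small helper that drains pending errors before the suspect call and checks again right after it makes the attribution explicit. In this sketch `ErrorQuery`, `checked`, `drain`, and `FakeGl` are hypothetical; a real version would query the glow context, and the label string is only used for reporting.

```rust
use std::cell::RefCell;
use std::collections::VecDeque;

const NO_ERROR: u32 = 0; // GL_NO_ERROR

/// Hypothetical minimal interface; a real version would call the GL context's error query.
pub trait ErrorQuery {
    fn get_error(&self) -> u32;
}

/// Reads error flags until GL_NO_ERROR. GL may hold several flags at once,
/// and each glGetError call returns and clears only one of them.
fn drain<G: ErrorQuery>(gl: &G) -> Vec<u32> {
    let mut errors = Vec::new();
    // Bounded, in case an implementation never reports GL_NO_ERROR
    // (for example, when no context is current).
    for _ in 0..32 {
        match gl.get_error() {
            NO_ERROR => break,
            e => errors.push(e),
        }
    }
    errors
}

/// Runs `f` and reports only the errors raised by `f` itself: errors left over
/// from earlier commands are drained first so they are not blamed on `label`.
pub fn checked<G: ErrorQuery, T>(gl: &G, label: &str, f: impl FnOnce(&G) -> T) -> Result<T, String> {
    let stale = drain(gl);
    if !stale.is_empty() {
        eprintln!("errors pending before {}: {:?}", label, stale);
    }
    let value = f(gl);
    let raised = drain(gl);
    if raised.is_empty() {
        Ok(value)
    } else {
        let codes: Vec<String> = raised.iter().map(|e| format!("0x{:04X}", e)).collect();
        Err(format!("{} raised {}", label, codes.join(", ")))
    }
}

/// Test double: errors are queued by `raise` and popped by `get_error`.
#[derive(Default)]
pub struct FakeGl {
    pending: RefCell<VecDeque<u32>>,
}

impl FakeGl {
    pub fn raise(&self, code: u32) {
        self.pending.borrow_mut().push_back(code);
    }
}

impl ErrorQuery for FakeGl {
    fn get_error(&self) -> u32 {
        self.pending.borrow_mut().pop_front().unwrap_or(NO_ERROR)
    }
}
```

Wrapping only the buffer copy this way separates "the copy itself failed" from "an earlier presentation step left an error behind", which is the distinction drawn in the comment above.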

I'm just about out of time now; I'll try to do the hal-0.6 PR and test the WGPU examples tomorrow! 🙂

Thank you! I'll do some more cleanup of the GL backend in master in the meantime.

( Will, hopefully ) fix issue #3353

PR checklist:

- [ ] `make` succeeds (on *nix)
- [ ] `make reftests` succeeds