Bull Scheduler increments the next scheduled job when manually promoted #3667

Comments

github-merge-queue bot pushed a commit that referenced this issue on Nov 19, 2024

…y Promoted (#3957)

Updated the Bull Board-related packages to take advantage of a feature in the newer version that allows a new job to be added (the same as duplicating one), so that schedulers can be executed manually without affecting the time of their next scheduled execution.

_Notes:_

1. During local tests, when a delayed job was deleted and the `queue-consumers` restarted, the delayed job was restored.
2. This PR is intended to be part of the upcoming release. The research for the ticket will continue.

## Using the add/duplicate option

While creating the new job, its properties can be edited. For instance, the cron expression can be edited to set a new time for the scheduler to execute.

_Please note that `timestamp` and `prevMillis` should be removed. These properties are generated once the job is created. As mentioned in the source code, `timestamp` is the "Timestamp when the job was created." and `prevMillis` is a "Internal property used by repeatable jobs."_

```json
{
  "repeat": {
    "count": 1,
    "key": "__default__::::0 7 * * *",
    "cron": "0 7 * * *"
  },
  "jobId": "repeat:2c2720c5e8b4e9ce99993becec27a0ff:1731999600000",
  "delay": 42485905,
  "timestamp": 1731957114095,
  "prevMillis": 1731999600000,
  "attempts": 3,
  "backoff": {
    "type": "fixed",
    "delay": 180000
  }
}
```

## Refactor

- Refactored the code to move the Bull Board configuration into the NestJS modules instead of doing it in `main.ts`. The refactored code is equivalent to the code previously in `main.ts`.
- Updated icons and labels to make the dashboard look more like part of SIMS. This was done in the lowest-effort way available. If it causes noise during the PR review, the code will be removed.
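As a rough illustration of the note above, the following sketch removes the two generated properties from a copy of the job options before they are pasted into the add/duplicate dialog. The helper name `stripGeneratedProperties` and the `JobOptions` type are hypothetical and are not part of Bull or Bull Board; only the property names and sample values come from the commit message.

```typescript
// Hypothetical type: a loose bag of job options as shown in the JSON sample.
type JobOptions = Record<string, unknown>;

// Properties that, per the commit message, are generated when the job is created.
const GENERATED_PROPERTIES = ["timestamp", "prevMillis"] as const;

// Returns a copy of the options without the generated properties.
// The input object is left untouched.
function stripGeneratedProperties(options: JobOptions): JobOptions {
  const cleaned: JobOptions = { ...options };
  for (const property of GENERATED_PROPERTIES) {
    delete cleaned[property];
  }
  return cleaned;
}

// Sample values copied from the job options in the commit message.
const originalOptions: JobOptions = {
  repeat: { count: 1, key: "__default__::::0 7 * * *", cron: "0 7 * * *" },
  jobId: "repeat:2c2720c5e8b4e9ce99993becec27a0ff:1731999600000",
  delay: 42485905,
  timestamp: 1731957114095,
  prevMillis: 1731999600000,
  attempts: 3,
  backoff: { type: "fixed", delay: 180000 },
};

const duplicatedOptions = stripGeneratedProperties(originalOptions);
console.log(JSON.stringify(duplicatedOptions, null, 2));
```

Whether other properties (for example `jobId` or `delay`) also need adjusting when duplicating a job is not stated in the commit message, so the sketch leaves them as they are.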

github-merge-queue bot pushed a commit that referenced this issue on Nov 22, 2024

…y promoted (Ensure Next Delayed Job) (#3978)

- Ensures that a scheduled job has a delayed job created for the next expected scheduled time, based on the configured cron expression.
- A DB row is used as a lock to ensure that the initialization code does not recreate delayed jobs, or create them in an unwanted way, if two pods start at the same time. To be clear, the lock approach is the same one used in other areas of the application and locks a single row of the queue configuration table (`sims.queue_configurations` in the sample query below). Please note that the table itself will not be locked. The sample query executed is shown below.

  ```sql
  START TRANSACTION
  select "QueueConfiguration"."id" AS "QueueConfiguration_id"
  from "sims"."queue_configurations" "QueueConfiguration"
  where (("QueueConfiguration"."queue_name" = 'archive-applications'))
  LIMIT 1
  FOR UPDATE
  COMMIT
  ```

- While the queue is paused, no other delayed jobs will be created.
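To make "the next expected scheduled time" concrete, here is a minimal sketch that computes the next run of a daily schedule such as `0 7 * * *` and the resulting delay. It is not the code from the PR: the function `nextDailyRunUtc` is hypothetical, it handles only a fixed hour and minute, and it assumes the cron expression is evaluated in UTC. That assumption happens to reproduce the `delay` and `prevMillis` values in the sample job options quoted earlier in this thread.

```typescript
const DAY_IN_MILLIS = 24 * 60 * 60 * 1000;

// Hypothetical helper: epoch milliseconds of the next HH:MM (UTC)
// strictly after `nowMillis`. Only covers "minute hour * * *" schedules.
function nextDailyRunUtc(hour: number, minute: number, nowMillis: number): number {
  const now = new Date(nowMillis);
  // Today's occurrence of HH:MM in UTC.
  const todayRun = Date.UTC(
    now.getUTCFullYear(),
    now.getUTCMonth(),
    now.getUTCDate(),
    hour,
    minute,
    0,
    0,
  );
  // If today's occurrence has already passed, the next run is tomorrow.
  return todayRun > nowMillis ? todayRun : todayRun + DAY_IN_MILLIS;
}

// `timestamp` from the sample job options (job creation time).
const createdAtMillis = 1731957114095;
const nextRunMillis = nextDailyRunUtc(7, 0, createdAtMillis);
const delayMillis = nextRunMillis - createdAtMillis;

console.log(nextRunMillis, delayMillis); // 1731999600000 42485905
```

The delay of the delayed job is simply the next scheduled time minus the creation time, which is why a manually created job with a stale `timestamp` would carry a misleading delay.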

github-merge-queue bot pushed a commit that referenced this issue on Nov 22, 2024

To proceed with the investigation of the intermittent ioredis issue on DEV, the package was updated and more logs were added to help narrow down the root cause.

github-merge-queue bot pushed a commit that referenced this issue on Nov 22, 2024

The prior investigation found that when all the schedulers try to execute Redis operations at the same time, the operations fail after a certain number of concurrent connections. The possible solutions being investigated are:

1. Allow connection sharing, as described here: https://github.com/OptimalBits/bull/blob/develop/PATTERNS.md#reusing-redis-connections
2. Control the service initialization to prevent multiple concurrent connections.

This PR is an attempt to test the first option.
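The connection-sharing pattern in the linked Bull document relies on a `createClient` queue option that receives a connection type (`client`, `subscriber`, or `bclient`) and returns a Redis connection. The sketch below shows the reuse logic in isolation, with a fake connection factory instead of ioredis so that it runs on its own. The names `makeCreateClient` and `FakeConnection` are hypothetical, and this is not the code from the PR.

```typescript
// Connection types that Bull passes to the `createClient` option.
type ClientType = "client" | "subscriber" | "bclient";

// Hypothetical helper: wraps a connection factory so that the "client" and
// "subscriber" connections are created once and shared by every queue, while
// each "bclient" (blocking) request gets its own new connection.
function makeCreateClient<T>(connect: (type: ClientType) => T): (type: ClientType) => T {
  let sharedClient: T | undefined;
  let sharedSubscriber: T | undefined;
  return (type: ClientType): T => {
    switch (type) {
      case "client":
        if (sharedClient === undefined) sharedClient = connect(type);
        return sharedClient;
      case "subscriber":
        if (sharedSubscriber === undefined) sharedSubscriber = connect(type);
        return sharedSubscriber;
      case "bclient":
      default:
        // Blocking connections cannot be shared between queues.
        return connect(type);
    }
  };
}

// Fake connection used only to count how many connections get opened.
interface FakeConnection {
  id: number;
  type: ClientType;
}

let openedConnections = 0;
const createClient = makeCreateClient<FakeConnection>((type) => ({
  id: ++openedConnections,
  type,
}));

// Simulate two queues, each asking for its three connections.
const queueA = [createClient("client"), createClient("subscriber"), createClient("bclient")];
const queueB = [createClient("client"), createClient("subscriber"), createClient("bclient")];

console.log(openedConnections); // 4 instead of 6
```

With a real setup, the returned function would be passed as the `createClient` option of each queue and `connect` would open an ioredis connection. The Bull documentation lists additional requirements for shared ioredis connections that are not covered here.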

github-merge-queue bot pushed a commit that referenced this issue on Nov 25, 2024

Implementing the easy, fast (and stable) approach to resolve the queue initialization issues. The previous approach, sharing the ioredis connections (a change being reverted in this PR), worked, but it also produced a possible false-positive memory leak warning. Further investigation will be needed if we change this approach in the future, but for now we are keeping the existing approach.

The current solution extends the current "specific queue-based" lock to an "all queues-based" lock. The initialization is still pretty fast (around 1 second), and even if we double the number of schedulers in the future it will still be good enough (the code is executed once during queue-consumers initialization).

_Notes_: Previously, the logs mentioned the queue name every time; now they mention only the first one. The queue name should also be in the log context, but many schedulers are not "overriding" it, which should be resolved in the schedulers.

andrewsignori-aot added a commit that referenced this issue on Nov 25, 2024

Moving the latest changes from Bull Scheduler to release 2.1.

This was referenced Dec 9, 2024

Describe the task

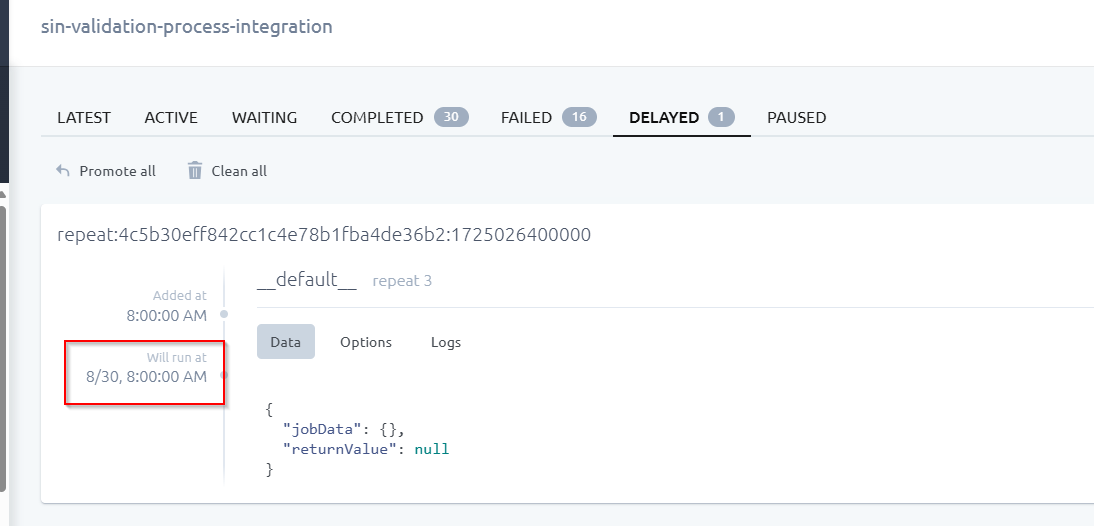

When a scheduled job of the scheduler (Bull Queue) is manually promoted, the next schedule is automatically incremented.

This seems to be the default behavior of the Bull framework.

Our expectation, however, is that manually promoting a job is a way to handle something manually when there is a need, and it should not affect the regular schedules.

Here is an example:

A job is scheduled to run on AUG-30 at 8:00 AM (the current date being AUG-29, 2:00 PM).

When I manually run the scheduler job outside its regular schedule, the scheduler skips its next regular schedule and treats the second next schedule as its next schedule.

Acceptance Criteria

**Potential Solution (may not be the final solution)**

Version 5.18.0 of the Bull Dashboard UI introduced a feature for manually adding a job to a queue through the UI. To get this feature, we just need to update the bull-dashboard to the latest version. Please also note that a little investigation is needed into how to use this add-job feature appropriately. https://github.com/felixmosh/bull-board/releases/tag/v5.18.0
