-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-32492][SQL] Fulfill missing column meta information COLUMN_SIZE /DECIMAL_DIGITS/NUM_PREC_RADIX/ORDINAL_POSITION for thriftserver client tools #29303

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

… server client tools

|

Test build #126807 has finished for PR 29303 at commit

|

|

cc @cloud-fan @gatorsmile @maropu @wangyum thanks very much |

|

@yaooqinn FYI to make the GitHub Actions tests pass, you might have to rebase. |

|

I see, thanks, @HyukjinKwon. |

|

retest this please |

|

Test build #126845 has finished for PR 29303 at commit

|

|

Test build #126852 has finished for PR 29303 at commit

|

|

retest this please |

|

Test build #126867 has finished for PR 29303 at commit

|

| private def getDecimalDigits(typ: DataType): Option[Int] = typ match { | ||

| case BooleanType | _: IntegerType => Some(0) | ||

| case FloatType => Some(7) | ||

| case DoubleType => Some(15) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

how do we pick these numbers?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

They are from hive's TypeDescriptor and I also checked the results, which are same from hive and spark, e.g. select 0.12345678901234567890D

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

the result is 0.12345678901234568 which have 17 decimal digits but hive says it's 15 for double...

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It seems to follow IEEE 754 https://en.wikipedia.org/wiki/IEEE_754

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can we add a code comment to explain it?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

OK

...tserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkGetColumnsOperation.scala

Show resolved

Hide resolved

|

|

||

| private def getColumnSize(typ: DataType): Option[Int] = typ match { | ||

| case StringType | BinaryType => None | ||

| case ArrayType(et, _) => getColumnSize(et) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

how can we report column size for array type? We don't know how many elements in an array.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

oh, right...

|

To check if we could fetch the new metadata via |

|

Test build #126887 has finished for PR 29303 at commit

|

| } | ||

| } | ||

|

|

||

| test("SparkGetColumnsOperation") { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Please make this test title clearer, too.

| val decimalType = DecimalType(10, 2) | ||

| val ddl = | ||

| s""" | ||

| |CREATE TABLE $schemaName.$tableName |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think we don't have a strict rule for the format though, how about following the existing format?

spark/sql/core/src/test/scala/org/apache/spark/sql/ShowCreateTableSuite.scala

Lines 35 to 41 in 8014b0b

| s"""CREATE TABLE ddl_test ( | |

| | a STRING, | |

| | b STRING, | |

| | `extra col` ARRAY<INT>, | |

| | `<another>` STRUCT<x: INT, y: ARRAY<BOOLEAN>> | |

| |) | |

| |USING json |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

OK, this one looks better.

| s""" | ||

| |CREATE TABLE $schemaName.$tableName | ||

| | ( | ||

| | a boolean comment '0', |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could you test all the types where possible for test coverage?

| assert(typeNames.get(3) === decimalType.sql) | ||

|

|

||

| val colSize = columns.get(6).getI32Val.getValues | ||

| assert(colSize.get(3).intValue() === decimalType.defaultSize) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could you check all the elements in colSize? (Rather, I think we need to check all elements in the fetched rowSet.)

| * returned. | ||

| * For array, map, string, and binaries, the column size is variable, return null as unknown. | ||

| */ | ||

| private def getColumnSize(typ: DataType): Option[Int] = typ match { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hive returns the almost same values for column size?

https://github.com/apache/hive/blob/3e5e99eae154ceb8f9aa4e4ec71e6b05310e98e4/service/src/java/org/apache/hive/service/cli/operation/GetColumnsOperation.java#L187-L211

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hive does not return same result for each type

The new test can end to end cover the meta operations both for binary and HTTP mode, but SparkMetadataOperationSuite seems only works for binary only |

|

Test build #126960 has finished for PR 29303 at commit

|

|

Test build #126965 has finished for PR 29303 at commit

|

| } else { | ||

| Some(sizeArr.map(_.get).sum) | ||

| } | ||

| case other => Some(other.defaultSize) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

nit: I think it's safer to list the types we know the size, instead of listing the types we don't know the size. I'd prefer

case dt @ (_: NumericType | DateType | TimestampType) => dt.defaultSize

...

|

Test build #126974 has finished for PR 29303 at commit

|

| null, // CHAR_OCTET_LENGTH | ||

| null, // ORDINAL_POSITION | ||

| pos.asInstanceOf[AnyRef], // ORDINAL_POSITION | ||

| "YES", // IS_NULLABLE |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should this respect column.nullable?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

IS_NULLABLE is related to PK, "YES" here is more specific as we don't have such thing implemented

|

thanks, merging to master! |

| Thread.sleep(10) | ||

| status = client.getOperationStatus(opHandle) | ||

| } | ||

| val getCol = client.getColumns(sessionHandle, "", schemaName, tableName, null) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

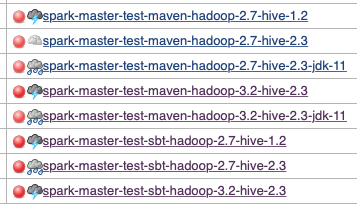

Hi, @yaooqinn and @cloud-fan . I'm not sure but this new test case seems to break all Jenkins Maven jobs on master branch. Could you take a look?

org.apache.hive.service.cli.HiveSQLException: java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ClassCastException: org.apache.hadoop.hive.ql.security.HadoopDefaultAuthenticator cannot be cast to org.apache.hadoop.hive.ql.security.HiveAuthenticationProvider

at org.apache.hive.service.cli.thrift.ThriftCLIServiceClient.checkStatus(ThriftCLIServiceClient.java:42)

at org.apache.hive.service.cli.thrift.ThriftCLIServiceClient.getColumns(ThriftCLIServiceClient.java:275)

at org.apache.spark.sql.hive.thriftserver.ThriftServerWithSparkContextSuite.$anonfun$$init$$17(ThriftServerWithSparkContextSuite.scala:146)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Let's revert if it takes a while to fix.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looks like something related to another version of Hive which belong to another job(maybe failed) not being cleaned up.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ya. It seems that Hive 2.3 is affected. And, JDK11 maven seems not running those test suite.

Do we have a follow-up idea, @yaooqinn and @cloud-fan ?

The error message is clear, java.lang.ClassCastException. If there is no idea, I'd like to recommend to revert first and pass Maven Jenkins properly.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

BTW, this is not a flaky failure. This broke some profiles consistently.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

BTW, it's up to you, @cloud-fan . I'll not revert this by myself since PRBuilder is still working.

### What changes were proposed in this pull request? The newly added test fails Jenkins maven jobs, see #29303 (comment) We move the test from `ThriftServerWithSparkContextSuite` to `SparkMetadataOperationSuite`, the former uses an embedded thrift server where the server and the client are in the same JVM process and the latter forks a new process to start the server where the server and client are isolated. The sbt runner seems to be fine with the test in the `ThriftServerWithSparkContextSuite`, but the maven runner with `scalates`t plugin will face the classloader issue as we will switch classloader to the one in the `sharedState` which is not the one that hive uses to load some classes. This is more like an issue that belongs to the maven runner or the `scalatest`. So in this PR, we simply move it to bypass the issue. BTW, we should test against the way of using embedded thrift server to verify whether it is just a maven issue or not, there could be some use cases with this API. ### Why are the changes needed? Jenkins recovery ### Does this PR introduce _any_ user-facing change? no ### How was this patch tested? modified uts Closes #29347 from yaooqinn/SPARK-32492-F. Authored-by: Kent Yao <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

What changes were proposed in this pull request?

This PR fulfills some missing fields for SparkGetColumnsOperation including COLUMN_SIZE /DECIMAL_DIGITS/NUM_PREC_RADIX/ORDINAL_POSITION

and improve the test coverage.

Why are the changes needed?

make jdbc tools happier

Does this PR introduce any user-facing change?

yes,

before

after

How was this patch tested?

add unit tests