
[SPARK-3181] [ML] Implement huber loss for LinearRegression. #19020


Test build #80975 has finished for PR 19020 at commit

Test build #80977 has finished for PR 19020 at commit

Test build #80981 has finished for PR 19020 at commit


this can be factored out and only instantiated once, no?

I don't think so. I tried to factor it out, but because LeastSquaresAggregator and HuberAggregator each pass in their own type, the compiler complains. Maybe @sethah can give some suggestions?


Oh right, ok.

So did we decide not to expose the equivalents of |

@MLnick Yeah, I think we reached an agreement in the JIRA discussion.

Test build #80983 has finished for PR 19020 at commit

WeichenXu123

left a comment

Great work! I left some minor comments.


Use coefficients(numFeatures)


coefficients doesn't contain intercept element, so we can't get intercept from coefficients array.

Is it OK to include the intercept in coefficients, or to create a class variable for the intercept?

Anyway maybe we should avoid getting values from Broadcast in each add.


I created a class variable for intercept.

The epsilon is the parameter M. It would be better to use a consistent name.


Use ~== relTol instead of ===


Use ===

Test build #81310 has finished for PR 19020 at commit

0220df7 to 4836810

Test build #81308 has finished for PR 19020 at commit

Test build #81315 has finished for PR 19020 at commit

@MLnick @WeichenXu123 Thanks for your comments. Also cc @jkbradley @hhbyyh @sethah, would you mind taking a look? Thanks.

Looks good. cc @jkbradley Thanks!

big vote for python and R :)

I'll check this out now

jkbradley

left a comment


This seems very useful to add--thanks! I have a few questions:

- Echoing @WeichenXu123 's comment: Why use "epsilon" as the Param name?

- I'd like us to provide the estimated scaling factor (sigma from the paper) in the Model. That seems useful for model interpretation and debugging.

We should update the persistence test by updating allParamSettings.


This description is misleadingly general since this claim only applies to normally distributed data. How about referencing the part of the paper which talks about this so that people can look up what is meant here?


Done.


style: It'd be nice to put parentheses around (linearLoss / sigma) for clarity


Done.


Mark as expertParam (same for set/get)


Done.


Let's keep exact specifications of the losses being used. This is one of my big annoyances with many ML libraries: It's hard to tell exactly what loss is being used, which makes it hard to compare/validate results across different ML libraries.

It'd also be nice to make it clear what we mean by "huber," in particular that we estimate the scale parameter from data.

I agree, and I added the math formulas for both the squaredError and huber loss functions.


Log epsilon (M) as well


Done.


How about calling this "squaredError" since the loss is "squared error," not "least squares."


Do you know if these integration tests are in the L1 penalty regime or in the L2 regime? It'd be nice to make sure we're testing both.

Yes, the test data is composed of two parts, inlierData and outlierData, and I have checked that both regimes are tested. Thanks.

|

I disagree that this should be combined with Linear Regression. IMO, this belongs as its own algorithm. The fact that there would be code duplication in that case is indicative that we don't have good abstractions and code sharing in place, not that we should combine different algorithms using |

|

@jkbradley Thanks for your comments, I have addressed all your inline comments. Please see replies to your other questions below:

We have two candidate names, epsilon and m, and neither is very descriptive. I followed sklearn's HuberRegressor to stay consistent with it.

I'm hesitating to add it to |

|

@sethah To the issue that whether huber linear regression should share codebase with |

|

Test build #82075 has finished for PR 19020 at commit

Jenkins, test this please.

Test build #82086 has started for PR 19020 at commit

Jenkins, test this please.

@yanboliang Yeah, I saw the discussion and it seems to me the reason was that there would be too much code duplication. Sure, it's true that there would be code duplication, but to me that's a reason to work on the internals so that there is less code duplication, rather than to continue patching around a design that doesn't work very well. We can combine them, I just don't think we should. I know I'm late to the discussion, so there's already been a lot of work. But these things can't really be undone due to backwards compatibility. We could work on creating better interfaces for plugging in loss/prediction/optimizer, which I think is the best way to approach it. Linear and logistic regression seem like they are just becoming giant, monolithic pieces of code. I guess the argument against it will be lack of developer bandwidth. If that's the case, ok, but I'd argue to just leave Huber regression to be implemented by an external package in that case. If we don't have the bandwidth to do it in a robust, well-designed way, then I don't think doing it the easy way is a good solution either. My first vote is to implement it as a separate estimator; my second vote would be to leave it for a Spark package.

Test build #82151 has finished for PR 19020 at commit

I see; that seems fine then, though I worry that we use "epsilon" in MLlib (tests) for "a very small positive number." Can we document it more clearly, including the comment that it matches sklearn and is "M" from the paper?

I'd say:

Re: @sethah 's comment about separating classes, I'll comment in the JIRA since that's a bigger discussion. |

|

I also vote to combine them as one estimator, here are my two cents: |

LGTM. thanks!

8356ffb to 2c404ff

Test build #84790 has finished for PR 19020 at commit

Jenkins, test this please.

Test build #84797 has finished for PR 19020 at commit

2c404ff to 4304b6e

Test build #84817 has finished for PR 19020 at commit

hhbyyh

left a comment


LGTM.

One thing I noticed is that we did not really compare the loss with other lib (like sklearn), which is something also missing for other linear algorithms. Do you think it would be a good idea to add it?

    val linearLoss = label - margin

    if (math.abs(linearLoss) <= sigma * epsilon) {
      lossSum += 0.5 * weight * (sigma + math.pow(linearLoss, 2.0) / sigma)


extra space after +

    with LinearRegressionParams with MLWritable {

      def this(uid: String, coefficients: Vector, intercept: Double) =
        this(uid, coefficients, intercept, 1.0)

Is it better to set the default scale to an impossible value like 0 or -1?

scale denotes the value by which |y - X'w - c| is scaled down, so I think it makes sense to set it to 1.0 for least squares regression.

Test build #84883 has finished for PR 19020 at commit

Merged into master, thanks for all your reviews.

What changes were proposed in this pull request?

MLlib

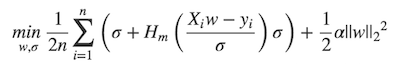

LinearRegressionsupports huber loss addition to leastSquares loss. The huber loss objective function is:Refer Eq.(6) and Eq.(8) in A robust hybrid of lasso and ridge regression. This objective is jointly convex as a function of (w, σ) ∈ R × (0,∞), we can use L-BFGS-B to solve it.
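For readers who cannot see the image, the objective can be sketched in LaTeX as follows. This is a reconstruction under assumptions, not a copy of the image: it is written to agree with the `0.5 * weight * (sigma + math.pow(linearLoss, 2.0) / sigma)` term quoted in the review thread and with the form used by scikit-learn's HuberRegressor. The symbols λ (for regParam) and ε (for the epsilon Param, "M" in the paper) are labels chosen here, and the intercept c is omitted for brevity.

$$
\min_{w,\ \sigma > 0}\ \frac{1}{2}\sum_{i=1}^{n}\left(\sigma + H_{\epsilon}\!\left(\frac{y_i - X_i w}{\sigma}\right)\sigma\right) + \frac{1}{2}\lambda \lVert w \rVert_2^2,
\qquad
H_{\epsilon}(z) =
\begin{cases}
z^2 & \text{if } |z| \le \epsilon, \\
2\epsilon |z| - \epsilon^2 & \text{otherwise.}
\end{cases}
$$

With z = (y_i - X_i w) / σ, the quadratic branch gives σ + (y_i - X_i w)² / σ per instance, which matches the quoted code, and the two branches agree at |z| = ε, so the loss is continuous.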

The current implementation is a straightforward port of Python scikit-learn's HuberRegressor. There are some differences:

- MLlib uses mean loss (lossSum/weightSum), but sklearn uses total loss (lossSum).

So if fitting w/o regularization, MLlib and sklearn produce the same output. If fitting w/ regularization, MLlib's regParam should be divided by the number of instances to match the output of sklearn.

How was this patch tested?

Unit tests.
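The per-instance loss and the regParam note in the description can be illustrated with a minimal Scala sketch. It is not the code merged in this PR: the object `HuberLossSketch` and the helpers `huberLoss` and `regParamFor` are hypothetical names, the quadratic branch follows the snippet quoted in the review thread, and the linear (outlier) branch is an assumption derived from the paper's loss rather than copied from the patch.

```scala
object HuberLossSketch {

  /** Weighted huber loss contribution of a single instance.
    * `margin` is the prediction X'w + c, `sigma` the scale, `epsilon` the
    * threshold called "M" in the paper. All names are illustrative.
    */
  def huberLoss(
      label: Double,
      margin: Double,
      sigma: Double,
      epsilon: Double,
      weight: Double = 1.0): Double = {
    val linearLoss = label - margin
    if (math.abs(linearLoss) <= sigma * epsilon) {
      // Inlier: quadratic branch, as in the snippet quoted in the review.
      0.5 * weight * (sigma + math.pow(linearLoss, 2.0) / sigma)
    } else {
      // Outlier: linear branch (assumed form, continuous at the boundary).
      0.5 * weight *
        (sigma + 2.0 * epsilon * math.abs(linearLoss) - sigma * epsilon * epsilon)
    }
  }

  /** MLlib averages the loss over instances while sklearn sums it, so an
    * sklearn regularization strength maps to regParam by dividing by the
    * number of (unweighted) instances.
    */
  def regParamFor(sklearnAlpha: Double, numInstances: Long): Double =
    sklearnAlpha / numInstances
}
```

For example, `HuberLossSketch.regParamFor(1.0, 100L)` gives the regParam that would correspond to an sklearn regularization strength of 1.0 on 100 unweighted instances, under the mean-versus-total-loss difference described above.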