[MINOR] Fixed hadoop configuration not being applied by FileIndex #8595

PTAL @danny0405

        }
        String[] partitions = getOrBuildPartitionPaths().stream().map(p -> fullPartitionPath(path, p)).toArray(String[]::new);
    -   FileStatus[] allFiles = FSUtils.getFilesInPartitions(HoodieFlinkEngineContext.DEFAULT, metadataConfig, path.toString(), partitions)
    +   FileStatus[] allFiles = FSUtils.getFilesInPartitions(hoodieFlinkEngineContext, metadataConfig, path.toString(), partitions)

What kind of Hadoop configuration do you want to pass around?

    private FileIndex(Path path, Configuration conf, RowType rowType, DataPruner dataPruner, PartitionPruners.PartitionPruner partitionPruner, int dataBucket) {
      org.apache.hadoop.conf.Configuration hadoopConf = HadoopConfigurations.getHadoopConf(conf);

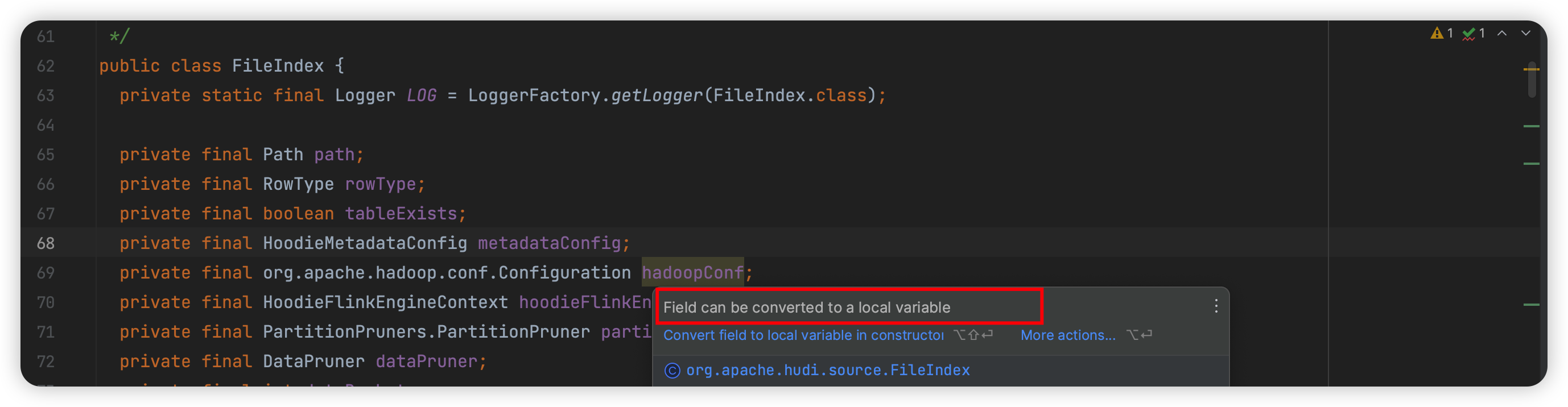

Can we keep the Hadoop conf as a member instead?

Yeah, I will keep the Hadoop conf as a member.

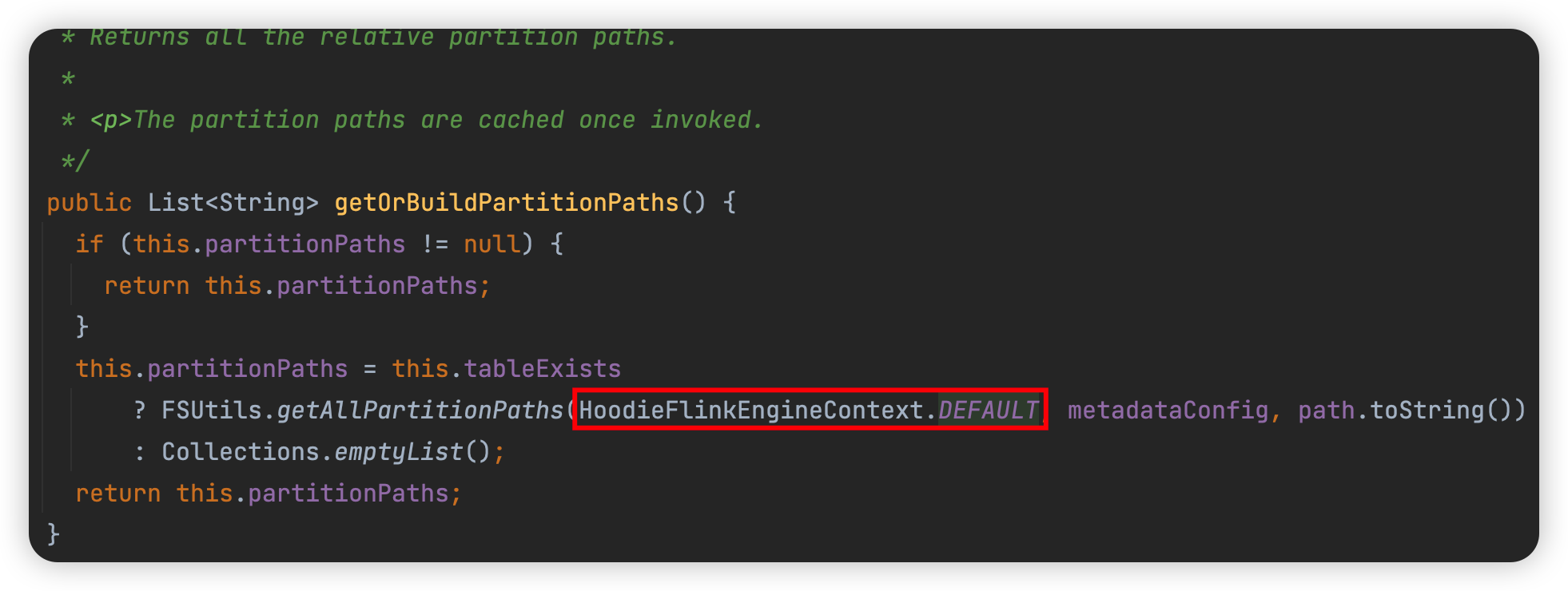

Generating the HoodieFlinkEngineContext on the fly should be fine.

You are right.
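
The change discussed in this thread can be illustrated with a minimal, self-contained sketch. It does not use the real Hudi or Hadoop classes: `HadoopConf` and `EngineContext` are hypothetical stand-ins for `org.apache.hadoop.conf.Configuration` and `HoodieFlinkEngineContext`, and the `hdfs://remote-nn:8020` address is made up. The only real names are the `fs.defaultFS` key and its stock default `file:///`. The sketch assumes the default context wraps an empty configuration, which is what makes per-job options invisible to it.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-ins only; these are NOT the real Hudi or Hadoop classes.
final class FileIndexSketch {

  /** Stand-in for org.apache.hadoop.conf.Configuration. */
  static final class HadoopConf {
    private final Map<String, String> props = new HashMap<>();

    HadoopConf set(String key, String value) {
      props.put(key, value);
      return this;
    }

    String get(String key, String defaultValue) {
      return props.getOrDefault(key, defaultValue);
    }
  }

  /** Stand-in for HoodieFlinkEngineContext: it only carries a Hadoop conf. */
  static final class EngineContext {
    // Assumption: the shared default is built from an empty configuration.
    static final EngineContext DEFAULT = new EngineContext(new HadoopConf());

    final HadoopConf hadoopConf;

    EngineContext(HadoopConf hadoopConf) {
      this.hadoopConf = hadoopConf;
    }
  }

  // The Hadoop conf is kept as a member, as suggested in the review.
  private final HadoopConf hadoopConf;

  FileIndexSketch(HadoopConf hadoopConf) {
    this.hadoopConf = hadoopConf;
  }

  /** Before the fix: the shared default context never sees the per-job options. */
  String fileSystemBefore() {
    return EngineContext.DEFAULT.hadoopConf.get("fs.defaultFS", "file:///");
  }

  /** After the fix: a context is generated on the fly from the member conf. */
  String fileSystemAfter() {
    EngineContext context = new EngineContext(hadoopConf);
    return context.hadoopConf.get("fs.defaultFS", "file:///");
  }

  public static void main(String[] args) {
    HadoopConf jobConf = new HadoopConf().set("fs.defaultFS", "hdfs://remote-nn:8020");
    FileIndexSketch index = new FileIndexSketch(jobConf);
    System.out.println(index.fileSystemBefore()); // prints "file:///"
    System.out.println(index.fileSystemAfter());  // prints "hdfs://remote-nn:8020"
  }
}
```

Building the context on demand keeps the sketch's index class down to one extra field, at the cost of a small allocation per call.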

…he#8595) Passed the hadoop config options from per-job to the FileIndex correctly.

Change Logs

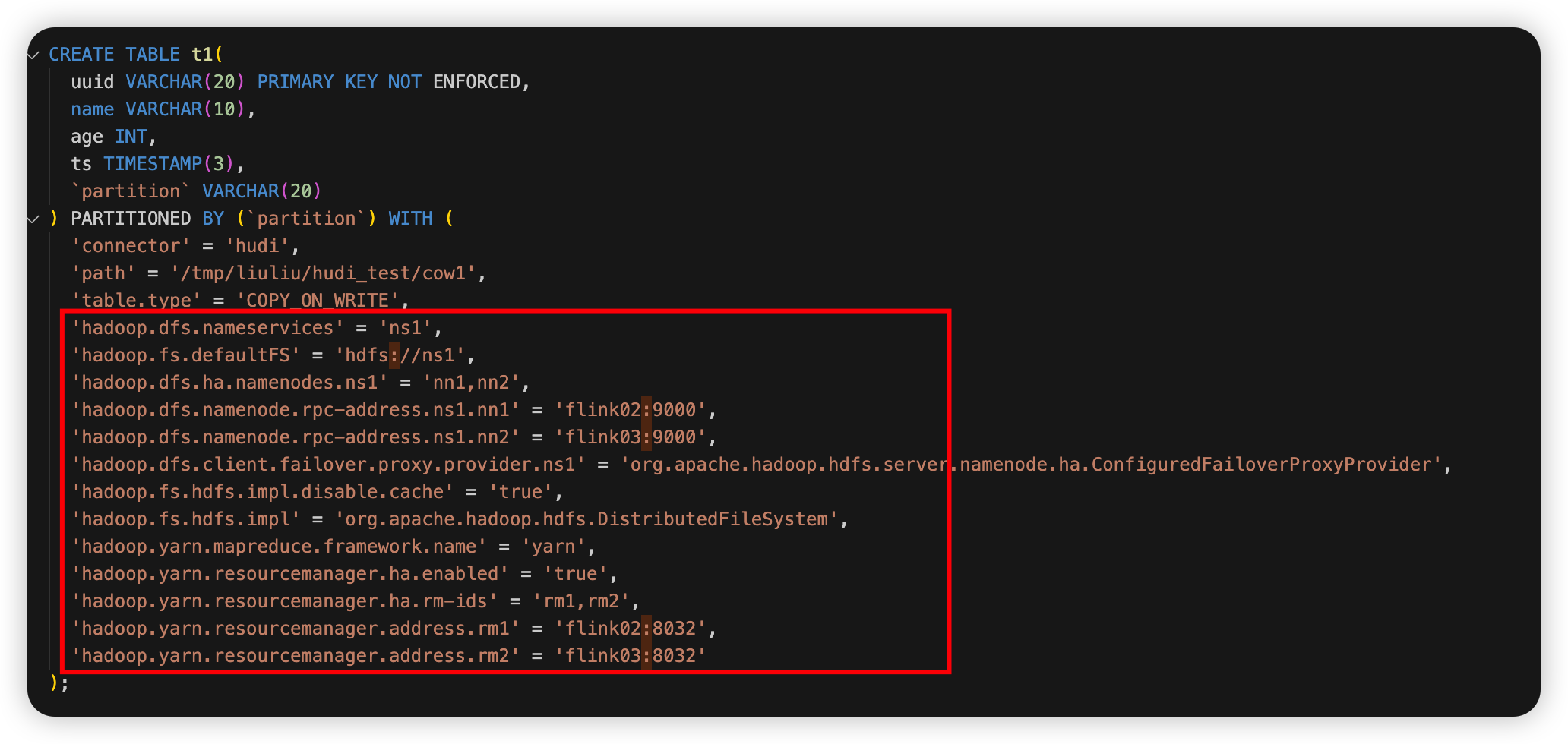

Fixed hadoop configuration not being applied by org.apache.hudi.source.FileIndex

Impact

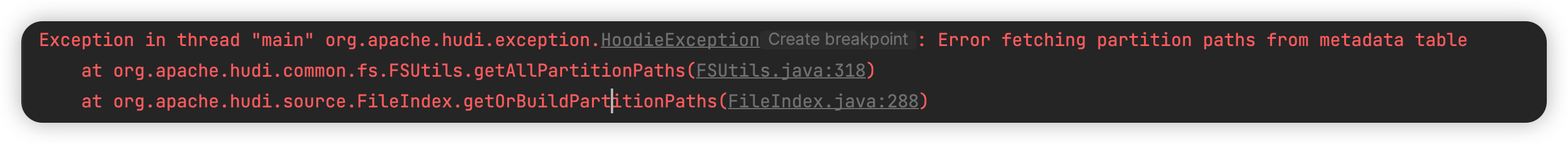

Before this change, FileIndex used HoodieFlinkEngineContext.DEFAULT when listing files under the partition paths, so the Hadoop options in the job configuration were ignored.

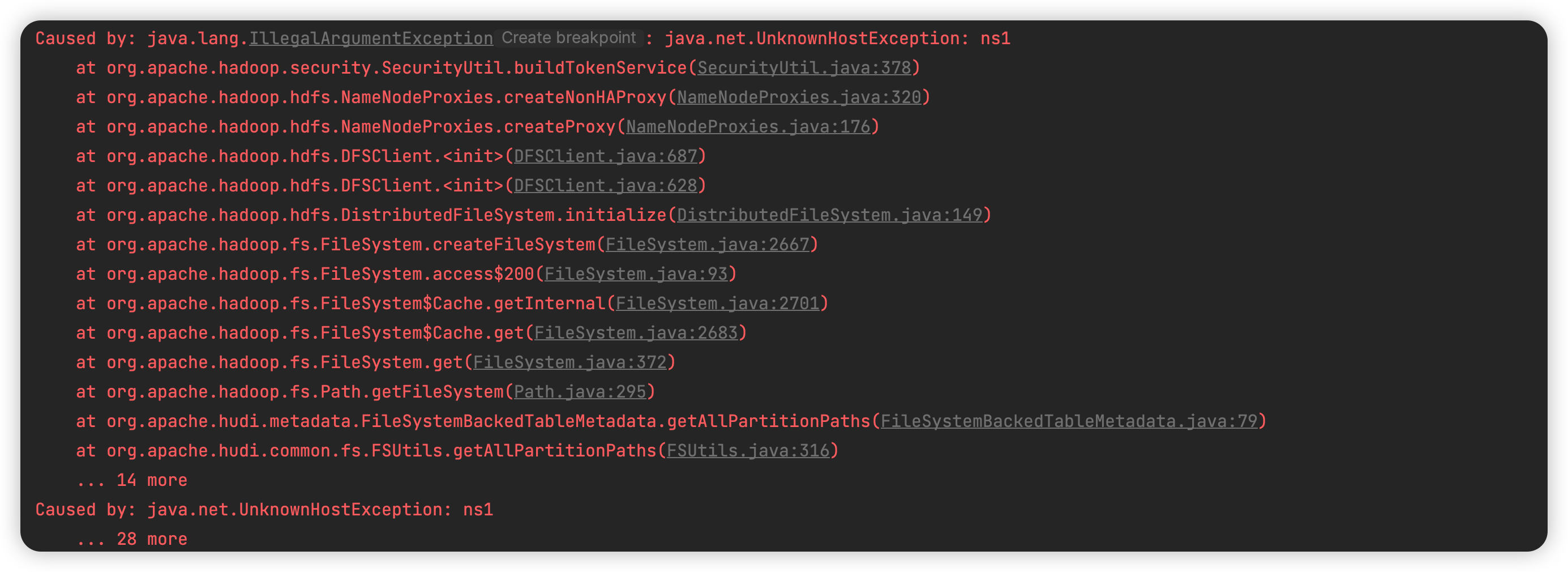

Since I was connecting to a remote Hadoop, I subsequently got the following error due to the missing configuration.

Risk level (write none, low, medium or high below)

none

Documentation Update

none

Contributor's checklist