
[HUDI-5341] IncrementalCleaning consider clustering completed later #7405


@danny0405 How about adding a check before archiving: verify, one instant at a time, that every instant's completion time is earlier than the start time of the last completed clean instant?
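Here is a minimal Java sketch of the proposed pre-archive check. It makes two assumptions. First, each instant carries a completion time, which the timeline does not expose at this point; the diff below approximates it with the instant file's timestamp. Second, Hudi's fixed-width instant-time strings order chronologically under plain string comparison. `CompletedInstant` and `ArchiveSafetyCheck` are hypothetical names for illustration, not Hudi APIs.

```java
import java.util.List;

// Hypothetical stand-in for a completed timeline instant. Instant times are assumed
// to be fixed-width "yyyyMMddHHmmss..." strings, so string order is time order.
final class CompletedInstant {
  final String instantTime;
  final String completionTime;

  CompletedInstant(String instantTime, String completionTime) {
    this.instantTime = instantTime;
    this.completionTime = completionTime;
  }
}

final class ArchiveSafetyCheck {
  private ArchiveSafetyCheck() {}

  // Archiving is treated as safe only if every candidate completed strictly before
  // the last completed clean started, so that clean could have seen all of them.
  static boolean safeToArchive(List<CompletedInstant> candidates, String lastCleanStartTime) {
    for (CompletedInstant instant : candidates) {
      if (instant.completionTime.compareTo(lastCleanStartTime) >= 0) {
        return false; // completed too late for the last clean to account for it
      }
    }
    return true;
  }
}
```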

```java
instant -> (HoodieTimeline.compareTimestamps(instant.getTimestamp(), HoodieTimeline.GREATER_THAN_OR_EQUALS,
        cleanMetadata.getEarliestCommitToRetain())
    || (instant.getMarkerFileAccessTimestamp().isPresent()
        && (HoodieTimeline.compareTimestamps(instant.getMarkerFileAccessTimestamp().get(), HoodieTimeline.GREATER_THAN_OR_EQUALS,
```


There are many cases in which the instant meta file can be accessed, not just clustering.


Changed the access time to the modification time.
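The sketch below illustrates why modification time is the safer signal, using the JDK's `java.nio.file` attributes on a local file. Treat it as a stand-in built on assumptions: Hudi reads instant files through Hadoop's `FileSystem`, and `InstantFileTimes` is a hypothetical helper, not part of Hudi.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.BasicFileAttributes;
import java.nio.file.attribute.FileTime;

final class InstantFileTimes {
  private InstantFileTimes() {}

  private static BasicFileAttributes attributes(Path instantFile) {
    try {
      return Files.readAttributes(instantFile, BasicFileAttributes.class);
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  // Moves only when the file is written (e.g. the instant file is created or
  // rewritten); plain reads leave it unchanged.
  static FileTime modificationTime(Path instantFile) {
    return attributes(instantFile).lastModifiedTime();
  }

  // May be bumped by reads (timeline loading, tooling, ...), and some file systems
  // do not maintain it at all, so it is a poor proxy for "completed at".
  static FileTime accessTime(Path instantFile) {
    return attributes(instantFile).lastAccessTime();
  }
}
```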

```java
    earlestUnCleanCompletedInstant.map(instant ->
        compareTimestamps(instant.getTimestamp(), GREATER_THAN, s.getTimestamp()))
        .orElse(true)
).filter(s ->
```


We had better check the clean metadata files on the timeline: a clustering instant can be archived only after all the files it replaced have been cleaned.
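This archiving rule can be sketched as a set-containment check. The inputs are assumptions. One is the set of file group IDs replaced by a single clustering (replacecommit) instant. The other is the set of file group IDs removed by each completed clean. In practice, both would be read from the replacecommit and clean metadata. `ClusteringArchivalGuard` is a hypothetical name.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

final class ClusteringArchivalGuard {
  private ClusteringArchivalGuard() {}

  // replacedFileIds: file groups replaced by one clustering instant (hypothetical input).
  // cleanedFileIdsPerClean: file groups removed by each completed clean (hypothetical input).
  static boolean canArchive(Set<String> replacedFileIds, List<Set<String>> cleanedFileIdsPerClean) {
    Set<String> cleaned = new HashSet<>();
    for (Set<String> perClean : cleanedFileIdsPerClean) {
      cleaned.addAll(perClean);
    }
    // Archive only once every replaced file group has been cleaned by some clean.
    return cleaned.containsAll(replacedFileIds);
  }
}
```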


- No completed clustering instant: no need to check.
- firstCompletedClusteringInstant is present and there is no completed clean instant: return firstCompletedClusteringInstant.
- firstCompletedClusteringInstant is present and earliestCleanInstant is present: return firstUncleanClusteringInstant.
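The three cases above are sketched here in Java, with `java.util.Optional` standing in for Hudi's `Option`. The inputs are hypothetical: instant times are plain strings sorted ascending, and `isCleaned` reports whether every file replaced by a clustering instant has been cleaned. Clean information is consulted only after the early return, so the no-clustering case never touches clean metadata.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.BooleanSupplier;
import java.util.function.Predicate;

final class EarliestUncleanClustering {
  private EarliestUncleanClustering() {}

  /**
   * @param completedClusteringInstants completed replacecommit instant times, ascending
   * @param hasCompletedClean lazily answers whether any clean instant has completed
   * @param isCleaned hypothetical: true once all files replaced by the instant are cleaned
   */
  static Optional<String> find(List<String> completedClusteringInstants,
                               BooleanSupplier hasCompletedClean,
                               Predicate<String> isCleaned) {
    if (completedClusteringInstants.isEmpty()) {
      // No completed clustering instant: no need to check anything.
      return Optional.empty();
    }
    String firstCompletedClusteringInstant = completedClusteringInstants.get(0);
    if (!hasCompletedClean.getAsBoolean()) {
      // No completed clean yet: nothing replaced by clustering can have been cleaned.
      return Optional.of(firstCompletedClusteringInstant);
    }
    // Otherwise: the first completed clustering instant that is not yet cleaned.
    return completedClusteringInstants.stream()
        .filter(isCleaned.negate())
        .findFirst();
  }
}
```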

```java
this.state = isInflight ? State.INFLIGHT : State.COMPLETED;
this.action = action;
this.timestamp = timestamp;
this.markerFileModificationTimestamp = Option.ofNullable(null);
```


Remove this line.


Removed.

```java
this.state = state;
this.action = action;
this.timestamp = timestamp;
this.markerFileModificationTimestamp = Option.ofNullable(null);
```


Ditto.


Removed


5341.patch.zip: We can go ahead if you think it is okay, and it would be great if you could also test it.

```java
cleanMetadata.getEarliestCommitToRetain()) && HoodieTimeline.compareTimestamps(instant.getTimestamp(),

instant -> (HoodieTimeline.compareTimestamps(instant.getTimestamp(), HoodieTimeline.GREATER_THAN_OR_EQUALS,
        cleanMetadata.getEarliestCommitToRetain())
    || (instant.getMarkerFileModificationTimestamp().isPresent()
```


I don't think this condition is needed for commits that are not replacecommits.


It is there to guarantee compatibility: other new kinds of instants may also need to be cleaned later.


If an out-of-order replace commit finished before the clean started, and its instant time is before the earliest commit to retain, it won't be cleaned and will be left in the timeline. The archiver will then archive it, since its last modified time is earlier than the last clean in the timeline. What do you think?


You are right, it still won't clean the clustering instant in this scenario.
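This sketch reproduces the scenario against the retention condition from the diff excerpt. The excerpt cuts off the right-hand side of the modification-time comparison, so comparing against the last clean's start time is an assumption. The timestamps and the `RetentionPredicate` name are also hypothetical.

```java
import java.util.Optional;

final class RetentionPredicate {
  private RetentionPredicate() {}

  // Keep the instant in the active timeline if its instant time is at or after the
  // clean's earliest commit to retain, or if its instant file was modified at or
  // after the last clean started (assumed comparison target).
  static boolean mustRetain(String instantTime, Optional<String> modificationTime,
                            String earliestCommitToRetain, String lastCleanStartTime) {
    return instantTime.compareTo(earliestCommitToRetain) >= 0
        || modificationTime.map(t -> t.compareTo(lastCleanStartTime) >= 0).orElse(false);
  }
}
```

Take a replacecommit whose instant time is before the earliest commit to retain and whose modification time is before the last clean started. For it, `mustRetain` returns false, so the instant becomes archivable even though incremental cleaning never removed its replaced files.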

```java
HoodieActiveTimeline activeTimeline = metaClient.getActiveTimeline();
Option<HoodieInstant> firstCompletedClusteringInstant = activeTimeline.getCommitsTimeline().filterCompletedInstants()
    .filter(hoodieInstant -> hoodieInstant.getAction().equals(HoodieTimeline.REPLACE_COMMIT_ACTION)).firstInstant();
if (!firstCompletedClusteringInstant.isPresent()) {
```


If firstCompletedClusteringInstant isn't present, there is no need to get the latest clean time from the clean metadata.


Move it into the else branch.

```java
    table.getActiveTimeline(), config.getInlineCompactDeltaCommitMax())
    : Option.empty();

Option<HoodieInstant> earlestUnCleanCompletedInstant = CleanerUtils.getEarliestUnCleanCompletedInstant(metaClient);
```


Fixed

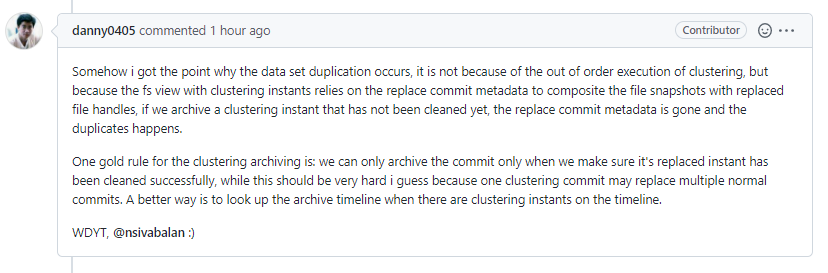

But this patch still doesn't consider the clustering completion time. If a previous clustering instant in the timeline completed later than the cleaned cutoff time of a following clean instant, there are still risks.


@danny0405, @zhuanshenbsj1, @stream2000, IMO, the clean operation should modify the WDYT?

We still need to confirm whether the clustering instant has been cleaned when archiving.


@zhuanshenbsj1, +1. I have modified the PTAL.


@danny0405, IMO this pull request could be closed, because #7568 could replace it.


Closing this PR as we have PR #7568.

Change Logs

Refer to #7401 (closed accidentally).

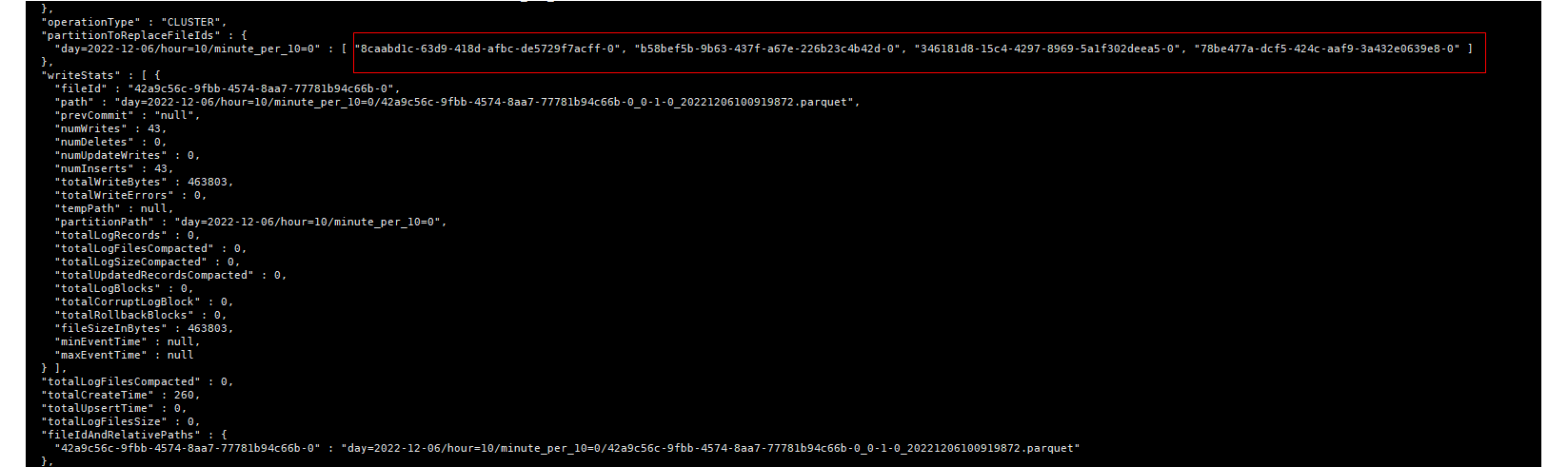

In some scenarios, such as offline clustering or online synchronous clustering (parallel), later plans can complete before earlier plans. If a later plan belongs to the next partition and cleaning catches up with it, the incremental cleaning mode (getPartitionPathsForIncrementalCleaning) ignores the earlier plans, which eventually leads to duplicate data.

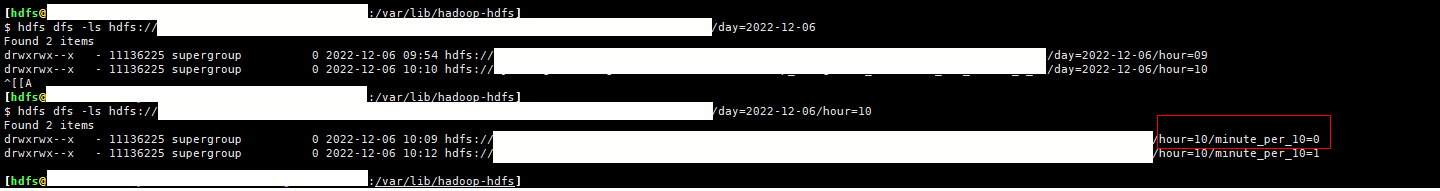

Partitions: day=2022-12-06/hour=10/minute_per_10=0, day=2022-12-06/hour=10/minute_per_10=1

Cleaning catches up with the clustering that belongs to partition day=2022-12-06/hour=10/minute_per_10=1.
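The following is a simplified model of how the earlier plan gets skipped. It assumes incremental cleaning scans only completed instants whose instant time falls in `[previousRetain, newRetain)`, roughly the window between the previous clean's earliest commit to retain and the new one. The real getPartitionPathsForIncrementalCleaning takes more inputs into account. `IncrementalCleaningModel` and all timestamps are hypothetical; the partition names are the ones above.

```java
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

final class IncrementalCleaningModel {
  private IncrementalCleaningModel() {}

  // Hypothetical completed write: its instant time and the partition it touched.
  static final class CompletedWrite {
    final String instantTime;
    final String partition;

    CompletedWrite(String instantTime, String partition) {
      this.instantTime = instantTime;
      this.partition = partition;
    }
  }

  // Partitions an incremental clean would scan in this model: only completed writes
  // whose instant time lies in [previousRetain, newRetain). A write that completes
  // later with an instant time before previousRetain is never revisited.
  static Set<String> partitionsToScan(List<CompletedWrite> completed,
                                      String previousRetain, String newRetain) {
    Set<String> partitions = new TreeSet<>();
    for (CompletedWrite w : completed) {
      if (w.instantTime.compareTo(previousRetain) >= 0 && w.instantTime.compareTo(newRetain) < 0) {
        partitions.add(w.partition);
      }
    }
    return partitions;
  }
}
```

In this model, the first clean runs while only the later plan has completed, so it scans only `minute_per_10=1`. By the time the earlier plan completes, its instant time is already behind the retain point, so `minute_per_10=0` is never scanned again.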

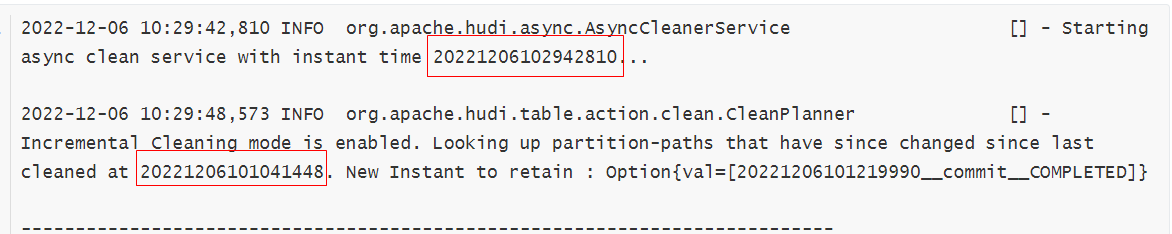

Log:

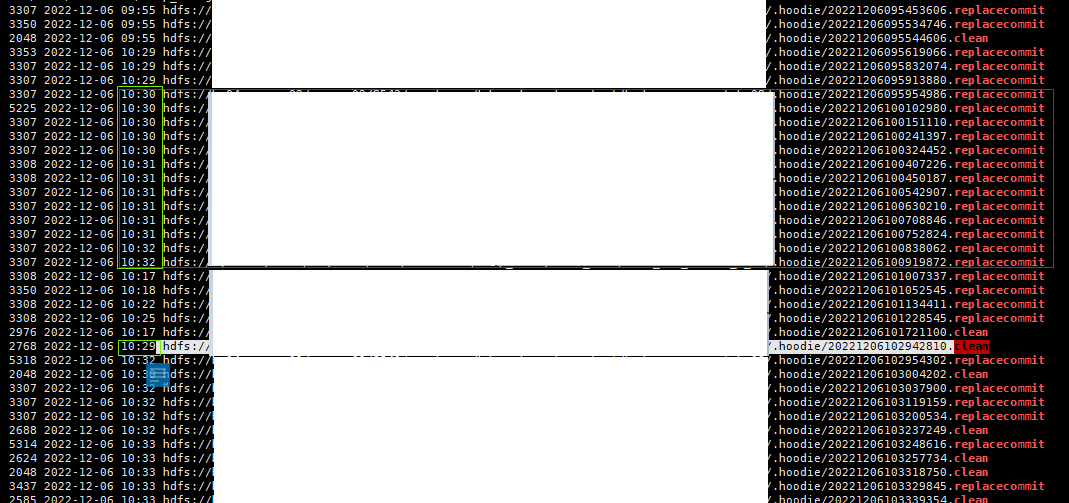

Instants:

The files in the previous clustering plan, which belong to partition 2022-12-06/hour=10/minute_per_10=0, will never be cleaned, and this causes duplicate data once the instants are archived.

Impact


Risk level


Documentation Update



Contributor's checklist