[HUDI-5341] IncrementalCleaning consider later clustering #7401

Change Logs

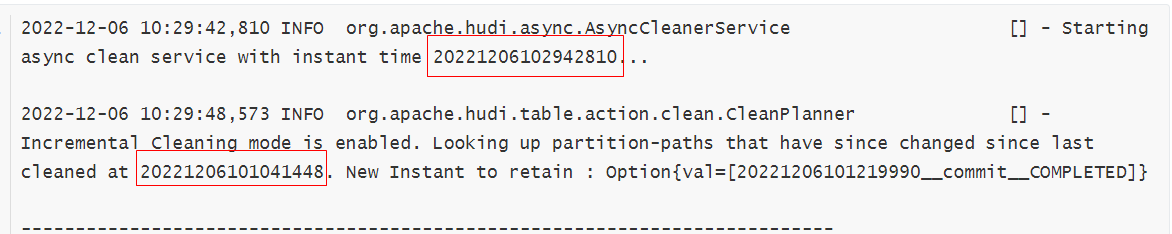

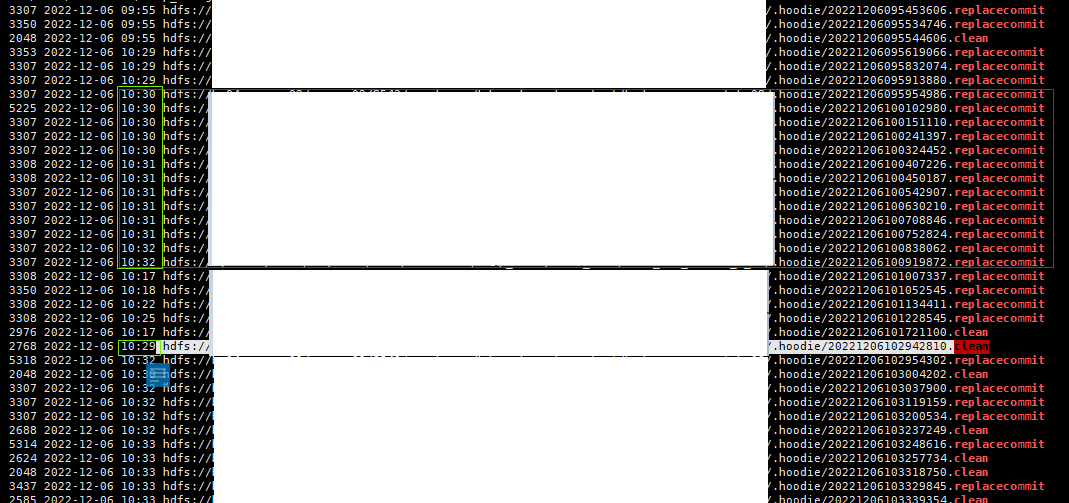

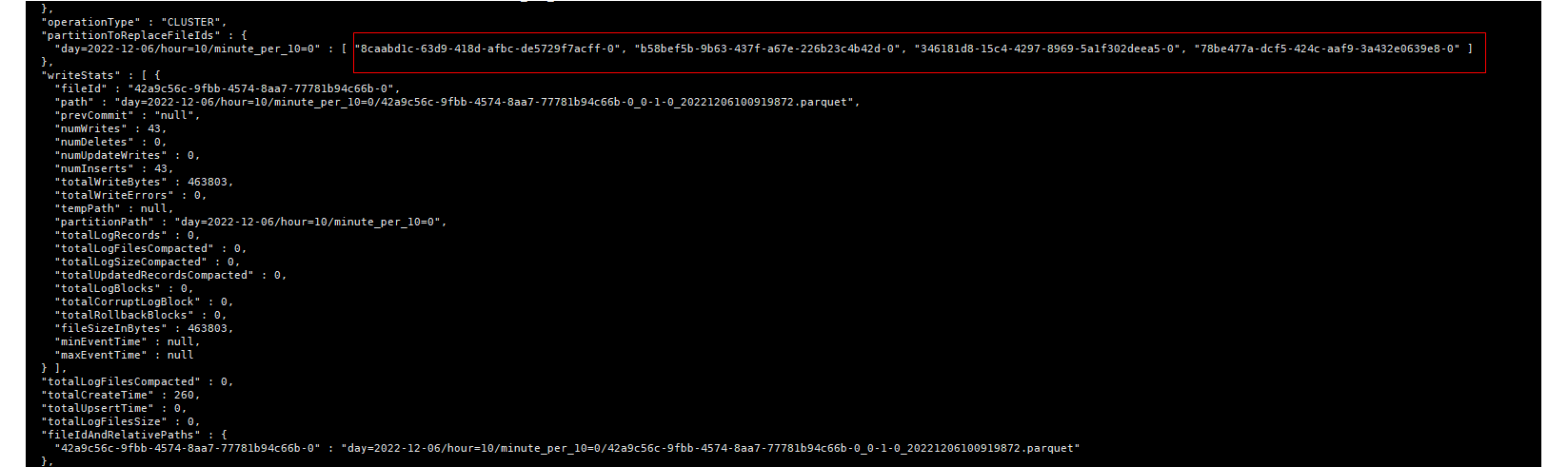

In some scenarios, such as offline clustering or online synchronous clustering (parallel), a later clustering plan can complete before an earlier one. If the later plan belongs to the next partition and the cleaner catches up with it, incremental cleaning mode (`getPartitionPathsForIncrementalCleaning`) ignores the earlier plans, which eventually leads to duplicate data.
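The skip can be reproduced with a toy model. This is a hypothetical simplification, not Hudi's `CleanPlanner` code. It rests on two assumptions:

- Incremental cleaning scans only completed instants whose instant time lies after the previous clean's retain boundary and at or before the new one.
- The boundary has already caught up with the later plan, as described above.

`Instant`, `incrementalPartitions`, `fullPartitions` and `runScenario` are names invented for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical, simplified model of incremental clean planning; not Hudi's actual API.
public class IncrementalCleaningModel {

    // One instant on the timeline: its instant time, the partition it rewrote, and whether it has completed.
    static final class Instant {
        final String time;
        final String partition;
        boolean completed;

        Instant(String time, String partition, boolean completed) {
            this.time = time;
            this.partition = partition;
            this.completed = completed;
        }
    }

    // Incremental mode (assumed semantics): scan completed instants with prevRetain < time <= newRetain.
    static Set<String> incrementalPartitions(List<Instant> timeline, String prevRetain, String newRetain) {
        Set<String> partitions = new TreeSet<>();
        for (Instant instant : timeline) {
            boolean inRange = instant.time.compareTo(prevRetain) > 0 && instant.time.compareTo(newRetain) <= 0;
            if (instant.completed && inRange) {
                partitions.add(instant.partition);
            }
        }
        return partitions;
    }

    // Full mode: scan the partition of every completed instant, whatever its instant time.
    static Set<String> fullPartitions(List<Instant> timeline) {
        Set<String> partitions = new TreeSet<>();
        for (Instant instant : timeline) {
            if (instant.completed) {
                partitions.add(instant.partition);
            }
        }
        return partitions;
    }

    // Replays the out-of-order completion and returns [clean #1, clean #2, full scan].
    static List<Set<String>> runScenario() {
        List<Instant> timeline = new ArrayList<>();
        Instant earlier = new Instant("001", "minute_per_10=0", false); // earlier plan, still running
        timeline.add(earlier);
        timeline.add(new Instant("002", "minute_per_10=1", true));      // later plan, finished first

        List<Set<String>> results = new ArrayList<>();
        // Clean #1: the retain boundary has caught up with the later plan (002).
        results.add(incrementalPartitions(timeline, "000", "002"));

        // The earlier plan completes only after clean #1; another commit lands afterwards.
        earlier.completed = true;
        timeline.add(new Instant("003", "minute_per_10=1", true));

        // Clean #2 scans (002, 003]; instant 001 falls below the range.
        results.add(incrementalPartitions(timeline, "002", "003"));
        results.add(fullPartitions(timeline));
        return results;
    }

    public static void main(String[] args) {
        List<Set<String>> results = runScenario();
        System.out.println("clean #1:  " + results.get(0));
        System.out.println("clean #2:  " + results.get(1));
        System.out.println("full scan: " + results.get(2));
    }
}
```

Under these assumptions clean #2 returns only `[minute_per_10=1]`. A full scan also returns `minute_per_10=0`, the partition that holds the earlier plan's replaced files.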

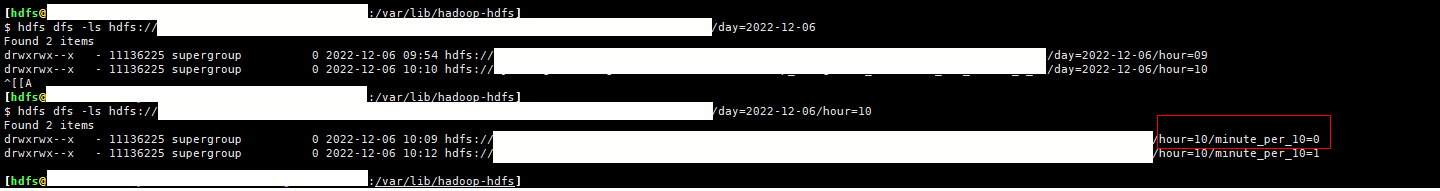

Partitions: day=2022-12-06/hour=10/minute_per_10=0, day=2022-12-06/hour=10/minute_per_10=1

The cleaner caught up with the clustering plan belonging to partition day=2022-12-06/hour=10/minute_per_10=1.

log:

instant:

The files in the earlier clustering plan, which belong to partition 2022-12-06/hour=10/minute_per_10=0, are never cleaned. Once the instants are archived, they cause duplicate data.
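A second hypothetical model shows why the leftover files turn into duplicates after archival. This is again not Hudi's file-system-view code. Readers are assumed to see every file group on storage except those listed as replaced by replacecommits that are still on the active timeline. The file-group IDs `fg-a`, `fg-b` and `fg-c` are made up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical, simplified model of replaced-file-group filtering; not Hudi's file system view API.
public class ReplacedFileGroupModel {

    // Visible = file groups on storage minus those replaced by replacecommits still on the active timeline.
    static Set<String> visibleFileGroups(Set<String> onStorage, Map<String, Set<String>> activeReplaceCommits) {
        Set<String> visible = new TreeSet<>(onStorage);
        for (Set<String> replaced : activeReplaceCommits.values()) {
            visible.removeAll(replaced);
        }
        return visible;
    }

    // Returns [before archival, after archival without cleaning, after archival with cleaning].
    static List<Set<String>> runScenario() {
        // Clustering instant 001 rewrote fg-a and fg-b (partition minute_per_10=0) into fg-c.
        Set<String> onStorage = new TreeSet<>(List.of("fg-a", "fg-b", "fg-c"));
        Map<String, Set<String>> activeReplaceCommits = new TreeMap<>();
        activeReplaceCommits.put("001", Set.of("fg-a", "fg-b"));

        List<Set<String>> results = new ArrayList<>();
        results.add(visibleFileGroups(onStorage, activeReplaceCommits));

        // The cleaner never scanned minute_per_10=0, so fg-a and fg-b remain; then 001 is archived.
        activeReplaceCommits.remove("001");
        results.add(visibleFileGroups(onStorage, activeReplaceCommits));

        // For comparison: storage as it would look had the cleaner deleted the replaced file groups.
        Set<String> cleanedStorage = new TreeSet<>(List.of("fg-c"));
        results.add(visibleFileGroups(cleanedStorage, activeReplaceCommits));
        return results;
    }

    public static void main(String[] args) {
        List<Set<String>> results = runScenario();
        System.out.println("before archival:       " + results.get(0));
        System.out.println("archived, not cleaned: " + results.get(1));
        System.out.println("archived, cleaned:     " + results.get(2));
    }
}
```

In the "archived, not cleaned" case, `fg-a`, `fg-b` and `fg-c` are all visible. Records that clustering copied into `fg-c` are therefore read twice.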

Impact

Risk level (write none, low medium or high below)

Documentation Update

Contributor's checklist