Conversation

|

@isovector , I've pushed an empty commit to trigger the CI pipelines. I hope you don't mind. |

|

@mdimjasevic not at all, thanks for being on top of it |

|

Looks like the redis thread isn't gracefully shutting down. |

| conn <- connectLowLevel | ||

| Log.info l $ Log.msg (Log.val "successfully connected to Redis") | ||

|

|

||

| reconnectOnce <- once . retry $ reconnectRedis robustConnection -- avoid concurrent attempts to reconnect |

There was a problem hiding this comment.

Essentially you say, once was not working? If once was working, there should never have been a race for updating the MVar. Or rather, the MVar would be swapped several times which could have been optimised by putting swapMVar below once. Or is there more to it which I haven't grasped in this case?

There was a problem hiding this comment.

What's bugging me is that I don't see the memory leak. After all pending Redis actions went through the (admittedly redundant) swapMVar, no memory should be retained. Though, I do see that it seems fixed by this PR.

There was a problem hiding this comment.

I also don't see it, which is why I spent 16 hours digging through heap profiles before I tried changing the code 😊 . My best guess is that once isn't safe in a concurrent environment. It's implemented (indirectly) via modifyMVar, which comes with this warning:

This function is only atomic if there are no other producers for this MVar. In other words, it cannot guarantee that, by the time modifyMVar_ gets the chance to write to the MVar, the value of the MVar has not been altered by a write operation from another thread.

which makes me think once is indeed the culprit. But I don't know!

The other difference between the two logics is that HEAD will still call runRedis in runRobust on a known-bad connection, and it could be some of the janky pipelining in hedis is responsible for leaking the stacks here.

|

@jschaul asked me:

The results are here: which responds with |

|

@jschaul @stefanwire this is ready to merge, but I don't have the necessary bits. |

Thank you, looks fine to me, as expected. |

|

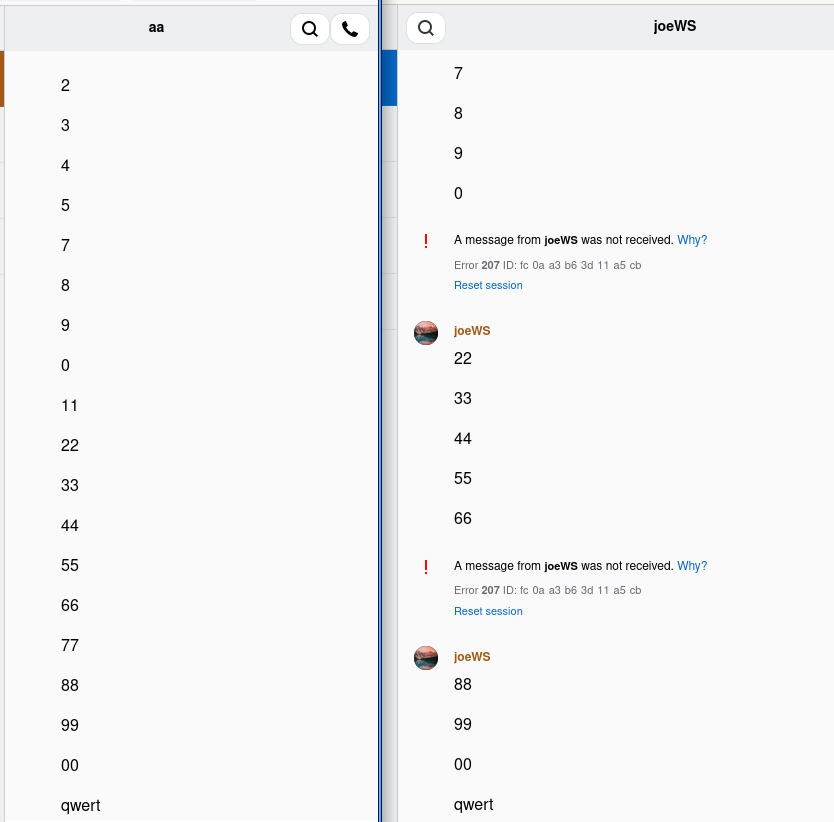

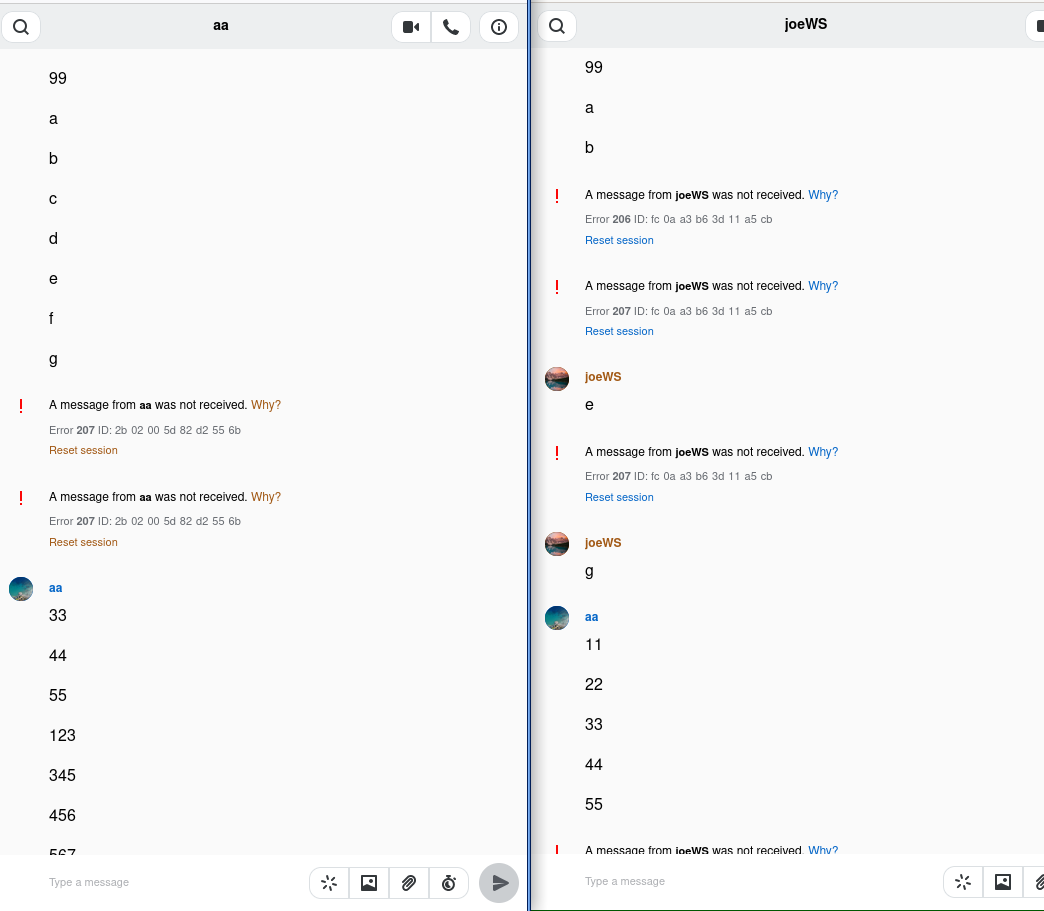

I ran one more manual test on our staging environment.

Current behaviour (on staging): And after deploying this patch: Message loss for end users, on web (error 207) occurs right now when redis is restarted; and it also occurs after this patch. Possibly messages are not delivered at all; or they are delivered out-of-order, in both cases they end up not being decryptable/visible to the end user. So we still have an operational problem here with redis & hedis & gundeck & cannon. As this patch does not seem to significantly make this problem worse (in the end, things recover; even if intermediate messages are lost), and it may fix a memory leak, let's still get this improvement in. The retry logic here; or the general architecture about websockets needs some improvement though at some point. |

This PR fixes a memory leak in gundeck caused by its reconnection logic when Redis is down. The heap before this PR:

and after:

Each spike here is a series of requests sent to gundeck while redis is down; the resulting taper-off in both charts is when redis comes back online.

It's unclear exactly what is causing this behavior in HEAD, but the original logic is highly suspicious. An

MVaris created with the redis connection and is always assumed to be full. Subsequent requests to Redis then catch any exceptions they might see, and trigger a reconnection event, where they spin, attempting to reconnect. Only one thread may spin on this reconnection event, but the others are all blocked on it, and all will be in contention to update theMVar--- ironically, they will all be attempting to update it to the same value. My assumption is the old, bad behavior is caused by new threads attempting to talk to Redis, even though the application knows the connection has been lost, and then jumping in to do pointless work. Due to theMVarcontention, all threads will keep the expensiveConnectionobject on the stack attempting to update the value.Furthermore, the problem is exacerbated by nothing pining redis, so by the time we discover it's down, we already have work we'd like to accomplish.

This PR instead creates a new thread who is responsible for maintaining the connection to redis. It pings redis every second, and if that ever fails, it immediately empties the

MVar Connection, so that other requests to Redis will block until it has been refilled. This new thread will then spin, attempting to reconnect to redis, and will fill theMVaras soon as it's back online, which will unblock the other threads. This makes it extremely clear who is responsible for what, and prevents the weird recursiveretryloops andMVarupdate races.Additionally, you'll notice a ~140MB allocation spike at the beginning of each new reconnection in the new logic. This is a caused by insane stupidity in hedis' implementation that I found while diagnosing this problem. It wouldn't be very much work to fix if we're at all concerned about it.

My debugging trials and tribulations are documented here if anyone is interested in all the things that didn't help in diagnosing this problem :)

Checklist

changelog.d