
Auto merge of rust-lang#97585 - lqd:const-alloc-intern, r=RalfJung

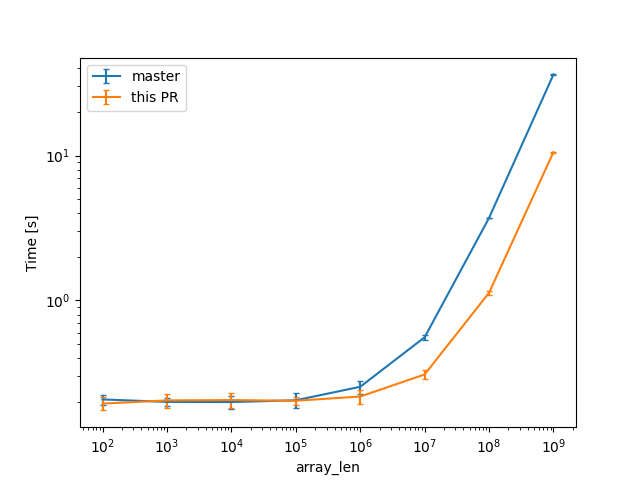

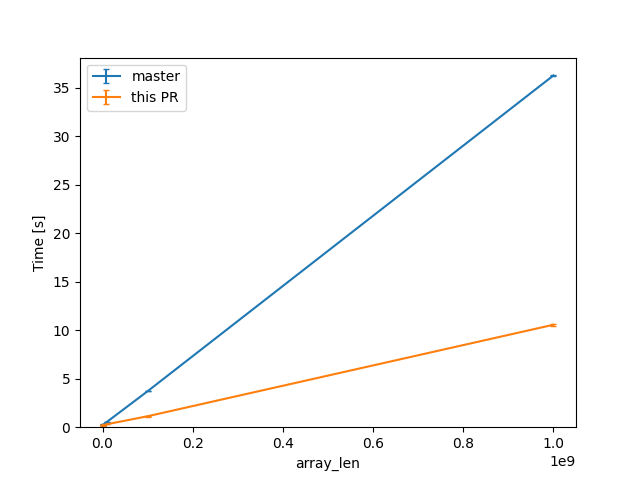

CTFE interning: don't walk allocations that don't need it

The interning of const allocations visits the mplace looking for references to intern. Walking big aggregates like big static arrays can be costly, so we only do it if the allocation we're interning contains references or interior mutability.

Walking ZSTs was avoided before, and this optimization is now applied to cases where there are no references/relocations either.

---

While initially looking at this in the context of rust-lang#93215, I've been testing with smaller allocations than the 16GB one in that issue, and with different init/uninit patterns (esp. via padding).

In that example, by default, `eval_to_allocation_raw` is the heaviest query followed by `incr_comp_serialize_result_cache`. So I'll show numbers when incremental compilation is disabled, to focus on the const allocations themselves at 95% of the compilation time, at bigger array sizes on these minimal examples like `static ARRAY: [u64; LEN] = [0; LEN];`.

That is a close construction to parts of the `ctfe-stress-test-5` benchmark, which has const allocations in the megabytes, while most crates usually have way smaller ones. This PR will have the most impact in these situations, as the walk during the interning starts to dominate the runtime.

Unicode crates (some of which are present in our benchmarks) like `ucd`, `encoding_rs`, etc come to mind as having bigger than usual allocations as well, because of big tables of code points (in the hundreds of KB, so still an order of magnitude or 2 less than the stress test).
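As a rough illustration of the check described above, here is a minimal sketch in Rust. It is a simplified model, not rustc's actual code: the `Allocation` struct, its fields, and the `needs_walk` function are hypothetical names invented for this example, and the interior-mutability flag is assumed to come from type information that the sketch does not model.

```rust
use std::collections::BTreeMap;

/// Hypothetical, simplified stand-in for a const allocation.
/// This is NOT rustc's real type; it only models what the check needs.
struct Allocation {
    /// Raw bytes of the allocation.
    bytes: Vec<u8>,
    /// Offsets in `bytes` that hold pointers to other allocations
    /// (the "relocations" mentioned in the description).
    relocations: BTreeMap<usize, u64>,
}

/// Returns `true` if interning has to visit the contents of `alloc`.
/// `may_have_interior_mut` is assumed to be derived from the value's type.
fn needs_walk(alloc: &Allocation, may_have_interior_mut: bool) -> bool {
    // Zero-sized values have nothing to visit; this case was already skipped.
    if alloc.bytes.is_empty() {
        return false;
    }
    // The new fast path: plain data without pointers or interior
    // mutability cannot contain references that need interning.
    !alloc.relocations.is_empty() || may_have_interior_mut
}
```

In this model, a large zero-filled array has an empty relocation map, so `needs_walk` returns `false` regardless of its length, which is the situation the benchmarks in this description exercise.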
In a check build, for a single static array shown above, from 100 to 10^9 u64s (for lengths in powers of ten), the constant factors are lowered:

(plot: log scales for easier comparisons)

(plot: linear scale for absolute diff at higher Ns)

For one of the alternatives of that issue

```rust
const ROWS: usize = 100_000;
const COLS: usize = 10_000;

static TWODARRAY: [[u128; COLS]; ROWS] = [[0; COLS]; ROWS];
```

we can see a similar reduction of around 3x (from 38s to 12s or so).

For the same size, the slowest case IIRC is when there are uninitialized bytes e.g. via padding

```rust
const ROWS: usize = 100_000;
const COLS: usize = 10_000;

static TWODARRAY: [[(u64, u8); COLS]; ROWS] = [[(0, 0); COLS]; ROWS];
```

then interning/walking does not dominate anymore (but means there is likely still some interesting work left to do here). Compile times in this case rise up quite a bit, and avoiding interning walks has less impact: around 23%, from 730s on master to 568s with this PR.
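To make the sizes in these two examples concrete, the following sketch computes the total allocation size and the per-element padding without creating the arrays. The helper names `total_bytes` and `padding_bytes` are made up for this illustration, and the exact layout of `(u64, u8)` is chosen by the compiler, so the padding amount is computed rather than assumed.

```rust
use std::mem::size_of;

const ROWS: usize = 100_000;
const COLS: usize = 10_000;

/// Total size in bytes of a `[[T; COLS]; ROWS]` static.
/// Only computes the number; nothing is allocated.
fn total_bytes<T>() -> u64 {
    size_of::<T>() as u64 * ROWS as u64 * COLS as u64
}

/// Bytes in each `(u64, u8)` element that belong to no field.
/// These are the uninitialized padding bytes of the second example.
fn padding_bytes() -> usize {
    size_of::<(u64, u8)>() - size_of::<u64>() - size_of::<u8>()
}
```

`total_bytes::<u128>()` evaluates to 16_000_000_000, which matches the 16GB allocation mentioned for the original issue. On targets where `u64` is 8-byte aligned, `(u64, u8)` occupies the same 16 bytes per element, so the second example is "the same size" while several bytes of every element are padding.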
