MSM tuning for high core count #227

Merged


This PR optimizes multi-scalar multiplication (MSM) for machines with a high core count.

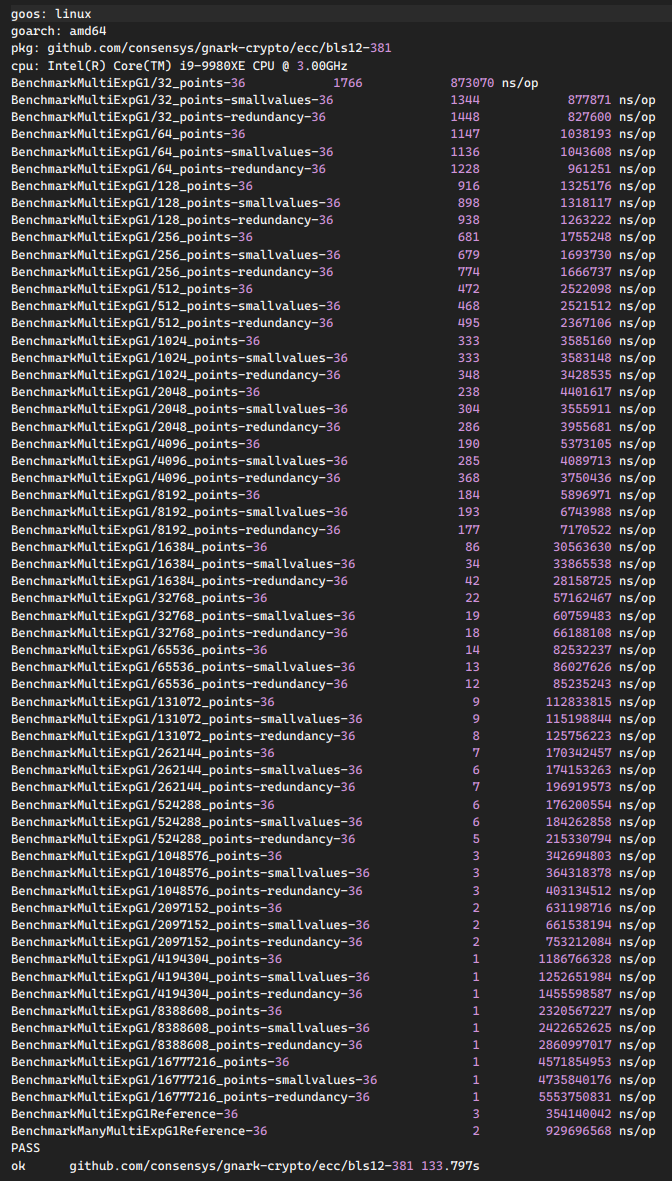

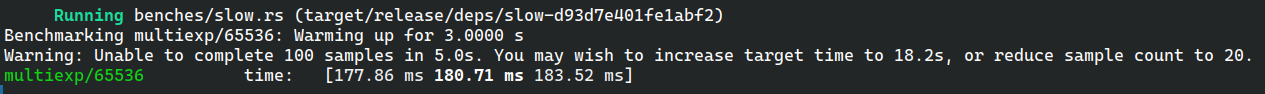

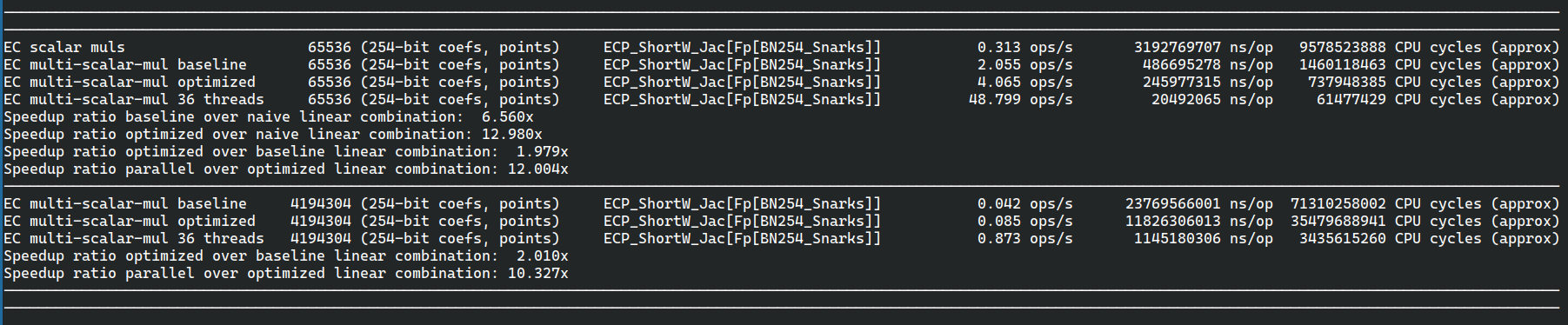

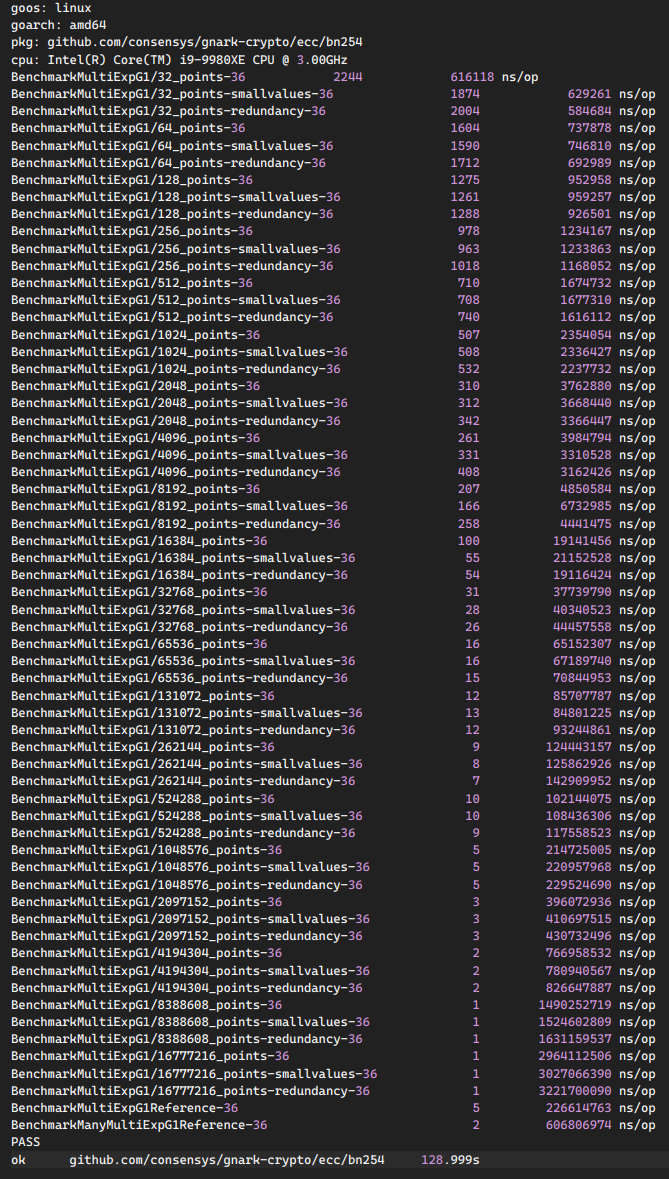

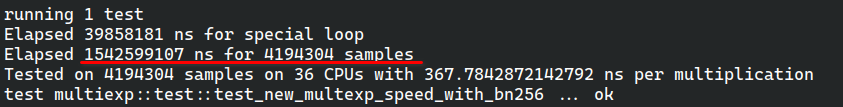

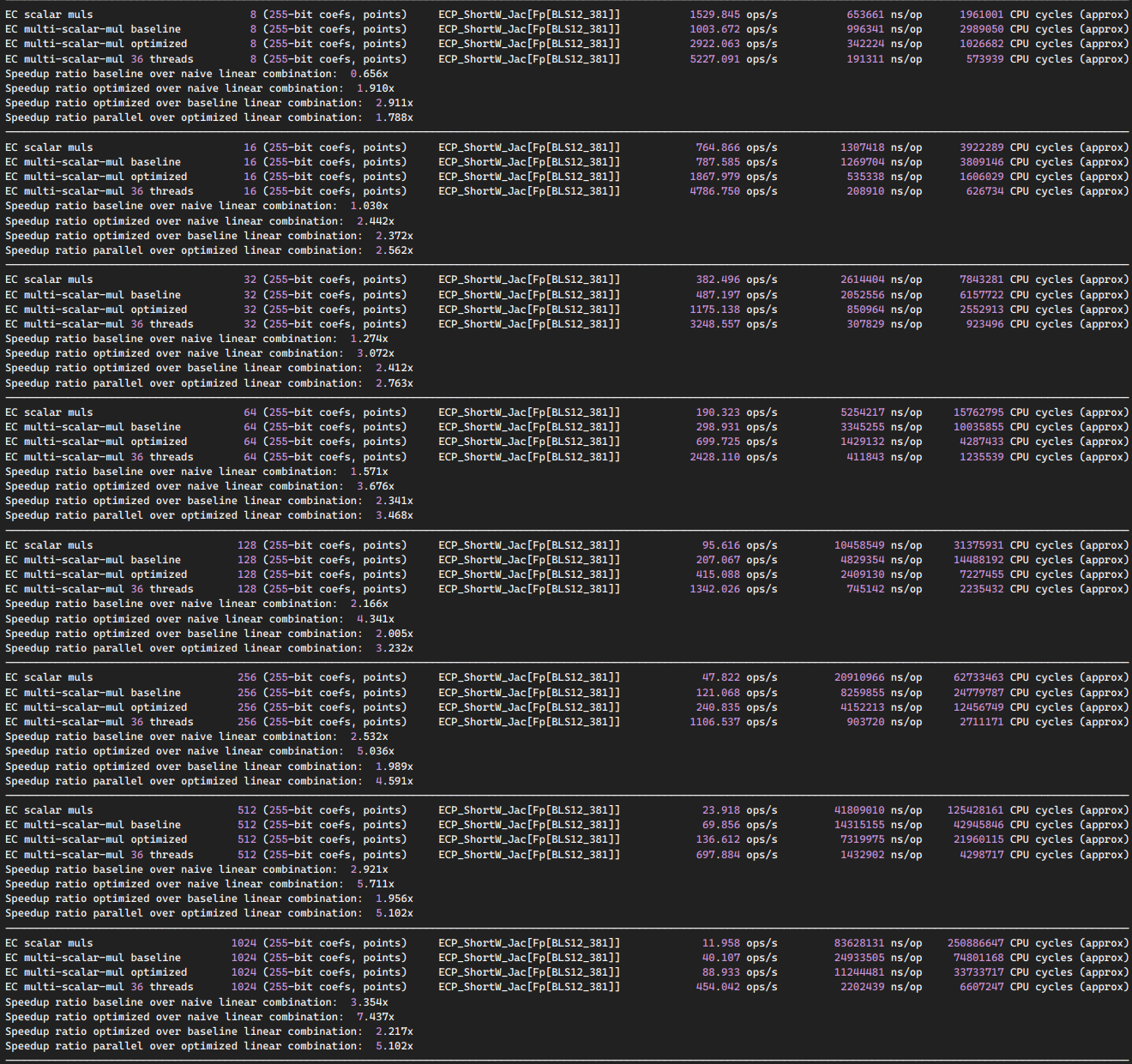

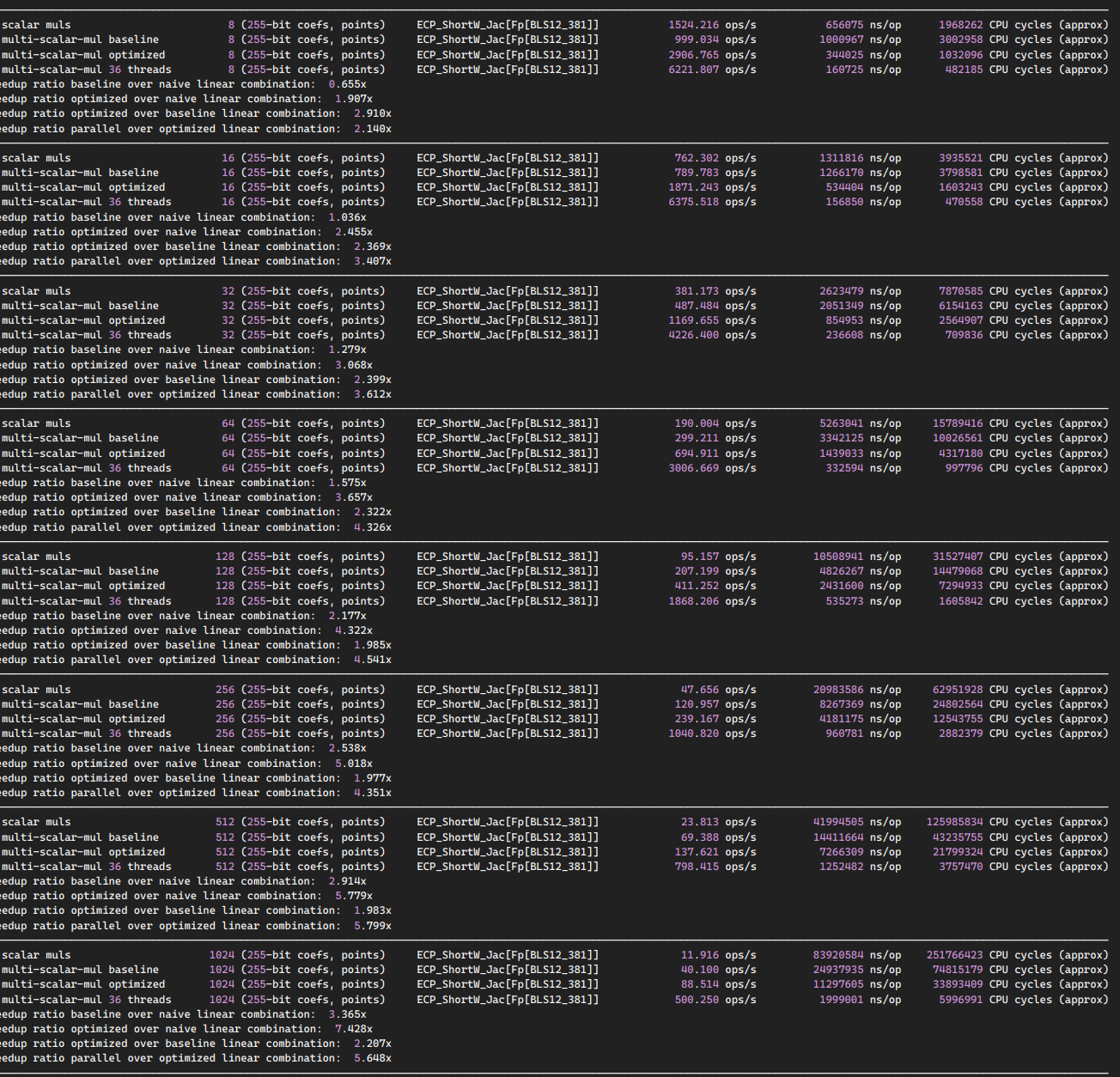

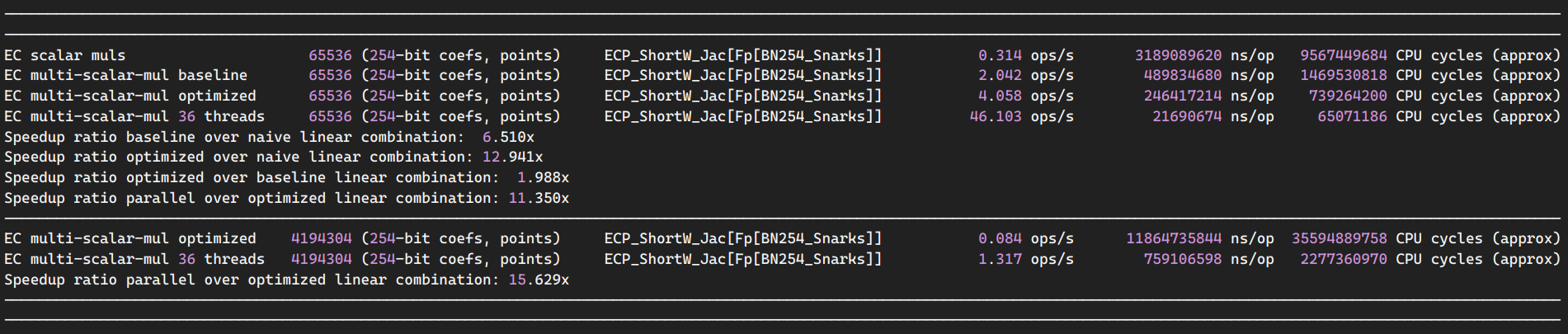

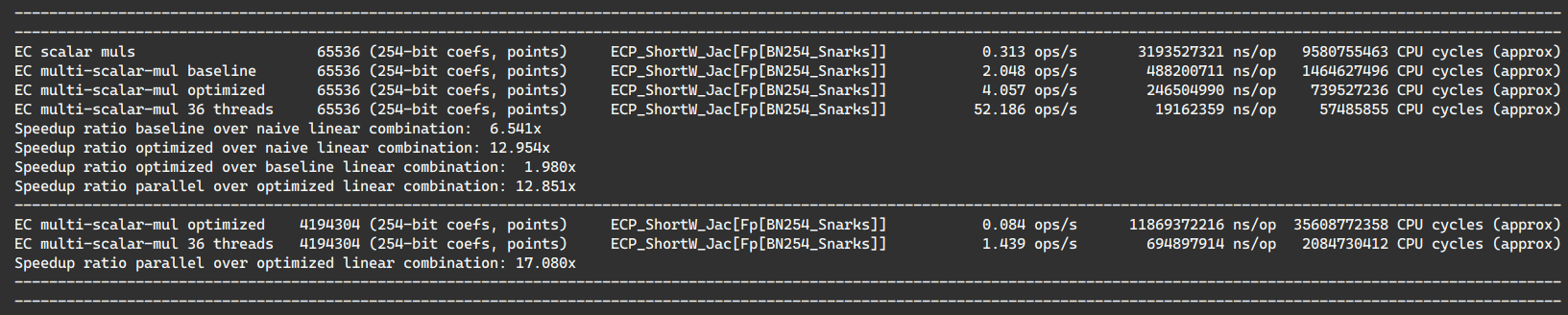

Benchmarked on an i9-9980XE (Skylake-X, 18 cores, overclocked and liquid-cooled, 4.1 GHz all-core turbo).

Features

Reentrancy / nested parallelism: a new precise barrier, `syncScope`, has been introduced to the threadpool. Contrary to `syncAll`, which can only be called from the root thread and therefore prevents nested parallelism, `syncScope` can be called from any thread. Hence parallel MSM and parallel sum reductions / batch additions can be called from within other parallel functions, for example in a ZK prover that needs to schedule multiple parallel MSMs concurrently.

Bug fix

The parallel speedup benchmark reported the performance ratio of the last iteration instead of the average over all iterations.

Given that most of Constantine is constant-time and the CPU was primed/hot, there was little variation between iterations, but still...
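A minimal illustration of the difference, assuming the benchmark collects per-iteration wall-clock timings for the serial and the parallel run. The function names and sample timings are hypothetical, not the benchmark's actual code, and the fixed version here averages the timings before taking the ratio (averaging the per-iteration ratios would be another reasonable choice).

```python
from statistics import mean


def speedup_last_iteration(serial_ns, parallel_ns):
    """Old (buggy) behaviour: only the final iteration's ratio is reported."""
    return serial_ns[-1] / parallel_ns[-1]


def speedup_average(serial_ns, parallel_ns):
    """Fixed behaviour: mean serial time divided by mean parallel time."""
    return mean(serial_ns) / mean(parallel_ns)


# Hypothetical timings in nanoseconds; the last parallel iteration is an outlier.
serial = [120, 120, 120, 120]
parallel = [10, 10, 10, 30]

print(speedup_last_iteration(serial, parallel))  # 4.0
print(speedup_average(serial, parallel))         # 8.0
```

With constant-time code on a warm CPU the two numbers are usually close, which is why the bug went mostly unnoticed; a single noisy final iteration is enough to skew the old metric.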

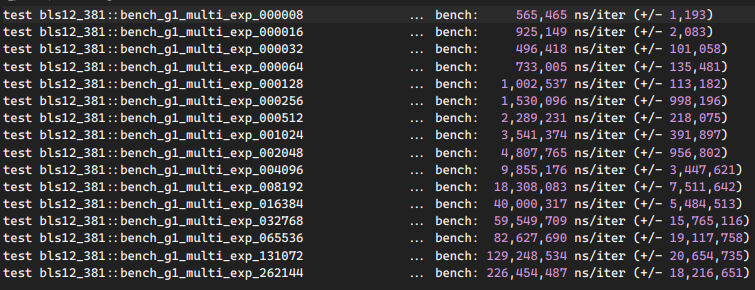

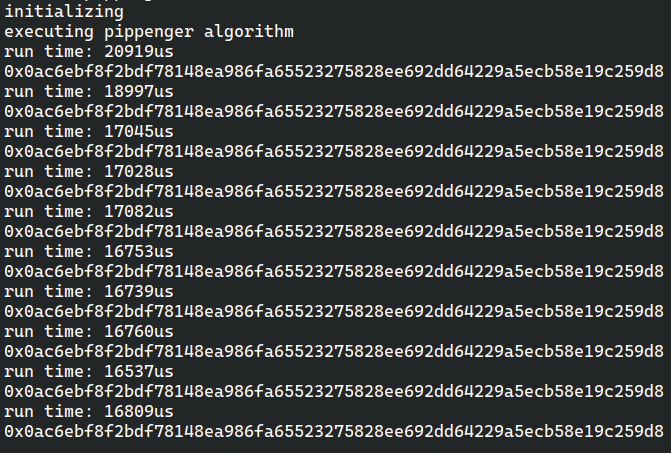

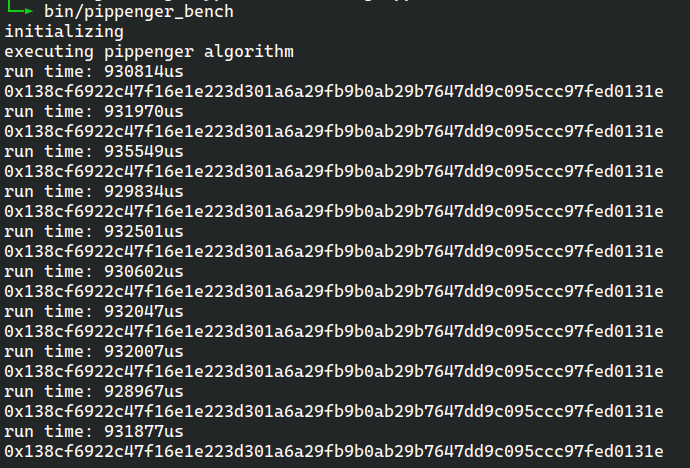

Before

After

Observations

Starting from 512 inputs, core utilization improves by 50% to 150% (yes, up to 2.5x). Note that, for some reason, on 8 cores the previous strategy for 512 inputs showed over 7x speedup while the new strategy only provides 5.5x. We chose to prioritize scaling on high core counts.
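For reference, the arithmetic behind these figures, with $S$ the multithreaded speedup and $p$ the number of cores. Parallel efficiency $E = S/p$ is a framing added here for comparison, not a metric the benchmark reports.

$$
\frac{S_{\text{new}}}{S_{\text{old}}} - 1 = 150\% \;\Longrightarrow\; S_{\text{new}} = 2.5\,S_{\text{old}}
$$

$$
E = \frac{S}{p}: \qquad E_{\text{old}}(p = 8) > \frac{7}{8} = 0.875, \qquad E_{\text{new}}(p = 8) = \frac{5.5}{8} \approx 0.69
$$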

At the top of our benchmark range, 262144 inputs (2^18), the multithreading speedup is over 15x instead of just 11x previously.
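As a rough reading of these numbers, Amdahl's Law models the speedup on $p$ cores as $S = 1 / (f + (1 - f)/p)$, where $f$ is the serial fraction of the work; inverting it gives the $f$ implied by a measured speedup. This sketch assumes $p = 18$ (one thread per physical core) and attributes every loss to serial work, ignoring scheduling overhead, memory bandwidth and frequency effects, so treat the output as a back-of-the-envelope estimate. Since the new speedup is "over 15x", the serial fraction computed for it is an upper bound.

```python
def amdahl_speedup(serial_fraction: float, cores: int) -> float:
    """Amdahl's Law: S(p) = 1 / (f + (1 - f) / p)."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / cores)


def implied_serial_fraction(speedup: float, cores: int) -> float:
    """Solve Amdahl's Law for f given a measured speedup S on p cores:
    f = (p / S - 1) / (p - 1)."""
    return (cores / speedup - 1.0) / (cores - 1.0)


if __name__ == "__main__":
    cores = 18  # assumption: one thread per physical core
    for measured in (11.0, 15.0):  # speedups at 2^18 inputs, before and after
        f = implied_serial_fraction(measured, cores)
        print(f"S={measured:>4}: serial fraction ~{f:.2%}, "
              f"ceiling with unlimited cores ~{1 / f:.0f}x")
```

Under this model, going from 11x to 15x corresponds to shrinking the effective serial fraction from roughly 3.7% to roughly 1.2%, and the remaining serial part caps the speedup no matter how many cores are added.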

We may be reaching the limit imposed by Amdahl's Law: going further might require refactoring the algorithm, as the serial reductions could become the bottleneck.
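To make the `syncScope` versus `syncAll` distinction from the Features section concrete, here is a minimal Python sketch. It is not Constantine's implementation (Constantine is written in Nim): `ThreadPool`, `Scope`, `spawn` and `sync` are hypothetical names, and a single shared FIFO queue stands in for a real work-stealing scheduler. The scoped `sync` waits only for the tasks spawned through its own scope and, while waiting, runs queued tasks itself, so a worker thread can call it from inside a task. A global barrier that waits for the whole pool to go idle could not be called from a worker, since that worker is itself busy and would end up waiting on itself.

```python
import threading
from collections import deque


class Task:
    """A unit of work that belongs to exactly one Scope."""

    def __init__(self, fn, args, scope):
        self.fn, self.args, self.scope = fn, args, scope
        self.result = None

    def run(self):
        try:
            self.result = self.fn(*self.args)
        finally:  # decrement even if fn raises, so sync() cannot hang
            pool = self.scope.pool
            with pool.cv:
                self.scope.pending -= 1
                pool.cv.notify_all()  # wake threads blocked in Scope.sync


class Scope:
    """Hypothetical scoped barrier: sync() waits only for tasks spawned here."""

    def __init__(self, pool):
        self.pool = pool
        self.pending = 0

    def spawn(self, fn, *args):
        task = Task(fn, args, self)
        with self.pool.cv:
            self.pending += 1
            self.pool.queue.append(task)
            self.pool.cv.notify_all()
        return task

    def sync(self):
        # Callable from any thread, including a worker inside a task:
        # instead of idling, the caller runs queued tasks until its own
        # scope has nothing pending.
        pool = self.pool
        while True:
            with pool.cv:
                if self.pending == 0:
                    return
                if not pool.queue:
                    pool.cv.wait()  # remaining tasks run on other threads
                    continue
                task = pool.queue.popleft()
            task.run()


class ThreadPool:
    """Toy pool with one shared FIFO queue (real pools use work-stealing)."""

    def __init__(self, num_threads):
        self.queue = deque()
        self.cv = threading.Condition()
        self.stopping = False
        self.threads = [threading.Thread(target=self._worker_loop, daemon=True)
                        for _ in range(num_threads)]
        for t in self.threads:
            t.start()

    def _worker_loop(self):
        while True:
            with self.cv:
                while not self.queue and not self.stopping:
                    self.cv.wait()
                if not self.queue:  # stopping and nothing left to run
                    return
                task = self.queue.popleft()
            task.run()

    def scope(self):
        return Scope(self)

    def shutdown(self):
        with self.cv:
            self.stopping = True
            self.cv.notify_all()
        for t in self.threads:
            t.join()


def parallel_sum(pool, xs, chunk=4):
    """Inner parallel reduction; may itself run inside a pool task."""
    scope = pool.scope()
    parts = [scope.spawn(sum, xs[i:i + chunk]) for i in range(0, len(xs), chunk)]
    scope.sync()
    return sum(p.result for p in parts)


def sum_of_rows(pool, rows):
    """Outer parallel loop whose tasks each launch a nested parallel_sum."""
    scope = pool.scope()
    tasks = [scope.spawn(parallel_sum, pool, row) for row in rows]
    scope.sync()
    return [t.result for t in tasks]


if __name__ == "__main__":
    pool = ThreadPool(2)
    print(sum_of_rows(pool, [list(range(10)), list(range(100))]))
    pool.shutdown()
```

Here `sum_of_rows` plays the role of the outer parallel job (say, a prover) and `parallel_sum` the inner parallel reduction (say, an MSM). Letting the waiting thread execute queued work is what rules out deadlock in this sketch: a blocked `sync` only ever waits on tasks that started after the task it belongs to, so a chain of waits cannot loop back on itself.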