Yolo classifier + AI - stick ( movidius, laceli ) #1505

Comments

|

This is very interesting. I've been looking for ways to add some sort of AI to enhance motion detection. I found someone using the Pi GPU. |

Movement => Classify Continuous classification |

|

The sample code from here: uses MobileNet SSD (I believe from here: https://github.com/chuanqi305/MobileNet-SSD) and a PiCamera module to do about 5 fps on a Pi 3 with a Movidius NCS.

I've modified the PyImageSearch sample code to get images via MQTT instead of the PiCamera video stream and then run object detection on them. If a "person" is detected I write out the detected file, which will ultimately get pushed to my cell phone in a way yet TBD.

I've also written a simple node-red flow, running on the same Pi3 as the NCS, that presents an ftp server and sends the image files to the NCS detection script. The Pi3 also runs the MQTT server and node-red. I then configured motioneyeOS on a PiZeroW to ftp its motion images to the Pi3 node-red ftp server. It's working great and has been running all afternoon.

Since virtually all security DVRs and netcams can ftp their detected images, I think this system has great generality and could produce a system worthy of a high priority push notification, since the false positive rate will be near zero. I plan to put it up on github soon, but it will be my first github project attempt so it might take me longer than I'd like.

Running the "Fast Netcam" or v4l2 MJPEG streams into the neural network instead of "snapshots" might be even better, but the FLIR Lorex security DVR I have uses proprietary protocols, so ftp'd snapshots are what I used. There is a lot of ugly code to support the lameness of my DVR, so after I got it working (it has been running for three days now) I simplified things for this simple test system, which I plan to share as a starting point project for integrating AI with video motion detection to greatly lower the false alarm rate.

To suggest an enhancement to motioneye, I'd like to see an option for it to push jpegs directly to an MQTT server instead of ftp.

I don't think video motion detection like motioneye is obsolete; it makes a great front-end to reduce the load on the network and AI subsystem, letting more cameras be handled with less hardware.

Edit: Been running for over 8 hours now. I've also configured the Pi3 as a wifi AP with motioneyeOS connected to it, so I have a stand-alone system with only two parts. There have been 869 frames detected as "motion frames"; only 352 had a "person" detected by the AI. Looking at a slide show of the detected images I saw no false positives, so over 50% of the motion frames (517 of 869) would have been false alarms and very annoying if Emailed. I was testing the system, so the number of real events was a lot higher than would normally be the case. So far complete immunity from shadows, reflections, etc. I think this has great potential! |
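The "only keep frames with a person" step described above can be sketched roughly as follows. This is a minimal illustration, not the poster's script: the tuple layout of `detections`, the function names and the 0.5 threshold are assumptions made for the example. The label list is the 20-class VOC list used with the Caffe MobileNet-SSD model, where "person" is index 15.

```python
# Class labels of the 20-class VOC MobileNet-SSD model; "person" is index 15.
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat", "bottle",
           "bus", "car", "cat", "chair", "cow", "diningtable", "dog",
           "horse", "motorbike", "person", "pottedplant", "sheep", "sofa",
           "train", "tvmonitor"]


def person_detections(detections, min_confidence=0.5):
    """Return only the detections labelled "person" at or above the threshold.

    `detections` is assumed to be an iterable of (class_id, confidence, box)
    tuples already decoded from the network output (hypothetical layout).
    """
    person_id = CLASSES.index("person")
    return [d for d in detections
            if d[0] == person_id and d[1] >= min_confidence]


def should_notify(detections, min_confidence=0.5):
    """True when at least one confident "person" is present in the frame."""
    return len(person_detections(detections, min_confidence)) > 0


# Made-up detections for one motion snapshot: a dog and two person candidates.
snapshot = [(12, 0.91, (10, 20, 80, 90)),
            (15, 0.42, (100, 40, 160, 200)),
            (15, 0.88, (200, 30, 260, 210))]
print(should_notify(snapshot))
```

A motion snapshot that only contains a confident "dog" would be dropped by this filter, which is the behaviour that suppresses most motion-only false alarms.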

|

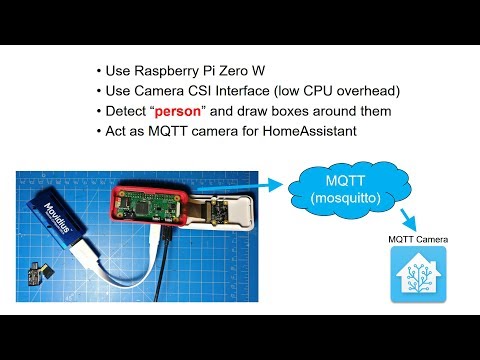

Here is my attempt at something similar: https://github.com/debsahu/PiCamMovidius |

|

This is basically the demo I started with from the PyImageSearch tutorial, except it left out the MQTT and HA stuff. One camera per Movidius is a bit expensive.

My system is a stand-alone add-on device put on the same network as a security DVR or set of netcams, and it receives the "snapshots" via ftp from the DVR. Any video motion detection reduces the load on the AI, and the AI pushes the false alarm rate to essentially zero. I'm handling 9 cameras without problems at the moment.

I would like to try some of your other models, YOLO in particular, once I get further along. I'm in the process of putting it up on github, but it's taking a while as I learn github's formatting and endeavor to make my instructions clear.

Thanks for the information. I might have to look into HA again as a way to push my notifications.

…On Sun, May 27, 2018 at 8:25 PM, deb ***@***.***> wrote:

Here's is my attempt at something similar:

[image: PiMovidiusCamera] <https://www.youtube.com/watch?v=1q7SU6tp4Yk>


|

|

@wb666greene Using ocv3 causes the fps to drop below 1 (~0.88). Using yolov2 with the native PiCamera library is a struggle; I tried. Getting started with GitHub is not straightforward and was a struggle for me initially. My suggestion is to use GitHub Desktop. |

|

Thanks, I'll look into github desktop. But I've put the code up anyway, as my frustration with github is at the point where I'm just giving up for now. Getting my system sending notifications is my next order of business. Here is a link to my github, crude as it is: I'd like to try other models, but if they don't run on the Movidius the frame rate is not likely to be high enough for my use. DarkNet YOLO was really impressive, but took ~18 seconds to run an image on my i7 desktop without CUDA. |

|

Thanks for the extra background info. I'll add some of these links to my github; I think they are very helpful and more enlightening than anything I could write up.

My main point is that I've made an "add-on" for an existing video security DVR instead of making a security camera with AI. Expect these to flood the market soon, and it'll be a good thing! But until then, I wanted AI in my existing system without a lot of expense or rewiring.

MotioneyeOS is a perfectly good way to get a simple video security DVR going, and in fact it has far superior video motion detection to my FLIR/Lorex system, but you are on your own for "weatherproofing" and adding IR illumination to your MotioneyeOS setup -- not a small job! I used it so I could give a simple example instead of over-complicating things with all the ugly code needed to deal with my FLIR/Lorex system's lameness. |

|

Living on the edge |

|

dlib has very interesting functionality for face recognition: http://blog.dlib.net/2017/02/high-quality-face-recognition-with-deep.html That could run on a Pi... this is quite impressive. |

|

I just had a play with Movidius on a RPi 3B+ recently, with version 2 of the NCSDK (still in beta). Here's what I've found:

With all that said, Movidius can still be used for our application as a 2nd-pass system that combs through all the recorded videos to detect person in non-real-time. This may be useful for various use cases, for example:

On 2nd thought, Movidius can still be used for real-time person detection. Integrate into motion software and feed one frame every 3rd or 4th frame into the Movidius. The NCSDKv2 C API is documented here for anyone who wishes to try: https://movidius.github.io/ncsdk/ncapi/ncapi2/c_api/readme.html |

|

Thanks for posting this. I was about to try and install ncsdk V2 on an old machine to give tiny yolo a try; you saved me a lot of time wasting! I was hoping the increased resolution of the TinyYolo model over MobileNetSSD would help. Any possibility you could upload or send me your compiled graph? I'd still like to play with the model, but you've removed my motivation for getting set up to compile it with the SDK.

Have you tried any of the multi-threaded or multi-processing examples? Running on my i7 desktop I've found using USB3 only improved the frame rate by less than half a frame/sec over USB2.

I've been running Chuanqi's MobileNetSSD since July on a Pi3B handling D1 "snapshots" from 9 cameras with overlapping fields of view from my pre-existing Lorex security system. I use the activation of PIR motion sensors to filter (or "gate") the images sent to the AI to reduce the load. It works great: I get the snapshots via FTP and filter what goes to the AI. My only real complaint is that the latency to detection can be as high as 3 or 4 seconds, although usually it's about 2 seconds. Other than the latency it seems real-time enough for me -- effectively the same as motioneye 1 frame/second snapshots.

Your use case (1) was my goal. I never looked at the video anyway, as the Lorex system's "scrubbing" is so poor. With the Emailed AI snapshots I now have a timestamp to use should I ever need to go back and look at the 24/7 video record (what the Lorex is really good at, but everything built on top of it is just plain pitiful).

I have three system modes: Idle, Audio, and Notify. Idle means we are home and going in and out and don't want to be nagged by the AI. Audio means we are home, but want audio notification of a person in the monitored areas -- fantastic for mail and package deliveries. Notify sends Email images to our cell phones. The key is that the Audio AI mode has never woken us up in the middle of the night with a false alarm, and the only Emails have all been valid: mailman, political canvasser, package delivery, etc.

Much as I like Motioneye and MotioneyeOS, I'm finding the PiCamera modules are not really suitable for 24/7 use, as after a period of several days to a couple of weeks the module "crashes" and only returns a static image from before the crash. Everything else seems to work fine (SSH, node-red dashboard, cron, etc.) but the AI is effectively blind until a reboot. I've a software only MobileNetSSD AI running on a Pi2B and Pi3B with Pi NoIR camera modules. While it only gets one AI frame about every 2 seconds, it can still be surprisingly useful for monitoring key entry areas, but the "soft" camera failures are a serious issue. I've never run Motioneye OS 24/7 long enough to know if it suffers from the issue or not. I should probably set up my PiZeroW and try.

With this experience, I'm starting to swap out some Lorex cameras for 720p Onvif "netcams" (USAVision, ~$20 on Amazon). Since I don't really care about the video, it's a step up in snapshot resolution (1280x720). One Pi3B+ and Movidius can handle about four cameras with ~1 second worst case detection latency.

In 24/7 testing usage I am getting Movidius "TIMEOUT" errors every three or four days. It seems I can recover with a try block around the NCS API function calls, having the except deallocate the graph and close the device, followed by a repeat device scan, open and load graph. That is a tolerable amount of blind time once every few days. I plan to rewrite for the V2 API to see if it fixes the issue; the V2 multistick example doesn't seem to have had any errors yet in over a week of running. |
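The TIMEOUT recovery described above (try block around the inference call, tear the device down in the except, then scan, open and reload) might look roughly like the sketch below. The three callables `open_device`, `close_device` and `infer` are hypothetical stand-ins for whatever accelerator API is in use; they are not NCSDK functions, and the retry count and delay are assumptions.

```python
import time


class RecoveringDetector:
    """Wrap an accelerator so a failed inference triggers a device reset.

    open_device, close_device and infer are hypothetical callables supplied
    by the caller; they are placeholders, not real NCSDK function names.
    """

    def __init__(self, open_device, close_device, infer,
                 max_retries=3, retry_delay=1.0):
        self._open = open_device
        self._close = close_device
        self._infer = infer
        self._max_retries = max_retries
        self._retry_delay = retry_delay
        self._device = self._open()   # scan, open and load the graph
        self.recoveries = 0

    def detect(self, frame):
        for attempt in range(self._max_retries + 1):
            try:
                return self._infer(self._device, frame)
            except RuntimeError:
                if attempt == self._max_retries:
                    raise             # give up after the last retry
                self._reset()

    def _reset(self):
        try:
            self._close(self._device)  # deallocate graph, close device
        except RuntimeError:
            pass                       # the device may already be gone
        time.sleep(self._retry_delay)
        self._device = self._open()    # repeat scan, open and load graph
        self.recoveries += 1


# Fake device for illustration: the first inference "times out".
calls = {"n": 0}

def fake_infer(device, frame):
    calls["n"] += 1
    if calls["n"] == 1:
        raise RuntimeError("TIMEOUT")
    return "person"

detector = RecoveringDetector(lambda: "stick-0", lambda dev: None,
                              fake_infer, retry_delay=0.0)
result = detector.detect("frame-1")
print(result, detector.recoveries)
```

The camera is blind only for the duration of `_reset`, which matches the "tolerable amount of blind time" trade-off mentioned above.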

|

@jasaw Looks like MobileNetSSD is the only practical AI for security camera use at present on resource constrained systems. While MobileNetSSD also makes a lot of wrong calls, if you only care about detecting "people", which seems fine for security camera systems, it performs very well in my experience. |

|

@MrJBSwe Also, so far the development environment looks like C/C++ only. If python bindings become available for it I'll get a whole lot more interested. Not that I'm any great python guru, but I find it really hard to beat for "rapid prototyping". At this point I think the AI network is more of a limitation for security system purposes than is the hardware to run the network. MobileNetSSD, cpu only, can get 7+ fps on my i7 desktop with the OpenCV 3.4.2 dnn module and simple non-threaded python code. |

|

I have implemented Movidius support into motion and used ChuanQi's MobileNetSSD person detector to work alongside the classic motion detection algorithm. If "Show Frame Changes" in motionEye is enabled, it will also draw a red box around the detected person and the confidence percentage at the top right corner. I have only tested on a Raspberry Pi with Pi camera with single camera stream. If you have multiple camera streams, the code expects multiple Movidius NC sticks, one stick per mvnc-enabled camera stream. Camera streams with mvnc disabled will use the classic motion detection algorithm. Code is here: How to use:

Note: There seems to be some issue getting motionEye front-end to work reliably with this movidius motion. Quite often motionEye is not able to get the mjpg stream from motion, but accessing the stream directly from web browser via port 8081 works fine. Restarting motionEye multiple times seems to workaround this problem for me. Maybe someone can help me look into it? |

|

@jasaw Using the V1 ncsapi and ChuanQi's MobileNetSSD on a Pi3B I'm getting about 5.6 fps with the Pi camera module (1280x720), using simple python code and openCV 3.4.2 for image drawing, boxes, and labels (the python PiCamera library I use creates a capture thread). With simple threaded python code I'm also getting about 5.7 fps from a 1280x720 Onvif netcam (the ~$20 one I mentioned in an earlier reply). This same code and camera running on my i7 desktop (heavily loaded) is getting about 8 fps. On a lightly loaded AMD quad core it's getting about 9 fps.

Have you seen any performance improvements of V1 vs. V2 of the ncsapi? I now have one Pi3B set up with the V2 ncsapi and have run some of the examples (using a USB camera at 640x480). I was most interested in the multi-stick examples, but I've found that the two python examples from the appzoo that I've tried are in pretty bad shape -- not exiting and cleaning up threads properly. I pretty much duplicate their 3-stick results, but I don't think they are measuring the frame rate correctly. The frame rate seems camera limited, as dropping the light level drops the frame rate, and their detection overlays show incredible "lag". |

|

@wb666greene I don't know exactly how many frames I'm getting from the Movidius stick. I'm not even following the threaded example. I think it doesn't matter anyway as long as it's running at roughly 5 fps. With my implementation, everything still runs at whatever frame rate you set, say 30 fps, but inference is only done at 5 fps. A person usually doesn't move in and out of camera view within 200ms (5fps), so it's pretty safe to assume that we'll at least get a few frames of the person, which is more than enough for inference. I'm going to refactor my code so that I can merge it into upstream motion, and have multi-stick support as well. |
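The idea of streaming at full rate while only running inference on a subset of frames can be illustrated with a small sketch. The 30 fps and 5 fps figures come from the comment above; the function names are made up for the example and this is not the code in the motion patch.

```python
def inference_stride(stream_fps, inference_fps):
    """Number of streamed frames per inferred frame (at least 1)."""
    return max(1, round(stream_fps / inference_fps))


def frames_to_infer(num_frames, stream_fps=30, inference_fps=5):
    """Indices of the frames that would be handed to the accelerator."""
    stride = inference_stride(stream_fps, inference_fps)
    return [i for i in range(num_frames) if i % stride == 0]


# One second of a 30 fps stream with inference at roughly 5 fps.
print(inference_stride(30, 5))   # 6
print(frames_to_infer(30))       # [0, 6, 12, 18, 24]
```

With these numbers a person who stays in view for one second is seen by the network five times, which is the reasoning behind treating 5 fps inference as sufficient.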

|

I have implemented proper MVNC support into motion software. See my earlier post for usage instructions: #1505 (comment) |

|

@wb666greene I've finally measured the frame rate from my implementation. |

|

I did some temperature testing. I ran a short test pushing 11 fps and managed to get the NC stick to thermal throttle within 10 minutes, at ambient temperature of 24 degrees Celsius. The stick starts to throttle when it reaches 70 degrees Celsius, and frame rate dropped to 8 fps. I believe this is just the first level throttling (there are 2 stages). According to Intel's documentation, these are the throttle states:

The stick temperature seems to plateau at 55 degrees Celsius when pushing 5.5 fps, again ambient temperature of 24 degrees Celsius. |

|

I have recently tried Nvidia Xavier => I get like 5 fps with yolov2. Since it is quite expensive, I'm still putting my hope in the direction of AI sticks like Movidius X. RK3399Pro is an interesting addition (but I prefer to buy the AI stick separately, with a mature API ;-) |

|

@jasaw I have given up on the v2 SDK for now and am sticking with the V1 SDK. I made some code variations to see what frame rates I can get with the same Python code (auto configuring for Python 3.5 vs 2.7) on three different systems, comparing threads and multiprocessing to the baseline single main loop code, which gave 3.2 fps for the Onvif cameras and 5.3 fps for a USB camera with openCV capture. The Onvif cameras are 1280x720 and the USB camera was also set to 1280x720.

These tests suggested that the way to go is three Python threads: one to round-robin sample the Onvif cameras, one to process the AI on the NCS, and the main thread to do everything else (MQTT for state and result reporting, saving images with detections, displaying the live images, etc.). I got 10.7 fps on my i7 desktop with the NCS on USB3 running Python 3.5 on an overnight run. Running the same code on a Pi3B+ with Python 2.7 I'm getting 7.1 fps, but it's only been running all morning.

My work area is ~27C and I'm not seeing any evidence of thermal throttling (or it hits so fast my test runs haven't been short enough to see it). I don't think the v1 SDK has the temperature reporting features, and I haven't checked the "throttling state" as I really only care about the "equilibrium" frame rate I can obtain. For my purposes 6 fps will support 4 cameras. I'm going to try adding a fourth thread to service a second NCS stick. @MrJBSwe
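A rough sketch of the three-thread layout described above: a sampler thread that round-robins the cameras, an AI thread that consumes frames, and the main thread that collects results. The camera "grab" functions and the `infer` callable are fakes for illustration, and dropping frames when the queue is full is an assumption of this sketch rather than something stated in the thread.

```python
import queue
import threading


def camera_sampler(cameras, frames, stop, rounds):
    """Round-robin over the cameras, dropping frames when the AI is busy."""
    for _ in range(rounds):
        for name, grab in cameras:
            if stop.is_set():
                return
            try:
                frames.put_nowait((name, grab()))
            except queue.Full:
                pass  # skip this frame rather than build up latency


def ai_worker(infer, frames, results, stop):
    """Take frames off the queue and run the (hypothetical) inference call."""
    while not stop.is_set():
        try:
            name, frame = frames.get(timeout=0.1)
        except queue.Empty:
            continue
        try:
            results.put((name, infer(frame)))
        finally:
            frames.task_done()


def run_pipeline(cameras, infer, rounds=5, queue_size=2):
    frames = queue.Queue(maxsize=queue_size)
    results = queue.Queue()
    stop = threading.Event()
    sampler = threading.Thread(target=camera_sampler,
                               args=(cameras, frames, stop, rounds))
    worker = threading.Thread(target=ai_worker,
                              args=(infer, frames, results, stop))
    worker.start()
    sampler.start()
    # The main thread would handle MQTT, saving and display in a real system;
    # here it only waits for the other two threads and gathers the results.
    sampler.join()
    frames.join()
    stop.set()
    worker.join()
    collected = []
    while not results.empty():
        collected.append(results.get())
    return collected


# Four fake cameras that return a string instead of an image.
cameras = [("cam%d" % i, (lambda i=i: "frame-from-cam%d" % i))
           for i in range(4)]
detections = run_pipeline(cameras, infer=lambda frame: frame.upper())
print(len(detections), "frames processed")
```

A fourth thread servicing a second stick would be another `ai_worker` reading from the same `frames` queue.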

I have 2 Movidius sticks and like them as an "appetizer" while waiting for Movidius X (or something similar). I want to run yolov2 (or something similar) at >= 4 fps (yolo tiny gives too random results). I plan to check out your code, wb666greene & jasaw; interesting work! this is a bit interesting ( price is right ;-) |

|

@wb666greene From what I've read, thermal reporting is only available on v2 SDK. In my test, I'm pushing 11 fps consistently through the stick until it starts to go in and out of thermal throttle state cycle of 8 fps (thermal throttled) for 1 second, 11 fps (normal) for 3 seconds. If you take the average, it's still pushing 10 fps, which may explain the 10.7 fps that you're seeing on your i7 desktop. I imagine at higher ambient temperature like summer 45 degrees Celsius, it's going to stay throttled for much longer, possibly even go into 2nd stage throttle. |
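The average quoted above can be checked with a time-weighted mean over one throttle cycle. The cycle (1 second at 8 fps, 3 seconds at 11 fps) is taken from the comment; the helper name is made up for the example.

```python
def average_fps(segments):
    """Time-weighted average frame rate over (fps, seconds) segments."""
    total_frames = sum(fps * seconds for fps, seconds in segments)
    total_seconds = sum(seconds for _, seconds in segments)
    return total_frames / total_seconds


# One throttle cycle: 1 s throttled at 8 fps, then 3 s normal at 11 fps.
cycle = [(8, 1), (11, 3)]
print(average_fps(cycle))  # 10.25
```

That is 41 frames in 4 seconds, so a little over 10 fps on average, in line with the "still pushing 10 fps" estimate.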

|

Maybe something... Similar to Khadas Edge, RK3399Pro and the upcoming Rock Pi 4 & RockPro64-AI, I guess the trend is RK3399Pro for multiple reasons (but I still hope for Movidius X and/or Laceli) BM1880 List Movidius X has been released! |

|

@MrJBSwe Yes, Neural Compute Stick 2 has finally been released. Let's see if I can get one to play with. I see a few obstacles in supporting NCS 2.

Excited and disappointed at the same time... |

|

@MrJBSwe Thanks for the info on the Lightspeeur devices. The price is nice, but its usefulness is going to depend on the quality and clarity of the sample code.

My experience with 4K images and 300x300 pixel MobilenetSSD-v2 means I won't hazard a guess about what increases or decreases accuracy. A hardware failure a few months ago gave me the opportunity to upgrade to a 4K capable system. I figured a virtual PTZ by cropping the image would be very convenient and minimize how much I had to be on a ladder adjusting things, so I got a 4K UHD camera and mounted it as close as I could to an existing HD camera so as to have nearly the same field of view. Running MobilenetSSD-v2 (which I'd switched to a few months before the failure) totally blew me away in terms of how many more detections I got with the UHD camera.

I use a full frame detect, then crop (zoom in) and re-detect with a higher threshold to reduce false positives. I suspect I get better results with UHD images because the cropped image for verification is "better", but I had expected it to perform very poorly because I expected the initial detections to be greatly reduced, which seems not to be the case. |
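The detect, crop and re-detect idea can be sketched as below. The `detect` and `crop` callables, the 25% margin and both thresholds are assumptions for illustration; the comment above does not give the actual values or code.

```python
def expand_box(box, frame_w, frame_h, margin=0.25):
    """Grow (x1, y1, x2, y2) by `margin` of its size, clamped to the frame."""
    x1, y1, x2, y2 = box
    dx = int((x2 - x1) * margin)
    dy = int((y2 - y1) * margin)
    return (max(0, x1 - dx), max(0, y1 - dy),
            min(frame_w, x2 + dx), min(frame_h, y2 + dy))


def verify_detections(frame, frame_size, detect, crop,
                      first_threshold=0.5, verify_threshold=0.7):
    """Keep first-pass detections that are confirmed on a zoomed-in crop.

    detect(image) is assumed to return a list of (box, confidence) pairs and
    crop(image, box) to return the sub-image; both are hypothetical helpers.
    """
    frame_w, frame_h = frame_size
    confirmed = []
    for box, confidence in detect(frame):
        if confidence < first_threshold:
            continue
        region = expand_box(box, frame_w, frame_h)
        zoomed = crop(frame, region)
        # Second pass on the crop uses the stricter threshold.
        if any(c >= verify_threshold for _, c in detect(zoomed)):
            confirmed.append((region, confidence))
    return confirmed


# Fakes standing in for a real detector and image cropping.
def fake_detect(image):
    if image == "full":
        return [((100, 100, 300, 500), 0.6)]
    return [((0, 0, 10, 10), 0.9)]

def fake_crop(image, region):
    return "crop"

confirmed = verify_detections("full", (3840, 2160), fake_detect, fake_crop)
print(confirmed)
```

On a UHD frame the crop still contains many pixels per person after it is resized to the network input, which may be part of why the verification pass works well there.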

|

Feb 2020: H7 Plus with 32 MB of SDRAM, 32 MB of flash, 5MP, low energy, UVC support, nice IDE, simple AI support |

Turns out that I was wrong. The new undocumented API supports querying all the inference capable hardware. I have 2x NCS2 and 2x NCS1 sticks, and the API gives me the name of each device that has the USB path in it, e.g. MYRIAD.1.2-ma2480. I can then choose which model to load to which stick, but for my use case, I loaded the same model to all the sticks. After a lot of effort, I managed to run motion with multiple Myriad sticks on my Raspberry Pi 4. The performance scales quite linearly, which is great. Can't wait to try out NCS3 ! For anyone who is interested, sample code that uses the new query API is documented here |
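As an illustration of working with device names of the form quoted above, the sketch below splits a name into its USB path and chip suffix and then maps the same model to every stick. The device list and the model file name are hypothetical, and the parsing assumes the "MYRIAD.<usb path>-<chip>" layout seen in the single example name; it does not call the OpenVINO API.

```python
def parse_myriad_name(name):
    """Split a name such as "MYRIAD.1.2-ma2480" into (usb_path, chip)."""
    plugin, _, rest = name.partition(".")
    usb_path, _, chip = rest.rpartition("-")
    if plugin != "MYRIAD" or not usb_path or not chip:
        raise ValueError("unexpected device name: %r" % name)
    return usb_path, chip


def group_by_chip(device_names):
    """Map each chip suffix to the list of USB paths that carry it."""
    groups = {}
    for name in device_names:
        usb_path, chip = parse_myriad_name(name)
        groups.setdefault(chip, []).append(usb_path)
    return groups


# Hypothetical list, as a query of the available devices might return it.
devices = ["MYRIAD.1.2-ma2480", "MYRIAD.1.3-ma2480", "MYRIAD.1.1.4-ma2450"]
print(group_by_chip(devices))

# Same model on every stick, as in the comment above (file name is made up).
assignment = {name: "mobilenet_ssd.xml" for name in devices}
```

Grouping by chip suffix would make it possible to load a different model per stick generation, although the comment above uses the same model everywhere.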

|

@jasaw |

|

@wb666greene The python example is in your openvino install directory. |

|

@jasaw Mea culpa, sorry for my stupidity. |

|

I have just tested openvino + motion + two NCS2 + chuanqi's MobileNetSSD, very similar setup as my previous 1st gen NCS with NCSDK framework.

Turns out that the low fps with two NCS2 was caused by my camera reducing frame rate because I was testing it in dark environment and auto-brightness feature reduced shutter speed thus reduced the frame rate. I tested it again in the morning and I managed to get 25 fps with two NCS2. With a single NCS, I get 8.5 fps, similar to NCSDK. I also tried the VGG_VOC0712Plus_SSD_300x300_ft_iter_160000 SSD model (that Intel uses in their SSD examples), but found that it is a lot less accurate than chuanqi's MobileNetSSD. It false detects a dog as a person, and runs very slow too. I only get 4 fps on my RPi4 with two NCS2 sticks. |

|

Here's a new recipe for getting motion to work with Intel NCS2 on Raspberry Pi, using the OpenVINO framework and ChuanQi's MobileNetSSD neural net model. I set one system up for my own use and thought it might be useful to someone else too.

Compile and Install ffmpeg (optional)

This ffmpeg step is only needed if you want to use h264_omx hardware accelerated video encoder.

ffmpeg dependencies

How to install and run motion software with MobileNetSSD alternate detection library on Raspbian

|

|

@wb666greene You are right that ChuanQi's MobileNetSSD model accuracy is not satisfactory. I am also getting a lot of false positives under certain lighting conditions. I would like to try the MobileNetSSD-v2_coco tensorflow lite model that you are using, but I can't find a way to PM you. If you could point me to where I can download the model, that would be great. I have Intel's Model Optimizer too, so I can do the model conversion. Is there any specific option that you had to use during Model Optimizer conversion? I just discovered MobileNetSSDv3. The difference between v3 and v2 is the v3 has more optimizations that improve the accuracy without affecting the speed. I haven't got time to look for a v3 model. I may even consider training my own model, only if time permits. |

|

You can download my converted ssd-v2 model here:

https://1drv.ms/u/s!AnWizTQQ52YzgT-8qDa1-DqgSsAT?e=tH2qEj

Hope this helps.


|

|

@wb666greene sorry for disturbing you again, but can you please share the model before it was converted to xml and bin files? |

|

Thanks guys for what you're doing, keep it up. Can't wait for my newly ordered Coral USB accelerator to come. I am curious how it will work with an RPi 4 with motioneye plus a few Pi Zeros with cameras. |

|

If I send you my model downloader command will that work?

~/intel/openvino/deployment_tools/tools/model_downloader$ ./downloader.py --name ssd_mobilenet_v2_coco

I then just followed the model optimizer "recipe" using all the defaults. I do have a saved_model.pb file that is ~70MB but I believe it was created by the model downloader. I recently switched from "installing" OpenVINO to using apt and the Intel repos so I may have lost the stuff in the default directories.

Here is my model optimizer command line:

./mo_tf.py --input_model /home/wally/ssdv2/frozen_inference_graph.pb --tensorflow_use_custom_operations_config /home/wally/ssdv2/ssd_v2_support.json --tensorflow_object_detection_api_pipeline_config /home/wally/ssdv2/pipeline.config --data_type FP16 --log_level DEBUG

I ran it twice, once for FP16 (for NCS) and again with FP32 (for CPU). I believe the newest OpenVINO CPU module can now use FP16 models. I have no idea how to get the model optimizer to change much of anything beyond this option.

…On Sun, Dec 22, 2019 at 7:12 AM jasaw ***@***.***> wrote:

@wb666greene <https://github.com/wb666greene> sorry for disturbing you

again, but can you please share the model before it was converted to xml

and bin files?

I tried your precompiled model, but my code is expecting input bgr values

from 0 to 255 rather than 0 to 1. I normally just get model optimizer to

scale the input as part of the compilation process .
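The input range mismatch mentioned in the quoted text comes down to what mean and scale are applied to each pixel before inference. The sketch below only illustrates the arithmetic on a few sample values; the helper name is made up, and which normalization a given converted model expects depends on how it was converted, which this thread does not settle. As far as I know, the Model Optimizer can bake such values into the model through its mean and scale options.

```python
def preprocess(pixels, mean=0.0, divisor=1.0):
    """Apply (pixel - mean) / divisor to each value."""
    return [(p - mean) / divisor for p in pixels]


raw = [0, 127.5, 255]                             # sample 8-bit pixel values
print(preprocess(raw))                            # stays in 0..255
print(preprocess(raw, divisor=255))               # mapped to 0..1
print(preprocess(raw, mean=127.5, divisor=127.5)) # mapped to -1..1
```

If the scaling is already compiled into the model, the calling code must pass raw 0 to 255 values; applying it twice would squash the input and typically produce no detections.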


|

If you don't already have the Pi Cameras, IMHO you should rethink this. You can buy IP cameras (aka netcams) for about the price of the Pi Camera Module, and they have solved the weatherproofing and mounting issues for you.

Here are some recent test results of my Python code running on Pi4B, Jetson Nano, and Coral Development board, decoding multiple 3 fps rtsp streams:

5DEC2019wbk some Pi4B tests with rtsp cameras, 3fps per stream:

6DEC2019wbk Some UHD tests on Jetson Nano

7DEC2019wbk Coral Development Board

Note that with the Pi4B and these kinds of workloads a fan is essential to keep from thermal throttling. The Coral Development board has a built-in fan; the Jetson Nano has a rather massive heat-sink that is almost the size of the entire Coral Dev board :)

Edit: What is causing the "strikeout" text? |

Well, I already bought most of the stuff I needed, in the belief that I would get a better "system" for the same money, but with that Coral stick plus small additional stuff like Pi camera cable reductions I am at 300€, and it is not over yet. I would maybe do better with some classic IP camera system, but meh, it is what it is 😄

3x Pi Zero W
3x Pi NoIR Camera V2
1x Pi 4B 4GB

The Coral USB stick will come this week and I believe it can be a nice combo with the Pi 4. I will need to buy some fake camera housing and make it waterproof. Also a router, and if I get some reasonable fps during testing (>15 fps at 720p - 1080p), I will also buy some IR lighting and an SSD/HDD (will have to think it through). I am only sad that H.265 is not possible with this setup.

I am worried that I will have too many problems with motion/motioneyeos or with the Pi Zeros -> I will have to write a lot of custom code. Regarding heating, I just have a bigger heatsink, maybe it will hold on 😁

I am a pretty big newbie, not even coding python (java mostly), with a little experience with opencv from school. With that in mind I really appreciate what you're doing 🙂 |

|

I hope you have better results with the PiZeroW, PiCamera module and MotioneyeOS than I have had.

The IR illumination issue is yet another thing in favor of purpose built "IP cams". If you can return the PiCamera modules to the vendor I'd recommend doing so. The PiZeroW are cheap enough and useful enough for other things, like volumeIO music players, etc., that it's not an unacceptable loss.

Using MotioneyeOS 20190119 I can't get 5 fps to a web browser with "fast network camera" enabled and 720p. Latency is horrible at 5-6 seconds when viewed in the Chrome browser. After the holidays, I'll set this up as an rtsp netcam in my AI system and report how it performs. But I expect little after viewing the port 8081 http stream in VLC or a browser.

IMHO there would have to be miraculous improvements in current motioneyeOS to make this viable on a PiZeroW. MotioneyeOS on a PiZeroW doing ftp of motion detected "snapshots" to my initial AI running on a Pi2B was my starting point, but it was of little use beyond proof of principle demos.

Merry Christmas! Happy Hanukkah, etc. whatever you celebrate this time of year! My wife gets a paid holiday for Kwanzaa so we are celebrating that too!


|

|

@SamuelSutaj I fired up my old PiZeroW with MotioneyeOS and it was not working, so I re-flashed the SD card with the current version (20190911). I used the default settings except for turning off motion detection, setting image size to 1280x720 and setting frame rate to 10 fps for both camera and streaming. I get ~5.2 fps with it as a "netcam" (http://MeyeOS:8081). Using the "fast network camera" setting may help (especially if you want higher than 720p resolution), but 5 fps per camera is more than good enough -- IMHO 2-3 fps/camera is generally fine. It seems the current version works better than I remembered on the PiZeroW.

But the PiZeroW WiFi is not the best: in the same room as the WiFi router, running overnight, I had three camera outages lasting from 8 to 19 minutes. My AI code automatically recovers from camera outages, but the camera is blind during these outages. "Short runs" without the camera dropouts can hit ~8 fps. I know it's camera limited, as my AI on this test system can hit ~24 fps with multiple cameras and an NCS2. |

|

@wb666greene Thank you for sharing the model optimizer command for converting ssd_mobilenet_v2_coco. I tried the same command but added Is this the correct input and output format?

I also tried ChuanQi's |

|

@jasaw But, I also got no results with SSDv2 when I did the mean subtractions. Not doing it fixed the issues for me, only difference in my Python code for using SSD v1 vs v2: |

|

Hello, is there a guide to set up a test of this? I have docker running on an x86 machine, sadly no dongle right now, but I have an Intel GPU and of course an option to add an Nvidia GPU card. I was thinking of using the CPU for a first round just to test and then adding a GPU later. I also have a few Raspberries, so ARM could be an option as well. If there were a dev release from Git or a step-by-step guide to add an object detection model I would be very interested in testing. :D |

|

With Intel GPU, you could try Intel's OpenVINO. Here's my guide: #1505 (comment) Obviously ignore the whole Raspbian part because you're running on x86 machine. To use Intel GPU, you'll need to update |

|

Hello @jasaw get stuck on the Git clone the lib_openvino_ssd library I cannot compile as this is for ARM not Intel X86. Could you please offer some guidance?

Tried to edit the Makefile to -march=x86-64 but it still fails. :D |

|

Sorry, I forgot my makefile was hard coded for ARM. Try removing -march=armv7-a from the makefile. |

|

[2018]

[2021] I'm not sure where motion/AI has got to these days, despite having a look around, so I'll keep this system running a while longer. |

|

@Chiny91 Very glad to hear that it has been working well for you. I have been running 4 movidius sticks with my OpenVINO recipe for 2 years and it has been working more reliably than expected. It has fallen over twice so far because movidius 2 sticks have very high peak power draw and RPi USB 5V supply is not stable enough. I ended up using externally powered USB hub. Regarding false positives, I've only gotten the occasional few. I'm sure there's room for improvement on the neural model itself, but haven't got time to train my own model. I've also been playing with Jetson Nano and it looks like a much better hardware for our purpose. The hardware is A LOT more capable than movidius 2, but cheaper than a RPi + movidius2 combo. Software stack looks cleaner too because motion just calls straight into standard openCV library. Dave (motion developer) mentioned that he was working on new version of motion that uses openCV. Not sure where he's up to. |

|

Idea is good, but the price is awfully high for a 1080p camera, unless the "rolling shutter" and potential of 120 fps is a requirement. I still think it's premature to build the AI into cameras right now; the improvement I got going from MobilenetSSD_v1 to MobilenetSSD_v2 was great enough that it'd have been a bummer to have a bunch of cameras stuck on MobilenetSSD_v1. My exception is the OAK-D, which is three cameras and a Myriad-X in one camera housing. The central color camera and the two monochrome cameras either side of it allow full color AI, with depth information estimated from the synchronized frames of the stereo cameras.

The new Coral MPCIe and M.2 TPU modules cost less than half the USB3 TPU version, but they also come with the original edgetpu API being obsoleted and replaced by the PyCoral API. A Coral MPCIe TPU and an old i7-4500U "mini-PC" is getting 35 fps running 7 4K and 7 1080p 3 fps H.265 cameras, connecting to the RTSP streams from my security DVR. I've since switched the cameras from H.265 to H.264 as I was getting high latency with H.265; moving to H.264 dropped my latency to ~2 seconds compared to 6+ seconds. No free lunch: I lose a few days of retention on my 24/7 recordings, but I want low latency notification at the start of a potential crime to stop or mitigate the losses. Watching nice video of my stuff being carried away the next morning is just not really useful. |

I think combining motion detection with classification like yolo and using AI-sticks as workhorse could fit very nicely in to the core purpose of motioneyeos.

The trend is that a USB dongle drawing less than 1 watt can do the classifying job, for example Movidius or Laceli.
