- About

- Installing

- Using in plain Python scripts

- Authentication

- Using in Jupyter

- Serving data from a local Kamu workspace

- Using with Spark

Python client library for Kamu.

Start with kamu-cli repo if you are not familiar with the project.

Install the library:

pip install kamuConsider installing with extra features:

pip install kamu[jupyter-autoviz,jupyter-sql,spark]jupyter-autoviz- Jupyter auto-viz for Pandas data framesjupyter-sql- Jupyter%%sqlcell magicspark- extra libraries temporarily required to communicate with Spark engine

import kamu

con = kamu.connect("grpc+tls://node.demo.kamu.dev:50050")

# Executes query on the node and returns result as Pandas DataFrame

df = con.query(

"""

select

event_time, open, close, volume

from 'kamu/co.alphavantage.tickers.daily.spy'

where from_symbol = 'spy' and to_symbol = 'usd'

order by event_time

"""

)

print(df)By default the connection will use DataFusion engine with Postgres-like SQL dialect.

The client library is based on modern ADBC standard and the underlying connection can be used directly with other libraries supporting ADBC data sources:

import kamu

import pandas

con = kamu.connect("grpc+tls://node.demo.kamu.dev:50050")

df = pandas.read_sql_query(

"select 1 as x",

con.as_adbc(),

)You can supply an access token via token parameter:

kamu.connect("grpc+tls://node.demo.kamu.dev:50050", token="<access-token>")When token is not provided the library will authenticate as anonymous user. If node allows anonymous access the client will get a session token assigned during the handshake procedure and will use it for all subsequent requests.

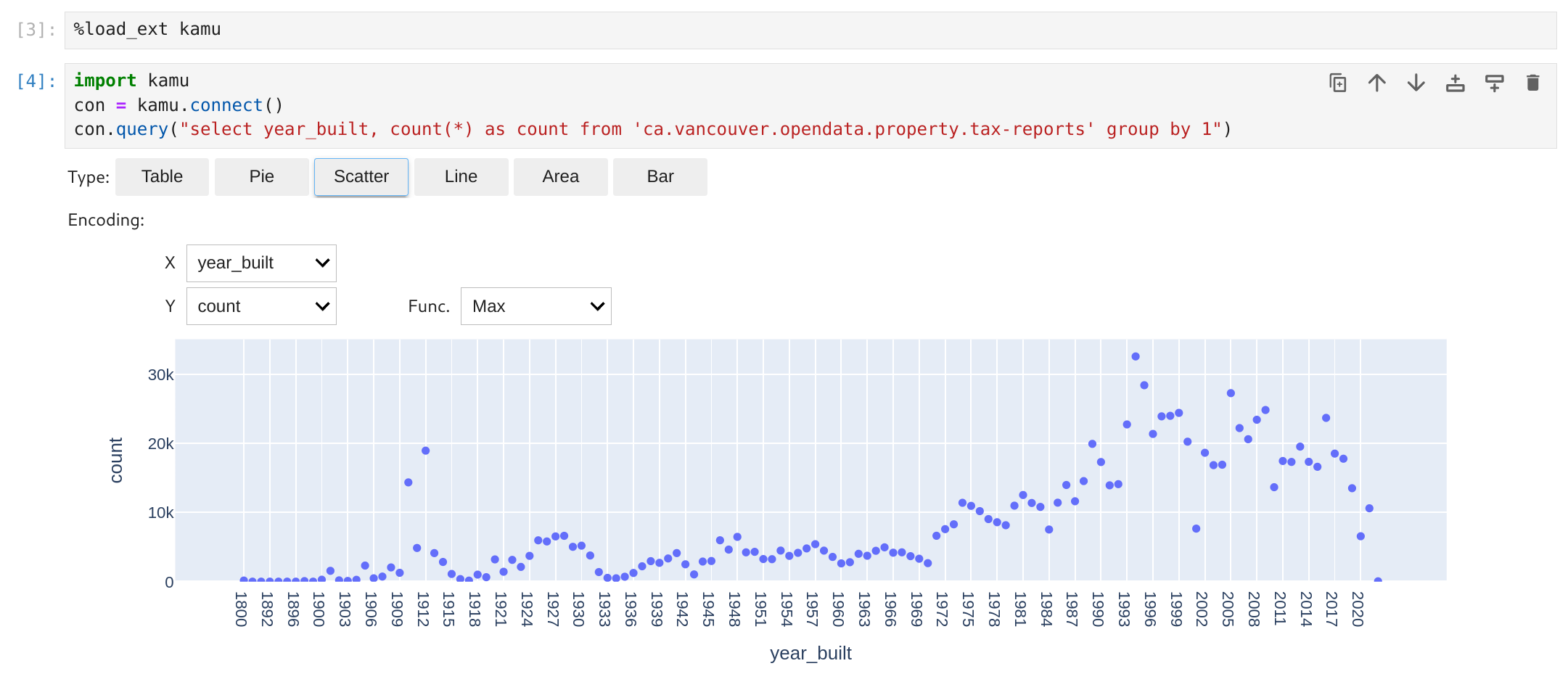

Load the extension in your notebook:

%load_ext kamuCreate connection:

con = kamu.connect("grpc+tls://node.demo.kamu.dev:50050")Extension provides a convenience %%sql magic:

%%sql

select

event_time, open, close, volume

from 'kamu/co.alphavantage.tickers.daily.spy'

where from_symbol = 'spy' and to_symbol = 'usd'

order by event_timeThe above is equivalent to:

con.query("...")To save the query result into a variable use:

%%sql -o df

select * from xThe above is equivalent to:

df = con.query("...")

dfTo silence the output add -q:

%%sql -o df -q

select * from xThe kamu extension automatically registers autovizwidget to offer some options to visualize your data frames.

This library should work with most Python-based notebook environments.

Here's an example Google Colab Notebook.

If you have kamu-cli you can serve data directly from a local workspace like so:

con = kamu.connect("file:///path/to/workspace")This will automatically start a kamu sql server sub-process and connect to it using an appropriate protocol.

Use file:// to start the server in the current directory.

You can specify a different engine when connecting:

con = kamu.connect("http://livy:8888", engine="spark")Note that currently Spark connectivity relies on Livy HTTP gateway but in future will be unified under ADBC.

You can also provide extra configuration to the connection:

con = kamu.connect(

"http://livy:8888",

engine="spark",

connection_params=dict(

driver_memory="1000m",

executor_memory="2000m",

),

)