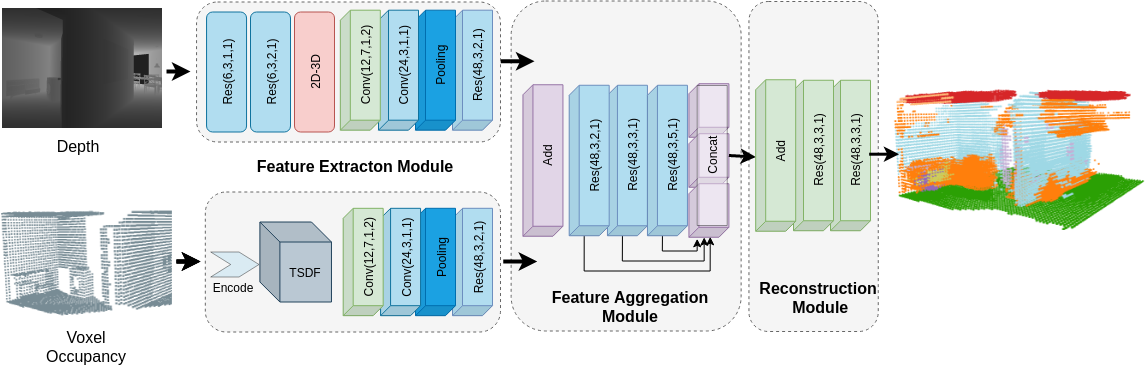

A 3D Convolutional Neural Network for semantic scene completion from depth maps

- pytorch≥1.4.0

- torch_scatter

- imageio

- scipy

- scikit-learn

- tqdm

You can install the requirements by running pip install -r requirements.txt.

If you use other versions of PyTorch or CUDA, be sure to select the corresponding version of torch_scatter.
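To pick a matching torch_scatter build, it helps to know which PyTorch and CUDA versions are actually installed. The snippet below is a minimal sketch of such a check; `report_versions` is a hypothetical helper name, not part of this repository, and it returns `None` values when PyTorch is not installed.

```python
import importlib


def report_versions():
    """Collect the PyTorch and CUDA versions that a torch_scatter build must match."""
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        # PyTorch is not installed in this environment.
        return {"torch": None, "cuda": None}
    # torch.version.cuda is None for CPU-only builds of PyTorch.
    return {"torch": torch.__version__, "cuda": torch.version.cuda}


if __name__ == "__main__":
    print(report_versions())
```

Run it in the same environment you will train in, then choose the torch_scatter release built for that PyTorch/CUDA pair.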

The raw data can be found in SSCNet.

The repackaged data can be downloaded via Google Drive or BaiduYun (access code: lpmk).

The repackaged data includes:

rgb_tensor = npz_file['rgb'] # pytorch tensor of color image

depth_tensor = npz_file['depth'] # pytorch tensor of depth

tsdf_hr = npz_file['tsdf_hr'] # flipped TSDF, (240, 144, 240)

tsdf_lr = npz_file['tsdf_lr'] # flipped TSDF, ( 60, 36, 60)

target_hr = npz_file['target_hr'] # ground truth, (240, 144, 240)

target_lr = npz_file['target_lr'] # ground truth, ( 60, 36, 60)

position = npz_file['position'] # 2D-3D projection mapping index

Configure the data path in config.py:

'train': '/path/to/your/training/data'

'val': '/path/to/your/testing/data'
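Before training, it can be useful to confirm that a sample under the configured path has the keys and grid shapes listed above. The following is a minimal sketch, assuming each sample is a single `.npz` file and that the volumes are stored with the listed shapes. The helper names are hypothetical, and `write_dummy_sample` only fabricates a zero-filled stand-in so the sketch runs without the dataset; its image and index shapes are placeholders, not the real ones.

```python
import os
import tempfile

import numpy as np


def load_sample(path):
    """Read every array from one .npz sample into a dict of NumPy arrays."""
    with np.load(path) as npz_file:
        return {key: npz_file[key] for key in npz_file.files}


def summarize(sample):
    """Return {key: (shape, dtype name)} so the layout can be checked at a glance."""
    return {key: (arr.shape, arr.dtype.name) for key, arr in sample.items()}


def write_dummy_sample(path):
    """Hypothetical stand-in for a real sample (all zeros)."""
    np.savez_compressed(
        path,
        rgb=np.zeros((3, 8, 8), dtype=np.float32),      # placeholder shape
        depth=np.zeros((1, 8, 8), dtype=np.float32),    # placeholder shape
        tsdf_hr=np.zeros((240, 144, 240), dtype=np.float32),
        tsdf_lr=np.zeros((60, 36, 60), dtype=np.float32),
        target_hr=np.zeros((240, 144, 240), dtype=np.uint8),
        target_lr=np.zeros((60, 36, 60), dtype=np.uint8),
        position=np.zeros((64,), dtype=np.int64),       # placeholder shape
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "dummy.npz")
        write_dummy_sample(path)
        for key, (shape, dtype) in summarize(load_sample(path)).items():
            print(f"{key:10s} {shape} {dtype}")
```

To inspect real data, call `summarize(load_sample(...))` on a file from your training directory instead of the dummy file.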

Edit the training script run_SSC_train.sh, then run

bash run_SSC_train.sh

Edit the testing script run_SSC_test.sh, then run

bash run_SSC_test.sh

The SSC network is deployed as a ROS node for scene completion from depth topics. Please follow the instructions below to set up the ROS scene completion node.

A Python library providing C++ (CPU/CUDA) backend implementations for:

- Fixed-size TSDF volume computation from a single depth image

- Fixed-size 3D volumetric grid computation by probabilistically fusing point clouds (for SCFusion)

- 3D projection indices from a 2D depth image

NOTE: CUDA 10.2 is required for GPU backend.
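To illustrate what "3D projection indices from a 2D depth image" means, here is a NumPy sketch of the general idea: back-project each pixel with a pinhole camera model and record the flat index of the voxel it lands in. This is not the voxel_utils implementation. The function name, the intrinsics (`fx`, `fy`, `cx`, `cy`), the voxel size, and the grid origin are all assumptions for illustration, and camera pose is ignored (points stay in the camera frame).

```python
import numpy as np


def depth_to_voxel_indices(depth, fx, fy, cx, cy, voxel_size, grid_origin, grid_shape):
    """Map each depth pixel to the flat index of its voxel, or -1 if it has none."""
    h, w = depth.shape
    # Pixel coordinates: u runs along columns, v along rows.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    # Pinhole back-projection into camera-frame coordinates.
    z = depth.astype(np.float64)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1)  # shape (h, w, 3)
    # Integer voxel coordinates relative to the grid origin.
    origin = np.asarray(grid_origin, dtype=np.float64)
    ijk = np.floor((points - origin) / voxel_size).astype(np.int64)
    # Keep only pixels with valid depth that fall inside the grid.
    in_grid = np.all((ijk >= 0) & (ijk < np.asarray(grid_shape)), axis=-1)
    inside = (z > 0) & in_grid
    flat = np.full((h, w), -1, dtype=np.int64)
    flat[inside] = np.ravel_multi_index(tuple(ijk[inside].T), grid_shape)
    return flat


if __name__ == "__main__":
    depth = np.array([[1.0, 1.0], [1.0, 0.0]])  # 0.0 marks a missing measurement
    print(depth_to_voxel_indices(depth, 1.0, 1.0, 0.0, 0.0, 1.0, (0, 0, 0), (2, 2, 2)))
```

The resulting index map plays the same role as the `position` array in the repackaged data: it tells the network which voxel each 2D pixel corresponds to.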

Install the Python extension from the voxel_utils folder for inference on depth images from ROS topics:

cd voxel_utils

make # compile C++/CUDA code

python setup.py install # install the package into the current Python environment

python infer_ros.py --model palnet --resume trained_model.pth

A pretrained model can be downloaded from here.

NOTE: Make sure to activate the catkin workspace before starting inference.

The Semantic Scene Completion Networks are adapted from PALNet and DDRNet. Please cite the respective papers:

@InProceedings{Li2019ddr,

author = {Li, Jie and Liu, Yu and Gong, Dong and Shi, Qinfeng and Yuan, Xia and Zhao, Chunxia and Reid, Ian},

title = {RGBD Based Dimensional Decomposition Residual Network for 3D Semantic Scene Completion},

booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

month = {June},

pages = {7693--7702},

year = {2019}

}

@article{li2019palnet,

title={Depth Based Semantic Scene Completion With Position Importance Aware Loss},

author={Li, Jie and Liu, Yu and Yuan, Xia and Zhao, Chunxia and Siegwart, Roland and Reid, Ian and Cadena, Cesar},

journal={IEEE Robotics and Automation Letters},

volume={5},

number={1},

pages={219--226},

year={2019},

publisher={IEEE}

}