Add scala docs to javadocs #1368

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add scala docs to javadocs #1368

Conversation

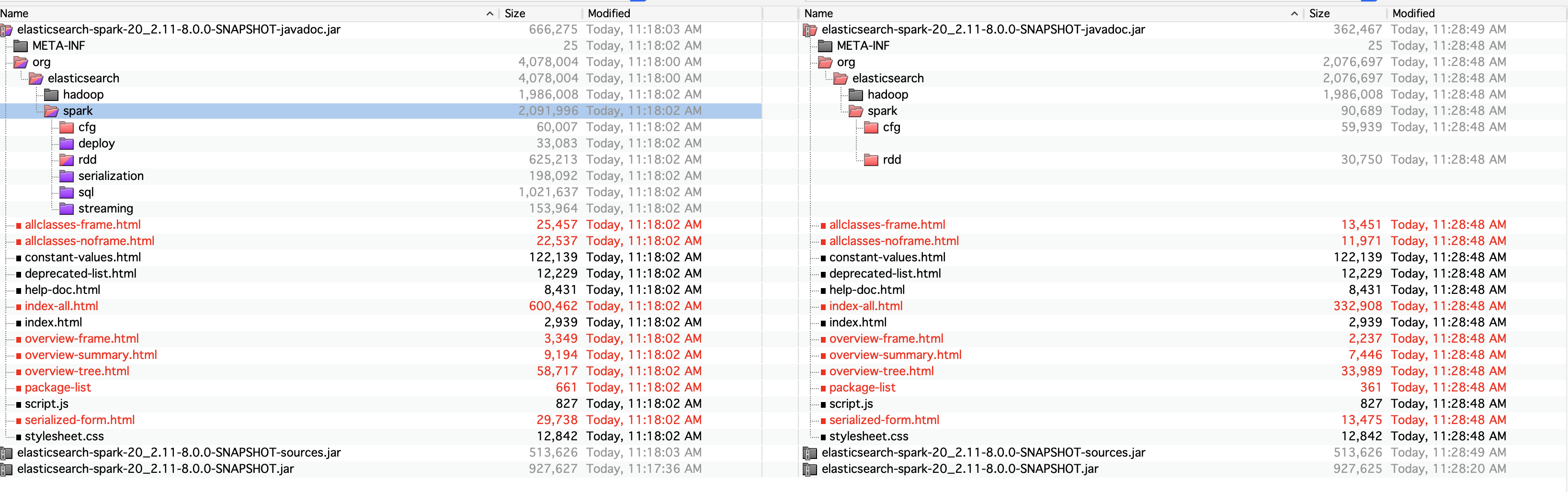

This commit introduces a 3rd party plugin to co-locate scala docs with java docs. https://github.com/lightbend/genjavadoc Fixes: elastic#1363

Use the following commands to test:

While reading some of the documentation I gotta say this sounds pretty gnarly. What's the main motivation of this over just using This motivation also makes no sense to me:

But this isn't Java code, it's Scala. So why are they doing all this hackiness to make a Scala API more amenable to Java programmers? I mean, aren't the consumers of this API Scala developers, and thereby more inclined to want the more robust Scaladoc vs. a watered-down and potentially inaccurate Javadoc representation of the API? Is the assumption here that while we are implementing the API in Scala, it can still technically be consumed by Java code, thereby targeting a Java audience? Is this accurate in this case? Do we expect the consumers of this stuff to be primarily Java folks or Scala folks?

To add, my comments are mainly rooted in my ignorance of the Scala ecosystem. If this is a common pattern for documenting APIs implemented in Scala, then by all means, I'll defer to the Scala experts here on whether this is a good idea. I simply found this method of Javadoc generation a bit odd and want to ensure we have strong motivations for introducing it, since it carries some cost.

spark/sql-13/build.gradle

```groovy
}

javadoc {
    dependsOn scaladoc //ensures that scala compiles and scala docs are valid
```

We don't need to run scaladoc to generate the javadocs. We just need to compile. This should depend on compileScala instead.
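A minimal sketch of the suggested change, assuming the Gradle Groovy DSL used in these build files. The `source` line is an assumption not taken from this thread: it presumes genjavadoc has been configured to write its generated Java files to a hypothetical `build/generated/java` directory.

```groovy
javadoc {
    // Compiling the Scala sources is enough: the genjavadoc compiler plugin
    // emits its Java files as a side effect of compileScala, so scaladoc
    // does not need to run first.
    dependsOn compileScala

    // Hypothetical location of the genjavadoc output; adjust to wherever
    // the plugin's output directory is actually configured.
    source fileTree("${buildDir}/generated/java")
}
```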

Since nothing else actually runs the Scala docs, I thought that generating them next to the Javadocs (even if unused) would serve as a good test. But it is unnecessary, and I will change it.

Nope. Any consumer that wants to use this library with Spark will need to use this API. That could be Java or Scala devs. My guess is most are Java devs. If we think most are Scala devs, we should look at publishing the Scaladoc jar.

Not sure if this is a common pattern, but the library is from Lightbend (formerly known as Typesafe), which is well known.

Gotcha, understood. Yeah, in that case I agree that having the API in Javadoc format would be most accessible. I assume the compile-time cost wasn't appreciable while you were testing this? If it is, there are perhaps things we could do, like make the compiler plugin conditional on the

We should probably avoid making these assumptions without any data. My gut feeling is that it's split 40/40/20 across Scala, Python, and Java (respectively), but I don't have any data to back this up other than past issues I can think of.

From what I'm reading, it "emits structurally equivalent Java code for all Scala sources of a project, keeping the Scaladoc comments (with a few format adaptions)." Is this structural Java code created alongside the regular compiler class file output, or is it an intermediary step? I'm assuming it's the former, but I want us to be sure. Scala has some wacky language features that just do not translate well to structural Java code.

Is the Java API documentation missing because it's nested under the Scala source? I want to make sure I understand this clearly, since I admittedly haven't spent much time looking into the Javadoc/Scaladoc problem for this part of the project.

Right, since this is implemented as a Scala compiler plugin, those Java source files are generated whenever the Scala source is compiled, regardless of whether we're actually generating Javadocs, so that's the bit I would expect to have a cost. I suspect one of the most costly parts of the Scala compile process is building the AST, which needs to be done regardless of this generation step, so in a way it makes sense that it doesn't add much time to the build. 👍

Ok cool, this was my major paranoia. I was mostly worried that the compiler plugin was taking the Scala source, turning it into Java source, and then converting the resulting Java source into bytecode/class files. But it sounds like the Scala source still compiles directly to bytecode and class files, with the added generation of some Java files for use in the Javadoc process.

jbaiera

left a comment

One small nit, but it looks like an easy change. Thanks for diving into this!

spark/sql-20/build.gradle

```diff
 "-Ywarn-numeric-widen",
-"-Xfatal-warnings"
+"-Xfatal-warnings",
+"-Xplugin:" + configurations.scalaCompilerPlugin.asPath,
```

I see that in the sql-13 build file we add these with tasks.withType(...) instead of appending here. I think I'm fine with either, but we should try to do the same in both files.
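To make both files consistent, the sql-20 flags could be applied through `tasks.withType(ScalaCompile)` the same way as in sql-13. This is a sketch under assumptions: the `scalaCompilerPlugin` configuration name comes from the sql-20 diff, while the dependency coordinates and the `scalaVersion` / `genjavadocVersion` properties are hypothetical placeholders, not taken from the actual build.

```groovy
configurations {
    // Holds only the compiler plugin jar so it can be passed to scalac via -Xplugin
    scalaCompilerPlugin
}

dependencies {
    // Hypothetical coordinates: genjavadoc is published per full Scala version,
    // so scalaVersion must be the full version string (for example 2.11.12)
    scalaCompilerPlugin "com.typesafe.genjavadoc:genjavadoc-plugin_${scalaVersion}:${genjavadocVersion}"
}

tasks.withType(ScalaCompile) {
    // Same flags as the sql-20 diff, applied to every ScalaCompile task
    scalaCompileOptions.additionalParameters = [
        "-Ywarn-numeric-widen",
        "-Xfatal-warnings",
        "-Xplugin:" + configurations.scalaCompilerPlugin.asPath
    ]
}
```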

done 2e42e73

@jbaiera, should be ready for another look. Thanks!

jbaiera

left a comment

LGTM

#1368 introduced a plugin that documents Scala APIs for Java developers. This plugin does not support Scala 2.10, and this variant was missed in the initial testing. This commit for 7.x conditionally applies the plugin based on the Scala major version. Scala 2.10 support has been removed for 8.x.
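A minimal sketch of gating the compiler plugin on the Scala major version, assuming the build exposes that version as a project property. The property name `scalaMajorVersion` is hypothetical; the actual 7.x build may derive the version differently.

```groovy
// Hypothetical property: assumes the build defines the Scala major version
// (for example "2.10" or "2.11") as a project property named scalaMajorVersion.
def scalaMajorVersion = project.property('scalaMajorVersion').toString()

tasks.withType(ScalaCompile) {
    def params = ["-Ywarn-numeric-widen", "-Xfatal-warnings"]
    // genjavadoc does not support Scala 2.10, so only load the plugin on newer versions
    if (scalaMajorVersion != '2.10') {
        params << "-Xplugin:" + configurations.scalaCompilerPlugin.asPath
    }
    scalaCompileOptions.additionalParameters = params
}
```

Building the flag list first and assigning it once keeps the 2.10 and non-2.10 paths identical apart from the single plugin flag.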
