
How does your UHSDR software DSP work

DSP means "digital signal processing" - this is the basic foundation of the audio processing in the mcHF and all other SDRs (software defined radios) on the market.

This document is meant as a brief description of the functioning of the UHSDR software (and part of the hardware). It aims to help users understand the basic principles of their transceiver, and to give programmers an overview of the main software DSP blocks in the mcHF and other supported SDRs.

[parts of this document have been taken from my description of the Teensy SDR and adapted for the mcHF, and parts of this Wiki are inspired by the excellent source code description by Clint KA7OEI, DD4WH 2016_08_11]

As with all IQ SDR radios, the real RF (radio frequency) input signals are transformed by hardware components into complex I & Q signals (see Smith 1998 for a mathematical description of these signals). These analog I & Q signals are then digitized by an ADC (analog-digital converter) and further processed (filtered, demodulated etc.) in software. At the end, the digital signals are transformed back into analog audio by the DAC (digital-analog converter) and amplified in the headphone amp for listening. That's basically all for Rx, and in Tx the whole thing works the other way round! ;-)

In general, there are four methods for a receiver to demodulate signals (Youngblood 2003): 1. the filter method, where the signal filtering occurs in crystal/mechanical filters (commonly used in superhet receivers), 2. the phasing method (normally hardware-based in old-style tube phasing receivers or in the R2Pro, see EMRFD), 3. the “Weaver” or “Third” method (a nearly forgotten and rarely implemented method, but see Weaver 1956, Kainka 1983 & the excellent website by Summers 2015), and 4. the “Fourth method” (van Graas 1990), which uses a quadrature sampling detector (QSD) and which has become popular due to publications and a patent by Tayloe (2001).

UHSDR transceivers like the mcHF use the standard approach for an IQ SDR radio (also implemented by many of the PC-based or stand-alone commercial SDR radios like the KX2, KX3, Flex-Radio series, Softrock etc.) – i.e. a hardware QSD (fourth method) to produce the I & Q signals and the phasing method (second method) implemented in software to suppress the undesired sideband in various ways.

The hardware used to produce the I & Q signals in the mcHF is a single balanced QSD (based on U15 = 3253) followed by an OPA2350 operational amplifier (U16) that amplifies (and lowpass filters) the IQ signals. The sampling caps C68 and C69 determine the bandwidth of this QSD. Youngblood (2002) has provided us with an excellent description and explanation of why the Quadrature Sampling architecture in RX and TX acts as an additional filter. When trying to calculate this from the specs of the mcHF (single balanced QSD, sampling caps = 22nF, switching resistance of the SN74CBT3253C = 4-5 Ohms), I get: BW = 1/(2 * PI * (50+4) * 22nF) ≈ 134kHz. We would only need 48kHz here, because that is the bandwidth displayed in the spectrum and waterfall display. So, if we used 33nF or 47nF for C68 and C69, it would still be sufficient (before you replace the caps, do the math yourself! ;-)), but we would get better suppression of uninteresting frequencies and protect the ADC a bit better against overload, and this "filter" would also help against aliases in the ADC.
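The corner-frequency arithmetic above can be repeated for other capacitor values with a few lines of Python. This is a minimal sketch that applies only the single-pole RC formula quoted in the text (50 Ohm source plus an assumed 4 Ohm switch resistance). It does not model the commutating switch or the op-amp stage in more detail, so treat the results as rough estimates. The function name is hypothetical.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import math

SOURCE_OHMS = 50.0   # antenna/source impedance assumed in the text
SWITCH_OHMS = 4.0    # on-resistance of the SN74CBT3253C (4-5 Ohms per the text)

def qsd_corner_hz(cap_farads, r_source=SOURCE_OHMS, r_switch=SWITCH_OHMS):
    """Single-pole RC corner frequency 1 / (2*pi*R*C), as used in the text."""
    return 1.0 / (2.0 * math.pi * (r_source + r_switch) * cap_farads)

if __name__ == "__main__":
    for cap_nf in (22, 33, 47):
        bw = qsd_corner_hz(cap_nf * 1e-9)
        print(f"{cap_nf} nF -> {bw / 1e3:.1f} kHz")
```

With 54 Ohms this gives roughly 134kHz for 22nF, 89kHz for 33nF and 63kHz for 47nF, all still above the 48kHz mentioned above.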

The local oscillator (LO) is the famous Si570, famous not only for its low phase noise, but also for its quite high power consumption and its restriction to frequencies above 4MHz. [Best would be to use something like the Si514 as the LO, which has a much lower power demand but comparable phase noise, and also produces frequencies from 100kHz upwards (vladn has very good experience with this LO: http://theradioboard.com/rb/viewtopic.php?f=4&t=6641), and my Teensy SDR Receiver now also works with the Si514]. The LO runs at four times the Rx or Tx frequency and feeds a Johnson counter (U11). Thus, the phase noise requirements are not that high, because the Johnson counter U11 (7474) with fLO = 4 x fRx reduces the phase noise by a factor of four (20·log10(4) ≈ 12dB).
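The quadrature clock generation by the Johnson counter can be sketched as a tiny state machine. This is a generic two-flip-flop Johnson counter (D1 = NOT Q2, D2 = Q1), assumed for illustration rather than copied from the mcHF schematic, so the polarity and the assignment of outputs to the QSD switch inputs may differ. The 12dB figure is the ideal 20·log10(N) improvement of a noiseless divide-by-N. Function names are hypothetical.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import math

def johnson_counter(num_clocks):
    """Two D flip-flops clocked together (like the two halves of a 74xx74),
    wired as a 2-bit Johnson counter: D1 = NOT Q2, D2 = Q1.
    Returns the (Q1, Q2) output levels after each LO clock edge."""
    q1, q2 = 0, 0
    states = []
    for _ in range(num_clocks):
        q1, q2 = 1 - q2, q1  # both flip-flops update on the same edge
        states.append((q1, q2))
    return states

def divider_phase_noise_gain_db(divide_by):
    """Ideal phase-noise improvement of a frequency divider: 20*log10(N) dB."""
    return 20.0 * math.log10(divide_by)

if __name__ == "__main__":
    states = johnson_counter(8)
    print("Q1:", [s[0] for s in states])  # one output period = 4 LO clocks
    print("Q2:", [s[1] for s in states])  # same waveform, one LO clock (= 90 deg) later
    print(f"divide-by-4 phase-noise gain: {divider_phase_noise_gain_db(4):.1f} dB")
```

Because each output period spans four LO clocks, a one-clock delay between Q1 and Q2 is exactly a quarter period, which is why fLO = 4 x fRx yields I and Q drive signals 90 degrees apart.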

The I & Q signals from the QSD are then fed into the audio codec, where the ADC converts them at a sample rate of 48kHz. I am not sure whether there is any built-in analog anti-aliasing filter in front of the ADC.

From this point on, all processing is done in software by the STM32F4, a 32-bit microcontroller.

The software path starts by stuffing the I & Q audio samples into two buffer variables. The I and Q signals are just sampled amplitude measurements of the input signal, taken at intervals of 1/48000 sec ≈ 20.8 µsec. They are, however, 90 degrees out of phase (I = cos, Q = sin), and that is the key to the flexibility to use them for all kinds of demodulation and also other goodies in your mcHF.

- Spectrum visualization as scope display and waterfall: these display the amplitude (= magnitude) of the signal at different frequencies. Because the I & Q samples represent magnitudes at different points in time, we need a time-to-frequency-domain conversion for this purpose. The standard method for this is the Fast Fourier Transform (FFT). The spectrum and waterfall displays in the mcHF use 256-point complex FFTs, which means we have 256 "bins" = individual calculations of signal magnitude that divide up the 48kHz spectrum. So the resolution of the spectrum / waterfall display is 48000/256 = 187.5Hz. In magnify mode, we had to find other tricks to get more resolution for a higher magnification of the spectrum display: the Zoom FFT as sketched by Lyons (2011) allows a high-resolution picture of the spectrum with a resolution as fine as 6Hz!
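The bin arithmetic, and the reason complex I & Q samples let the display tell signals above and below the LO apart, can be illustrated with a naive DFT in Python. This O(N²) sketch is for illustration only; the radio itself uses an FFT, which computes the same result much faster. The test tones are placed exactly on bins to keep the picture clean, and all names are hypothetical.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import cmath
import math

FS = 48000.0
N = 256
BIN_HZ = FS / N  # 187.5 Hz per bin for a 256-point transform at 48 ksps

def iq_spectrum(i_samples, q_samples):
    """Naive complex DFT of z[n] = I[n] + jQ[n], returning |X[k]| for every bin.
    Bin k < N/2 is +k*fs/N; bin k >= N/2 is (k - N)*fs/N (below the LO)."""
    n_pts = len(i_samples)
    z = [complex(i, q) for i, q in zip(i_samples, q_samples)]
    mags = []
    for k in range(n_pts):
        acc = 0j
        for n, v in enumerate(z):
            acc += v * cmath.exp(-2j * math.pi * k * n / n_pts)
        mags.append(abs(acc))
    return mags

def tone(bin_index, n_pts=N):
    """I = cos, Q = sin of a tone sitting exactly on bin_index (negative = below the LO)."""
    i_s = [math.cos(2 * math.pi * bin_index * n / n_pts) for n in range(n_pts)]
    q_s = [math.sin(2 * math.pi * bin_index * n / n_pts) for n in range(n_pts)]
    return i_s, q_s

if __name__ == "__main__":
    for b in (16, -16):
        mags = iq_spectrum(*tone(b))
        peak = max(range(N), key=lambda k: mags[k])
        freq = (peak if peak < N // 2 else peak - N) * BIN_HZ
        print(f"tone at bin {b:+d}: peak in bin {peak} = {freq:+.1f} Hz")
```

A tone 3kHz above the LO and one 3kHz below it have identical I samples; only the sign of Q differs, and that is what moves the peak between bin 16 and bin 240.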

- Demodulation: this is, of course, the core of the UHSDR software, and demodulation with I & Q is very easy ;-):

I + Q = USB

I - Q = LSB

SQRT (I * I + Q * Q) = AM

I = synchronous AM, if we are exactly on the carrier frequency

FM = atan2(Q * I_old - I * Q_old, I * I_old + Q * Q_old) [taken from the excellent description by Clint, KA7OEI]

That's it: all demodulation can be done in software by these simple calculations. Of course, in reality it becomes a little more complex.
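The AM and FM formulas above translate almost literally into code. This minimal Python sketch works on lists of floats; the FM output is the phase change per sample in radians, and multiplying it by fs / (2·pi) turns it into a frequency offset in Hz. SSB additionally needs the Hilbert phase shift described in steps 1 & 2 below, so it is left out here. Function names are hypothetical.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import math

def am_demod(i_s, q_s):
    """AM envelope: sqrt(I*I + Q*Q) for every sample."""
    return [math.hypot(i, q) for i, q in zip(i_s, q_s)]

def fm_demod(i_s, q_s):
    """FM discriminator from the text: the phase change between consecutive samples,
    atan2(Q*I_old - I*Q_old, I*I_old + Q*Q_old), in radians per sample."""
    out = []
    for n in range(1, len(i_s)):
        i, q = i_s[n], q_s[n]
        i_old, q_old = i_s[n - 1], q_s[n - 1]
        out.append(math.atan2(q * i_old - i * q_old, i * i_old + q * q_old))
    return out

if __name__ == "__main__":
    fs = 48000.0
    offset_hz = 1000.0  # unmodulated carrier 1 kHz above the tuned frequency
    phase = [2 * math.pi * offset_hz * n / fs for n in range(480)]
    i_s = [math.cos(p) for p in phase]
    q_s = [math.sin(p) for p in phase]
    freq = [d * fs / (2 * math.pi) for d in fm_demod(i_s, q_s)]
    print(f"FM discriminator output: {freq[0]:.1f} Hz")  # constant offset -> constant output
```

Using atan2 rather than a one-argument arctangent keeps the sign of the frequency deviation and works over the full ±180 degree range.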

Because of tolerances in the hardware components, the amplitudes and phases of the I and Q signals are not perfectly matched, i.e. they are not exactly 90 degrees out of phase and their amplitudes differ a bit. This is corrected in software by scaling the I and Q signals: the amplitudes of I and Q can be calibrated by simply adjusting the input gains of the signals. Phase calibration is just as simple: a small amount of the I signal can be mixed into the Q channel and vice versa. This is equivalent to a slight phase shift of the signals (because adding a wave of different phase to another wave shifts the phase of the resulting wave, by an amount that depends on the added amplitude). Ideally, the amplitude- and phase-corrected I and Q signals then have exactly the same amplitude and are exactly 90 degrees out of phase. The mcHF can AUTOMATICALLY correct imbalances in phase and amplitude using the algorithm by Moseley & Slump (2006), which leads to a final mirror rejection of about 65dB.
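The manual phase and amplitude correction described above comes down to two lines of arithmetic per sample. This sketch assumes the simple imbalance model I = cos(ωt), Q = g·sin(ωt + φ), with g and φ already known (as after a manual calibration). It does not attempt the automatic estimation of Moseley & Slump (2006), and the function name is hypothetical.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import math

def correct_iq(i_s, q_s, gain, phase_deg):
    """Undo a known imbalance of the form I = cos(wt), Q = gain * sin(wt + phase).
    Q is rescaled by 1/gain (amplitude correction), then a small amount of I is
    subtracted from it and the result renormalised (phase correction)."""
    phi = math.radians(phase_deg)
    q_fixed = [(q / gain - i * math.sin(phi)) / math.cos(phi) for i, q in zip(i_s, q_s)]
    return list(i_s), q_fixed

if __name__ == "__main__":
    w = 2 * math.pi * 1000 / 48000
    i_s = [math.cos(w * n) for n in range(8)]
    q_s = [1.05 * math.sin(w * n + math.radians(3.0)) for n in range(8)]  # 5% gain, 3 deg error
    _, q_fixed = correct_iq(i_s, q_s, 1.05, 3.0)
    print([round(v, 6) for v in q_fixed])  # matches sin(w*n) again
```

The step that subtracts I·sin(φ) is the "mix a small amount of I into Q" idea from the text: expanding sin(ωt + φ) shows that the phase error shows up as an extra cos(ωt) term, which is exactly the I signal.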

Every SDR should use an IF (intermediate frequency) in order to get rid of all the noise present near DC (below 1kHz), caused by power line hum (50/60Hz) etc. The UHSDR software gives the user maximum flexibility to choose the IF frequency (-6kHz, +6kHz, -12kHz, +12kHz). Because we use an IF (frequency translation), we need to translate our I & Q signals to baseband before the real audio processing begins. This is accomplished using complex frequency translation. For the ±12kHz translation (one quarter of the 48kHz sample rate), this is accomplished without any multiplications, simply by swapping I and Q and changing their signs on some of the samples (see chapter 13.1.2 in Whiteley 2011 for details). For frequency translation by ±6kHz, a complex software NCO (numerically controlled oscillator) is built, and each input sample is multiplied by the real and imaginary parts of the NCO generator (for an explanation and an evaluation of its effectiveness see Lyons 2014, Wheatley 2011). The NCO uses two IF generators with 90 degrees phase shift (cosIF & sinIF) and four multiplications (I and Q each multiplied by cosIF and sinIF). At the end, the resulting products are added and subtracted depending on whether you use a negative or a positive IF.
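Both translation methods can be compared in a short Python sketch. It shows that multiplying by (-j)^n, a shift by -fs/4 (-12kHz at 48ksps), needs only sign changes and I/Q swaps, and that it matches the general NCO multiplication. Which sign of shift UHSDR applies for a given IF setting is not modelled here, and the function names are hypothetical.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import math

def nco_translate(i_s, q_s, f_shift, fs):
    """Multiply z = I + jQ by exp(j*2*pi*f_shift*n/fs): four real multiplications per sample."""
    out_i, out_q = [], []
    for n, (i, q) in enumerate(zip(i_s, q_s)):
        c = math.cos(2 * math.pi * f_shift * n / fs)  # cosIF
        s = math.sin(2 * math.pi * f_shift * n / fs)  # sinIF
        out_i.append(i * c - q * s)
        out_q.append(i * s + q * c)
    return out_i, out_q

def quarter_fs_translate(i_s, q_s):
    """Shift by -fs/4 without multiplications: multiply z by (-j)^n,
    which only swaps I and Q and flips signs."""
    out_i, out_q = [], []
    for n, (i, q) in enumerate(zip(i_s, q_s)):
        phase = n % 4
        if phase == 0:    # (I + jQ) * 1
            out_i.append(i)
            out_q.append(q)
        elif phase == 1:  # (I + jQ) * (-j) = Q - jI
            out_i.append(q)
            out_q.append(-i)
        elif phase == 2:  # (I + jQ) * (-1)
            out_i.append(-i)
            out_q.append(-q)
        else:             # (I + jQ) * (+j) = -Q + jI
            out_i.append(-q)
            out_q.append(i)
    return out_i, out_q

if __name__ == "__main__":
    fs = 48000.0
    # a tone 6 kHz above the LO ends up at 0 Hz (constant I, zero Q) after a -6 kHz shift
    ti = [math.cos(2 * math.pi * 6000 * n / fs) for n in range(8)]
    tq = [math.sin(2 * math.pi * 6000 * n / fs) for n in range(8)]
    oi, oq = nco_translate(ti, tq, -6000.0, fs)
    print([round(v, 9) for v in oi], [round(v, 9) for v in oq])
```

The NCO version works for any shift but costs four multiplications per sample; the fs/4 version is free, which is why the ±12kHz IF settings are the cheapest ones.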

After passing the frequency translation, the I and Q signals are still I and Q, but now they are in baseband and can be processed by the filters! So never switch off frequency translation in your UHSDR TRX, unless you have a very good reason to do so.

What we have achieved up to now is the downconversion of the RF signal into an audio signal, the sampling of the signal by the ADC, the conversion of the single signal into the phase-shifted I & Q, the correction of amplitude and phase of I & Q, and the conversion to baseband. So we now have nearly perfect I & Q audio signals in baseband with exactly equal amplitude and exactly 90 degrees phase difference.

Opposite sideband suppression in an IQ-SDR like the mcHF is limited by the need for accurate phase shifting AND amplitude match of the Hilbert transforms done in the I & Q paths. We need Hilbert transforms that differ by exactly 90 degrees between I and Q. This is easy to achieve in the digital domain. BUT: equal phase shift and amplitude match are not easy to obtain for the low audio frequencies [<500Hz]. I suspect that the main reason for the inaccuracy of the Hilbert transform filters is that the filter calculations are performed in single precision [the STM32F4 has a single precision FPU]. In PC SDR programs, you will have double precision! That is the reason (my hypothesis) why we have imperfect sideband rejection compared to a PC SDR program. Another reason is the phase and amplitude error in I & Q produced by the hardware (but this is nicely corrected by our automatic algorithm or by manual correction in software). Just to illustrate the accuracy needed: for 60dB sideband rejection, we need a phase shift accuracy of at least 0.1 degrees AND an amplitude match of at least 0.02dB over the entire audio range.
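The accuracy figures above follow from the standard image-rejection formula for an I/Q pair with amplitude ratio g and phase error φ: IRR = (1 + 2g·cos φ + g²) / (1 - 2g·cos φ + g²). This short Python sketch evaluates it; the function name is hypothetical.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import math

def image_rejection_db(amp_error_db, phase_error_deg):
    """Opposite sideband (image) rejection of an I/Q pair with the given
    amplitude mismatch in dB and phase error in degrees."""
    g = 10.0 ** (amp_error_db / 20.0)
    phi = math.radians(phase_error_deg)
    wanted = 1.0 + 2.0 * g * math.cos(phi) + g * g
    unwanted = 1.0 - 2.0 * g * math.cos(phi) + g * g  # zero only for a perfect match
    return 10.0 * math.log10(wanted / unwanted)

if __name__ == "__main__":
    for amp_db, ph_deg in ((0.0, 0.1), (0.02, 0.0), (0.02, 0.1), (0.1, 1.0)):
        print(f"{amp_db:5.2f} dB, {ph_deg:4.2f} deg -> {image_rejection_db(amp_db, ph_deg):5.1f} dB")
```

Each error on its own gives roughly 60dB (about 61dB for 0.1 degrees alone, about 59dB for 0.02dB alone). With both errors present at once the rejection drops to about 57dB, so the two limits have to be met simultaneously and with some margin.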

See this diagram: http://www.panoradio-sdr.de/wp-content/uploads/2017/01/ssb_suppression-1024x614.png

We would like to obtain good sideband suppression and simultaneously have good low frequency audio response. That is only possible with steep Hilbert bandpass filters. Originally, the filters in the mcHF were 89-tap Hilbert filters running at 48ksps. These do not have very steep slopes and were designed to have good low frequency audio response, which you can see in this diagram of the 3k6 Hilbert filter that had been implemented in the mcHF for many months:

In reality, this filter has about 30dB - 35dB of opposite sideband suppression and superb low frequency audio response. This is probably due to the nice flat low freq audio passband which supports low freq audio, but also leads to an unsatisfying sideband rejection.

This new filter was designed to obtain both: a nice low audio freq response AND a nice opposite sideband rejection.

It differs because it has 199 taps AND runs at a 12ksps sample rate. Thus, it can theoretically achieve a 4 times steeper slope because of the decimation and, additionally, a more than 2 times steeper slope because of the much higher number of taps --> more than 8 times steeper slopes. It should be able to provide both: good low frequency audio response (the -6dB point is at 135Hz) AND good opposite sideband suppression of at least 50dB (we hope it does ;-)).
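The "more than 8 times steeper" estimate rests on the rule of thumb that, for a given window or design method, the FIR transition width scales with fs / number of taps. This sketch just does that arithmetic; the window-dependent constant cancels in the ratio, and the function name is hypothetical.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.

def transition_width_hz(fs_hz, num_taps, window_constant=1.0):
    """Rule-of-thumb FIR transition width: window_constant * fs / num_taps.
    window_constant depends on the window/design method and cancels in a ratio."""
    return window_constant * fs_hz / num_taps

old = transition_width_hz(48000.0, 89)    # original 89-tap Hilbert filter at 48 ksps
new = transition_width_hz(12000.0, 199)   # new 199-tap Hilbert filter at 12 ksps
print(f"old: {old:.0f} Hz, new: {new:.0f} Hz, ratio: {old / new:.2f}")
```

The factor 4 comes from the sample rate (48000/12000) and the factor of about 2.2 from the tap count (199/89), giving a ratio of roughly 8.9.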

However, this filter has to be used AFTER the decimation, because the original sample rate is 48ksps. So, in order to use it, we altered the whole audio path for SSB/CW demodulation in the mcHF:

I -> FIR lowpass -> downsample-by-4 -> Hilbert +45degrees -> add/subtract for USB/LSB

Q -> FIR lowpass -> downsample-by-4 -> Hilbert -45degrees -> add/subtract for USB/LSB

add/subtract output (USB or LSB audio) -> etc.

The old audio path was:

I -> Hilbert +0degrees -> add/subtract for USB/LSB

Q -> Hilbert -90degrees -> add/subtract for USB/LSB

add/subtract output (USB or LSB audio) -> FIR lowpass -> downsample-by-4 -> etc.

The new filter is now implemented (May 11th, 2017), and we hope that the opposite sideband rejection of the mcHF will be much better now (> 50dB is our wishful thinking, which has been "confirmed" by sporadic tests and reports from other users). You are welcome to perform sophisticated, accurate and reproducible measurements and place them here as a diagram or table.

This is the path that the audio signal now has to go through:

- Hilbert transform with Hilbert FIR filters OR lowpass filtering with FIR filters (AM & FM)

- Demodulation according to the formulae above

- Decimation filter & Decimation

- Automatic notch filter (if activated)

- Automatic noise reduction (if activated)

- Audio Filter (depending on user choice, see below)

- AGC processing

- Manual notch filter (if activated)

- Manual peak filter (if activated)

- Bass equalizer

- Interpolation & Interpolation filter

- Anti-Alias Filter (only for certain filter combinations)

- Treble Equalizer

All these calculations for the different features are done in real time! Hard work for the STM32F4 . . .

Now let's have a look at these 12 steps in a little more detail, if you like . . .

1. & 2. Hilbert FIR filters are linear-phase filters that are used here to perform the "phasing" that serves to suppress the undesired sideband. In the mcHF we use a Hilbert FIR (89 taps) with 0° phase shift in the I path and a -90° phase shift in the Q path. Why do we need a 0° phase shift, isn't that pretty useless? Well, the -90° phase shift in the Q path needs processing time, so we have to spend that time in the I path too; instead of the Hilbert 0° we could alternatively use a delay. So how does this Hilbert filtering work? The desired sideband in I is not shifted. The desired sideband in Q is at 90° and is shifted by the Hilbert -90° to 0°, so it adds to the desired sideband from the I path (+3dB!). The undesired sideband has a 180° shift compared to the desired sideband and is at 180° in the I path. The undesired sideband from the Q path is at 90° and is shifted to 0° by the Hilbert, so the undesired sideband components in I & Q cancel each other out! Well, others can probably explain this a lot better: https://www.dsprelated.com/showarticle/176.php
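The phasing principle of steps 1 & 2 can be tried out with a windowed ideal Hilbert transformer in Python. This is a sketch under stated assumptions, not the Iowa Hills coefficients used in the firmware: the Blackman window, the 199-tap length and the 12ksps rate are illustrative choices, and all function names are hypothetical. The I path gets a pure delay of (taps - 1) / 2 samples, which is the "Hilbert 0° or delay" trick described above. With z = I + jQ and a filter that shifts positive frequencies by -90°, I - H{Q} keeps tones above the LO and I + H{Q} keeps tones below it. Which of the two is called USB depends on the sign convention of the hardware Q channel.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import math

def hilbert_taps(num_taps):
    """Windowed ideal Hilbert transformer (-90 degrees for positive frequencies).
    num_taps must be odd; a Blackman window tames the truncation ripple."""
    if num_taps % 2 == 0:
        raise ValueError("num_taps must be odd")
    mid = (num_taps - 1) // 2
    taps = []
    for i in range(num_taps):
        n = i - mid
        ideal = 0.0 if n % 2 == 0 else 2.0 / (math.pi * n)
        window = (0.42 - 0.5 * math.cos(2 * math.pi * i / (num_taps - 1))
                  + 0.08 * math.cos(4 * math.pi * i / (num_taps - 1)))
        taps.append(ideal * window)
    return taps

def fir(taps, x):
    """Direct-form FIR filter with zero initial state."""
    y = []
    for n in range(len(x)):
        acc = 0.0
        for k, t in enumerate(taps):
            if n - k < 0:
                break
            acc += t * x[n - k]
        y.append(acc)
    return y

def phasing_demod(i_s, q_s, num_taps=199):
    """Phasing SSB demodulator: I goes through a pure delay (the '0 degree' path),
    Q through the Hilbert FIR; difference and sum select the two sidebands."""
    hq = fir(hilbert_taps(num_taps), q_s)
    delay = (num_taps - 1) // 2  # group delay of the Hilbert FIR
    upper, lower = [], []
    for n in range(len(i_s)):
        i_delayed = i_s[n - delay] if n >= delay else 0.0
        upper.append(i_delayed - hq[n])
        lower.append(i_delayed + hq[n])
    return upper, lower

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

if __name__ == "__main__":
    fs, f, n_pts, taps = 12000.0, 1000.0, 1200, 199
    i_s = [math.cos(2 * math.pi * f * n / fs) for n in range(n_pts)]
    q_s = [math.sin(2 * math.pi * f * n / fs) for n in range(n_pts)]  # tone above the LO
    upper, lower = phasing_demod(i_s, q_s, taps)
    steady = slice(taps, n_pts)  # skip the filter start-up
    supp = 20 * math.log10(rms(upper[steady]) / rms(lower[steady]))
    print(f"wanted/unwanted sideband: {supp:.1f} dB")
```

An odd-length antisymmetric FIR like this one has an exact 90° phase shift at every frequency once its delay is compensated, so the residual opposite sideband comes only from its amplitude ripple. That ripple grows towards 0Hz, where the response of such a filter is forced to zero, which fits the weak low-frequency suppression discussed above.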

Another desired feature of the Hilbert filters is bandpass filtering. Depending on the bandwidth that the user has chosen, a suitable Hilbert filter is used. The Hilbert filters used in the UHSDR software have bandwidths of

3.6kHz, 4.5kHz, 5kHz, 6kHz, 7.5kHz or 10kHz

The coefficients have been precalculated with Iowa Hills Hilbert Filter Designer and are stored in memory in the mcHF.

Alternatively, for AM or FM demodulation we do not need the Hilbert transform; we use lowpass FIR filters instead. Again, depending on the bandwidth that the user has chosen, a suitable FIR lowpass filter is used. The FIR lowpass filters used in the mcHF have bandwidths of

2.3kHz, 3.6kHz, 4.5kHz, 5kHz, 6kHz, 7.5kHz, 10kHz

These coefficients have been precalculated with Iowa Hills FIR Filter Designer and are also stored in memory in the UHSDR software.

3. Decimation means reducing the sampling rate of a signal. This has the advantage that fewer samples have to be processed per unit of time and that filters can have a steeper stopband transition with the same number of coefficients. To prevent aliasing, we need good lowpass filtering BEFORE the decimation. This lowpass function is already fulfilled by the Hilbert/FIR filters, so we do not need to care much about that. The CMSIS decimation functions that are used in the mcHF have built-in lowpass FIR filtering with a minimum of 4 taps, so we use these 4 taps in order to be processor efficient. For filters with low bandwidth, we use decimation by 4 (12kHz sample rate); for higher bandwidths, we use decimation by 2 (24kHz sample rate).
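A decimating FIR filters the signal and keeps only every M-th output, so only those outputs need to be computed. The CMSIS-DSP decimator on the STM32 (arm_fir_decimate_f32) is built on the same idea, but this Python sketch is not its implementation. The 4-tap moving average is a hypothetical stand-in for the real built-in coefficients, and the function name is hypothetical too.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import math

def fir_decimate(x, taps, factor):
    """Lowpass-filter x with `taps` and keep every `factor`-th output sample.
    Only the kept outputs are computed, which is where the processing saving comes from."""
    out = []
    for n in range(0, len(x), factor):
        acc = 0.0
        for k, t in enumerate(taps):
            if n - k >= 0:
                acc += t * x[n - k]
        out.append(acc)
    return out

# Hypothetical 4-tap moving average standing in for the real built-in coefficients.
TAPS_4 = [0.25, 0.25, 0.25, 0.25]

if __name__ == "__main__":
    fs = 48000.0
    # A 12 kHz tone would alias to 0 Hz at the new 12 ksps rate; the 4-tap average has a null there.
    tone_12k = [math.cos(2 * math.pi * 12000.0 * n / fs) for n in range(48)]
    print(fir_decimate(tone_12k, TAPS_4, 4)[1:4])  # values close to zero
```

Between its nulls, a 4-tap average gives far less attenuation than a real anti-alias filter needs. In the UHSDR chain that job is left to the preceding Hilbert/FIR filters, as described above.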

4. & 5. The automatic notch filter and automatic noise reduction have been implemented by Clint KA7OEI using LMS algorithms. For a detailed description of these algorithms, see part 1 and part 3 of the excellent (but also math-oriented) article series by Smith (1998). This description is now outdated, however, because we have a prototype of a spectral noise reduction working; see the corresponding WIKI section on "noise reduction". The automatic notch filter is deactivated at the moment because it does not yet work with satisfying results (work in progress).
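The LMS principle behind steps 4 & 5 can be sketched as a normalized LMS adaptive predictor ("line enhancer"). This is a generic textbook sketch, not Clint's implementation: the tap count, delay and step size are arbitrary illustrative values, and the function name is hypothetical. In the classic arrangement, the prediction (the correlated part of the signal) is used for noise reduction, and the prediction error (the signal with steady tones removed) is used for the automatic notch.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import math

def lms_line_enhancer(x, num_taps=16, delay=1, mu=0.1, eps=1e-6):
    """Normalized-LMS adaptive predictor ("line enhancer").
    Predicts x[n] from the num_taps samples that end `delay` samples in the past.
    Returns (prediction, error) with prediction + error == x."""
    w = [0.0] * num_taps
    prediction, error = [], []
    for n in range(len(x)):
        # reference vector of past samples, zero before the start of the signal
        ref = [x[n - delay - k] if n - delay - k >= 0 else 0.0 for k in range(num_taps)]
        y = sum(wk * rk for wk, rk in zip(w, ref))
        e = x[n] - y
        norm = eps + sum(rk * rk for rk in ref)
        # NLMS update: step size normalized by the power in the reference vector
        w = [wk + mu * e * rk / norm for wk, rk in zip(w, ref)]
        prediction.append(y)
        error.append(e)
    return prediction, error

if __name__ == "__main__":
    carrier = [math.sin(2 * math.pi * 0.05 * n) for n in range(2000)]  # steady carrier
    _, notched = lms_line_enhancer(carrier)
    tail = notched[-500:]
    print(f"residual carrier power after adaptation: {sum(e * e for e in tail) / len(tail):.2e}")
```

A steady carrier is perfectly predictable, so the error output converges towards zero. Noise and changing speech are much less predictable and pass through into the error, which is what makes it usable as an automatic notch.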

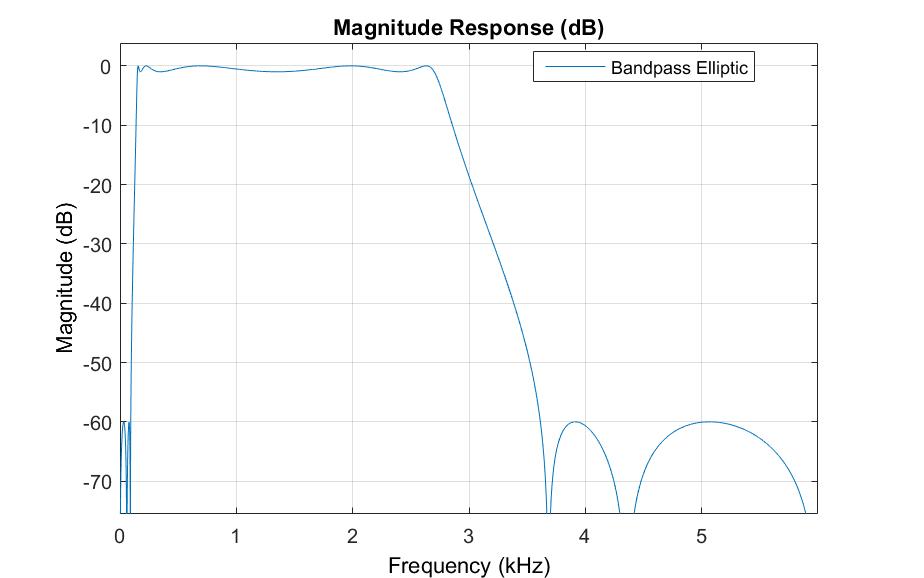

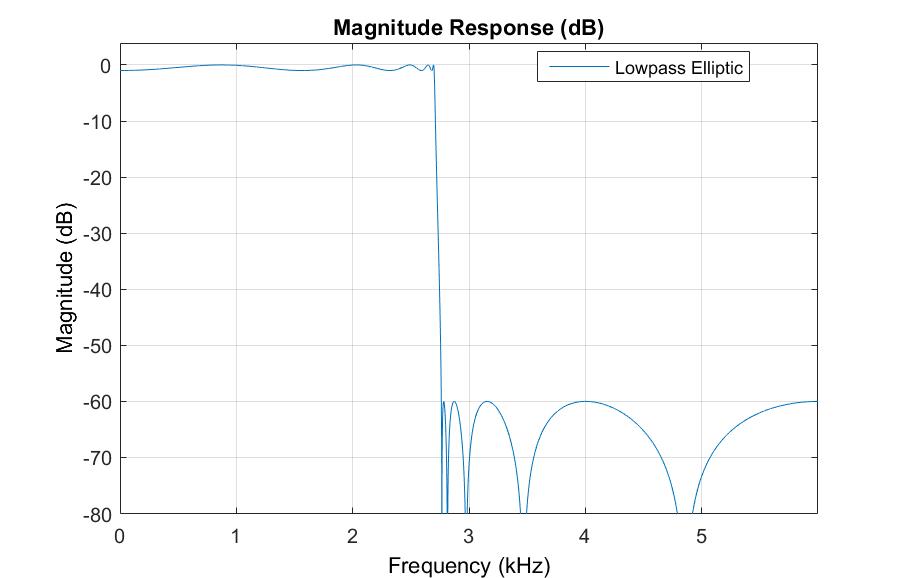

6. This is the main audio filter, the brick wall that shapes your audio signal and prevents other signals from disturbing your audio experience ;-). Clint has implemented a very efficient type of IIR filter here. The main advantage of this kind of filter over FIR filters is its high efficiency relative to the processor power it uses. Its main disadvantages are the non-linear phase response (which, at this point of the audio chain, does not matter any more, because the human ear is largely insensitive to phase differences) and the complexity of calculating the coefficients and ensuring that these filters are stable (unstable IIR filters oscillate and produce annoying output even when they have no input . . . ). The UHSDR software uses ARMA structure ladder filters at this point, which are very stable if the coefficients are calculated with the algorithms implemented in fdatool in MATLAB. We mainly use 10th order IIR filters. We expanded the range of filters that the user can choose at this point of the audio chain to the following (all filters have elliptic response and 60dB stopband):

Bandwidths:

Lowpass & bandpass versions (for some bandwidths there are several bandpass versions): 300Hz, 500Hz, 1.4kHz, 1.6kHz, 1.8kHz, 2.1kHz, 2.3kHz, 2.5kHz, 2.7kHz, 2.9kHz, 3.2kHz, 3.4kHz, 3.6kHz, 3.8kHz

Here is an example of a typical bandpass IIR filter of the mcHF:

Lowpass version only: 4.0kHz, 4.2kHz, 4.4kHz, 4.6kHz, 4.8kHz, 5.0kHz, 5.5kHz, 6.0kHz, 6.5kHz, 7.0kHz, 7.5kHz, 8.0kHz, 8.5kHz, 9.0kHz, 9.5kHz, 10.0kHz

Here is an example of a typical lowpass IIR filter of the mcHF:

7. AGC processor: The mcHF has a very well working AGC that can be adjusted by the user for virtually every specific purpose.

8. & 9. Manual notch and peak filter and bass equalizer: These three filters are implemented using cascaded IIR biquad filters. In contrast to the IIR ARMA structure filter, which uses precalculated coefficients, these filters use coefficients that are calculated on the fly in the UHSDR transceiver! Notch and peak frequencies and bass attenuation/enhancement can therefore be adjusted by the user in very fine steps. These filters are very efficient and need very little processor power, while still enabling a notch filter with a Q of 10, leading to a notch bandwidth of only a few tens of Hz! [It could even be much narrower, but you would no longer be able to use it, because you would not find the notch ;-)] The formulae for the calculation of the coefficients are based on the famous Audio EQ Cookbook by Robert Bristow-Johnson: http://www.musicdsp.org/files/Audio-EQ-Cookbook.txt
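Following the Audio EQ Cookbook formulas for the notch, a single biquad can be computed on the fly as shown below. This is a Python sketch of the cookbook equations, not the UHSDR C code. The 12kHz sample rate and the 1kHz / Q = 10 settings in the example are only illustrative, and the function names are hypothetical.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import cmath
import math

def rbj_notch(f0_hz, q, fs_hz):
    """Notch biquad from the RBJ Audio EQ Cookbook, normalised so that a0 = 1.
    Returns (b, a) with b = [b0, b1, b2] and a = [1, a1, a2]."""
    w0 = 2.0 * math.pi * f0_hz / fs_hz
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    b = [1.0 / a0, -2.0 * cos_w0 / a0, 1.0 / a0]
    a = [1.0, -2.0 * cos_w0 / a0, (1.0 - alpha) / a0]
    return b, a

def biquad(b, a, x):
    """Direct form I: y[n] = b0 x[n] + b1 x[n-1] + b2 x[n-2] - a1 y[n-1] - a2 y[n-2]."""
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y

def gain_at(b, a, f_hz, fs_hz):
    """Magnitude |H(e^jw)| of the biquad at frequency f_hz."""
    z1 = cmath.exp(-1j * 2.0 * math.pi * f_hz / fs_hz)
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return abs(num / den)

if __name__ == "__main__":
    fs = 12000.0
    b, a = rbj_notch(1000.0, 10.0, fs)
    for f in (500.0, 950.0, 1000.0, 1050.0, 2000.0):
        print(f"{f:6.0f} Hz: gain {gain_at(b, a, f, fs):.3f}")
```

Only a sine, a cosine and a few divisions are needed per coefficient update, which is why the notch frequency can follow the user's knob in fine steps.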

10. & 11. In order to convert back to the 48kHz sample rate, we use the CMSIS interpolation routine. Again, this has a built-in interpolation FIR filter, and we only use the minimal version with 4 taps. The interpolation filtering is then done with an IIR filter, which is more efficient in terms of processor power (maybe not?). For some bandwidths it is better to have a 16-tap FIR interpolation filter; in these cases we do not use an additional IIR interpolation filter. With this strategy we have completely eliminated alias products in the audio range.
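Interpolation by L means inserting L-1 zeros after every sample and then lowpass filtering with a passband gain of L. This is a generic Python sketch; CMSIS-DSP's arm_fir_interpolate_f32 uses an efficient polyphase form of the same idea. The two tap sets are hypothetical, chosen only because their effect is easy to see. Four equal taps of 1.0 simply repeat each sample (a zero-order hold, which is why further interpolation filtering is needed), while triangular taps give linear interpolation.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.

def interpolate(x, taps, factor):
    """Raise the sample rate by `factor`: insert factor-1 zeros after every input
    sample, then lowpass-filter. The filter's passband gain must equal `factor`
    to make up for the inserted zeros."""
    stuffed = []
    for v in x:
        stuffed.append(v)
        stuffed.extend([0.0] * (factor - 1))
    out = []
    for n in range(len(stuffed)):
        acc = 0.0
        for k, t in enumerate(taps):
            if n - k >= 0:
                acc += t * stuffed[n - k]
        out.append(acc)
    return out

# Hypothetical tap sets for illustration only (both sum to factor = 4):
HOLD_4 = [1.0, 1.0, 1.0, 1.0]                        # zero-order hold: repeats each sample
LINEAR_4 = [0.25, 0.5, 0.75, 1.0, 0.75, 0.5, 0.25]   # triangle: linear interpolation

if __name__ == "__main__":
    print(interpolate([1.0, 2.0], HOLD_4, 4))          # each sample repeated four times
    print(interpolate([0.0, 4.0, 0.0], LINEAR_4, 4))   # ramps up and down
```

The zero-order hold leaves strong images of the audio band around multiples of the old sample rate; the additional IIR or 16-tap FIR stage described above exists to remove them.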

12. The treble equalizer has the same structure as the bass equalizer filter (IIR biquad). Treble audio signals lie at higher frequencies, so this filter works at the 48kHz sample rate in order to prevent aliasing.
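For a treble equalizer, the matching Audio EQ Cookbook shape is the high shelf. The sketch below assumes that shape with a shelf slope S = 1. The 3kHz corner and ±6dB gain in the example are hypothetical values, not UHSDR settings, and the function names are hypothetical as well.

```python
# Illustrative sketch; all names are hypothetical, not taken from the UHSDR sources.
import cmath
import math

def rbj_high_shelf(f0_hz, gain_db, fs_hz, slope=1.0):
    """High-shelf biquad from the RBJ Audio EQ Cookbook, normalised so that a0 = 1."""
    big_a = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0_hz / fs_hz
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / 2.0 * math.sqrt((big_a + 1.0 / big_a) * (1.0 / slope - 1.0) + 2.0)
    two_sqrt_a_alpha = 2.0 * math.sqrt(big_a) * alpha
    b0 = big_a * ((big_a + 1) + (big_a - 1) * cos_w0 + two_sqrt_a_alpha)
    b1 = -2.0 * big_a * ((big_a - 1) + (big_a + 1) * cos_w0)
    b2 = big_a * ((big_a + 1) + (big_a - 1) * cos_w0 - two_sqrt_a_alpha)
    a0 = (big_a + 1) - (big_a - 1) * cos_w0 + two_sqrt_a_alpha
    a1 = 2.0 * ((big_a - 1) - (big_a + 1) * cos_w0)
    a2 = (big_a + 1) - (big_a - 1) * cos_w0 - two_sqrt_a_alpha
    return [b0 / a0, b1 / a0, b2 / a0], [1.0, a1 / a0, a2 / a0]

def gain_db_at(b, a, f_hz, fs_hz):
    """Gain of the biquad in dB at frequency f_hz."""
    z1 = cmath.exp(-1j * 2.0 * math.pi * f_hz / fs_hz)
    h = (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return 20.0 * math.log10(abs(h))

if __name__ == "__main__":
    fs = 48000.0
    b, a = rbj_high_shelf(3000.0, 6.0, fs)
    for f in (0.0, 1000.0, 3000.0, 10000.0, 24000.0):
        print(f"{f:7.0f} Hz: {gain_db_at(b, a, f, fs):+.2f} dB")
```

The shelf leaves low frequencies at 0dB and reaches the full boost or cut towards the Nyquist frequency, so the same routine serves for both treble enhancement and attenuation.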

All audio calculations in the TX path are done at the 48kHz sample rate.

1. Audio source (microphone, USB, LINE)

2. IIR Filter: All TX IIR filters are bandpass filters, implemented as 10th order elliptic ARMA structure IIR filters with 60dB stopband attenuation and precalculated coefficients [fdatool in MATLAB]. There are three TX audio filters the user can choose from:

SOPRANO: 250-2950Hz

TENOR: 150-2850Hz

BASS: 50-2750Hz

[No, we will not provide other bandwidths for the TX filters, because these filters are already at the legal bandwidth limit in many countries]

3. Bass & Treble Equalizer: Bass & treble response for TX can also be adjusted by the user (-20dB to +5dB). This is implemented with two cascaded biquad filters, similar to the RX case. This filter is specific to the SSB TX case; in all other modes this filter is skipped.

4. FIR Hilbert transform with two mighty 201-tap filters: I with 0° phase shift, Q with -90° phase shift. The problem with Hilbert transforms is that they perform badly at low audio frequencies (Lyons 2011). So do not expect superb sideband suppression when you look at a 300Hz tone in SSB mode: the sideband suppression for that tone is about -38dBc. For higher frequencies, e.g. a 1000Hz tone, the sideband suppression can be as good as -70dBc or better. I have also tested FIR Hilbert filters at this point with +45° phase shift in the I path and -45° phase shift in the Q path. These two filters had exactly the same sideband suppression and filter response in the low frequency audio band, so we still use the 0° and -90° version.

5. Compression

6. Frequency translation

7. I & Q amplitude and phase correction

TO BE CONTINUED . . .

Thanks to all contributors in forums and all SDR radio fans who helped and/or commented: Clint KA7OEI, vladn, Andreas DF8OE, Danilo DB4PLE, Michael DL2FW, Esteban Benito, Joris van Scheindelen PE1KTH, Pete El Supremo, Rich Heslip VE3MKC, Rob PA0RWE, and kpc.

KA7OEI: http://ka7oei.blogspot.de/2015_03_01_archive.html

http://ka7oei.blogspot.de/2015/12/adding-fm-transmit-to-mchf-transceiver.html

http://ka7oei.blogspot.de/2015/11/fm-squelch-and-subaudible-tone.html

http://ka7oei.blogspot.de/2015/05/adding-waterfall-display-to-mchf.html

http://ka7oei.blogspot.de/2015/03/update-on-mchf-adding-more-features.html

Hayward, W., R. Campbell & B. Larkin (2003): Experimental Methods in RF Design. – ARRL. [= EMRFD]

Heslip, R. (2015): The Teensy Software Defined Radio. – The QRP Quarterly July 2015: 37-40.

Kainka, B. (1983): Transceiver-Zwischenfrequenzteil nach der dritten Methode. – cq-DL 1/83: 4-9.

Lyons, R.G. (2011): Understanding Digital Signal Processing. – Pearson, 3rd edition.

Moseley, N.A. & C.H. Slump (2006): A low-complexity feed-forward I/Q imbalance compensation algorithm. in 17th Annual Workshop on Circuits, Nov. 2006, pp. 158–164. http://doc.utwente.nl/66726/1/moseley.pdf

PA0RWE, R. (2015): Stand-alone SDR ontvanger met de PSoC. - Benelux QRP Club Nieuwsbrief 156: 19-21.[see also: http://pa0rwe.nl]

Pratt, W. (2017): WDSP guide. - Using WDSP - for software developers. - https://github.com/TAPR/OpenHPSDR-wdsp/blob/master/WDSP%20Guide.pdf

Smith, D. (1998): Signals, Samples and Stuff: A DSP Tutorial (Part 1). – QEX Mar/Apr 1998: 3-16.[see also the other three parts: http://www.arrl.org/software-defined-radio]

Summers, H. (2015): Weaver article library. – online: http://www.hanssummers.com/weaver/weaverlib [2015-12-06]

van Graas, H. (1990): The Fourth Method: Generating and Detecting SSB Signals. – QEX Sep 1990: 7-11. [cited by Youngblood 2003; if anybody has the original paper, I would be glad to receive a pdf]

Weaver, D. (1956): A Third Method of Generation of Single-Sideband Signals. – Proceedings of the IRE, Dec 1956.

Wheatley, M. (2011): CuteSDR Technical Manual Ver. 1.01. - http://sourceforge.net/projects/cutesdr/

Youngblood, G. (2003): A software-defined radio for the masses (Part 4). – QEX Mar/Apr 2003: 20-31. http://www.arrl.org/software-defined-radio
