change load local ./ hf parquet dataset #398

@microsoft-github-policy-service agree

@kindaQ HF datasets has caching (https://huggingface.co/docs/datasets/cache), so if you copy the downloaded data to a machine and set HF_DATASETS_CACHE correctly, that machine can load the datasets directly without downloading. If you agree that caching solves your issue, we would prefer not to merge this PR, since it adds another layer of complexity. If caching does not solve your case, please explain. Thank you.

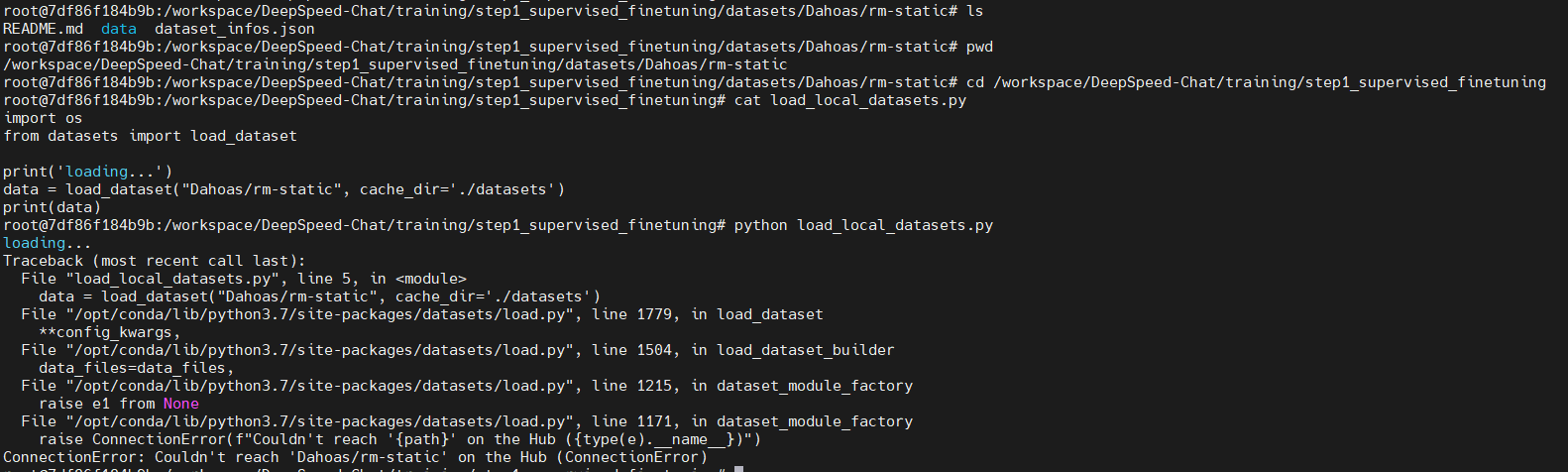

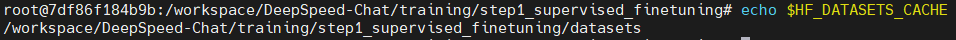

I have tried `export HF_DATASETS_CACHE="/path/to/another/directory"` and `dataset = load_dataset('LOADING_SCRIPT', cache_dir="PATH/TO/MY/CACHE/DIR")`. Would you please try it on a machine that cannot connect to huggingface.co? The cache_dir only changes the directory where the downloaded cache files are saved; it does not skip the download step.

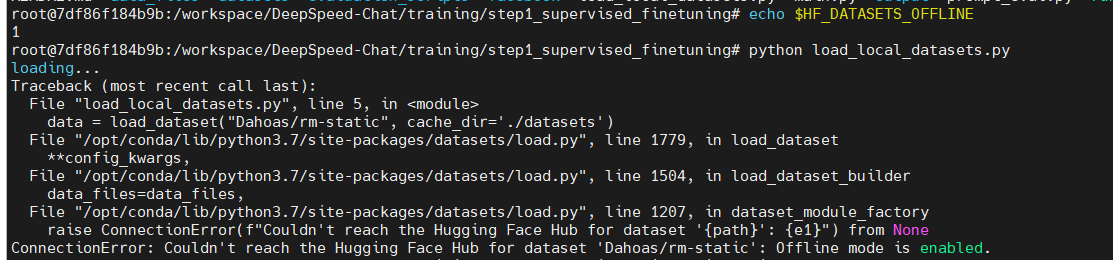

Based on HF's doc https://huggingface.co/docs/datasets/loading#offline, could you try setting HF_DATASETS_OFFLINE to 1 to enable full offline mode?
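The two suggestions so far (a copied cache directory plus offline mode) can be sketched together. This is a minimal sketch, assuming the cache directory was copied from a machine with network access; `configure_hf_offline` and `load_cached` are hypothetical helper names, not part of `datasets`. The variables are set before `datasets` is imported because the library is expected to read them at import time.

```python
import os


def configure_hf_offline(cache_dir: str) -> None:
    """Point HF datasets at a pre-populated cache and request offline mode.

    Hypothetical helper: call it before `import datasets`, since the library
    is expected to read these environment variables when it is imported.
    """
    os.environ["HF_DATASETS_CACHE"] = cache_dir  # directory holding cached datasets
    os.environ["HF_DATASETS_OFFLINE"] = "1"      # ask the library not to download


def load_cached(dataset_name: str):
    """Load a dataset by its Hub name, relying on the cache configured above."""
    # Imported late so the environment variables above are already in place.
    from datasets import load_dataset  # third-party: pip install datasets

    return load_dataset(dataset_name)
```

Whether this avoids the download depends on the contents of the copied cache and on the installed `datasets` version, so it is worth verifying on the machine without network access.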

I have reviewed the code of "load_dataset", the first param only support so changing cache_dir could not solve my issue

        return raw_datasets.DahoasFullhhrlhfDataset(output_path, seed,
                                                     local_rank)
    elif dataset_name == "Dahoas/synthetic-instruct-gptj-pairwise":
        dn = None

Please change dn to a longer, meaningful name, such as local_data_dir.

     class PromptRawDataset(object):

    -    def __init__(self, output_path, seed, local_rank):
    +    def __init__(self, output_path, seed, local_rank, dataset_name=None):

dataset_name is a confusing variable name; I recommend changing it to local_data_dir. The same comment applies to all other classes.
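One way to read this suggestion is to keep the Hub identifier and the optional local directory as separate arguments, and then decide which one to hand to `load_dataset`, which accepts either a Hub name or a local directory path as its first argument. A minimal sketch under that assumption follows; `resolve_dataset_source` and its parameter names are hypothetical and are not the code merged in this PR.

```python
import os
from typing import Optional


def resolve_dataset_source(hub_name: str,
                           local_data_dir: Optional[str] = None) -> str:
    """Return the string to pass as the first argument of `load_dataset`.

    If `local_data_dir` is given it must exist and is returned as-is;
    otherwise the Hub identifier is returned (which needs network access
    or a populated cache).
    """
    if local_data_dir is None:
        return hub_name
    if not os.path.isdir(local_data_dir):
        # Fail early with a clear message instead of a late download error.
        raise FileNotFoundError(f"local_data_dir not found: {local_data_dir}")
    return local_data_dir
```

A dataset class could then call `load_dataset(resolve_dataset_source("Dahoas/rm-static", local_data_dir))`, keeping the meaning of each argument explicit.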

        return None


    class LocalParquetDataset(PromptRawDataset):

Why do we need this class? This LocalParquetDataset class is never used in your PR. Please remove it if it's not necessary.

@kindaQ Thanks for the clarifications. I now agree that this PR is needed. I have finished my review and left some comments that need fixes from you. Please also write a short paragraph of documentation about this feature at https://github.com/microsoft/DeepSpeedExamples/blob/master/applications/DeepSpeed-Chat/README.md#-adding-and-using-your-own-datasets-in-deepspeed-chat, so that other users can understand how to use your contribution. Also, the formatting test failed; please follow https://www.deepspeed.ai/contributing/ to fix it with pre-commit.

@kindaQ This PR had formatting issues. I fixed them this time, but next time please make sure to use pre-commit to resolve them: "pre-commit install", then "pre-commit run --files files_you_modified".

Thanks a lot.

* change load local ./ hf parquet dataset
* change get_raw_dataset load local dir of downloaded huggingface datasets
* pre-commit formatting

---------

Co-authored-by: Conglong Li <[email protected]>

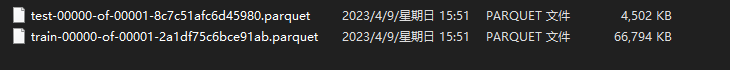

Add loading of downloaded Hugging Face datasets, for machines that cannot connect to huggingface.co.
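For a dataset that was downloaded as Parquet files, one option is the packaged `parquet` builder of `load_dataset` with explicit `data_files`. The sketch below is an illustration only, assuming the local directory contains files whose names start with the split name (for example `train-*.parquet`); `find_parquet_splits`, `load_local_parquet`, the directory layout, and the split names are assumptions, not this PR's implementation.

```python
import glob
import os
from typing import Dict, List, Sequence


def find_parquet_splits(local_dir: str,
                        splits: Sequence[str] = ("train", "test")
                        ) -> Dict[str, List[str]]:
    """Map each split name to the sorted Parquet files found for it."""
    data_files: Dict[str, List[str]] = {}
    for split in splits:
        # "**" with recursive=True also matches files directly in local_dir.
        pattern = os.path.join(local_dir, "**", f"{split}*.parquet")
        matches = sorted(glob.glob(pattern, recursive=True))
        if matches:
            data_files[split] = matches
    return data_files


def load_local_parquet(local_dir: str):
    """Load the discovered files with the packaged `parquet` builder."""
    from datasets import load_dataset  # third-party: pip install datasets

    data_files = find_parquet_splits(local_dir)
    if not data_files:
        raise FileNotFoundError(f"no Parquet files found under {local_dir}")
    return load_dataset("parquet", data_files=data_files)
```

Listing the files explicitly keeps the split assignment visible, which can help when the downloaded layout differs between datasets.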