-

Notifications

You must be signed in to change notification settings - Fork 358

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Fix value_counts() to work properly when dropna is True #1116

Conversation

Codecov Report

@@ Coverage Diff @@

## master #1116 +/- ##

==========================================

- Coverage 95.14% 95.11% -0.04%

==========================================

Files 35 35

Lines 7003 7039 +36

==========================================

+ Hits 6663 6695 +32

- Misses 340 344 +4

Continue to review full report at Codecov.

|

| pser.index.value_counts(ascending=True, dropna=False), almost=True) | ||

|

|

||

| # Series with MultiIndex some of index is NaN. | ||

| # This test only available for pandas >= 0.24. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why are these only for pandas >= 0.24?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

because pandas < 0.24 doesn't support None for MultiIndex like below way.

>>> pd.__version__

'0.23.0'

>>> pidx = pd.MultiIndex.from_tuples([('x', 'a'), None, ('y', 'c')])

Traceback (most recent call last):

...

TypeError: object of type 'NoneType' has no len()so i think this test should be ran on pandas >= 0.24

| set_arrow_conf = False | ||

| if LooseVersion(pyspark.__version__) < LooseVersion("2.4") and \ | ||

| default_session().conf.get("spark.sql.execution.arrow.enabled") == "true": | ||

| default_session().conf.set("spark.sql.execution.arrow.enabled", "false") | ||

| set_arrow_conf = True |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why do we need this?

If we need this, could you make sure to set back to the original conf?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

we need this to test at pyspark2.3 same reason with #1116 (comment),

i fixed the test logic to make sure setting back to original conf value.

Thanks for the review :)

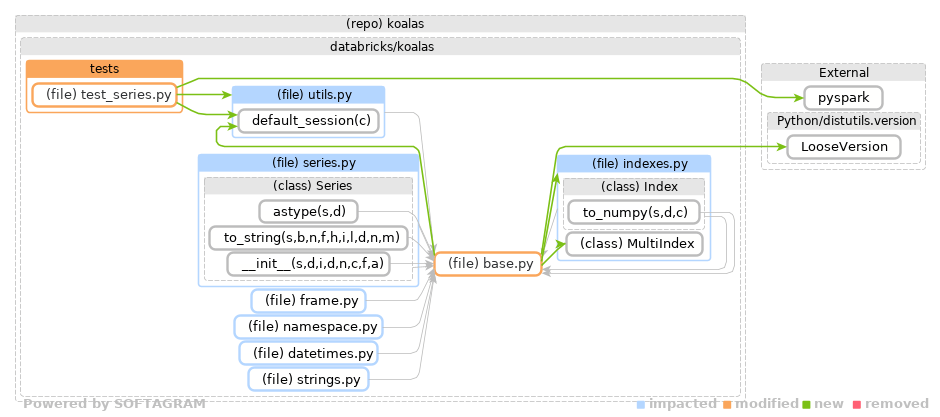

Softagram Impact Report for pull/1116 (head commit: c04be15)

|

| RuntimeError, | ||

| lambda: ks.MultiIndex.from_tuples([('x', 'a'), ('x', 'b')]).value_counts()) | ||

| else: | ||

| self._test_value_counts() |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could you disable arrow execution only when pyspark < 2.4 and for MultiIndex?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

okay i will 👍

thanks !

| from databricks.koalas.indexes import MultiIndex | ||

| if LooseVersion(pyspark.__version__) < LooseVersion("2.4") and \ | ||

| default_session().conf.get("spark.sql.execution.arrow.enabled") == "true" and \ | ||

| isinstance(self, MultiIndex): | ||

| raise RuntimeError("if you're using pyspark < 2.4, set conf " | ||

| "'spark.sql.execution.arrow.enabled' to 'false' " | ||

| "for using this function with MultiIndex") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Btw, I think we should do this only in MultiIndex by overriding.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ah, that seems better. thanks for the advice!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

|

Let me make a followup. |

|

@HyukjinKwon ah, thanks! can you cc me after finished?? |

This PR address the comment at #1116 (comment)

This PR address the comment at databricks/koalas#1116 (comment)

This PR Resolves comment #949 (comment)