
Images being skewed by Frame Converters? #1115


The official Java API of OpenCV doesn't support custom strides (steps) well. You might need to resize your images if you need to use that API.

Leptonica also doesn't do too well in that department...

We could enhance the frame converters to copy the buffers in those cases, though.

Can you explain what you mean by custom strides? I'm generating the images by using ghost4j to convert from PDF to a Java Image, then processing them as a Mat to deskew them, then returning them as an Image. As far as I can tell, the Image makes it through this intact, but has issues when I convert it again later. I got these examples by using ImageIO.write() on the Image, then using Imgcodecs.imwrite() for the Mat.

BufferedImage can't use native memory, so we need to copy buffers anyway in that case; so yes, the strides could get normalized that way.
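Copying the buffer is also the natural place to normalize the stride: each row is copied on its own, so padding in the source is skipped and padding in the destination is added. A minimal sketch in plain Java, assuming an 8-bit interleaved image held in a `byte[]`; `StrideRepacker`, `alignedStride` and `repack` are hypothetical names, not part of JavaCV.

```java
import java.util.Arrays;

/** Hypothetical helper: copies an 8-bit interleaved image row by row into a new stride. */
final class StrideRepacker {

    /** Rounds rowBytes up to the next multiple of alignment (alignment must be > 0). */
    static int alignedStride(int rowBytes, int alignment) {
        return ((rowBytes + alignment - 1) / alignment) * alignment;
    }

    /**
     * Copies the width*channels meaningful bytes of each source row (rows are srcStride bytes
     * apart) into a new buffer whose rows are alignedStride(width*channels, alignment) bytes
     * apart. Destination padding bytes stay zero.
     */
    static byte[] repack(byte[] src, int width, int height, int channels,
                         int srcStride, int alignment) {
        int rowBytes = width * channels;
        int dstStride = alignedStride(rowBytes, alignment);
        byte[] dst = new byte[dstStride * height];
        for (int row = 0; row < height; row++) {
            System.arraycopy(src, row * srcStride, dst, row * dstStride, rowBytes);
        }
        return dst;
    }

    public static void main(String[] args) {
        // 2x2 RGB image stored tightly (stride 6), repacked to 4-byte aligned rows (stride 8).
        byte[] tight = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12};
        byte[] aligned = repack(tight, 2, 2, 3, 6, 4);
        System.out.println(Arrays.toString(aligned));
        // prints [1, 2, 3, 4, 5, 6, 0, 0, 7, 8, 9, 10, 11, 12, 0, 0]
    }
}
```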

I see. You said earlier that I could resize the images to use that API. How would I go about doing that? I figure you don't mean just decreasing the resolution. Would I have to do something to change the strides somehow?

Resizing images with OpenCV so they have a width that is a multiple of 4 should make them compatible with pretty much anything.
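The multiple-of-4 rule follows from the row arithmetic: with 8-bit samples and 1 or 3 channels, a row holds width × channels bytes, and because 1 and 3 are odd, that count is a multiple of 4 exactly when the width is. In that case a continuous Mat and a 4-byte-aligned layout (such as Leptonica's 32-bit words) describe the same bytes. A small sketch of the computation, assuming the extra columns are added on the right; `AlignedWidth` and its methods are hypothetical names.

```java
/** Hypothetical helper showing why a width that is a multiple of 4 avoids row padding. */
final class AlignedWidth {

    /** Smallest width >= cols that is a multiple of 4. */
    static int paddedWidth(int cols) {
        return (cols + 3) & ~3;
    }

    /** True if a row of 8-bit samples already ends on a 4-byte boundary. */
    static boolean rowIsAligned(int cols, int channels) {
        return (cols * channels) % 4 == 0;
    }

    public static void main(String[] args) {
        for (int cols = 8; cols <= 11; cols++) {
            int padded = paddedWidth(cols);
            System.out.printf("cols %d: gray aligned=%b, rgb aligned=%b, pad right by %d -> %d%n",
                    cols, rowIsAligned(cols, 1), rowIsAligned(cols, 3), padded - cols, padded);
        }
    }
}
```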

Thank you, that worked! I made it add a little padding on the right when scanning them in, so that the number of columns is a multiple of four, and that fixed it. If you don't mind explaining, why is that the case? Why is this affected by the number of columns?

The Wikipedia article is a good introduction, I find: https://en.wikipedia.org/wiki/Stride_of_an_array
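To connect stride to the skew: if a buffer whose rows are padded (stride larger than width × channels) is read as if it were tightly packed, every row starts a few bytes later than the reader expects, and the error grows by the same amount on each row. A vertical line therefore turns into a diagonal one, which looks like a shear. A small pure-Java illustration, assuming an 8-bit grayscale image 10 pixels wide whose rows are padded to 12 bytes; the class and method names are hypothetical.

```java
/** Hypothetical demo: reading a padded buffer as tightly packed shears the image. */
final class StrideShearDemo {

    /** Builds a grayscale image with a vertical line (value 1) at column 0, rows `stride` bytes apart. */
    static byte[] verticalLine(int width, int height, int stride) {
        byte[] data = new byte[stride * height];
        for (int row = 0; row < height; row++) {
            data[row * stride] = 1;
        }
        return data;
    }

    /** Column where the line appears in `row` when the buffer is read with `readStride`, or -1. */
    static int apparentColumn(byte[] data, int width, int row, int readStride) {
        for (int col = 0; col < width; col++) {
            int index = row * readStride + col;
            if (index < data.length && data[index] == 1) {
                return col;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int width = 10, height = 5, actualStride = 12; // 10 bytes rounded up to a multiple of 4
        byte[] data = verticalLine(width, height, actualStride);
        for (int row = 0; row < height; row++) {
            // Assuming stride == width (no padding): the line drifts 2 columns per row.
            System.out.println("row " + row + ": line at column "
                    + apparentColumn(data, width, row, width));
        }
    }
}
```

With the correct stride (12) every row reports column 0; with the assumed stride (10) the rows report 0, 2, 4, 6 and 8.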

I rewrote the converter function; it now supports 8-bit grayscale and 8-bit RGB without corrupting the stride of the … Unfortunately, I had to drop big-endian support entirely, as I lack a machine to test it on. I didn't touch the reverse function at all; maybe I'll do it when I have a bit of spare time, but no promises... Also, I removed …

```java
import org.bytedeco.javacpp.BytePointer;
import org.bytedeco.javacpp.Pointer;
import org.bytedeco.javacv.Frame;
import org.bytedeco.javacv.LeptonicaFrameConverter;
import org.bytedeco.leptonica.PIX;

import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.IntBuffer;

public class FixedLeptonicaFrameConverter extends LeptonicaFrameConverter {

    PIX pix;

    static boolean isEqual(Frame frame, PIX pix) {
        return pix != null && frame != null && frame.image != null && frame.image.length > 0
                && frame.imageWidth == pix.w() && frame.imageHeight == pix.h()
                && frame.imageChannels == pix.d() / 8 && frame.imageDepth == Frame.DEPTH_UBYTE
                && (ByteOrder.nativeOrder().equals(ByteOrder.LITTLE_ENDIAN)
                    || new Pointer(frame.image[0]).address() == pix.data().address())
                && frame.imageStride * Math.abs(frame.imageDepth) / 8 == pix.wpl() * 4;
    }

    @Override
    public PIX convert(Frame frame) {
        if (frame == null || frame.image == null) {
            return null;
        } else if (frame.opaque instanceof PIX) {
            return (PIX) frame.opaque;
        } else if (!isEqual(frame, pix)) {
            // I simply lack a machine to test this.
            if (ByteOrder.nativeOrder().equals(ByteOrder.BIG_ENDIAN)) {
                System.err.println("This converter does not support running on big-endian machines");
                return null;
            }

            // PIX data should be packed as tightly as possible, see https://github.com/DanBloomberg/leptonica/blob/0d4477653691a8cb4f63fa751d43574c757ccc9f/src/pix.h#L133
            // For anything other than grayscale or RGB at 8 bits per channel, this involves more
            // bit-shift logic than I'm willing to write (and I lack test cases).
            if (frame.imageChannels != 3 && frame.imageChannels != 1) {
                System.err.printf("Image has %d channels, converter only supports 3 (RGB) or 1 (grayscale) for input%n",
                        frame.imageChannels);
                return null;
            }
            if (frame.imageDepth != Frame.DEPTH_UBYTE || !(frame.image[0] instanceof ByteBuffer)) {
                System.err.printf("Image has bit depth %d, converter only supports 8 (1 byte/px) for input%n",
                        frame.imageDepth);
                return null;
            }

            // Leptonica scan lines must be padded to a multiple of 32 bits / 4 bytes, otherwise one gets nasty scan effects.
            // See http://www.leptonica.org/library-notes.html#PIX
            int srcChannelDepthBytes = frame.imageDepth / 8;
            int srcBytesPerPixel = srcChannelDepthBytes * frame.imageChannels;

            // Leptonica counts RGB images as 24 bits per pixel, while the data actually uses 32 bits per pixel
            int destBytesPerPixel = srcBytesPerPixel;
            if (frame.imageChannels == 3) {
                // RGB pixels are stored as RGBA, so they take up 4 bytes!
                // See https://github.com/DanBloomberg/leptonica/blob/master/src/pix.h#L157
                destBytesPerPixel = 4;
            }

            int currentStrideLength = frame.imageWidth * destBytesPerPixel;
            int targetStridePad = (4 - currentStrideLength % 4) % 4;
            int targetStrideLength = currentStrideLength + targetStridePad;

            ByteBuffer src = ((ByteBuffer) frame.image[0]).order(ByteOrder.LITTLE_ENDIAN);
            int newSize = targetStrideLength * frame.imageHeight;
            ByteBuffer dst = ByteBuffer.allocate(newSize).order(ByteOrder.LITTLE_ENDIAN);

            // The source bytes are BGR, so they have to be copied byte by byte to match Leptonica's RGBA.
            // TODO: use qword copy ops?
            byte[] rowData = new byte[targetStrideLength];
            for (int row = 0; row < frame.imageHeight; row++) {
                for (int col = 0; col < frame.imageWidth; col++) {
                    // frame.imageStride is the source row length, which may include padding
                    int srcIndex = (frame.imageStride * row) + (col * frame.imageChannels);
                    if (frame.imageChannels == 1) {
                        rowData[col] = src.get(srcIndex);
                    } else {
                        int dstIndex = col * destBytesPerPixel;
                        // Convert BGR (OpenCV's standard ordering) to RGB (Leptonica)
                        // See https://learnopencv.com/why-does-opencv-use-bgr-color-format/
                        rowData[dstIndex] = src.get(srcIndex + 2);     // dst: r
                        rowData[dstIndex + 1] = src.get(srcIndex + 1); // dst: g
                        rowData[dstIndex + 2] = src.get(srcIndex);     // dst: b
                        rowData[dstIndex + 3] = 0;                     // dst: unused 4th byte
                    }
                }
                // Leptonica stores pixels MSB-first within each 32-bit word, so on a little-endian
                // machine every 4-byte group of the row has to be reversed.
                IntBuffer words = ByteBuffer.wrap(rowData).order(ByteOrder.BIG_ENDIAN).asIntBuffer();
                dst.position(row * targetStrideLength);
                dst.asIntBuffer().put(words);
            }
            dst.position(0);

            pix = PIX.create(frame.imageWidth, frame.imageHeight, destBytesPerPixel * 8, new BytePointer(dst));
        }
        return pix;
    }
}
```

Test harness:

```java
import org.bytedeco.javacv.Frame;
import org.bytedeco.javacv.LeptonicaFrameConverter;
import org.bytedeco.javacv.OpenCVFrameConverter;
import org.bytedeco.leptonica.PIX;
import org.bytedeco.leptonica.global.leptonica;
import org.bytedeco.opencv.global.opencv_imgcodecs;
import org.bytedeco.opencv.opencv_core.Mat;
import org.bytedeco.opencv.opencv_core.Point;
import org.bytedeco.opencv.opencv_core.Scalar;

import static org.bytedeco.opencv.global.opencv_core.CV_8UC1;
import static org.bytedeco.opencv.global.opencv_core.CV_8UC3;
import static org.bytedeco.opencv.global.opencv_imgproc.line;

public class FrameConverterTest {

    public static void main(String[] args) {
        testBw(10, 8);
        testBw(10, 9);
        testBw(10, 10);
        testBw(10, 11);
        testRgb(10, 8);
        testRgb(10, 9);
        testRgb(10, 10);
        testRgb(10, 11);
    }

    public static void testBw(int rows, int cols) {
        Mat originalImage = new Mat(rows, cols, CV_8UC1);
        int stepX = 255 / cols;
        int stepY = 255 / rows;
        int stepTotal = Math.min(stepX, stepY);
        for (int i = 0; i < originalImage.rows(); i++) {
            for (int j = 0; j < originalImage.cols(); j++) {
                line(originalImage, new Point(j, i), new Point(j, i), new Scalar(stepTotal * j));
            }
        }
        convertAndWrite(originalImage, "bw-" + rows + "x" + cols);
    }

    public static void testRgb(int rows, int cols) {
        Mat originalImage = new Mat(rows, cols, CV_8UC3);
        int stepX = 255 / cols;
        int stepY = 255 / rows;
        for (int i = 0; i < originalImage.rows(); i++) {
            for (int j = 0; j < originalImage.cols(); j++) {
                // Warning: OpenCV uses BGR ordering under the hood!
                // See https://learnopencv.com/why-does-opencv-use-bgr-color-format/
                line(originalImage, new Point(j, i), new Point(j, i), new Scalar(i * stepY, j * stepX, 0, 0));
            }
        }
        convertAndWrite(originalImage, "rgb-" + rows + "x" + cols);
    }

    // Writes the Mat, converts it Mat -> Frame -> PIX with the fixed converter and writes the PIX,
    // so both BMP files can be compared side by side.
    static void convertAndWrite(Mat originalImage, String name) {
        LeptonicaFrameConverter lfcFixed = new FixedLeptonicaFrameConverter();
        OpenCVFrameConverter.ToMat matConverter = new OpenCVFrameConverter.ToMat();
        String dir = System.getProperty("user.dir");

        System.out.printf("orig%n capacity %d%n w %d%n h %d%n ch %d%n dpt %d%n str %d%n",
                originalImage.asByteBuffer().capacity(), originalImage.cols(), originalImage.rows(),
                originalImage.channels(), originalImage.depth(), originalImage.step());
        opencv_imgcodecs.imwrite(dir + "/mat-" + name + ".bmp", originalImage);

        Frame ocrFrame = matConverter.convert(originalImage);
        System.out.printf("frame%n capacity %d%n w %d%n h %d%n ch %d%n dpt %d%n str %d%n",
                ocrFrame.image[0].capacity(), ocrFrame.imageWidth, ocrFrame.imageHeight,
                ocrFrame.imageChannels, ocrFrame.imageDepth, ocrFrame.imageStride);

        PIX converted = lfcFixed.convert(ocrFrame);
        if (converted == null) {
            System.err.println("conversion failed for " + name);
            return;
        }
        System.out.printf("fixconverted pix%n capacity %d%n w %d%n h %d%n dpt %d%n wpl %d%n",
                converted.createBuffer().capacity(), converted.w(), converted.h(),
                converted.d(), converted.wpl());
        leptonica.pixWrite(dir + "/pix-" + name + ".bmp", converted, leptonica.IFF_BMP);
    }
}
```
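The least obvious step in the converter above is the per-row byte swap. Leptonica keeps image data in 32-bit words with the leftmost pixel in the most significant byte, so on a little-endian machine each 4-byte group appears reversed in memory. The converter gets this by reading the row as big-endian ints and writing them through a little-endian view. The same trick in isolation, as a stdlib-only sketch (it only matches Leptonica's in-memory layout on little-endian hosts); `WordSwap` and `swapWords` are hypothetical names.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.Arrays;

/** Hypothetical sketch of the per-word byte reversal used when filling Leptonica data. */
final class WordSwap {

    /** Reverses the byte order inside every 4-byte word; row.length must be a multiple of 4. */
    static byte[] swapWords(byte[] row) {
        if (row.length % 4 != 0) {
            throw new IllegalArgumentException("row length must be a multiple of 4");
        }
        ByteBuffer out = ByteBuffer.allocate(row.length).order(ByteOrder.LITTLE_ENDIAN);
        // Interpret the bytes as big-endian ints, then store each int little-endian.
        out.asIntBuffer().put(ByteBuffer.wrap(row).order(ByteOrder.BIG_ENDIAN).asIntBuffer());
        return out.array();
    }

    public static void main(String[] args) {
        byte[] rgba = {10, 20, 30, 0, 40, 50, 60, 0}; // two pixels: R, G, B, unused byte
        System.out.println(Arrays.toString(swapWords(rgba)));
        // prints [0, 30, 20, 10, 0, 60, 50, 40]
    }
}
```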

I also seriously question the usability of …

Great! Please open a pull request with that.

@saudet Sure, can do. I'd take the liberty of turning the test harness into unit tests. Are you fine with JUnit 5?


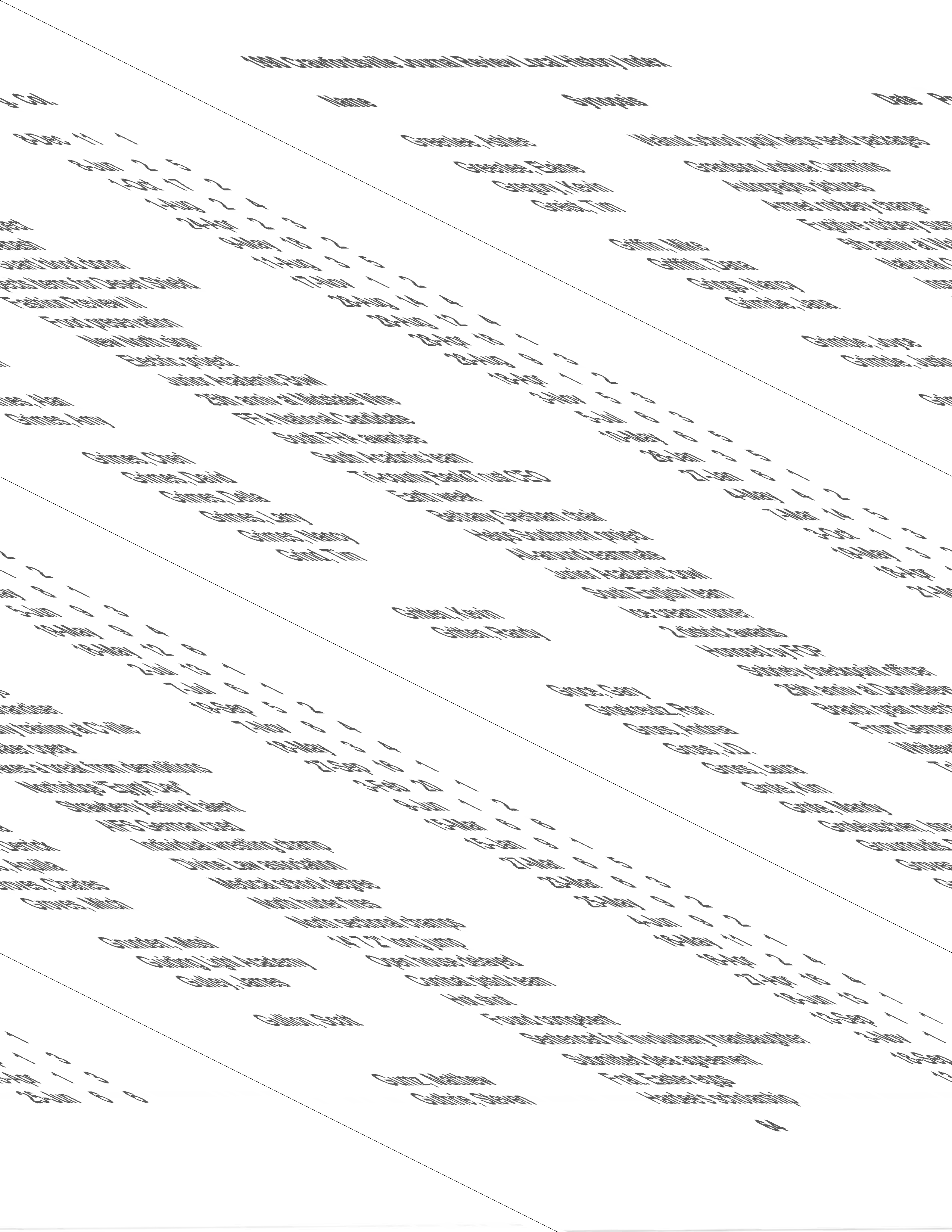

I've been working on a project that needs to convert between several different image types (BufferedImage, Mat, PIX), and I've noticed a strange issue when using the JavaCV frame converters: the output image ends up heavily skewed. For example, the original BufferedImage:

vs. the Mat after conversion:

Does anyone know what could be causing this?
