A TensorFlow implementation of Xi Chen et al.'s paper "InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets" (see https://arxiv.org/abs/1606.03657). I've added a supervised loss to the original InfoGAN to make the generated categories consistent and to stabilize training. The result is promising: the model generates consistent categories and converges faster than the original InfoGAN.
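As a rough illustration of the idea, the sketch below computes a category loss that mixes a supervised term (real images with their true labels) and the usual InfoGAN term (generated images with the category code that was sampled for them). It is a minimal pure-Python sketch, not the code in `train.py`: the function names, the use of softmax cross-entropy, and the equal weighting of the two terms are all assumptions made for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target_index):
    """Negative log-probability assigned to the target category."""
    return -math.log(softmax(logits)[target_index])


def category_loss(real_logits, real_labels, fake_logits, sampled_codes):
    """Average category loss over real and generated samples.

    Hypothetical helper: real_labels are ground-truth classes (the
    supervised part), sampled_codes are the category codes that were
    fed to the generator (the InfoGAN part). Both parts are weighted
    equally here, which is an assumption.
    """
    supervised = [cross_entropy(l, y) for l, y in zip(real_logits, real_labels)]
    info = [cross_entropy(l, c) for l, c in zip(fake_logits, sampled_codes)]
    losses = supervised + info
    return sum(losses) / len(losses)
```

Because the real images pin each category index to a true class, the generated categories no longer depend on an arbitrary assignment discovered during training, which is one plausible reading of why the categories come out consistent.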

- tensorflow >= rc0.10

- sugartensor >= 0.0.1

Execute

    python train.py

to train the network. Checkpoint (ckpt) files and log files are written to the 'asset/train' directory. Run `tensorboard --logdir asset/train/log` to monitor the training process.

Execute

    python generate.py

to generate a sample image. The 'sample.png' file will be written to the 'asset/train' directory.

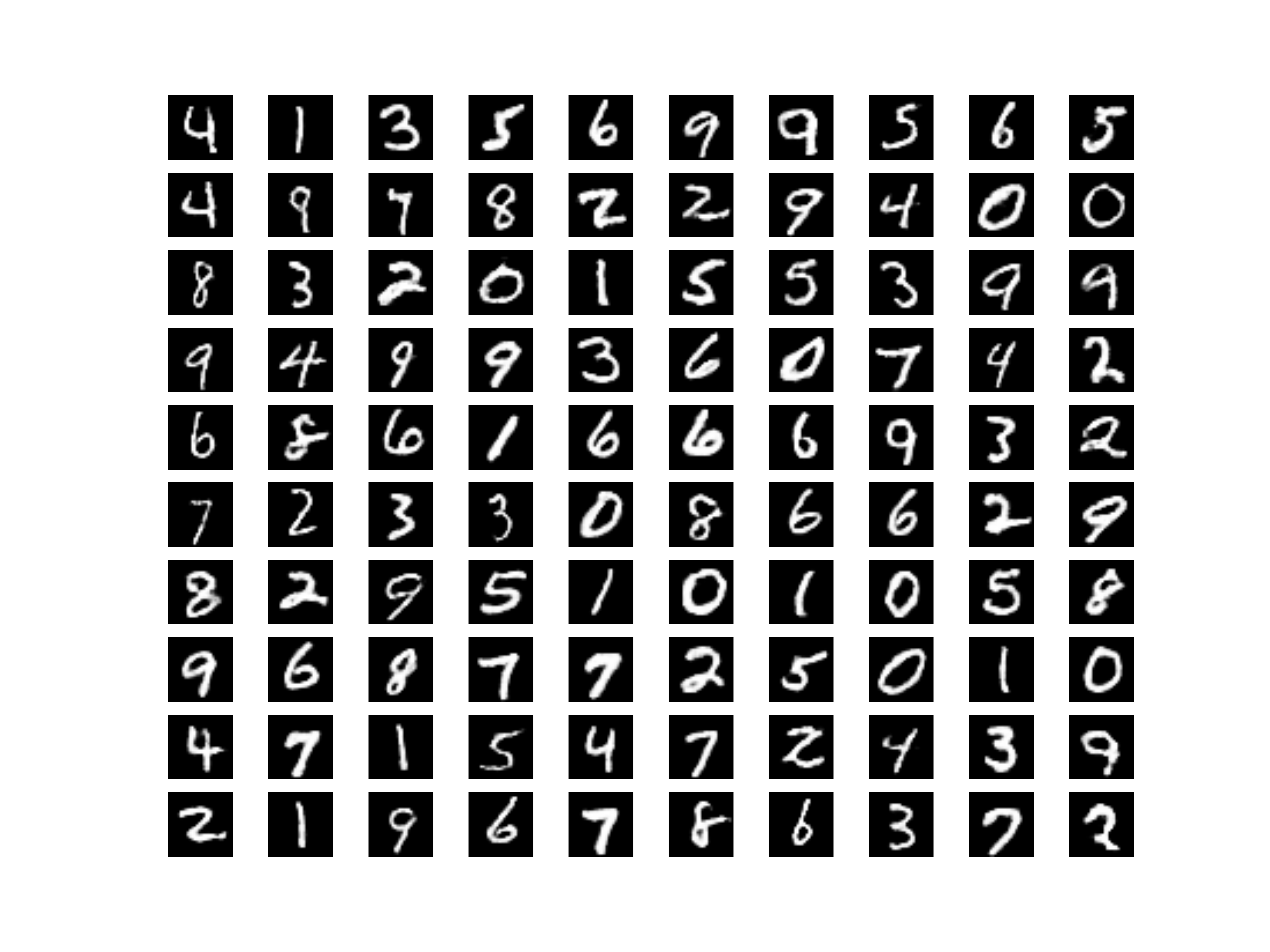

This image was generated by the Supervised InfoGAN network.

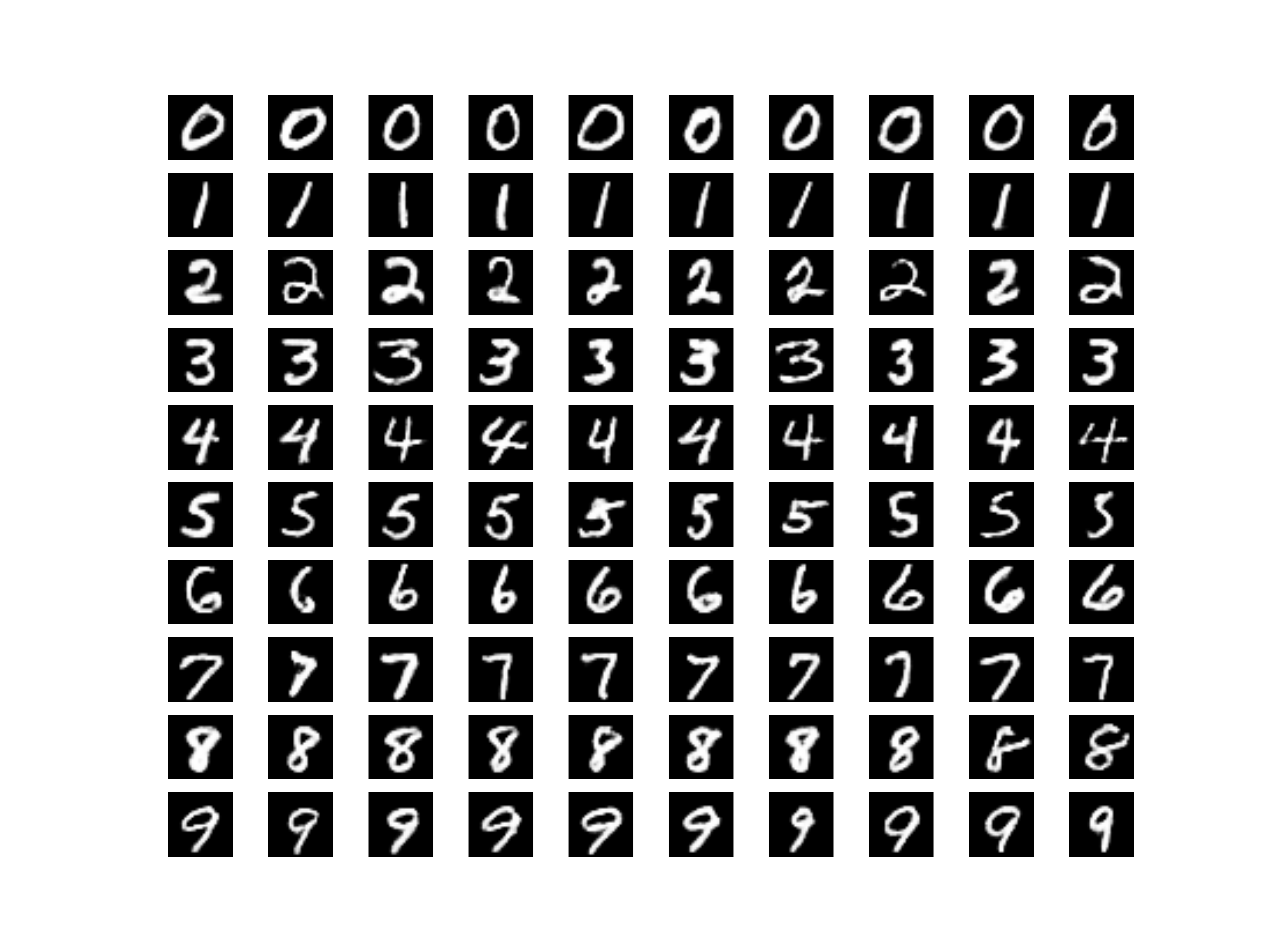

And this image was generated by varying the category factors.

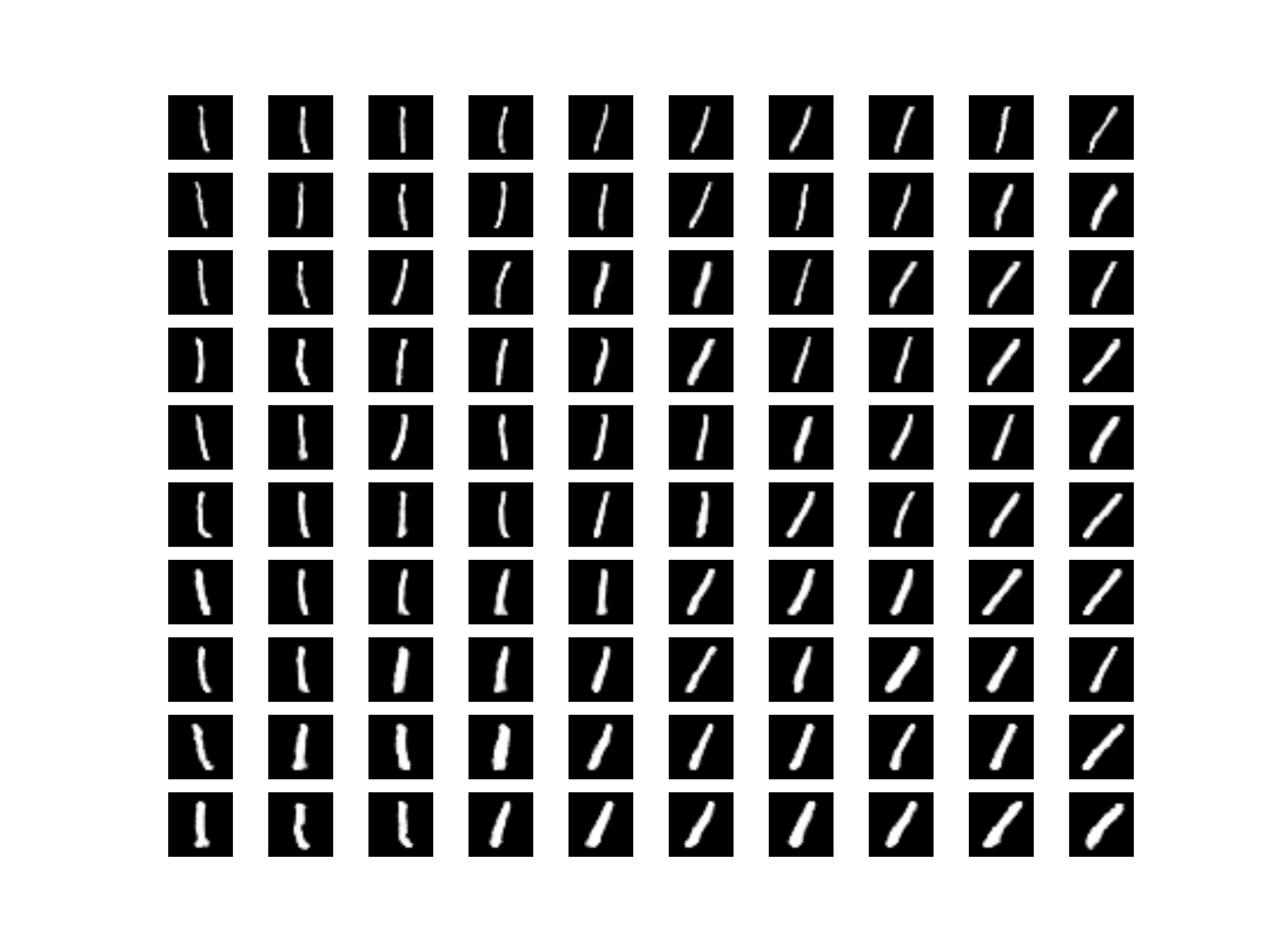

And this image was generated by varying the continuous factors.

You can see the rotation change along the X axis and the thickness change along the Y axis.
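A grid like this can be produced by holding the category fixed and sweeping two continuous factors over a regular grid. The sketch below builds such a grid of latent codes in plain Python. The helper names, the number of categories (10), the sweep range of -1 to 1, and the zero-filled remaining noise are all assumptions for illustration; `generate.py` may lay out its latent vector differently.

```python
def one_hot(index, size):
    """One-hot list of length `size` with a 1.0 at `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def linspace(start, stop, count):
    """Evenly spaced values from start to stop, inclusive."""
    if count == 1:
        return [start]
    step = (stop - start) / (count - 1)
    return [start + step * i for i in range(count)]


def continuous_grid(category, num_categories=10, steps=10,
                    low=-1.0, high=1.0, noise_dim=0):
    """Build a steps x steps grid of latent codes for one category.

    Columns sweep the first continuous factor (X axis), rows sweep the
    second (Y axis). Any remaining noise dimensions are held at zero,
    which is an assumption made to keep the sketch deterministic.
    """
    values = linspace(low, high, steps)
    grid = []
    for y in values:
        row = []
        for x in values:
            row.append(one_hot(category, num_categories) + [x, y]
                       + [0.0] * noise_dim)
        grid.append(row)
    return grid
```

Feeding each code in the grid to the generator and tiling the outputs in the same row and column order would give one image per cell, with one factor changing left to right and the other top to bottom.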

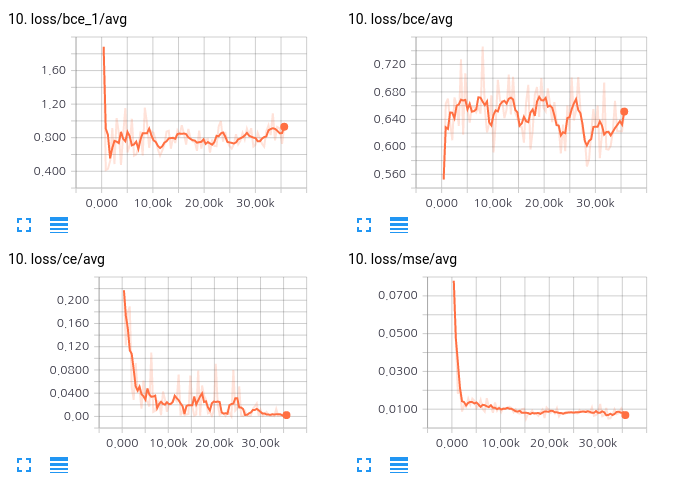

The loss chart from the training process looks more stable than that of my original InfoGAN implementation.

- Original GAN tensorflow implementation

- InfoGAN tensorflow implementation

- EBGAN tensorflow implementation

Namju Kim at Jamonglabs Co., Ltd.