-

Notifications

You must be signed in to change notification settings - Fork 650

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

add dascoin adaptor #1356

add dascoin adaptor #1356

Conversation

|

I don't know if I can help, but maybe by walking through it together we can find an answer. The exception is generated at https://github.com/oxarbitrage/bitshares-core/blob/2055342dc4c94a70bf358e7a6773c29f0f49ec38/libraries/chain/fork_database.cpp#L81:L87 It seems to be checking to see if the item (block) passed as a parameter has its previous id set to "empty" or a "null value". If not, look the value up in the index to get the actual block, and set it in the member item->prev. But while previous_id() is not the "empty" value, it is also not a value that is in the index. So my first guess would be that previous_id() is returning garbage (perhaps never set correctly, or setting another value overwrote this one), or is being filled by a value that is not a block id. |

|

Your node may have received the blocks out-of-sequence. In the specific case head_block_num is 12573, and it tried to push 12578 on top of that. It's only really a problem if it fails to push 12574 on top of 12573. |

|

|

||

| if(_elasticsearch_operation_object) { | ||

| oho->op.visit(fc::from_static_variant(os.op_object, FC_PACK_MAX_DEPTH)); | ||

| if (os.op_object.is_object()) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In what case isn't the result an object? And why is the operation completely ignored in that case?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't see operations that are not objects, added an else with a log to check if it was happening at some point. Synchronized a decent part of the chain without hitting the log.

If it happen for any reason i think it will be ok to avoid visiting the adaptor completely, rest of the data will be inserted anyways.

Unsure if remove the check or not.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

IMO remove it. We already know that oho->op is a valid operation object at this point. It will be serialized using fc::reflect, which always creates an fc::variant_object.

| else if (element.is_array()) | ||

| adapt(element.get_array()); | ||

| } | ||

| for (const auto& i : keys_to_rename) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What's the point of renaming keys with an underscore? Example perhaps?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I am trying to contact Damir from Dascoin to ask some of this questions and invite to participate here if the can and want.

By investigating a bit the final data in elastic i found that for example underscore is used at operation_history.op_object.amount_.amount in a transfer operation. Probably in this case there are issues with a field and subfield named the same but not 100% certain about it.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

in https://pastebin.com/7n2YkkTH for operation_type=17 amount_ is used. trying to find more samples by checking all the operation types.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

operation_type 33 https://pastebin.com/Pr1XiWDg uses _amount but please also note owner_

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hm, maybe ES gets confused if for a given field name the value can have different types? I. e. either a JSON-object or a number in the case of "amount"? In that case the selection algorithm for keys to rename might not work in all cases.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ok, but I think that will not be caught by the keys_to_rename check. The check only operates within a single operation.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

You are right here. it seems that the amount_.amount is done at this check. There is maybe an issue with having field.field with Elastic. Will research more and try to figure this out.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hello folks, maybe I can shed some light here; please note that the adaptor is created to best fit the Dascoin use, so it might need some tweaking for BitShares.

First thing to note is the fact that ES creates schema as it see fields for the first time, meaning that i.e. if in one operation we see a fields called 'foo' of type String, then all subsequent fields named 'foo' need to be of the same type. That why I had to rename some of the fields.

Second thing is that ES sometimes gets confused with fields named 'foo.foo.bar', in which case I also had to perform a rename.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks @damirn . I like the approach and as you mention we have to do a bit more in Bitshares side to make this work.

By checking the console output of elastic while adding data i am seeing:

[operation_history.op_object.total_claimed.amount] of different type, current_type [text], merged_type [long]

I think this one is because sometimes numbers came from the core as "212112"(if they are too big) and some others just as 222. so we probably can convert to string all numbers(or all to long) in amount fields. Other ideas welcome.

similar issue here:

mapper [operation_history.op_object.min_to_receive.amount] of different type, current_type [long], merged_type [text]

Other i am observing to rename in the proposals:

Can't merge a non object mapping [operation_history.op_object.proposed_ops.op] with an object mapping [operation_history.op_object.proposed_ops.op]

I propose to add code to fix those in Bitshares, keep going and find more errors, fix if needed. What do you think @pmconrad ?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@oxarbitrage I have resolved the issue of "212112" vs 222 by recompiling fc with WITH_EXOTIC_JSON_PARSERS defined and then calling fc::json::to_string() with fc::json::output_formatting::legacy_generator as a second parameter.

| fc::mutable_variant_object tmp2(tmp.get_object()); | ||

| if (tmp2.find("current_fees") != tmp2.end()) | ||

| { | ||

| tmp2.erase("current_fees"); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why?

libraries/plugins/elasticsearch/include/graphene/elasticsearch/elasticsearch_plugin.hpp

Outdated

Show resolved

Hide resolved

|

Synchronized up to block #2180000. No error in the witness_mode side either in the ES console. All This is with the last commit and with the changes needed at FC. |

libraries/plugins/elasticsearch/include/graphene/elasticsearch/elasticsearch_plugin.hpp

Show resolved

Hide resolved

libraries/plugins/elasticsearch/include/graphene/elasticsearch/elasticsearch_plugin.hpp

Show resolved

Hide resolved

|

Merged fc, please bump. |

943c097 to

4f9094f

Compare

| auto& memo = o["memo"]; | ||

| if (memo.is_string()) | ||

| { | ||

| o["memo_"] = variant(o["memo"]); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

o["memo"] already is a variant, no need to wrap it in another one.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looks good to me, thanks!

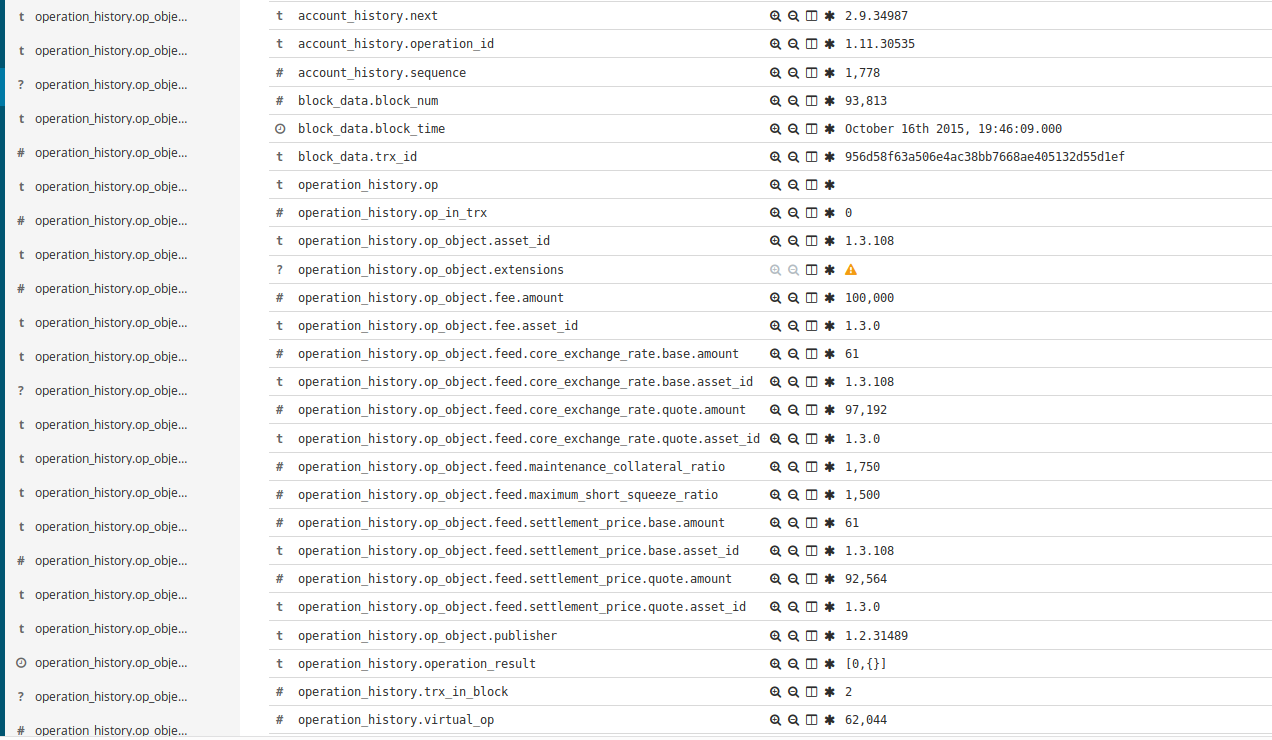

This pull request is the Dascoin(https://github.com/techsolutions-ltd/dascoin-blockchain) modifications made to elasticsearch plugin to index all operation fields. This code was provided to me by Damir from Dascoin.

Basically it creates the

adaptor_structin the hpp and visit it at the cpp indoOperationHistorywhen sendingopfield.The rest are some specific changes made by me to remain compatible with previous plugin versions like adding the object in new field

op_objectand adding argument to use this code withelasticsearch-operation-objectNow we have everything indexed as:

Problem i am having is that many blocks seems to be having some issue that i am trying to identify:

any input is appreciated.