Catanatron is a high-performance simulator and strong AI player for Settlers of Catan. You can run thousands of games in a matter of seconds. The goal is to find the strongest Settlers of Catan bot possible.

Get Started with the Full Documentation: https://docs.catanatron.com

Join our Discord: https://discord.gg/FgFmb75TWd

Catanatron provides a `catanatron-play` CLI tool for running large-scale simulations.

- Clone the repository:

      git clone git@github.com:bcollazo/catanatron.git
      cd catanatron/

- Create a virtual environment (requires Python 3.11 or higher):

      python -m venv venv
      source ./venv/bin/activate

- Install dependencies:

      pip install -e .

- (Optional) Install developer and advanced dependencies:

      pip install -e ".[web,gym,dev]"

Run simulations and generate datasets via the CLI:

    catanatron-play --players=R,R,R,W --num=100

Generate datasets from the games to analyze:

    catanatron-play --num 100 --output my-data-path/ --output-format json

See more examples at https://docs.catanatron.com.
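When several simulation runs need to be scripted, it can help to assemble the command line programmatically. The following is a minimal sketch, not part of Catanatron itself: `build_play_command` and `run_play` are hypothetical helper names, and the only flags used are the ones shown above (`--players`, `--num`, `--output`, `--output-format`). It assumes `catanatron-play` is on your `PATH`.

```python
import subprocess
from typing import List, Optional, Sequence


def build_play_command(
    num: int,
    players: Optional[Sequence[str]] = None,
    output: Optional[str] = None,
    output_format: Optional[str] = None,
) -> List[str]:
    """Build an argument list for catanatron-play (hypothetical helper).

    Only flags that appear in the examples above are emitted.
    """
    cmd = ["catanatron-play", f"--num={num}"]
    if players:
        # Player codes are passed through unchanged, e.g. ["R", "R", "R", "W"].
        cmd.append("--players=" + ",".join(players))
    if output is not None:
        cmd += ["--output", output]
    if output_format is not None:
        cmd += ["--output-format", output_format]
    return cmd


def run_play(cmd: Sequence[str]) -> None:
    """Run the command and raise CalledProcessError if it exits non-zero."""
    subprocess.run(list(cmd), check=True)
```

For example, `run_play(build_play_command(100, players=["R", "R", "R", "W"]))` would launch the first command shown above.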

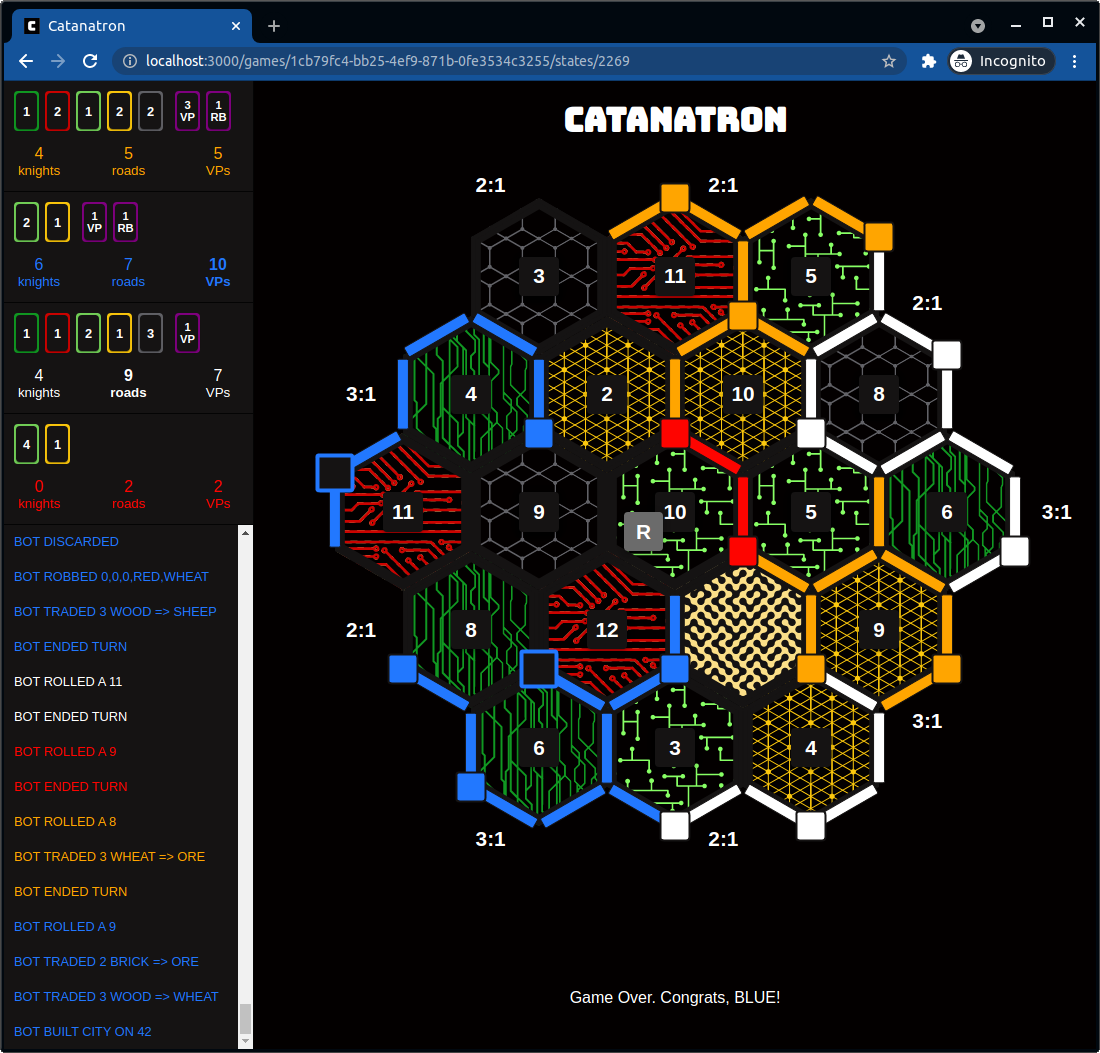

We provide Docker images so that you can watch, inspect, and play games against Catanatron via a web UI!

- Ensure you have Docker installed (https://docs.docker.com/engine/install/).

- Run the `docker-compose.yaml` in the root folder of the repo:

      docker compose up

- Visit http://localhost:3000 in your browser!

You can also use the `catanatron` package directly, which provides a core implementation of the Settlers of Catan game logic.

    from catanatron import Game, RandomPlayer, Color

    # Play a simple 4-player game
    players = [
        RandomPlayer(Color.RED),
        RandomPlayer(Color.BLUE),
        RandomPlayer(Color.WHITE),
        RandomPlayer(Color.ORANGE),
    ]
    game = Game(players)
    print(game.play())  # returns winning color

See more at https://docs.catanatron.com
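A single game says little about player strength, so a natural next step is to play many games and count the winners. The sketch below uses only the `Game`, `RandomPlayer`, and `Color` names from the snippet above; `tally_winners` and `play_random_game` are hypothetical helpers introduced for illustration. It assumes `game.play()` returns the winning color, and it tallies whatever value is returned, so a game that ends without a winner would be counted under its own key.

```python
from collections import Counter
from typing import Callable, Hashable, Optional


def tally_winners(
    play_one_game: Callable[[], Optional[Hashable]], num_games: int
) -> Counter:
    """Call play_one_game() num_games times and count each returned winner."""
    wins: Counter = Counter()
    for _ in range(num_games):
        winner = play_one_game()
        wins[winner] += 1  # a None result (no winner) is counted as well
    return wins


def play_random_game():
    """Play one game between four random players and return game.play()."""
    # Imported here so tally_winners can be used without catanatron installed.
    from catanatron import Game, RandomPlayer, Color

    colors = (Color.RED, Color.BLUE, Color.WHITE, Color.ORANGE)
    players = [RandomPlayer(color) for color in colors]
    return Game(players).play()


# Usage (requires catanatron to be installed):
#   print(tally_winners(play_random_game, num_games=100))
```

Passing the game factory as a callable keeps the counting logic independent of how players are constructed, so the same helper can compare other player types.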

For reinforcement learning, catanatron provides an OpenAI Gym / Gymnasium environment.

Install it with:

    pip install -e .[gym]

and use it like:

    import random
    import gymnasium
    import catanatron.gym

    env = gymnasium.make("catanatron/Catanatron-v0")
    observation, info = env.reset()
    for _ in range(1000):
        # your agent here (this takes random actions)
        action = random.choice(info["valid_actions"])

        observation, reward, terminated, truncated, info = env.step(action)
        done = terminated or truncated
        if done:
            observation, info = env.reset()
    env.close()

See more at: https://docs.catanatron.com
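To swap in your own agent, the loop above can be factored so that the policy is a plain function. This is a minimal sketch under the same assumptions as the example: the environment follows the Gymnasium `reset`/`step` API and exposes the legal moves in `info["valid_actions"]`. The names `run_episode` and `random_policy` are hypothetical, not part of catanatron.

```python
import random
from typing import Any, Callable, Sequence


def run_episode(
    env: Any,
    choose_action: Callable[[Any, Sequence[Any]], Any],
    max_steps: int = 10_000,
) -> float:
    """Play one episode with the given policy and return the summed reward."""
    observation, info = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        # The policy sees the observation and the currently valid actions.
        action = choose_action(observation, info["valid_actions"])
        observation, reward, terminated, truncated, info = env.step(action)
        total_reward += reward
        if terminated or truncated:
            break
    return total_reward


def random_policy(observation: Any, valid_actions: Sequence[Any]) -> Any:
    """Baseline policy: ignore the observation and pick a valid action at random."""
    return random.choice(valid_actions)
```

With the environment from the example, `run_episode(env, random_policy)` plays one episode with random moves; replacing `random_policy` with a learned policy leaves the loop unchanged.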

Full documentation here: https://docs.catanatron.com

To develop Catanatron's core logic, install the dev dependencies and run the test suite:

    pip install .[web,gym,dev]
    coverage run --source=catanatron -m pytest tests/ && coverage report

See more at: https://docs.catanatron.com

See the motivation of the project here: 5 Ways NOT to Build a Catan AI.