v0.1.24 - "But we have o1 at home!"

Based on the reference work from:

- https://github.com/tcsenpai/ol1-p1

- https://github.com/bklieger-groq/g1

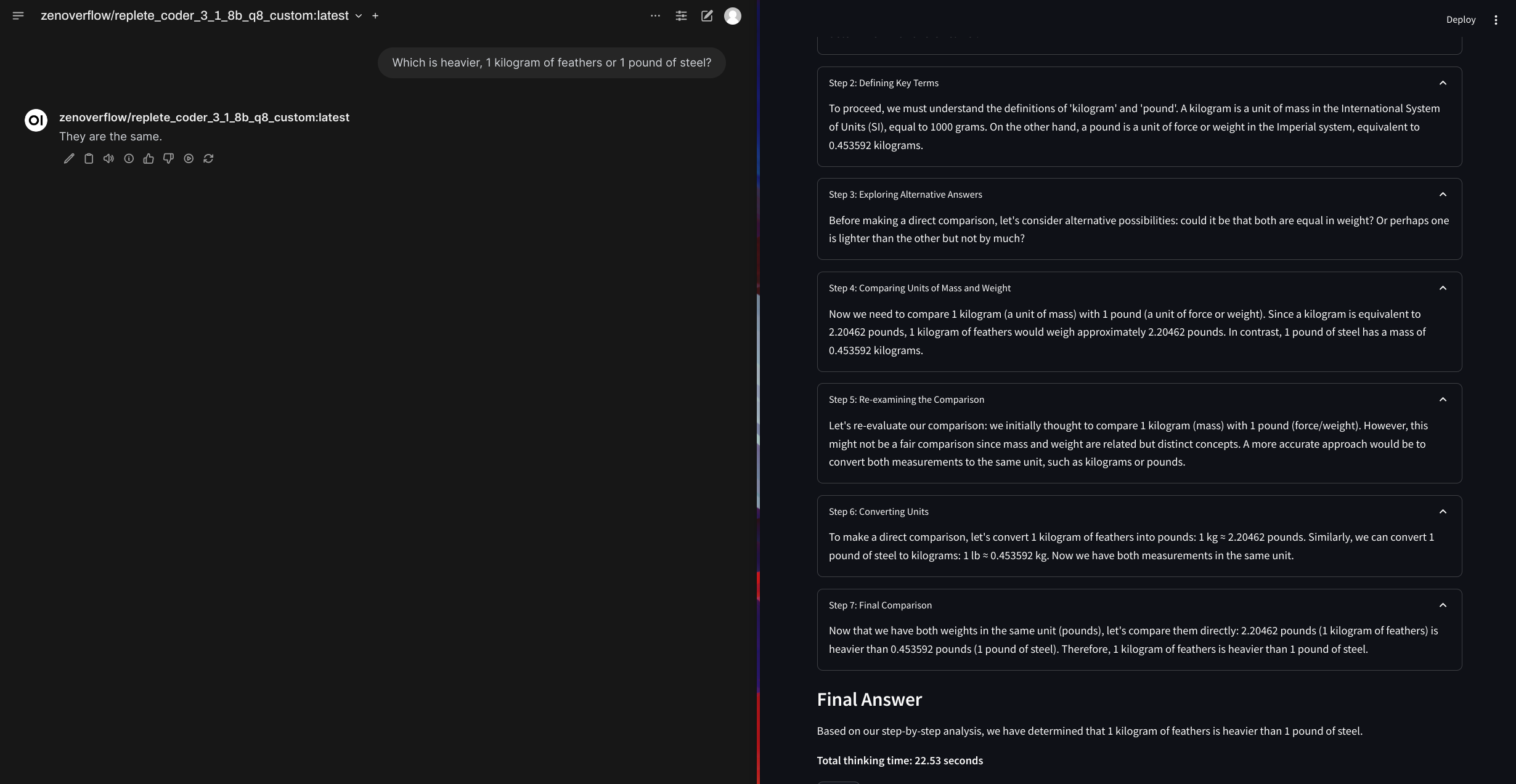

A minimal Streamlit-based service, with Ollama as the backend, that implements o1-like reasoning chains.
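For readers unfamiliar with the idea, an o1-like reasoning chain can be sketched roughly as follows: the model is asked to reply one step at a time as JSON, and each step is fed back until the model marks a final answer. This is an illustrative sketch in the spirit of the referenced projects, not the actual ol1 code. The prompt wording, the function names `ollama_chat` and `reasoning_chain`, the model name `llama3.1`, and the step limit are all assumptions; the request goes to Ollama's `/api/chat` endpoint on its default local port.

```python
import json
import urllib.request

# Assumption: Ollama is reachable on its default local address.
OLLAMA_URL = "http://localhost:11434/api/chat"

# Hypothetical prompt; the real ol1 prompt may differ.
SYSTEM_PROMPT = (
    "Reason step by step. Reply with one JSON object per turn, with keys "
    '"title", "content", and "next_action" ("continue" or "final_answer").'
)


def ollama_chat(messages, model="llama3.1", temperature=0.5):
    """Send one non-streaming chat request to Ollama and return the reply text."""
    payload = {
        "model": model,  # assumed model name, replace with one you have pulled
        "messages": messages,
        "stream": False,
        "format": "json",  # ask Ollama to constrain the reply to JSON
        "options": {"temperature": temperature},
    }
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]["content"]


def reasoning_chain(question, chat=ollama_chat, max_steps=10):
    """Collect reasoning steps until the model signals a final answer."""
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": question},
    ]
    steps = []
    for _ in range(max_steps):
        reply = chat(messages)
        try:
            step = json.loads(reply)
        except json.JSONDecodeError:
            step = None
        if not isinstance(step, dict):
            # Treat anything that is not a JSON object as the last step.
            step = {
                "title": "Unparsed reply",
                "content": reply,
                "next_action": "final_answer",
            }
        steps.append(step)
        # Feed the step back so the next turn can build on it.
        messages.append({"role": "assistant", "content": reply})
        if step.get("next_action") == "final_answer":
            break
    return steps
```

Passing `chat` as a parameter keeps the loop independent of the backend, so it can be exercised with a stub function instead of a running Ollama instance.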

Starting

# Start the service

harbor up ol1

# Open ol1 in the browser

harbor open ol1

Configuration

# Get/set desired Ollama model for ol1

harbor ol1 model

# Set the temperature

harbor ol1 args set temperature 0.5

Full Changelog: v0.1.23...v0.1.24