-

Notifications

You must be signed in to change notification settings - Fork 29k

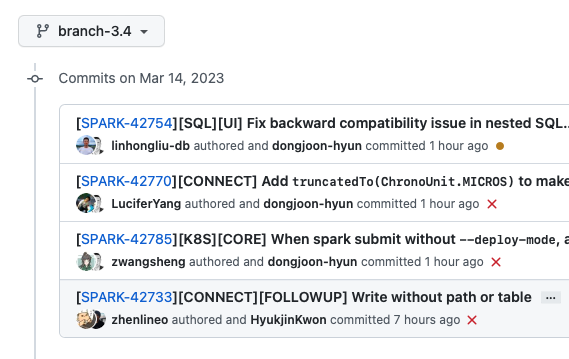

[SPARK-42733][CONNECT][Followup] Write without path or table #40358

Merged to master and branch-3.4.

Fixes `DataFrameWriter.save` to work without path or table parameter. Added support of jdbc method in the writer as it is one of the impl that does not contain a path or table. `DataFrameWriter.save` should work without path parameter because some data sources, such as jdbc and noop, work without those parameters. The follow up fix for scala client of #40356.

User-facing change: No. Tested with: Unit and E2E test.

Closes #40358 from zhenlineo/write-without-path-table.

Authored-by: Zhen Li <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>

(cherry picked from commit 93334e2)

Signed-off-by: Hyukjin Kwon <[email protected]>

    spark.range(10).write.format("noop").mode("append").save()
    }

    test("write jdbc") {

This seems to break branch-3.4 somehow. I'm checking it now.

[info] - write jdbc *** FAILED *** (527 milliseconds)

[info] io.grpc.StatusRuntimeException: INTERNAL: No suitable driver

[info] at io.grpc.Status.asRuntimeException(Status.java:535)

@dongjoon-hyun I checked branch-3.4 locally and I can run the following without error:

build/sbt -Phive -Pconnect package

build/sbt "connect-client-jvm/test"

@zhenlineo, I reproduced the error locally on branch-3.4 with the command below, while the same command works on master.

$ build/sbt -Phive -Phadoop-3 assembly/package "protobuf/test" "connect-common/test" "connect/test" "connect-client-jvm/test"

...

[info] ClientE2ETestSuite:

[info] - spark result schema (319 milliseconds)

[info] - spark result array (350 milliseconds)

[info] - eager execution of sql (18 seconds, 3 milliseconds)

[info] - simple dataset (1 second, 194 milliseconds)

[info] - SPARK-42665: Ignore simple udf test until the udf is fully implemented. !!! IGNORED !!!

[info] - read and write (1 second, 32 milliseconds)

[info] - read path collision (32 milliseconds)

[info] - write table (5 seconds, 349 milliseconds)

[info] - write without table or path (170 milliseconds)

[info] - write jdbc *** FAILED *** (325 milliseconds)

[info] io.grpc.StatusRuntimeException: INTERNAL: No suitable driver

@dongjoon-hyun Feel free to revert it in 3.4; I will take a closer look later today or tomorrow. Thanks. I can also send a PR targeting 3.4 directly, or whatever is easiest.

Thank you, @zhenlineo. Let me check more.

On my side, the commands from #40358 (comment) also failed.

$ build/sbt -Phive -Pconnect package

$ build/sbt "connect-client-jvm/test"

...

[info] ClientE2ETestSuite:

[info] - spark result schema (290 milliseconds)

[info] - spark result array (290 milliseconds)

[info] - eager execution of sql (15 seconds, 819 milliseconds)

[info] - simple dataset (1 second, 28 milliseconds)

[info] - SPARK-42665: Ignore simple udf test until the udf is fully implemented. !!! IGNORED !!!

[info] - read and write (929 milliseconds)

[info] - read path collision (31 milliseconds)

[info] - write table (4 seconds, 540 milliseconds)

[info] - write without table or path (348 milliseconds)

[info] - write jdbc *** FAILED *** (365 milliseconds)

[info] io.grpc.StatusRuntimeException: INTERNAL: No suitable driver

...

In this case, the usual suspect is Java. Both GitHub Actions CI and I are using Java 8.
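For context on the message itself: `java.sql.DriverManager.getDriver` raises an `SQLException` when no registered driver accepts the given URL, which is consistent with the `No suitable driver` text in the log above. Whether the Connect server hits exactly this call is an assumption, not something this thread confirms. The sketch below probes a URL the same way; the object name, the `probe` helper, and the `jdbc:hypothetical:` scheme are made up for illustration.

```scala
import java.sql.{DriverManager, SQLException}
import scala.util.{Failure, Success, Try}

object NoSuitableDriverDemo {
  // Returns Right(driver class name) if some registered JDBC driver accepts
  // the URL, or Left(exception message) if DriverManager finds none.
  def probe(url: String): Either[String, String] =
    Try(DriverManager.getDriver(url)) match {
      case Success(driver)          => Right(driver.getClass.getName)
      case Failure(e: SQLException) => Left(e.getMessage)
      case Failure(other)           => throw other
    }

  def main(args: Array[String]): Unit = {
    // Hypothetical URL scheme: no real driver is expected to accept it.
    val url = args.headOption.getOrElse("jdbc:hypothetical:memory:demo")
    println(probe(url))
  }
}
```

Running the same probe inside the JVM that hosts the server, with the URL used by the failing test, would show whether the driver jar is actually visible on that classpath.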

@dongjoon-hyun I saw the 3.4 build went back to green. Is bf9c4b9 the fix? Is there still a problem that I should fix?

@dongjoon-hyun Aha, I saw the fix ab7c4f8. Thanks a lot!

Yes, it's resolved for now. Thank you for checking, @zhenlineo!

What changes were proposed in this pull request?

Fixes `DataFrameWriter.save` to work without path or table parameter. Added support of jdbc method in the writer as it is one of the impl that does not contain a path or table.

Why are the changes needed?

`DataFrameWriter.save` should work without path parameter because some data sources, such as jdbc and noop, work without those parameters.

The follow up fix for scala client of #40356
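As an illustration of the two path-less writes this PR targets, here is a minimal sketch. It assumes a classic local `SparkSession` rather than the Spark Connect Scala client, so that it can run standalone with Spark on the classpath. The `noop` write is the one from this PR's test; the object name, the table name `t1`, and passing the JDBC URL as a program argument are hypothetical choices. The JDBC write only runs when a URL is supplied, and it needs a matching driver on the classpath.

```scala
import java.util.Properties
import org.apache.spark.sql.SparkSession

object WriteWithoutPathSketch {
  def main(args: Array[String]): Unit = {
    // Assumption: a classic local session stands in for the Connect client.
    val spark = SparkSession.builder()
      .master("local[1]")
      .appName("write-without-path-sketch")
      .getOrCreate()
    try {
      // noop takes neither a path nor a table, so save() has no argument.
      spark.range(10).write.format("noop").mode("append").save()

      // jdbc takes a URL and a table name instead of a path.
      // Skipped unless a JDBC URL is passed as the first argument.
      args.headOption.foreach { url =>
        spark.range(10).write.mode("append").jdbc(url, "t1", new Properties())
      }
    } finally {
      spark.stop()
    }
  }
}
```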

Does this PR introduce any user-facing change?

No

How was this patch tested?

Unit and E2E test