
[SPARK-41054][UI][CORE] Support RocksDB as KVStore in live UI #38567


+CC @shardulm94, @thejdeep


This PR mostly adds the ability to use a disk-backed store in addition to the in-memory store.

To comment on the proposal in the description, based on past prototypes I have worked on or seen: maintaining state at the driver in a disk-backed store and copying it to DFS has a few issues, particularly for larger applications. Such setups are not very robust to application crashes, interact in nontrivial ways with the shutdown hook (HDFS failures), and increase application termination time during graceful shutdown. Depending on application characteristics, a disk-backed store can affect driver performance positively or negatively, and which way it goes was difficult to predict: positively because updates are faster thanks to the index, which the in-memory store lacked (when I added an index there, memory requirements increased :-( ), and negatively because of increased disk activity.

Also note that we cannot avoid parsing event files at the history server with a DB generated at the driver unless the configs match for both (retained stages, tasks, queries, etc.). This matters because these can be specified by users (for example, to clean up aggressively for performance-sensitive apps, while we would want a more accurate picture at the SHS).
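A minimal sketch of this constraint, assuming the driver's and the history server's settings are available as plain string maps. The object `RetentionCheck` and the helper `canReuseDriverStore` are hypothetical, not Spark APIs; the keys are existing Spark retention settings. The idea is that a store written by the driver can only stand in for event-log replay when every retention limit agrees; otherwise the SHS still has to re-parse.

```scala
object RetentionCheck {
  // Existing Spark settings that bound how much UI data is retained.
  val RetentionKeys: Seq[String] = Seq(
    "spark.ui.retainedJobs",
    "spark.ui.retainedStages",
    "spark.ui.retainedTasks",
    "spark.sql.ui.retainedExecutions"
  )

  /** Hypothetical helper: true only if every retention limit agrees, so the
    * driver-written store holds what the history server would have kept.
    * An unset key on both sides compares equal (None == None), which assumes
    * both processes share the same defaults. */
  def canReuseDriverStore(driver: Map[String, String], shs: Map[String, String]): Boolean =
    RetentionKeys.forall(key => driver.get(key) == shs.get(key))

  def main(args: Array[String]): Unit = {
    val shsConf = Map(
      "spark.ui.retainedStages" -> "1000",
      "spark.ui.retainedTasks" -> "100000")
    // A performance-sensitive app that trims task data aggressively on the driver.
    val driverConf = shsConf.updated("spark.ui.retainedTasks", "1000")
    println(canReuseDriverStore(shsConf, shsConf))    // true
    println(canReuseDriverStore(driverConf, shsConf)) // false: SHS must re-parse the event log
  }
}
```

Comparing exact values is the conservative choice here; a looser rule (for example, accepting a driver that retains more than the SHS needs) would require knowing how each limit is applied.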

I believe it covers the reverted PR #38542. The previous approach is to create an optional disk store for storing a subset of the


Yes, I created Jira https://issues.apache.org/jira/browse/SPARK-41053, and this one is just the beginning.

Yes, but it is worth starting the effort. We can keep developing it until it is ready. I ran a benchmark with the Protobuf serializer, and the results are promising.

A newbie question: Does this design prefer the

If this is part of a larger effort, would it be better to make this a SPIP instead, @gengliangwang?

@mridulm Yes, I can start a SPIP since you have doubts.

@LuciferYang I don't expect the RocksDB file to be large. This is an optional solution; it won't become the default, at least for now.

@mridulm FYI I have sent the SPIP to the dev list. |

```scala
try {
  open(dbPath, metadata, conf, Some(diskBackend))
} catch {
```

For Live UI, are these two exception scenarios possible?

No. The LevelDB exception is not possible either. There are only two error-handling branches, and it seems like too much to distinguish whether the caller is the live UI or the SHS.

If no unexpected data cleanup occurs in the live UI scenario, I think it is OK.


If something goes wrong and the kv store can't be created for live ui then the entire application fails?

@tgravescs If there is an exception from RocksDB, it deletes the target directory and opens the KV store again. If the error is not from RocksDB, it falls back to the in-memory store.

It follows the same logic as in the SHS here.
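The recovery path described above can be modeled roughly as follows. This is a simplified, self-contained sketch, not Spark's code: `KVStore`, `InMemoryStore`, `DiskStore`, `StoreCorruptedException`, `LiveStoreFactory`, and the injected `openDisk` function are all hypothetical stand-ins, with `StoreCorruptedException` playing the role of the RocksDB-exception branch. Treating a failed retry as another reason to fall back to memory is an assumption of this sketch.

```scala
import java.io.File
import scala.util.control.NonFatal

// Hypothetical stand-ins for the KV store types; not Spark classes.
trait KVStore { def kind: String }
final class InMemoryStore extends KVStore { val kind = "memory" }
final class DiskStore(val dir: File) extends KVStore { val kind = "disk" }
// Plays the role of a RocksDB exception (corrupted or unreadable DB files).
final class StoreCorruptedException(msg: String) extends Exception(msg)

object LiveStoreFactory {
  private def deleteRecursively(f: File): Unit = {
    if (f.isDirectory) {
      Option(f.listFiles()).getOrElse(Array.empty[File]).foreach(deleteRecursively)
    }
    f.delete()
  }

  /** Corrupted store: wipe the directory and reopen once.
    * Any other non-fatal failure (and, by assumption here, a failed retry):
    * fall back to the in-memory store so the application keeps running. */
  def create(dbPath: File, openDisk: File => KVStore): KVStore =
    try {
      try openDisk(dbPath)
      catch {
        case _: StoreCorruptedException =>
          deleteRecursively(dbPath)
          openDisk(dbPath)
      }
    } catch {
      case NonFatal(_) => new InMemoryStore()
    }
}
```

The outer `NonFatal` guard is what answers the concern that a broken disk store could fail the whole application: in this model the worst case is losing the disk backend, not the driver.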

Sorry for the delay; I will try to review this later this week.

mridulm

left a comment


Just a minor comment; looks good to me.

core/src/main/scala/org/apache/spark/status/AppStatusStore.scala

Outdated


mridulm

left a comment


Thanks for working on this @gengliangwang !

Looking forward to the next PR in this SPIP :-)

LuciferYang

left a comment


+1, LGTM


tgravescs

left a comment


Thanks, @gengliangwang.

@mridulm @LuciferYang @tgravescs Thanks for the review.

```scala
val store = new ElementTrackingStore(new InMemoryStore(), conf)
val storePath = conf.get(LIVE_UI_LOCAL_STORE_DIR).map(new File(_))
// For the disk-based KV store of live UI, let's simply make it ROCKSDB only for now,
// instead of supporting both LevelDB and RocksDB. RocksDB is built based on LevelDB with
```
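The excerpt above replaces an unconditional `new InMemoryStore()` with a choice driven by the optional `LIVE_UI_LOCAL_STORE_DIR` entry; the PR description says the location is set via `spark.ui.store.path`. A minimal model of that decision follows. `LiveUiBackend`, `Backend`, `RocksDbAt`, and `chooseBackend` are hypothetical names, and a plain `Map` stands in for `SparkConf`.

```scala
import java.io.File

object LiveUiBackend {
  // The only name taken from the PR; the types below are a hypothetical model.
  val StorePathKey = "spark.ui.store.path"

  sealed trait Backend
  case object InMemory extends Backend
  final case class RocksDbAt(dir: File) extends Backend

  // Mirrors `conf.get(LIVE_UI_LOCAL_STORE_DIR).map(new File(_))`: an absent
  // path keeps the default in-memory store; a present one selects RocksDB,
  // with no LevelDB alternative for the live UI.
  def chooseBackend(conf: Map[String, String]): Backend =
    conf.get(StorePathKey).map(new File(_)) match {
      case Some(dir) => RocksDbAt(dir)
      case None      => InMemory
    }
}
```

For example, `chooseBackend(Map.empty)` yields `InMemory`, while a map containing `spark.ui.store.path` yields `RocksDbAt` for that directory. Hard-wiring RocksDB keeps the live-UI path to one disk backend, matching the note in the code comment.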

Not related to this PR, but I'm wondering why we provided these two choices in the first place. RocksDB alone seems sufficient.

According to the context, I thought Reynold mentioned that the legal issue was resolved. Do you mean that Apache Spark still has a legal issue with RocksDB, @gengliangwang?

@dongjoon-hyun I was explaining why @vanzin chose LevelDB. Maybe I was wrong about his reason.

> Do you mean that Apache Spark still have legal issue with RocksDB

I don't think so. If so, I wouldn't choose it as the only disk backend of live UI.


Got it. Thank you for confirming that.

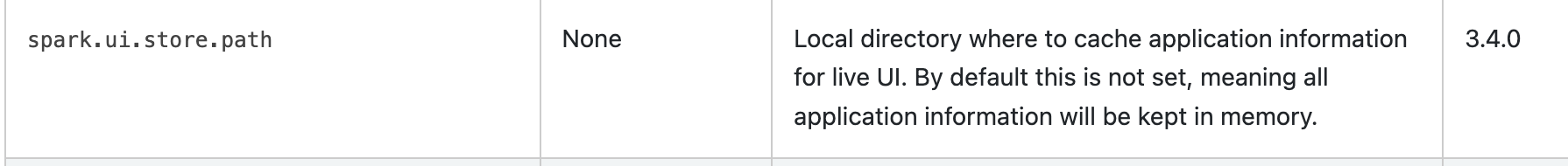

### What changes were proposed in this pull request?

Support using RocksDB as the KVStore in live UI. The location of RocksDB can be set via the configuration `spark.ui.store.path`. The configuration is optional. The default KV store will still be in memory.

Note: let's make it ROCKSDB only for now instead of supporting both LevelDB and RocksDB. RocksDB is built based on LevelDB with improvements on writes and reads. Furthermore, we can reuse the RocksDBFileManager in streaming for replicating the local RocksDB file to DFS. The replication in DFS can be used for the Spark history server.

### Why are the changes needed?

The current architecture of Spark live UI and Spark history server (SHS) is too simple to serve large clusters and heavy workloads:

- Spark stores all the live UI data in memory. The size can be a few GBs and affects the driver's stability (OOM).
- There is a limitation of storing 1000 queries only. Note that we can't simply increase the limitation under the current architecture. I did some memory profiling. Storing one query execution detail can take 800KB while storing one task requires 0.3KB. So for 1000 SQL queries with 1000 * 2000 tasks, the memory usage for query execution and task data will be 1.4GB. Spark UI stores UI data for jobs/stages/executors as well. So to store 10k queries, it may take more than 14GB.
- SHS has to parse the JSON-format event log for the initial start. The uncompressed event logs can be as big as a few GBs, and the parsing can be quite slow. Some users reported they had to wait for more than half an hour.

With RocksDB as KVStore, we can improve the stability of the Spark driver and speed up the startup of SHS.

### Does this PR introduce _any_ user-facing change?

Yes, supporting RocksDB as the KVStore in live UI. The location of RocksDB can be set via the configuration `spark.ui.store.path`. The configuration is optional. The default KV store will still be in memory.

### How was this patch tested?

New UT

Preview of the doc change: <img width="895" alt="image" src="https://user-images.githubusercontent.com/1097932/203184691-b6815990-b7b0-422b-aded-8e1771c0c167.png">

Closes apache#38567 from gengliangwang/liveUIKVStore.

Authored-by: Gengliang Wang <[email protected]>
Signed-off-by: Gengliang Wang <[email protected]>


What changes were proposed in this pull request?

Support using RocksDB as the KVStore in live UI. The location of RocksDB can be set via the configuration `spark.ui.store.path`. The configuration is optional. The default KV store will still be in memory.

Note: let's make it ROCKSDB only for now instead of supporting both LevelDB and RocksDB. RocksDB is built based on LevelDB with improvements on writes and reads. Furthermore, we can reuse the RocksDBFileManager in streaming for replicating the local RocksDB file to DFS. The replication in DFS can be used for the Spark history server.
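The optional `spark.ui.store.path` entry is set like any other Spark configuration. A minimal sketch, assuming `spark-core` from a release that includes this change is on the classpath; the object name, application name, and `/tmp/spark-ui-store` are placeholders.

```scala
import org.apache.spark.SparkConf

object LiveUiStoreConfig {
  def main(args: Array[String]): Unit = {
    // Opt in to the RocksDB-backed live UI store. The directory is only an
    // example; pick a local path the driver can write to.
    val conf = new SparkConf()
      .setAppName("live-ui-rocksdb-example")
      .set("spark.ui.store.path", "/tmp/spark-ui-store")

    // Leaving the key unset keeps the default in-memory store.
    println(conf.getOption("spark.ui.store.path"))
  }
}
```

The same key can equally be passed as `--conf spark.ui.store.path=<dir>` to `spark-submit`.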

Why are the changes needed?

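The current architecture of Spark live UI and Spark history server (SHS) is too simple to serve large clusters and heavy workloads:

- Spark stores all the live UI data in memory. The size can be a few GBs and affects the driver's stability (OOM).
- There is a limitation of storing 1000 queries only. Note that we can't simply increase the limitation under the current architecture. Storing one query execution detail can take 800KB while storing one task requires 0.3KB, so 1000 SQL queries with 1000 * 2000 tasks need 1.4GB for query execution and task data alone, and storing 10k queries may take more than 14GB.
- SHS has to parse the JSON-format event log for the initial start. The uncompressed event logs can be as big as a few GBs, and the parsing can be quite slow. Some users reported they had to wait for more than half an hour.

With RocksDB as KVStore, we can improve the stability of the Spark driver and speed up the startup of SHS.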
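As a quick check of the memory-profiling arithmetic (800KB per query execution, 0.3KB per task, with "1000 * 2000 tasks" read as 2,000 tasks per query), the estimate can be reproduced in a few lines. This is only a back-of-the-envelope model under those per-item sizes, using decimal units (1 GB = 10^6 KB); it covers query-execution and task data and ignores the jobs/stages/executors data the UI also keeps. `UiMemoryEstimate` is a hypothetical name.

```scala
object UiMemoryEstimate {
  // Per-item sizes from the memory profiling quoted in the PR (decimal KB).
  val KbPerQueryExecution = 800.0
  val KbPerTask = 0.3

  /** Query-execution plus task data only; jobs/stages/executors are extra. */
  def estimateKb(queries: Long, tasksPerQuery: Long): Double =
    queries * KbPerQueryExecution + queries * tasksPerQuery * KbPerTask

  def toGb(kb: Double): Double = kb / 1e6 // 1 GB = 10^6 KB (decimal units)

  def main(args: Array[String]): Unit = {
    for (queries <- Seq(1000L, 10000L)) {
      val gb = toGb(estimateKb(queries, tasksPerQuery = 2000))
      println(f"$queries%d queries: $gb%.1f GB")
    }
  }
}
```

For 1,000 queries this gives 800 MB + 600 MB = 1.4 GB, and ten times that (14 GB) for 10,000 queries, matching the figures above; since job, stage, and executor data come on top, the real footprint is larger.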

Does this PR introduce any user-facing change?

Yes, supporting RocksDB as the KVStore in live UI. The location of RocksDB can be set via the configuration `spark.ui.store.path`. The configuration is optional. The default KV store will still be in memory.

How was this patch tested?

New UT

Preview of the doc change: