
[SPARK-40374][SQL] Migrate type check failures of type creators onto error classes #38463


```diff
@@ -138,6 +138,11 @@
           "Unable to convert column <name> of type <type> to JSON."
         ]
       },
+      "CANNOT_DROP_ALL_FIELDS" : {
+        "message" : [
+          "Cannot drop all fields in struct."
+        ]
+      },
       "CAST_WITHOUT_SUGGESTION" : {
         "message" : [
           "cannot cast <srcType> to <targetType>."
```

@@ -155,6 +160,21 @@ | |

| "To convert values from <srcType> to <targetType>, you can use the functions <functionNames> instead." | ||

| ] | ||

| }, | ||

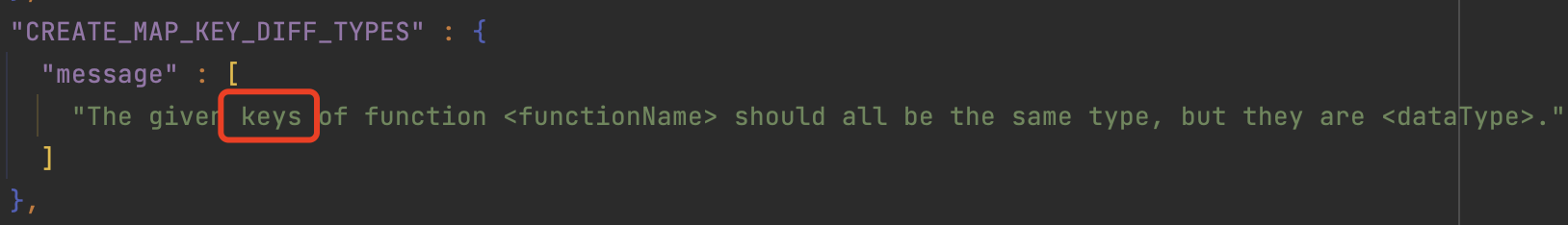

| "CREATE_MAP_KEY_DIFF_TYPES" : { | ||

| "message" : [ | ||

| "The given keys of function <functionName> should all be the same type, but they are <dataType>." | ||

| ] | ||

| }, | ||

| "CREATE_MAP_VALUE_DIFF_TYPES" : { | ||

|

Contributor

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. ditto |

||

| "message" : [ | ||

| "The given values of function <functionName> should all be the same type, but they are <dataType>." | ||

| ] | ||

| }, | ||

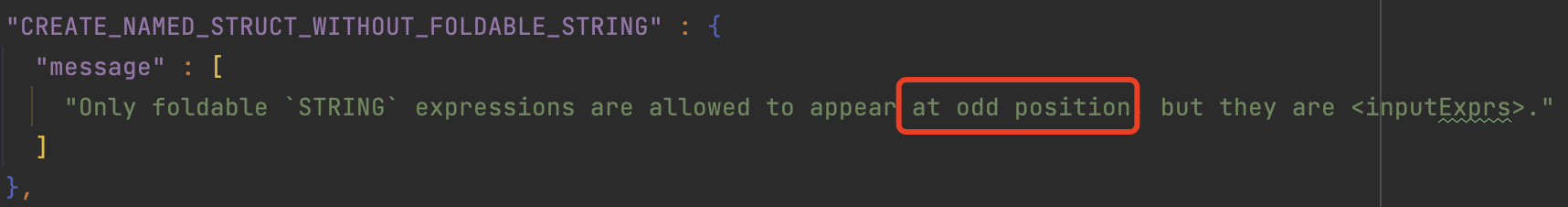

| "CREATE_NAMED_STRUCT_WITHOUT_FOLDABLE_STRING" : { | ||

| "message" : [ | ||

| "Only foldable `STRING` expressions are allowed to appear at odd position, but they are <inputExprs>." | ||

| ] | ||

| }, | ||

| "DATA_DIFF_TYPES" : { | ||

| "message" : [ | ||

| "Input to <functionName> should all be the same type, but it's <dataType>." | ||

|

|
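Each new subclass message is a template: placeholders such as `<functionName>`, `<dataType>` and `<inputExprs>` are filled from the `messageParameters` map that the Scala code builds. The sketch below illustrates that contract, assuming plain placeholder substitution. `templates`, `placeholder` and `render` are hypothetical names, and this is not Spark's own message formatter. It also renders the generic `DATA_DIFF_TYPES` template with `map's keys` passed as the function name, which is the reuse option debated in the review thread.

```scala
import scala.util.matching.Regex

// Hypothetical sketch: fill the <param> placeholders of an error-class message
// template from a messageParameters map. A simplified stand-in, not Spark's formatter.
val templates: Map[String, String] = Map(
  "CREATE_MAP_KEY_DIFF_TYPES" ->
    "The given keys of function <functionName> should all be the same type, but they are <dataType>.",
  "DATA_DIFF_TYPES" ->
    "Input to <functionName> should all be the same type, but it's <dataType>.",
  "CANNOT_DROP_ALL_FIELDS" ->
    "Cannot drop all fields in struct."
)

// Matches placeholders such as <functionName> or <dataType>.
val placeholder: Regex = "<([A-Za-z]+)>".r

def render(subClass: String, params: Map[String, String]): String =
  placeholder.replaceAllIn(templates(subClass), (m: Regex.Match) =>
    // Unknown placeholders are left untouched; quoteReplacement escapes '$' and '\'.
    Regex.quoteReplacement(params.getOrElse(m.group(1), m.matched)))

// Illustrative parameter values; the real code builds them with toSQLId/toSQLType.
val keyTypes = "[\"INT\", \"STRING\"]"
println(render("CREATE_MAP_KEY_DIFF_TYPES", Map("functionName" -> "`map`", "dataType" -> keyTypes)))
// prints: The given keys of function `map` should all be the same type, but they are ["INT", "STRING"].
println(render("DATA_DIFF_TYPES", Map("functionName" -> "map's keys", "dataType" -> keyTypes)))
// prints: Input to map's keys should all be the same type, but it's ["INT", "STRING"].
```

The second line shows why a dedicated subclass reads better for `map`: the generic template forces a pseudo function name into the `<functionName>` slot.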

```diff
@@ -22,6 +22,8 @@ import scala.collection.mutable.ArrayBuffer
 import org.apache.spark.sql.catalyst.InternalRow
 import org.apache.spark.sql.catalyst.analysis.{Resolver, TypeCheckResult, TypeCoercion, UnresolvedAttribute, UnresolvedExtractValue}
 import org.apache.spark.sql.catalyst.analysis.FunctionRegistry.{FUNC_ALIAS, FunctionBuilder}
+import org.apache.spark.sql.catalyst.analysis.TypeCheckResult.DataTypeMismatch
+import org.apache.spark.sql.catalyst.expressions.Cast._
 import org.apache.spark.sql.catalyst.expressions.codegen._
 import org.apache.spark.sql.catalyst.expressions.codegen.Block._
 import org.apache.spark.sql.catalyst.parser.CatalystSqlParser
```

```diff
@@ -202,16 +204,30 @@ case class CreateMap(children: Seq[Expression], useStringTypeWhenEmpty: Boolean)
   override def checkInputDataTypes(): TypeCheckResult = {
     if (children.size % 2 != 0) {
-      TypeCheckResult.TypeCheckFailure(
-        s"$prettyName expects a positive even number of arguments.")
+      DataTypeMismatch(
+        errorSubClass = "WRONG_NUM_ARGS",
+        messageParameters = Map(
+          "functionName" -> toSQLId(prettyName),
+          "expectedNum" -> "2n (n > 0)",
+          "actualNum" -> children.length.toString
+        )
+      )
     } else if (!TypeCoercion.haveSameType(keys.map(_.dataType))) {
-      TypeCheckResult.TypeCheckFailure(
-        "The given keys of function map should all be the same type, but they are " +
-          keys.map(_.dataType.catalogString).mkString("[", ", ", "]"))
+      DataTypeMismatch(
+        errorSubClass = "CREATE_MAP_KEY_DIFF_TYPES",
+        messageParameters = Map(
+          "functionName" -> toSQLId(prettyName),
+          "dataType" -> keys.map(key => toSQLType(key.dataType)).mkString("[", ", ", "]")
+        )
+      )
     } else if (!TypeCoercion.haveSameType(values.map(_.dataType))) {
-      TypeCheckResult.TypeCheckFailure(
-        "The given values of function map should all be the same type, but they are " +
-          values.map(_.dataType.catalogString).mkString("[", ", ", "]"))
+      DataTypeMismatch(
+        errorSubClass = "CREATE_MAP_VALUE_DIFF_TYPES",
+        messageParameters = Map(
+          "functionName" -> toSQLId(prettyName),
+          "dataType" -> values.map(value => toSQLType(value.dataType)).mkString("[", ", ", "]")
+        )
+      )
     } else {
       TypeUtils.checkForMapKeyType(dataType.keyType)
     }
```
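The rewritten `CreateMap.checkInputDataTypes` runs three checks in order and now reports a failure as a `DataTypeMismatch` subclass plus parameters rather than a preformatted string. Below is a Spark-free sketch of the same decision chain, assuming each argument is reduced to a type-name string. `Mismatch` and `checkCreateMap` are hypothetical names, and plain string equality stands in for `TypeCoercion.haveSameType`.

```scala
// Hypothetical, Spark-free model of the CreateMap checks after this PR.
// Arguments are reduced to type names; "same type" is plain string equality,
// a simplification of TypeCoercion.haveSameType.
final case class Mismatch(errorSubClass: String, messageParameters: Map[String, String])

def checkCreateMap(functionName: String, argTypes: Seq[String]): Option[Mismatch] = {
  // map(k1, v1, k2, v2, ...): keys sit at even 0-based indices, values at odd ones.
  val keys = argTypes.zipWithIndex.collect { case (t, i) if i % 2 == 0 => t }
  val values = argTypes.zipWithIndex.collect { case (t, i) if i % 2 == 1 => t }
  def asList(ts: Seq[String]): String = ts.mkString("[", ", ", "]")

  if (argTypes.size % 2 != 0) {
    Some(Mismatch("WRONG_NUM_ARGS", Map(
      "functionName" -> functionName,
      "expectedNum" -> "2n (n > 0)",
      "actualNum" -> argTypes.size.toString)))
  } else if (keys.distinct.size > 1) {
    Some(Mismatch("CREATE_MAP_KEY_DIFF_TYPES", Map(
      "functionName" -> functionName,
      "dataType" -> asList(keys))))
  } else if (values.distinct.size > 1) {
    Some(Mismatch("CREATE_MAP_VALUE_DIFF_TYPES", Map(
      "functionName" -> functionName,
      "dataType" -> asList(values))))
  } else {
    None // the real method continues with TypeUtils.checkForMapKeyType
  }
}
```

Because the checks form an `if`/`else if` chain, a call with both an odd argument count and mixed key types reports only `WRONG_NUM_ARGS`.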

```diff
@@ -444,17 +460,32 @@ case class CreateNamedStruct(children: Seq[Expression]) extends Expression with
   override def checkInputDataTypes(): TypeCheckResult = {
     if (children.size % 2 != 0) {
-      TypeCheckResult.TypeCheckFailure(s"$prettyName expects an even number of arguments.")
+      DataTypeMismatch(
+        errorSubClass = "WRONG_NUM_ARGS",
+        messageParameters = Map(
+          "functionName" -> toSQLId(prettyName),
+          "expectedNum" -> "2n (n > 0)",
+          "actualNum" -> children.length.toString
+        )
+      )
     } else {
       val invalidNames = nameExprs.filterNot(e => e.foldable && e.dataType == StringType)
       if (invalidNames.nonEmpty) {
-        TypeCheckResult.TypeCheckFailure(
-          s"Only foldable ${StringType.catalogString} expressions are allowed to appear at odd" +
-            s" position, got: ${invalidNames.mkString(",")}")
+        DataTypeMismatch(
+          errorSubClass = "CREATE_NAMED_STRUCT_WITHOUT_FOLDABLE_STRING",
```

Contributor: Can

Member: It seems that it would be hard to make `NON_FOLDABLE_INPUT` general enough to cover this case. Let's introduce a more specific error class for it.

```diff
+          messageParameters = Map(
+            "inputExprs" -> invalidNames.map(toSQLExpr(_)).mkString("[", ", ", "]")
+          )
+        )
       } else if (!names.contains(null)) {
         TypeCheckResult.TypeCheckSuccess
       } else {
-        TypeCheckResult.TypeCheckFailure("Field name should not be null")
+        DataTypeMismatch(
+          errorSubClass = "UNEXPECTED_NULL",
+          messageParameters = Map(
+            "exprName" -> nameExprs.map(toSQLExpr).mkString("[", ", ", "]")
+          )
+        )
       }
     }
   }
```
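In `named_struct(name1, val1, name2, val2, ...)` the field names occupy the 1st, 3rd, ... arguments, which is what "odd position" means in the new `CREATE_NAMED_STRUCT_WITHOUT_FOLDABLE_STRING` message. The sketch below models the three checks in `CreateNamedStruct.checkInputDataTypes`. `Arg`, `Mismatch` and `checkNamedStruct` are hypothetical stand-ins: `Arg` keeps only an expression's SQL text, foldability, type name and constant value (`None` meaning NULL), and the quoted function name is illustrative.

```scala
// Hypothetical model of the CreateNamedStruct checks after this PR.
// Arg stands in for a Catalyst expression: SQL text, foldability, type name,
// and its constant value (None = NULL).
final case class Arg(sql: String, foldable: Boolean, dataType: String, value: Option[String] = None)
final case class Mismatch(errorSubClass: String, messageParameters: Map[String, String])

def checkNamedStruct(children: Seq[Arg]): Option[Mismatch] = {
  // Names are the 1st, 3rd, ... arguments (1-based odd positions = even 0-based indices).
  val nameExprs = children.zipWithIndex.collect { case (a, i) if i % 2 == 0 => a }
  def asList(xs: Seq[String]): String = xs.mkString("[", ", ", "]")

  if (children.size % 2 != 0) {
    Some(Mismatch("WRONG_NUM_ARGS", Map(
      "functionName" -> "`named_struct`", // illustrative rendering of toSQLId(prettyName)
      "expectedNum" -> "2n (n > 0)",
      "actualNum" -> children.size.toString)))
  } else {
    // Every name must be a foldable STRING expression.
    val invalidNames = nameExprs.filterNot(e => e.foldable && e.dataType == "STRING")
    if (invalidNames.nonEmpty) {
      Some(Mismatch("CREATE_NAMED_STRUCT_WITHOUT_FOLDABLE_STRING",
        Map("inputExprs" -> asList(invalidNames.map(_.sql)))))
    } else if (nameExprs.forall(_.value.isDefined)) {
      None // all names are non-null constants
    } else {
      Some(Mismatch("UNEXPECTED_NULL", Map("exprName" -> asList(nameExprs.map(_.sql)))))
    }
  }
}
```

As in the diff, the `UNEXPECTED_NULL` branch is reached only after every name has passed the foldable-`STRING` check.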

```diff
@@ -668,10 +699,19 @@ case class UpdateFields(structExpr: Expression, fieldOps: Seq[StructFieldsOperat
   override def checkInputDataTypes(): TypeCheckResult = {
     val dataType = structExpr.dataType
     if (!dataType.isInstanceOf[StructType]) {
-      TypeCheckResult.TypeCheckFailure("struct argument should be struct type, got: " +
-        dataType.catalogString)
+      DataTypeMismatch(
+        errorSubClass = "UNEXPECTED_INPUT_TYPE",
+        messageParameters = Map(
+          "paramIndex" -> "1",
+          "requiredType" -> toSQLType(StructType),
+          "inputSql" -> toSQLExpr(structExpr),
+          "inputType" -> toSQLType(structExpr.dataType))
+      )
     } else if (newExprs.isEmpty) {
-      TypeCheckResult.TypeCheckFailure("cannot drop all fields in struct")
+      DataTypeMismatch(
```

Contributor: It seems that the original message did not clearly describe this error; the message doesn't look like

Member: It is some kind of

```diff
+        errorSubClass = "CANNOT_DROP_ALL_FIELDS",
+        messageParameters = Map.empty
+      )
     } else {
       TypeCheckResult.TypeCheckSuccess
     }
```
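`UpdateFields` now distinguishes two failures: a non-struct first argument (`UNEXPECTED_INPUT_TYPE`, parameter index 1) and a list of field operations that leaves no fields (`CANNOT_DROP_ALL_FIELDS`, no parameters). The sketch below models both checks under simplifying assumptions. `InputType`, `StructOf`, `Other`, `FieldOp`, `AddOrReplace`, `Drop`, `Mismatch` and `checkUpdateFields` are all hypothetical stand-ins rather than Spark classes, and the `requiredType` string is only an illustrative rendering.

```scala
// Hypothetical model of the UpdateFields checks after this PR.
// None of these types are Spark classes; they only mirror the decision logic.
sealed trait InputType
final case class StructOf(fieldNames: Seq[String]) extends InputType
final case class Other(sqlType: String) extends InputType

sealed trait FieldOp
final case class AddOrReplace(name: String) extends FieldOp
final case class Drop(name: String) extends FieldOp

final case class Mismatch(errorSubClass: String, messageParameters: Map[String, String])

def checkUpdateFields(inputSql: String, input: InputType, ops: Seq[FieldOp]): Option[Mismatch] =
  input match {
    case Other(sqlType) =>
      // The struct argument (parameter 1) has the wrong type.
      Some(Mismatch("UNEXPECTED_INPUT_TYPE", Map(
        "paramIndex" -> "1",
        "requiredType" -> "STRUCT", // illustrative rendering only
        "inputSql" -> inputSql,
        "inputType" -> sqlType)))
    case StructOf(fields) =>
      // Apply the operations in order to obtain the resulting field names.
      val remaining = ops.foldLeft(fields) {
        case (acc, AddOrReplace(n)) => if (acc.contains(n)) acc else acc :+ n
        case (acc, Drop(n))         => acc.filterNot(_ == n)
      }
      if (remaining.isEmpty) Some(Mismatch("CANNOT_DROP_ALL_FIELDS", Map.empty)) else None
  }
```

Since `CANNOT_DROP_ALL_FIELDS` carries no parameters, its message is fixed text, which matches the empty `messageParameters` in the diff.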

Could we make `DATA_DIFF_TYPES` more general and reuse it?

But it is very hard to reuse it. We could pass `map's keys` or `map's values` as `functionName`, but that seems unreasonable.