[SPARK-39399] [CORE] [K8S]: Fix proxy-user authentication for Spark on k8s in cluster deploy mode #37880

Can one of the admins verify this patch?

ping @yaooqinn

+CC @squito, @HyukjinKwon

9f96cf1 to 8825d93

…n k8s in cluster deploy mode

8825d93 to 00cee50

+CC @Ngone51, @HyukjinKwon

ping @holdenk

Gentle ping @holdenk @dongjoon-hyun @Ngone51, @HyukjinKwon

holdenk left a comment

LGTM

@holdenk Thanks for approving the PR. Can you please merge this PR or tag someone who can do it?

…8s in cluster deploy mode

### What changes were proposed in this pull request?

The PR fixes the authentication failure of the proxy user on driver side while accessing kerberized hdfs through spark on k8s job. It follows the similar approach as it was done for Mesos: d2iq-archive#26

### Why are the changes needed?

When we try to access the kerberized HDFS through a proxy user in Spark Job running in cluster deploy mode with Kubernetes resource manager, we encounter AccessControlException. This is because authentication in driver is done using tokens of the proxy user and since proxy user doesn't have any delegation tokens on driver, auth fails.

Further details:

https://issues.apache.org/jira/browse/SPARK-25355?focusedCommentId=17532063&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-17532063

https://issues.apache.org/jira/browse/SPARK-25355?focusedCommentId=17532135&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-17532135

### Does this PR introduce _any_ user-facing change?

Yes, user will now be able to use proxy-user to access kerberized hdfs with Spark on K8s.

### How was this patch tested?

The patch was tested by:

1. Running job which accesses kerberized hdfs with proxy user in cluster mode and client mode with kubernetes resource manager.
2. Running job which accesses kerberized hdfs without proxy user in cluster mode and client mode with kubernetes resource manager.
3. Build and run test github action : https://github.com/shrprasa/spark/actions/runs/3051203625

Closes #37880 from shrprasa/proxy_user_fix.

Authored-by: Shrikant Prasad <[email protected]>
Signed-off-by: Kent Yao <[email protected]>
(cherry picked from commit b3b3557)
Signed-off-by: Kent Yao <[email protected]>

Thanks @yaooqinn for merging the PR.

Thank you, @shrprasa and all!

cc @kazuyukitanimura, too

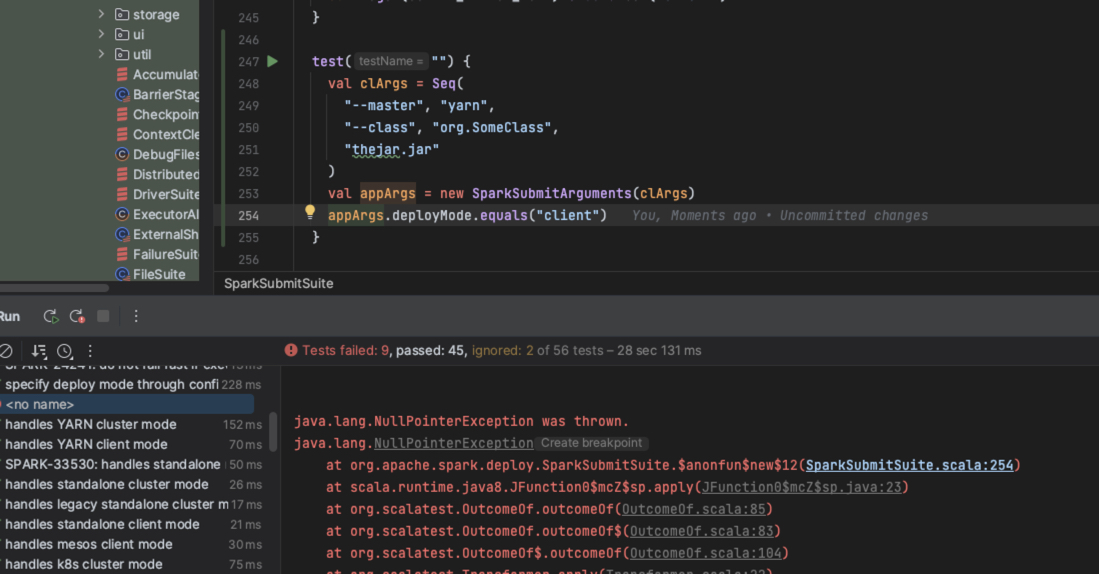

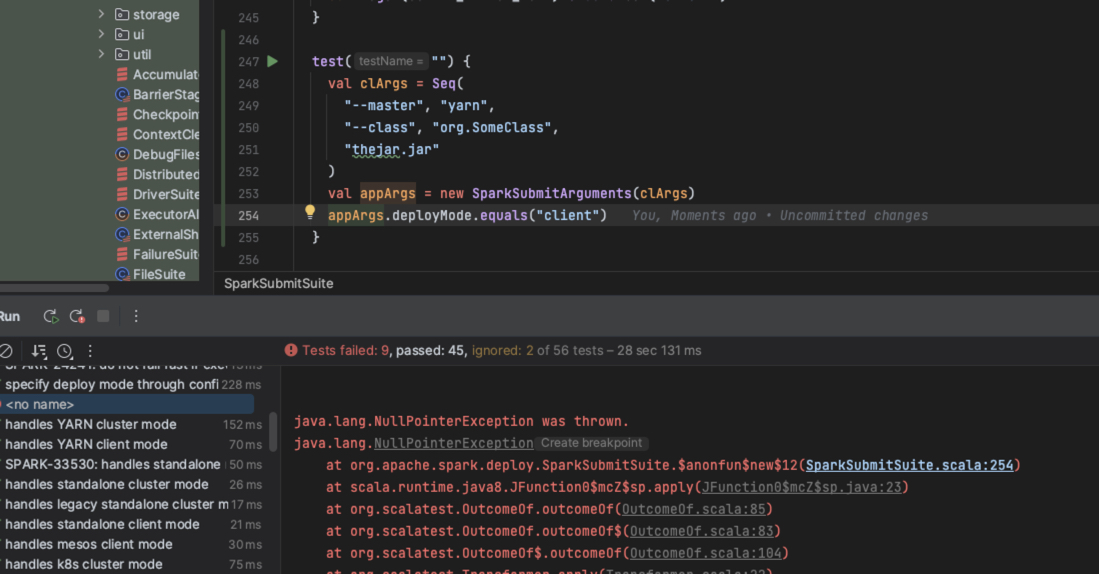

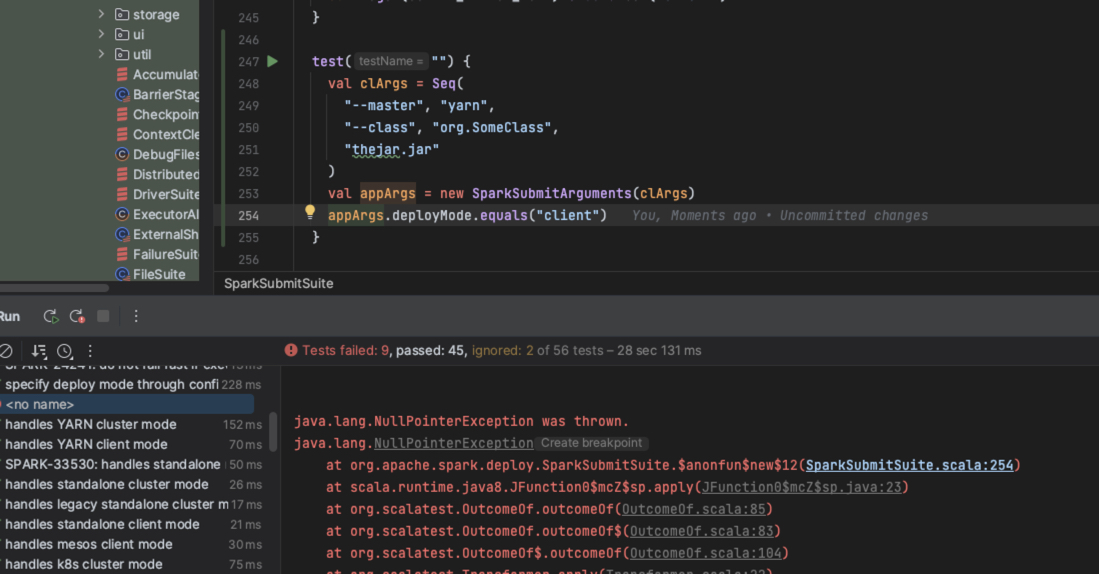

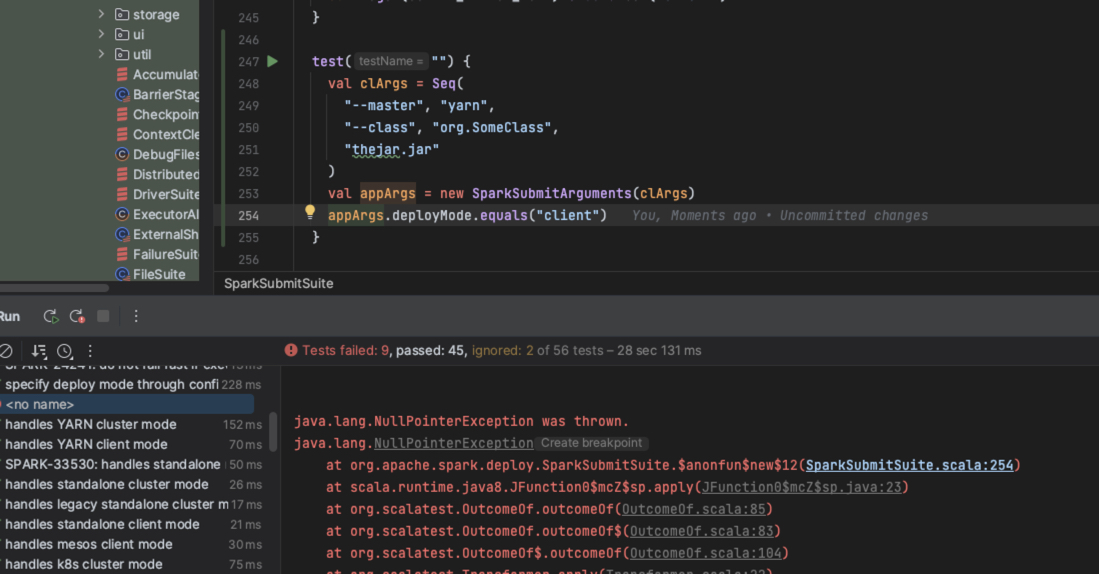

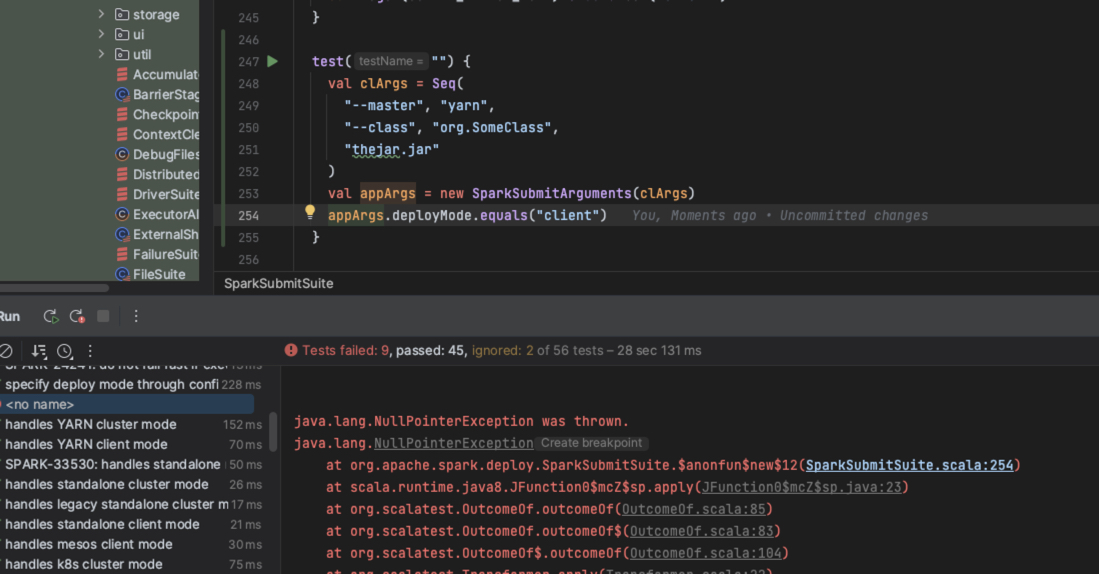

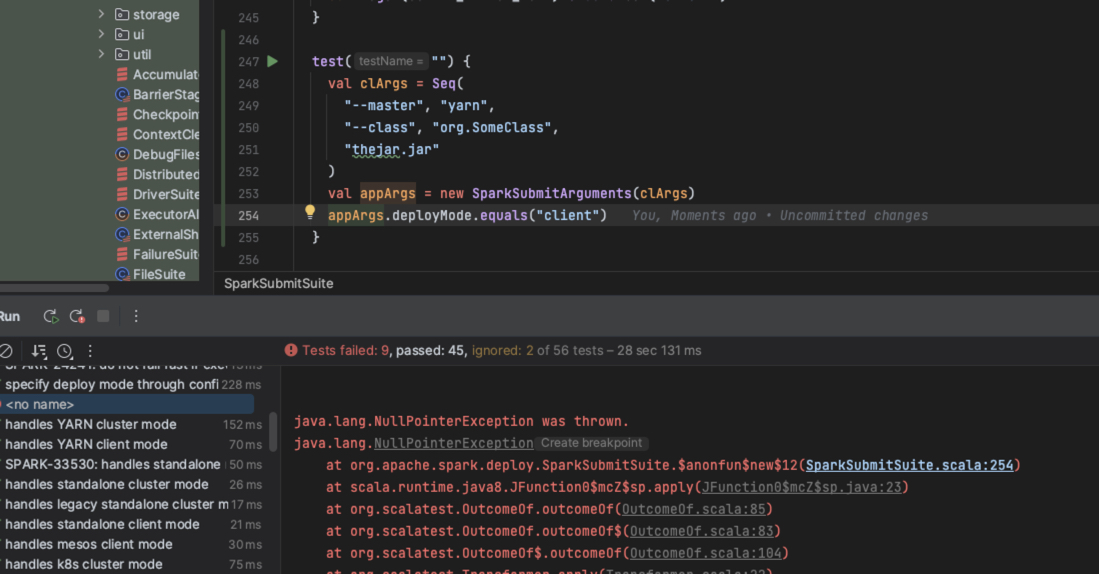

…void facing NPE in Kubernetes Case

### What changes were proposed in this pull request?

After #37880 when user spark submit without `--deploy-mode XXX` or `--conf spark.submit.deployMode=XXXX`, may face NPE with this code.

### Why are the changes needed?

https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala#164

```scala
args.deployMode.equals("client") &&
```

Of course, submit without `deployMode` is not allowed and will throw an exception and terminate the application, but we should leave it to the later logic to give the appropriate hint instead of giving a NPE.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Closes #40414 from zwangsheng/SPARK-42785.

Authored-by: zwangsheng <[email protected]>
Signed-off-by: Dongjoon Hyun <[email protected]>
(cherry picked from commit 767253b)
Signed-off-by: Dongjoon Hyun <[email protected]>
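The NPE described in this commit message comes from calling `equals` on a `deployMode` that is still `null`. The sketch below illustrates that failure and two common null-safe alternatives. It is a minimal illustration, not the code merged in #40414: `DeployModeCheck`, `Args`, and the three helper methods are hypothetical names, and `Args` is only a stand-in for the parsed submit arguments.

```scala
object DeployModeCheck {
  // Hypothetical stand-in for the parsed spark-submit arguments.
  // deployMode is assumed to stay null when the user passes no deploy mode.
  final case class Args(master: String, deployMode: String)

  // Same shape as the expression quoted above: the possibly-null field is the
  // receiver, so a null deployMode throws NullPointerException.
  def unsafeIsClient(args: Args): Boolean =
    args.deployMode.equals("client")

  // Null-safe variant: the string literal is the receiver, so a null
  // deployMode simply yields false.
  def safeIsClient(args: Args): Boolean =
    "client".equals(args.deployMode)

  // Equivalent check using Option, which wraps null as None.
  def optionIsClient(args: Args): Boolean =
    Option(args.deployMode).contains("client")
}
```

With either null-safe form, a missing deploy mode evaluates to `false` here, which leaves it to later validation to report the missing setting with a more helpful message.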

### What changes were proposed in this pull request?

This PR fixes the proxy-user authentication failure that occurs on the driver side when a Spark on K8s job accesses Kerberized HDFS. It follows an approach similar to the one used for Mesos: d2iq-archive#26

### Why are the changes needed?

When a Spark job running in cluster deploy mode with the Kubernetes resource manager tries to access Kerberized HDFS through a proxy user, it fails with an AccessControlException. The driver authenticates using the proxy user's tokens, and because the proxy user has no delegation tokens on the driver, authentication fails.

Further details:

https://issues.apache.org/jira/browse/SPARK-25355?focusedCommentId=17532063&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-17532063

https://issues.apache.org/jira/browse/SPARK-25355?focusedCommentId=17532135&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-17532135

### Does this PR introduce _any_ user-facing change?

Yes. Users can now use proxy-user to access Kerberized HDFS with Spark on K8s.

### How was this patch tested?

The patch was tested by:

1. Running a job that accesses Kerberized HDFS with a proxy user, in both cluster mode and client mode, with the Kubernetes resource manager.
2. Running a job that accesses Kerberized HDFS without a proxy user, in both cluster mode and client mode, with the Kubernetes resource manager.
3. Building and running the test GitHub Action: https://github.com/shrprasa/spark/actions/runs/3051203625