[SPARK-33933][SQL][3.0][test-maven] Materialize BroadcastQueryStage first to avoid broadcast timeout in AQE #31084

Conversation

The failure is relevant to AQE. Could you take a look at it, @zhongyu09 ? |

I will have a look. |

Thanks! |

ok to test |

Kubernetes integration test starting |

Kubernetes integration test status success |

Hi @dongjoon-hyun |

Test build #133820 has finished for PR 31084 at commit

Got it. I'm not sure about removing UTs. BTW, Jenkins passed at least. WDYT, @cloud-fan and @HyukjinKwon ? |

Retest this please |

Kubernetes integration test starting |

Kubernetes integration test status failure |

Test build #133857 has finished for PR 31084 at commit

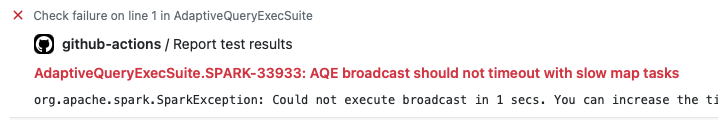

The same test case failed in Jenkins Maven environment again. (https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/133857/testReport) |

I'm wondering if this test is stable in |

Yes, also not stable in |

A partial fix is fine but let's make sure to mention/document what cases the partial fix does not cover. |

I reverted it for now for RC preparation. Let's make a PR that clarifies which cases it doesn't cover, and why this is a partial fix. |

For a partial fix, it is difficult to provide a stable UT. I would rather provide a stable fix. I see two directions:

I prefer #1: it behaves more like non-AQE, it matches this PR's original intention, and it has less impact on non-AQE. |

This PR is the same as #30998, to be merged into branch 3.0.

What changes were proposed in this pull request?

In AdaptiveSparkPlanExec.getFinalPhysicalPlan, when newStages are generated, sort the new stages by class type to make sure BroadcastQueryStage precedes the others.

This ensures that broadcast jobs are submitted before map jobs, so they do not wait for job scheduling and hit the broadcast timeout.
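The reordering described above can be sketched as follows. This is a minimal Python model, not the actual Scala change in AdaptiveSparkPlanExec: the classes `QueryStage`, `ShuffleQueryStage`, and `BroadcastQueryStage` below are hypothetical stand-ins that only carry an id, and `order_for_materialization` is an illustrative name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryStage:
    """Hypothetical stand-in for a query stage; only the id matters here."""
    stage_id: int


class ShuffleQueryStage(QueryStage):
    """Stand-in for a stage whose materialization submits a map job."""


class BroadcastQueryStage(QueryStage):
    """Stand-in for a stage whose materialization submits a broadcast job."""


def order_for_materialization(stages):
    """Return the stages with broadcast stages first.

    sorted() is stable, so stages of the same kind keep their
    original relative order.
    """
    return sorted(
        stages,
        key=lambda s: 0 if isinstance(s, BroadcastQueryStage) else 1,
    )


# Stages in the order a bottom-up traversal might have produced them.
new_stages = [ShuffleQueryStage(0), BroadcastQueryStage(1), ShuffleQueryStage(2)]
ordered = order_for_materialization(new_stages)
print([(type(s).__name__, s.stage_id) for s in ordered])
```

Using a stable sort keyed only on the stage kind moves broadcast stages to the front without otherwise disturbing the order in which the stages were created.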

Why are the changes needed?

When AQE is enabled, in getFinalPhysicalPlan, Spark traverses the physical plan bottom-up, creates query stages for the materialized parts via createQueryStages, and materializes the newly created query stages to submit map stages or broadcasts. When a ShuffleQueryStage is materialized before a BroadcastQueryStage, the map job and the broadcast job are submitted at almost the same time, but the map job holds all the computing resources. If the map job runs slowly (when there is a lot of data to process and resources are limited), the broadcast job cannot be started (and finished) before spark.sql.broadcastTimeout, which causes the whole job to fail (introduced in SPARK-31475).
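To make the contention concrete, here is a toy calculation. It assumes a strictly first-in-first-out scheduler with a fixed number of slots, equal-length map tasks, and a single-task broadcast job. These are simplifications for illustration, not a description of Spark's real scheduler, and all numbers (slot count, task counts, durations, the 300-second timeout) are made up for the example.

```python
def broadcast_finish_time(submit_order, slots, map_tasks, map_task_secs, broadcast_secs):
    """Time at which the broadcast job finishes in a toy FIFO scheduler.

    Assumptions (not Spark's real scheduler): all jobs are submitted at
    time 0, tasks are handed to free slots strictly in submission order,
    every map task takes the same time, and the broadcast job is one task.
    """
    tasks = []
    for job in submit_order:
        if job == "map":
            tasks.extend([("map", map_task_secs)] * map_tasks)
        else:
            tasks.append(("broadcast", broadcast_secs))

    free_at = [0.0] * slots  # time at which each slot next becomes free
    for kind, secs in tasks:
        i = free_at.index(min(free_at))  # pick the earliest-free slot
        free_at[i] += secs               # the task runs there until this time
        if kind == "broadcast":
            return free_at[i]
    return None  # no broadcast job was submitted


timeout_secs = 300  # illustrative timeout value
params = dict(slots=4, map_tasks=40, map_task_secs=60, broadcast_secs=5)

map_first = broadcast_finish_time(["map", "broadcast"], **params)
broadcast_first = broadcast_finish_time(["broadcast", "map"], **params)

print("map first:      ", map_first, "timed out:", map_first > timeout_secs)
print("broadcast first:", broadcast_first, "timed out:", broadcast_first > timeout_secs)
```

In this model, 40 map tasks on 4 slots take 10 waves of 60 seconds, so a broadcast queued behind them finishes at 605 seconds and exceeds the timeout even though it needs only 5 seconds of work. Submitted first, it finishes at 5 seconds.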

The workaround of increasing spark.sql.broadcastTimeout is neither sensible nor graceful, because the data to broadcast is very small.

Does this PR introduce any user-facing change?

No

How was this patch tested?