[SPARK-34041][PYTHON][DOCS] Miscellaneous cleanup for new PySpark documentation #31082

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

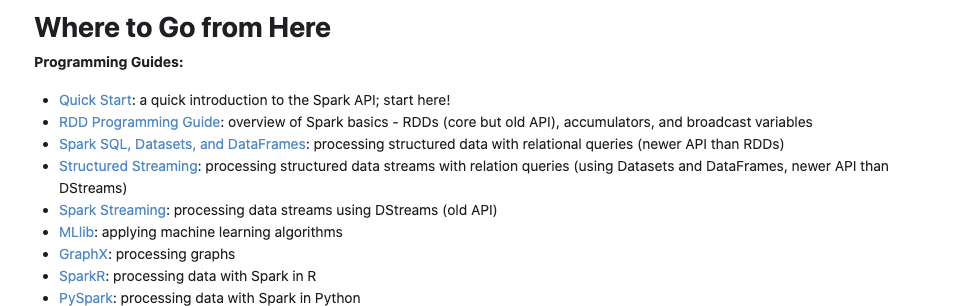

@@ -113,6 +113,8 @@ options for deployment: | |

| * [Spark Streaming](streaming-programming-guide.html): processing data streams using DStreams (old API) | ||

| * [MLlib](ml-guide.html): applying machine learning algorithms | ||

| * [GraphX](graphx-programming-guide.html): processing graphs | ||

| * [SparkR](sparkr.html): processing data with Spark in R | ||

| * [PySpark](api/python/getting_started/index.html): processing data with Spark in Python | ||

|


||

|

|

||

| **API Docs:** | ||

|

|

||

|

|

||

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -21,6 +21,9 @@ Getting Started | |

| =============== | ||

|

|

||

| This page summarizes the basic steps required to setup and get started with PySpark. | ||

| There are more guides shared with other languages such as | ||

| `Quick Start <http://spark.apache.org/docs/latest/quick-start.html>`_ in Programming Guides | ||

|

Member

Does this need to be an absolute path? Can't we use a relative path?

Member

Author

Yeah ... I tried hard to find a good way but failed. The link target is outside the PySpark documentation build, so it can't be resolved when the PySpark documentation builds.

||

| at `the Spark documentation <http://spark.apache.org/docs/latest/index.html#where-to-go-from-here>`_. | ||

|


||

|

|

||

| .. toctree:: | ||

| :maxdepth: 2 | ||

|

|

||

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -21,8 +21,6 @@ Migration Guide | |

| =============== | ||

|

|

||

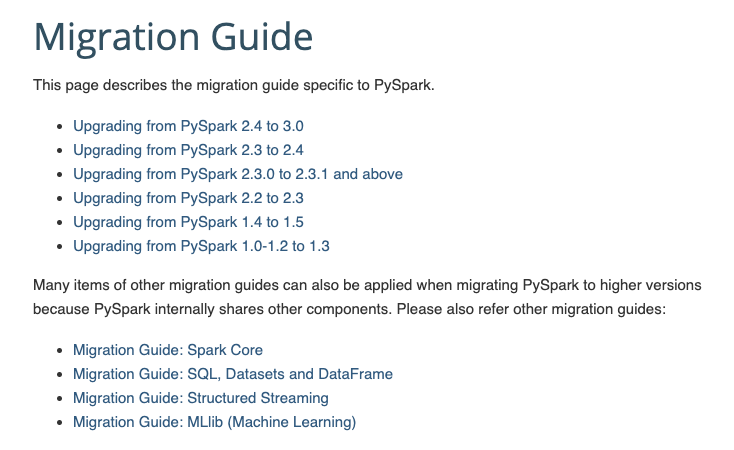

| This page describes the migration guide specific to PySpark. | ||

| Many items of other migration guides can also be applied when migrating PySpark to higher versions because PySpark internally shares other components. | ||

| Please also refer other migration guides such as `Migration Guide: SQL, Datasets and DataFrame <http://spark.apache.org/docs/latest/sql-migration-guide.html>`_. | ||

|

|

||

| .. toctree:: | ||

| :maxdepth: 2 | ||

|

|

@@ -33,3 +31,13 @@ Please also refer other migration guides such as `Migration Guide: SQL, Datasets | |

| pyspark_2.2_to_2.3 | ||

| pyspark_1.4_to_1.5 | ||

| pyspark_1.0_1.2_to_1.3 | ||

|

|

||

|

|

||

| Many items of other migration guides can also be applied when migrating PySpark to higher versions because PySpark internally shares other components. | ||

| Please also refer other migration guides: | ||

|

|

||

| - `Migration Guide: Spark Core <http://spark.apache.org/docs/latest/core-migration-guide.html>`_ | ||

| - `Migration Guide: SQL, Datasets and DataFrame <http://spark.apache.org/docs/latest/sql-migration-guide.html>`_ | ||

| - `Migration Guide: Structured Streaming <http://spark.apache.org/docs/latest/ss-migration-guide.html>`_ | ||

| - `Migration Guide: MLlib (Machine Learning) <http://spark.apache.org/docs/latest/ml-migration-guide.html>`_ | ||

|

|

||

|


||

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -16,11 +16,11 @@ | |

| under the License. | ||

|

|

||

|

|

||

| ML | ||

| == | ||

| MLlib (DataFrame-based) | ||

|

Member

I have mixed feelings about it. I am aware that MLlib is the official name applied to both, but the majority of users I have interacted with prefer to use ML when speaking about

Member

Author

This one is actually feedback from @mengxr, who has made a lot of major contributions to ML :-). I think this name was picked based on what we documented at https://spark.apache.org/docs/latest/ml-guide.html, which I think makes sense:

|

||

| ======================= | ||

|

|

||

| ML Pipeline APIs | ||

| ---------------- | ||

| Pipeline APIs | ||

| ------------- | ||

|

|

||

| .. currentmodule:: pyspark.ml | ||

|

|

||

|

|

@@ -188,8 +188,8 @@ Clustering | |

| PowerIterationClustering | ||

|

|

||

|

|

||

| ML Functions | ||

| ---------------------------- | ||

| Functions | ||

| --------- | ||

|

|

||

| .. currentmodule:: pyspark.ml.functions | ||

|

|

||

|

|

||

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -16,8 +16,8 @@ | |

| under the License. | ||

|

|

||

|

|

||

| MLlib | ||

| ===== | ||

| MLlib (RDD-based) | ||

| ================= | ||

|

|

||

| Classification | ||

| -------------- | ||

|

|

||

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -20,9 +20,21 @@ | |

| User Guide | ||

| ========== | ||

|

|

||

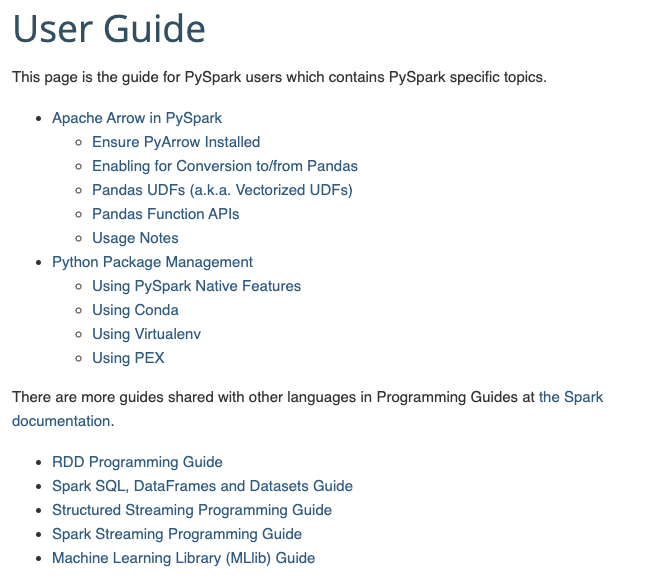

| This page is the guide for PySpark users which contains PySpark specific topics. | ||

|

|

||

| .. toctree:: | ||

| :maxdepth: 2 | ||

|

|

||

| arrow_pandas | ||

| python_packaging | ||

|

|

||

|

|

||

| There are more guides shared with other languages in Programming Guides | ||

| at `the Spark documentation <http://spark.apache.org/docs/latest/index.html#where-to-go-from-here>`_. | ||

|

|

||

| - `RDD Programming Guide <http://spark.apache.org/docs/latest/rdd-programming-guide.html>`_ | ||

| - `Spark SQL, DataFrames and Datasets Guide <http://spark.apache.org/docs/latest/sql-programming-guide.html>`_ | ||

| - `Structured Streaming Programming Guide <http://spark.apache.org/docs/latest/structured-streaming-programming-guide.html>`_ | ||

| - `Spark Streaming Programming Guide <http://spark.apache.org/docs/latest/streaming-programming-guide.html>`_ | ||

| - `Machine Learning Library (MLlib) Guide <http://spark.apache.org/docs/latest/ml-guide.html>`_ | ||

|

|

||

|
