-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-32991][SQL] Use conf in shared state as the original configuraion for RESET #30045

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

Test build #129792 has finished for PR 30045 at commit

|

|

cc @cloud-fan @maropu @gatorsmile thanks~ |

|

Test build #129815 has finished for PR 30045 at commit

|

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

Test build #129825 has finished for PR 30045 at commit

|

|

cc @hvanhovell too |

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

sql/core/src/main/scala/org/apache/spark/sql/execution/command/SetCommand.scala

Show resolved

Hide resolved

sql/core/src/test/scala/org/apache/spark/sql/SparkSessionBuilderSuite.scala

Outdated

Show resolved

Hide resolved

|

Test build #129883 has finished for PR 30045 at commit

|

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

Test build #129900 has finished for PR 30045 at commit

|

|

From a cursory look, I think this is correct. But probably it's best to have @hvanhovell's look. |

| // When neither spark.sql.warehouse.dir nor hive.metastore.warehouse.dir is set | ||

| // we will set hive.metastore.warehouse.dir to the default value of spark.sql.warehouse.dir. | ||

| val sparkWarehouseDir = sparkConf.get(WAREHOUSE_PATH) | ||

| val sparkWarehouseDir = sparkWarehouse.getOrElse(sparkConf.get(WAREHOUSE_PATH)) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

sparkWarehouse already has .orElse(sparkConf.getOption(WAREHOUSE_PATH.key)). Do you mean sparkWarehouse.getOrElse(WAREHOUSE_PATH.defaultValueString)?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, here will finally touch the default value string if never matched before

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

can we change to sparkWarehouse.getOrElse(WAREHOUSE_PATH.defaultValueString) so that it's more explicit?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

SGTM

| val metastorePath = Utils.createTempDir() | ||

| val tmpDb = "tmp_db" | ||

|

|

||

| // The initial configs used to generate SharedState, none of these should affect the global |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this is wrong now, as warehouse conf is an exception.

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

Test build #130150 has finished for PR 30045 at commit

|

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

Test build #130166 has finished for PR 30045 at commit

|

|

retest this please |

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

GA passed, merging to master, thanks! |

|

Test build #130184 has finished for PR 30045 at commit

|

|

@cloud-fan @yaooqinn I have a question about the semantics. We currently reset to the initial state of the first session. You can have many different sessions (e.g. in thrift server) with different initial settings, IMO it would be more sane to reset to the initial state of the current session. WDYT? |

|

^^ makes sense to me. |

|

makes sense. @yaooqinn what do you think? |

|

make sense to me too, I will raise a followup later. |

I did some research: w/o calling Let's assume the initial configs we talk about here is those going with the instantiating process of a SparkSession instance, not those being the first-time set

So do we have a way to actually create a new session with initial configs when there is an existing active one? The answer is NO. This situation only happens when these 2 APIs called, but the current approach actually meets our goal here. We do keep the session configs per session in such a use case. The actual problem here that revealed in the following case is that the GLOBAL SharedState is not being shared after those clear-like APIs being called bin/spark-shell \

--conf spark.sql.warehouse.dir=./warehouse \

--conf spark.sql.globalTempDatabase=mytemp \

--conf spark.sql.custom=abcscala> org.apache.spark.sql.SparkSession.clearDefaultSession()

scala> org.apache.spark.sql.SparkSession.clearActiveSession()

scala> val nes = org.apache.spark.sql.SparkSession.builder.config("spark.sql.warehouse.dir", "w2").config("spark.sql.globalTempDatabase", "m2").config("spark.sql.custom", "xyz").getOrCreate

20/12/07 00:59:35 WARN SparkContext: Using an existing SparkContext; some configuration may not take effect.

nes: org.apache.spark.sql.SparkSession = org.apache.spark.sql.SparkSession@175f1ff5

scala> nes.conf.get("spark.sql.warehouse.dir")

20/12/07 01:00:06 WARN SharedState: Not allowing to set spark.sql.warehouse.dir or hive.metastore.warehouse.dir in SparkSession's options, it should be set statically for cross-session usages

res2: String = w2

scala> nes.conf.get("spark.sql.globalTempDatabase")

res3: String = m2

scala> nes.conf.get("spark.sql.custom")

res4: String = xyz

scala> nes.sql("reset")

res5: org.apache.spark.sql.DataFrame = []

scala> nes.conf.get("spark.sql.globalTempDatabase")

res6: String = m2

scala> nes.conf.get("spark.sql.custom")

res7: String = xyz |

|

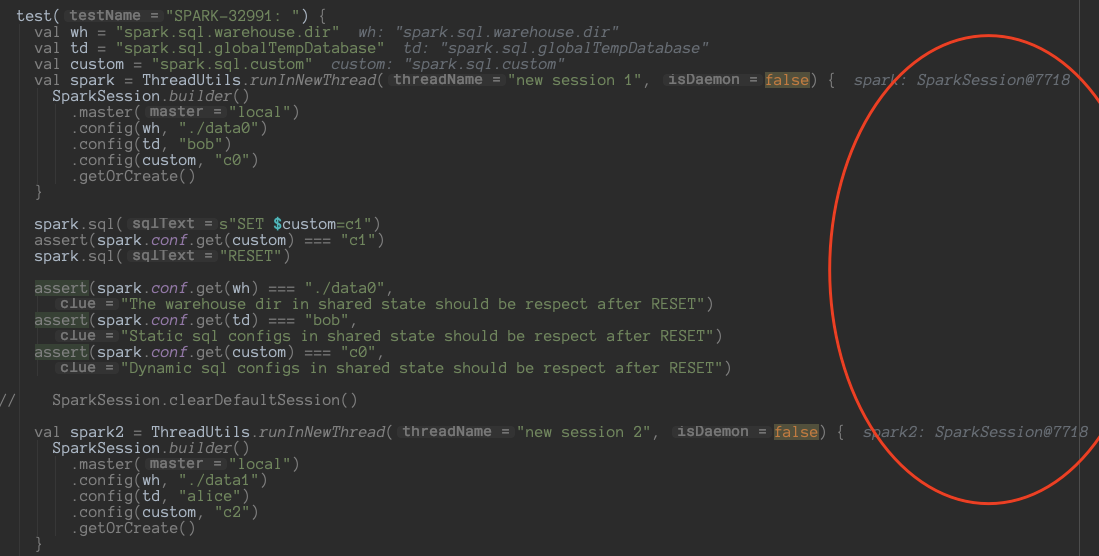

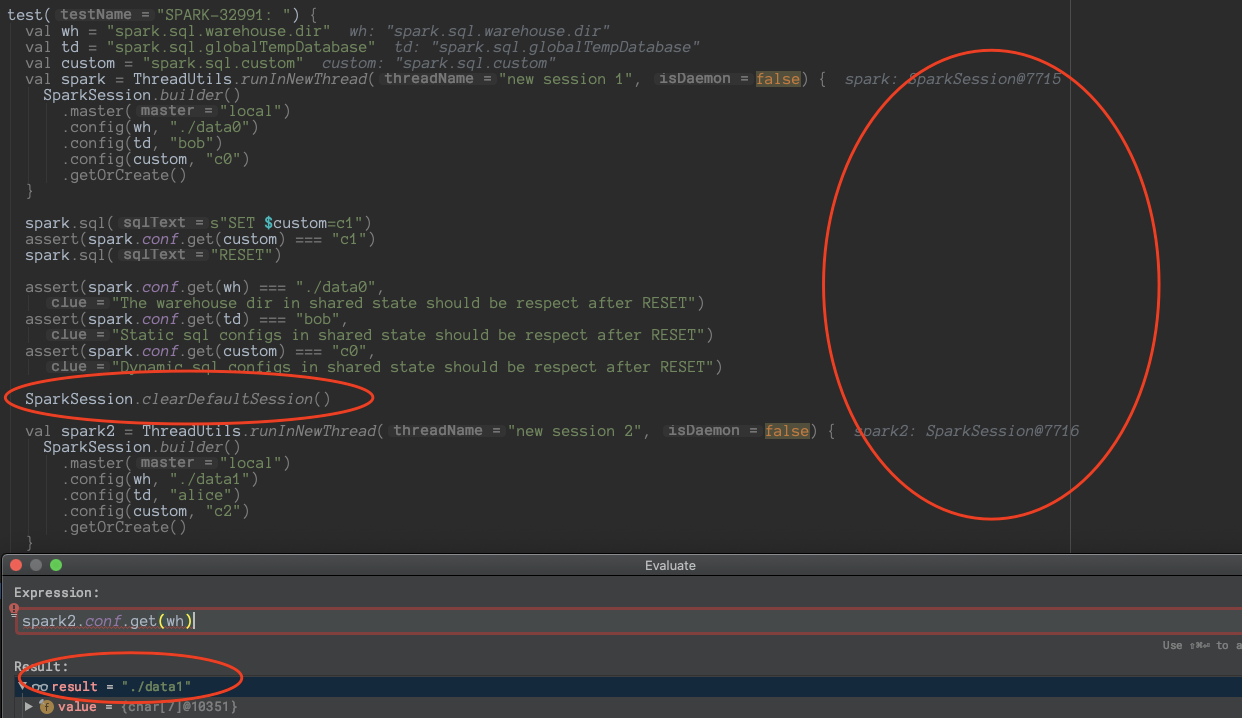

@yaooqinn active session is a thread local. What if we create a new session in another thread? |

w/o w/ |

|

I think you are right about reality. But it seems safer to handle the per-session initial configs as it's a property of the |

|

I have created #30642 to fix this |

…s first ### What changes were proposed in this pull request? As a follow-up of #30045, we modify the RESET command here to respect the session initial configs per session first then fall back to the `SharedState` conf, which makes each session could maintain a different copy of initial configs for resetting. ### Why are the changes needed? to make reset command saner. ### Does this PR introduce _any_ user-facing change? yes, RESET will respect session initials first not always go to the system defaults ### How was this patch tested? add new tests Closes #30642 from yaooqinn/SPARK-32991-F. Authored-by: Kent Yao <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

…s first ### What changes were proposed in this pull request? As a follow-up of #30045, we modify the RESET command here to respect the session initial configs per session first then fall back to the `SharedState` conf, which makes each session could maintain a different copy of initial configs for resetting. ### Why are the changes needed? to make reset command saner. ### Does this PR introduce _any_ user-facing change? yes, RESET will respect session initials first not always go to the system defaults ### How was this patch tested? add new tests Closes #30642 from yaooqinn/SPARK-32991-F. Authored-by: Kent Yao <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit 205d8e4) Signed-off-by: Wenchen Fan <[email protected]>

What changes were proposed in this pull request?

case

the case here covers the static and dynamic SQL configs behavior in

sharedStateandsessionState, and the specially handled configspark.sql.warehouse.dirthe case can be found here - https://github.com/yaooqinn/sugar/blob/master/src/main/scala/com/netease/mammut/spark/training/sql/WarehouseSCBeforeSS.scala

outputs and analysis

In this PR, we gather all valid config to the cloned conf of

sharedStateduring being constructed, well, actually onlyspark.sql.warehouse.diris missing. Then we use this conf as defaults forRESETCommand.SparkSession.clearActiveSession/clearDefaultSessionwill make the shared state invisible and unsharable. They will be internal only soon (confirmed with Wenchen), so cases with them called will not be a problem.Why are the changes needed?

bugfix for programming API to call RESET while users creating SparkContext first and config SparkSession later.

Does this PR introduce any user-facing change?

yes, before this change when you use programming API and call RESET, all configs will be reset to SparkContext.conf, now they go to SparkSession.sharedState.conf

How was this patch tested?

new tests