[SPARK-32501][SQL] Convert null to "null" in structs, maps and arrays while casting to strings #29311


Test build #126804 has finished for PR 29311 at commit

@cloud-fan @HyukjinKwon @maropu Please take a look at this.

Looks like a reasonable change. Actually, Hive does so;

Test build #126989 has finished for PR 29311 at commit

Test build #126992 has finished for PR 29311 at commit

Test build #127047 has finished for PR 29311 at commit

…-to-string # Conflicts: # docs/sql-migration-guide.md # sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/Cast.scala # sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala # sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/CastSuite.scala

Test build #127064 has finished for PR 29311 at commit

@cloud-fan @maropu @HyukjinKwon Please review this PR.

docs/sql-migration-guide.md

Outdated

- In Spark 3.1, structs and maps are wrapped by the `{}` brackets in casting them to strings. For instance, the `show()` action and the `CAST` expression use such brackets. In Spark 3.0 and earlier, the `[]` brackets are used for the same purpose. To restore the behavior before Spark 3.1, you can set `spark.sql.legacy.castComplexTypesToString.enabled` to `true`.

- In Spark 3.1, `CAST` converts NULL elements of structures, arrays and maps to "null". In Spark 3.0 or earlier, NULL elements are converted to empty strings. To restore the behavior before Spark 3.1, you can set `spark.sql.legacy.castComplexTypesToString.enabled` to `true`.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

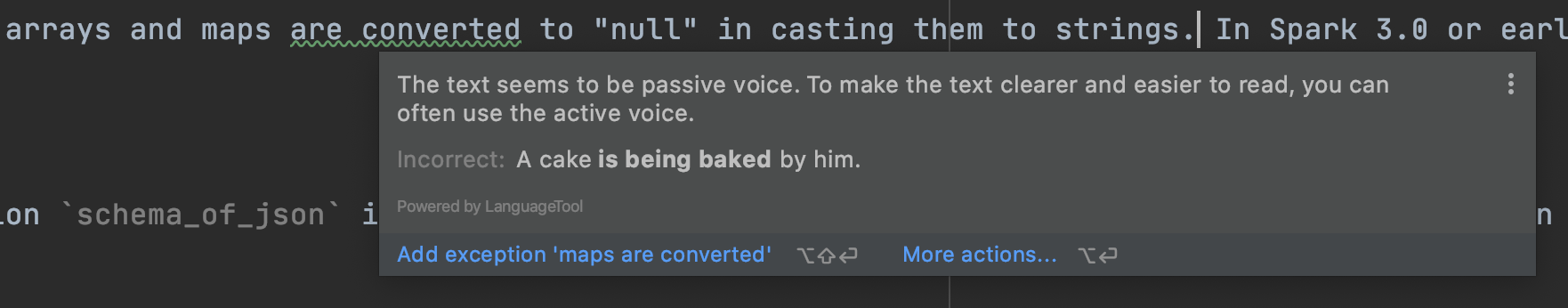

In Spark 3.1, NULL elements of structures, arrays and maps are converted to "null" in casting them to strings.


The passive stuff was not recommended by IntelliJ IDEA ;-) Native speakers don't like it.


OK, but let's highlight it's for "casting to string", not for general CAST


I have replaced it by your sentence already.

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/Cast.scala


Test build #127083 has finished for PR 29311 at commit

Test build #127088 has finished for PR 29311 at commit

Thanks, merging to master!

What changes were proposed in this pull request?

Convert `NULL` elements of maps, structs and arrays to the `"null"` string while converting maps/struct/array values to strings. The SQL config `spark.sql.legacy.omitNestedNullInCast.enabled` controls the behaviour. When it is `true`, `NULL` elements of structs/maps/arrays will be omitted; otherwise, when it is `false`, `NULL` elements will be converted to `"null"`.

Why are the changes needed?

`.show()` is different from conversions to Hive strings, and as a consequence its output is different from `spark-sql` (sql tests):

Does this PR introduce any user-facing change?

Yes, before:

After:

How was this patch tested?

By the existing test suite `CastSuite`.
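As a rough illustration of the rule stated under "What changes were proposed in this pull request?", the following plain-Python sketch models how nested `NULL` elements could be rendered with the flag on and off. It is not Spark code and does not call Spark: the function `render` and its parameter `omit_nested_null` are hypothetical names standing in for the cast and for the SQL config, Python lists, tuples and dicts stand in for arrays, structs and maps, and the separators and spacing are assumptions that may differ from Spark's actual output.

```python
def render(value, omit_nested_null=False):
    """Render a nested value as a string (toy model, not Spark's Cast).

    omit_nested_null=True  -> None elements are dropped (legacy behaviour)
    omit_nested_null=False -> None elements become the string "null"
    """
    def element(v):
        # Only *nested* NULLs are affected by the flag.
        if v is None:
            return "" if omit_nested_null else "null"
        return render(v, omit_nested_null)

    if isinstance(value, dict):   # stands in for a map
        body = ", ".join(f"{render(k)} -> {element(v)}" for k, v in value.items())
        return "{" + body + "}"
    if isinstance(value, tuple):  # stands in for a struct
        return "{" + ", ".join(element(v) for v in value) + "}"
    if isinstance(value, list):   # stands in for an array
        return "[" + ", ".join(element(v) for v in value) + "]"
    return str(value)


if __name__ == "__main__":
    sample = [1, None, 3]
    print(render(sample))                         # flag off: NULL shown as "null"
    print(render(sample, omit_nested_null=True))  # flag on: NULL omitted
```

In this model the flag only changes how a `None` inside a container is printed; non-null elements and the enclosing brackets are rendered the same way in both modes.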