-

Notifications

You must be signed in to change notification settings - Fork 29k

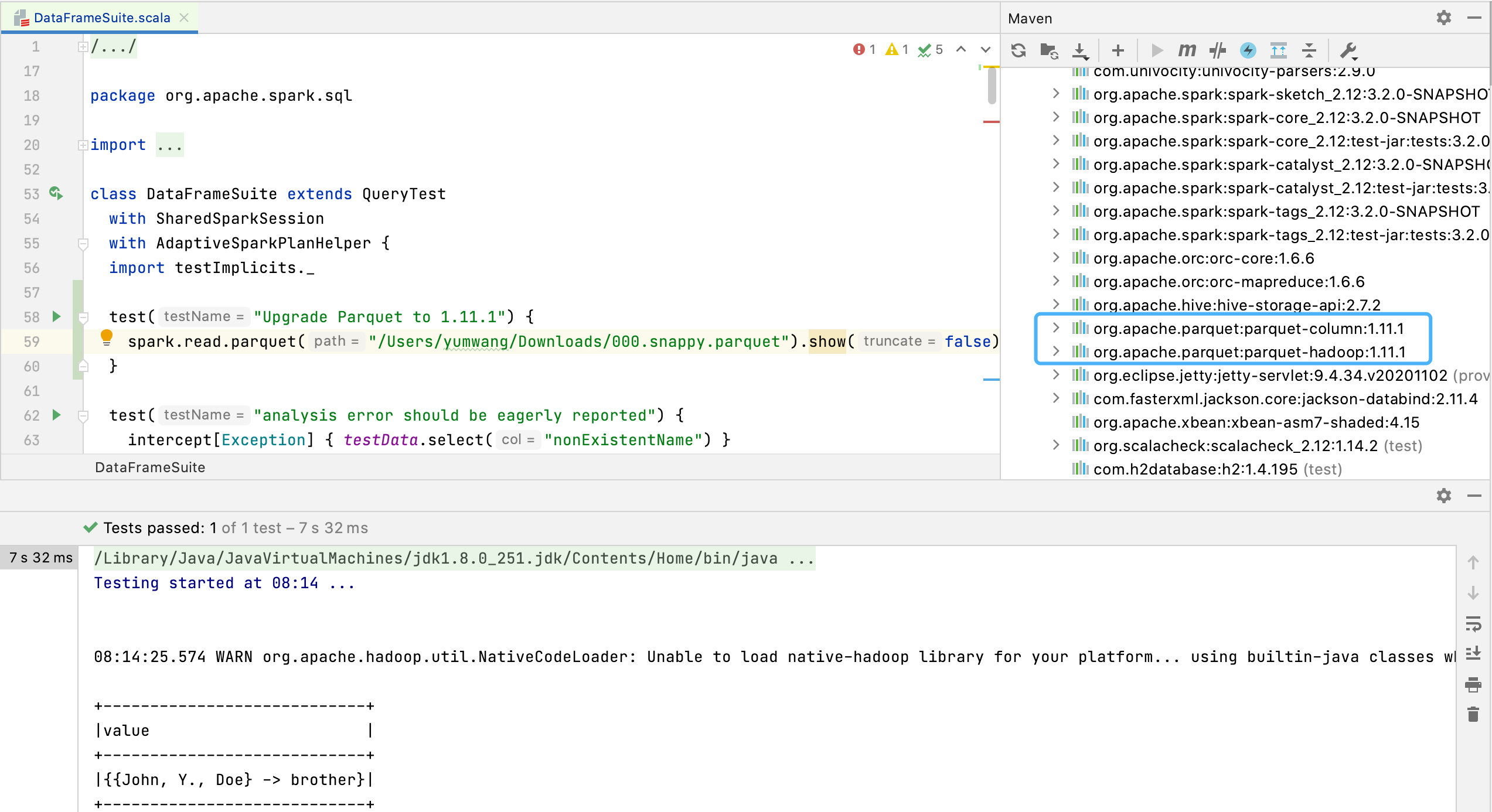

[SPARK-26346][BUILD][SQL] Upgrade Parquet to 1.11.1 #26804

    @@ -251,7 +251,7 @@ class ParquetSchemaInferenceSuite extends ParquetSchemaTest {
     """
     |message root {
     |  optional group _1 (MAP) {
    -|    repeated group map (MAP_KEY_VALUE) {
    +|    repeated group key_value (MAP_KEY_VALUE) {
     |      required int32 key;
     |      optional binary value (UTF8);
     |    }

    @@ -267,7 +267,7 @@ class ParquetSchemaInferenceSuite extends ParquetSchemaTest {
     """
     |message root {
     |  optional group _1 (MAP) {
    -|    repeated group map (MAP_KEY_VALUE) {
    +|    repeated group key_value (MAP_KEY_VALUE) {
     |      required group key {
     |        optional binary _1 (UTF8);
     |        optional binary _2 (UTF8);

    @@ -300,7 +300,7 @@ class ParquetSchemaInferenceSuite extends ParquetSchemaTest {
     """
     |message root {
     |  optional group _1 (MAP_KEY_VALUE) {
    -|    repeated group map {
    +|    repeated group key_value {
     |      required int32 key;
     |      optional group value {
     |        optional binary _1 (UTF8);

    @@ -740,7 +740,7 @@ class ParquetSchemaSuite extends ParquetSchemaTest {
     nullable = true))),
     """message root {
     |  optional group f1 (MAP_KEY_VALUE) {
    -|    repeated group map {
    +|    repeated group key_value {
     |      required int32 num;
     |      required binary str (UTF8);
     |    }

    @@ -759,7 +759,7 @@ class ParquetSchemaSuite extends ParquetSchemaTest {
     nullable = true))),
     """message root {
     |  optional group f1 (MAP) {
    -|    repeated group map (MAP_KEY_VALUE) {
    +|    repeated group key_value (MAP_KEY_VALUE) {

Member

Would this possibly be a breaking change to files written as Parquet? May be a dumb question.

Member

Looking at the original PR, I think the change should be backward-compatible (

Member

Seems better to test both.

     |      required int32 key;
     |      required binary value (UTF8);
     |    }

    @@ -797,7 +797,7 @@ class ParquetSchemaSuite extends ParquetSchemaTest {
     nullable = true))),
     """message root {
     |  optional group f1 (MAP_KEY_VALUE) {
    -|    repeated group map {
    +|    repeated group key_value {
     |      required int32 num;
     |      optional binary str (UTF8);
     |    }

    @@ -816,7 +816,7 @@ class ParquetSchemaSuite extends ParquetSchemaTest {
     nullable = true))),
     """message root {
     |  optional group f1 (MAP) {
    -|    repeated group map (MAP_KEY_VALUE) {
    +|    repeated group key_value (MAP_KEY_VALUE) {
     |      required int32 key;
     |      optional binary value (UTF8);
     |    }

    @@ -857,7 +857,7 @@ class ParquetSchemaSuite extends ParquetSchemaTest {
     nullable = true))),
     """message root {
     |  optional group f1 (MAP) {
    -|    repeated group map (MAP_KEY_VALUE) {
    +|    repeated group key_value (MAP_KEY_VALUE) {
     |      required int32 key;
     |      required binary value (UTF8);
     |    }

    @@ -893,7 +893,7 @@ class ParquetSchemaSuite extends ParquetSchemaTest {
     nullable = true))),
     """message root {
     |  optional group f1 (MAP) {
    -|    repeated group map (MAP_KEY_VALUE) {
    +|    repeated group key_value (MAP_KEY_VALUE) {
     |      required int32 key;
     |      optional binary value (UTF8);
     |    }

    @@ -1447,7 +1447,7 @@ class ParquetSchemaSuite extends ParquetSchemaTest {
     parquetSchema =
     """message root {
     |  required group f0 (MAP) {
    -|    repeated group map (MAP_KEY_VALUE) {
    +|    repeated group key_value (MAP_KEY_VALUE) {
     |      required int32 key;
     |      required group value {
     |        required int32 value_f0;

    @@ -1472,7 +1472,7 @@ class ParquetSchemaSuite extends ParquetSchemaTest {
     expectedSchema =
     """message root {
     |  required group f0 (MAP) {
    -|    repeated group map (MAP_KEY_VALUE) {
    +|    repeated group key_value (MAP_KEY_VALUE) {
     |      required int32 key;
     |      required group value {
     |        required int64 value_f1;


We do not need this; please see PARQUET-1497 for more details.