
[SPARK-29712][SQL] Take into account the left bound in fromDayTimeString()

#26358


Test build #113085 has finished for PR 26358 at commit


Test build #113125 has finished for PR 26358 at commit

jenkins, retest this, please

Test build #113158 has finished for PR 26358 at commit

@srowen @dongjoon-hyun @cloud-fan Please have a look at this.

Do you mean pgsql has a different behavior?

It ignores

```
maxim=# select interval '5 12:40:30.999999999' hour to minute;
    interval
-----------------
 5 days 12:40:00
(1 row)
```

Does the SQL spec define the behavior? I tried Oracle, and it fails if the from/to field doesn't match the actual value.

Test build #113197 has finished for PR 26358 at commit

Pardon the dumb question, but what is 'hour to minute' supposed to mean? Select just the hours and minutes out of the interval? Yeah, the pgsql implementation doesn't seem to do that, whether a bug or by design. Just trying to understand what we think the semantics are, and whether they need to be different in Spark.

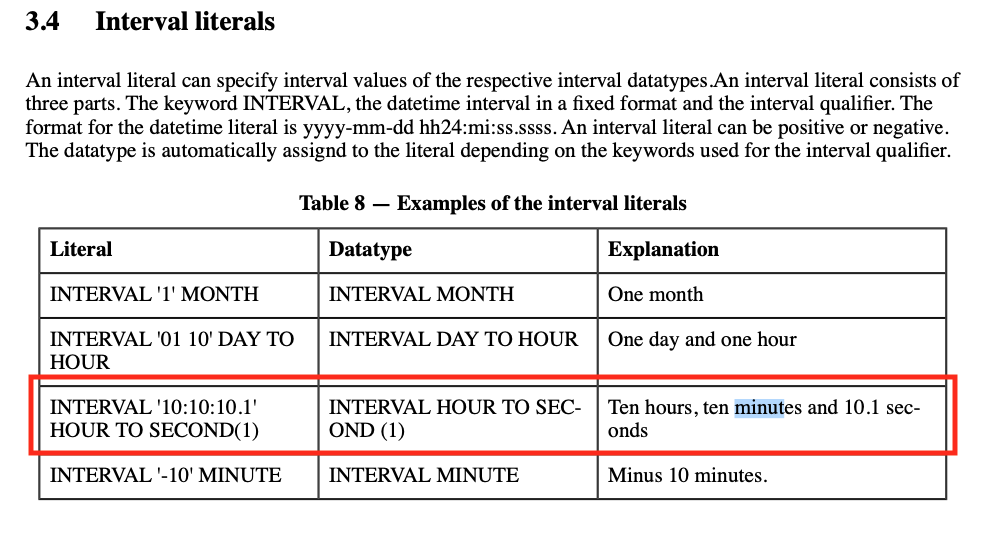

I haven't found a precise description of the acceptable input string, but it seems the format should be defined by the interval qualifier. For example, if it is

spark/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/IntervalUtils.scala, lines 182 to 183 in a4382f7

Hm, none of those examples show 'extracting' a subset of the interval. It seems like a way to specify what the unit-less string means. If so, the pgsql example you have above ought to fail, as the string doesn't match the units. It seems like the current behavior works like pgsql? If so, is that maybe an OK stance on the semantics in these 'incorrect' cases?

I am not sure that fully following other DBMSs in both bugs and features is the right decision. From the user's point of view, if I specify

Since this is only for interval literals, I think it doesn't hurt if we fail on an invalid string format. The Oracle behavior looks more reasonable to me. We can try more databases to see if that's a common behavior.

For example, MySQL is strict, as proposed in the PR:

```
mysql> SELECT DATE_SUB("1998-01-01 00:00:00", INTERVAL "1 1:1" HOUR_MINUTE);
+---------------------------------------------------------------+
| DATE_SUB("1998-01-01 00:00:00", INTERVAL "1 1:1" HOUR_MINUTE) |
+---------------------------------------------------------------+
| NULL                                                          |
+---------------------------------------------------------------+
1 row in set, 1 warning (0.00 sec)

mysql> SELECT DATE_SUB("1998-01-01 00:00:00", INTERVAL "1:1" HOUR_MINUTE);
+-------------------------------------------------------------+
| DATE_SUB("1998-01-01 00:00:00", INTERVAL "1:1" HOUR_MINUTE) |
+-------------------------------------------------------------+
| 1997-12-31 22:59:00                                         |
+-------------------------------------------------------------+
1 row in set (0.00 sec)
```

@cloud-fan @srowen What can I do to move this PR forward, apart from resolving the conflicts?

OK, so it would be reasonable to fail / return null, to match Oracle? There doesn't seem to be consistent behavior out there. I guess I prefer to fail this if it doesn't make sense semantically.

…y-time-string # Conflicts: # sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/IntervalUtils.scala # sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/IntervalUtilsSuite.scala # sql/core/src/test/resources/sql-tests/results/literals.sql.out


Test build #113472 has finished for PR 26358 at commit

So let's keep the existing behavior if the pgsql dialect is set, and fail for invalid bounds otherwise.

…y-time-string # Conflicts: # sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/IntervalUtils.scala # sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/IntervalUtilsSuite.scala

…y-time-string # Conflicts: # sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/IntervalUtils.scala # sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/IntervalUtilsSuite.scala

`withSQLConf(SQLConf.DIALECT.key -> "Spark") {`


can we have a test case to show the error behavior?

Just to be clear, this PR only truncates results according to the specified bounds. In particular, it takes the left bound into account. Could you please explain what you mean by error behavior?


I think the conclusion is to fail for invalid bounds?

Do you mean the case where a string doesn't match the specified bounds, like in literals.sql: https://github.com/apache/spark/pull/26358/files#diff-4f9e28af8e9fcb40a8a99b4e49f3b9b2R424 ?


yea, like what Oracle does.

Here is the PR with strict parsing: #26473


Test build #113576 has finished for PR 26358 at commit

What changes were proposed in this pull request?

In the PR, I propose to check the left bound, the `from` interval unit, and reset all unit values outside the specified range of interval units. Currently, `IntervalUtils.fromDayTimeString()` takes into account only the right bound but not the left one.

Note: the reset is not performed if `spark.sql.dialect = PostgreSQL`.

Why are the changes needed?

This fix makes `fromDayTimeString()` consistent in bound handling.

Does this PR introduce any user-facing change?

Yes, before:

After:

How was this patch tested?

`IntervalUtilsSuite`, `literals.sql` and `interval.sql`
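
A minimal sketch of the bound handling described in this PR, assuming a simplified `D HH:MM:SS` input without fractional seconds or a sign. The object `DayTimeBoundsSketch` and its `truncate` helper are hypothetical names used only for illustration; this is not the code in `IntervalUtils`, and it does not model the PostgreSQL dialect exception.

```scala
// Illustrative sketch only: not Spark's IntervalUtils implementation.
object DayTimeBoundsSketch {
  // Day-time units ordered from the largest to the smallest.
  val units: Vector[String] = Vector("day", "hour", "minute", "second")

  // Hypothetical helper: parses "D HH:MM:SS" and resets to zero every
  // field that falls outside the inclusive [from, to] range of units.
  def truncate(input: String, from: String, to: String): Map[String, Long] = {
    val pattern = """(\d+) (\d+):(\d+):(\d+)""".r
    val fromIdx = units.indexOf(from)
    val toIdx = units.indexOf(to)
    require(fromIdx >= 0 && toIdx >= fromIdx, s"invalid bounds: $from to $to")
    input match {
      case pattern(d, h, m, s) =>
        val values = Vector(d, h, m, s).map(_.toLong)
        units.zip(values).zipWithIndex.map { case ((unit, value), i) =>
          // Keep the value only when the unit lies between both bounds.
          unit -> (if (i >= fromIdx && i <= toIdx) value else 0L)
        }.toMap
      case _ =>
        throw new IllegalArgumentException(s"cannot parse: $input")
    }
  }
}
```

With this sketch, `DayTimeBoundsSketch.truncate("5 12:40:30", "hour", "minute")` resets both the days (left bound) and the seconds (right bound), while passing `"day"` as the left bound keeps the days, which mirrors honoring only the right bound.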