-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-28659][SQL] Use data source if convertible in insert overwrite directory #25398

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Can you add tests? |

yes i will add tests and update the PR |

|

@maropu added the testcase! |

|

ok to test |

|

Thanks! |

|

Test build #108912 has finished for PR 25398 at commit

|

|

Test build #108920 has finished for PR 25398 at commit

|

| (ctx.LOCAL != null, storage, Some(DDLUtils.HIVE_PROVIDER)) | ||

| val fileFormat = extractFileFormat(fileStorage.serde) | ||

| (ctx.LOCAL != null, storage, Some(fileFormat)) | ||

| } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Are you sure this is correct? It seems a valid value is Some(DDLUtils.HIVE_PROVIDER) or None for the third parameter.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

in case of parquet and orc we can use the respective file format instead of hive.

In case of ctas also we convert to use data source https://github.com/viirya/spark-1/blob/839a6ce1732fa37b5f8ec9afa2d51730fc6ca691/sql/hive/src/main/scala/org/apache/spark/sql/hive/HiveStrategies.scala#L188

This will make it inline with that behavior.

| sql( | ||

| s""" | ||

| |INSERT OVERWRITE LOCAL DIRECTORY '$path' | ||

| |STORED AS orc |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why did you delete this?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

if we use stored as and file format is orc or parquet it will be converted to data source flow.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I believe the test case name should be modified correspondingly and a new test case for (orc/parquet) should be added.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@advancedxy i have updated the testcase name and for orc and parquet testcase was already added. As they will be converted to data source, the data in the directory would be compressed.

| sql( | ||

| s""" | ||

| |INSERT OVERWRITE LOCAL DIRECTORY '$path' | ||

| |STORED AS orc |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I believe the test case name should be modified correspondingly and a new test case for (orc/parquet) should be added.

| } | ||

|

|

||

| private def extractFileFormat(serde: Option[String]): String = { | ||

| if (serde.toString.contains("parquet")) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Although None.toString is "None", this looks like a hack to me.

How about:

serde.map({ x =>

val lowerCaseSerde = x.toLowerCase(Locale.ROOT)

if (lowerCaseSerde.contains("parquet") "parquet"

else if (lowerCaseSerde.contains("orc") "orc"

else DDLUtils.HIVE_PROVIDER

}).getOrElse( DDLUtils.HIVE_PROVIDER)

|

Test build #108968 has finished for PR 25398 at commit

|

|

Test build #108972 has finished for PR 25398 at commit

|

|

cc @HyukjinKwon |

|

ok to test |

|

Test build #110698 has finished for PR 25398 at commit

|

|

failed test doesn't look related to this PR @HyukjinKwon |

|

retest this please |

|

Test build #110857 has finished for PR 25398 at commit

|

|

retest this please |

1 similar comment

|

retest this please |

|

Test build #110880 has finished for PR 25398 at commit

|

sql/core/src/main/scala/org/apache/spark/sql/execution/SparkSqlParser.scala

Show resolved

Hide resolved

|

If you only target to fix Hive ser/de to respect compression, why don't you set Hive compression properly? |

Yes compression can be achieved by setting Hive ser/de or |

HyukjinKwon

left a comment

HyukjinKwon

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Alright, if we want to do the conversion, it should have a configuration like spark.sql.hive.convertMetastoreCtas which respects spark.sql.hive.convertMetastoreParquet or spark.sql.hive.convertMetastoreOrc.

|

Can one of the admins verify this patch? |

|

We're closing this PR because it hasn't been updated in a while. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable. |

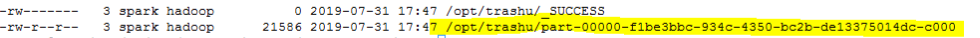

What changes were proposed in this pull request?

In insert overwrite directory while using

STORED AS file_format, files are not compressed.In this PR it is converted to

datasourceif it is convertible, to make it inline withCTASbehavior which is fixed in this PRWhy are the changes needed?

To make the behavior inline with

CTASwhile usingSTORED AS file_formatDoes this PR introduce any user-facing change?

Yes, After the fix of this PR now

STORED AS file_formatwill be converted todatasourceif it is convertibleBefore

After

How was this patch tested?

New testcase is added