
[SPARK-28349][SQL] Add FALSE and SETMINUS to ansiNonReserved #25114


Test build #107530 has finished for PR 25114 at commit


Is this correct? I haven't checked carefully yet, but I think the meaning of 'reserved' is essentially different between Spark and the other systems. For example, the keywords below are reserved in PostgreSQL, but they can be used as alias names:


First, FALSE is a reserved word. Second, see https://www.postgresql.org/docs/7.3/sql-keywords-appendix.html

We do not need to follow PostgreSQL in supporting reserved words as column aliases.


Thank you @gatorsmile

    spark-sql> set spark.sql.parser.ansi.enabled=true;
    spark.sql.parser.ansi.enabled true
    spark-sql> select extract(year from timestamp '2001-02-16 20:38:40') ;
    Error in query:
    no viable alternative at input 'year'(line 1, pos 15)

    == SQL ==
    select extract(year from timestamp '2001-02-16 20:38:40')
    ---------------^^^

    spark-sql> set spark.sql.parser.ansi.enabled=false;
    spark.sql.parser.ansi.enabled false
    spark-sql> select extract(year from timestamp '2001-02-16 20:38:40') ;
    2001

Do we need to add


I don't think we need to do so, because we basically follow SQL-2011 now.


Or add a regular_id? Some UDFs are also not available in ANSI mode:

    spark-sql> select left('12345', 2);
    12
    spark-sql> set spark.sql.parser.ansi.enabled=true;
    spark.sql.parser.ansi.enabled true
    spark-sql> select left('12345', 2);
    Error in query:
    no viable alternative at input 'left'(line 1, pos 7)

    == SQL ==
    select left('12345', 2)
    -------^^^
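To make the behaviour in the transcripts above concrete, here is a toy sketch of the reserved vs. non-reserved distinction. It is not Spark's parser: the `KEYWORDS` set, the `usable_as_identifier` helper, and the assumption that the non-ANSI parser accepts every keyword as an identifier are all hypothetical simplifications chosen for illustration.

```python
# Toy model only. The keyword set and helper below are hypothetical and
# far smaller than Spark's real grammar.
KEYWORDS = {"SELECT", "FROM", "LEFT", "YEAR", "FALSE", "SETMINUS"}


def usable_as_identifier(token, ansi_enabled, ansi_non_reserved=frozenset()):
    """Return True if `token` may appear where an identifier is expected."""
    word = token.upper()
    if word not in KEYWORDS:
        return True  # ordinary identifiers are always accepted
    if not ansi_enabled:
        return True  # simplification: the non-ANSI parser is modelled as lenient
    # ANSI mode: a keyword passes only if it is listed as non-reserved
    return word in ansi_non_reserved


print(usable_as_identifier("left", ansi_enabled=False))  # True
print(usable_as_identifier("left", ansi_enabled=True))   # False
print(usable_as_identifier("left", ansi_enabled=True, ansi_non_reserved={"LEFT"}))  # True
```

In this model, adding a keyword to the non-reserved set is what lets it be used as a function name or alias again in ANSI mode, which is the trade-off being discussed in this thread.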


Rather, wouldn't it be better to list these functions and rename them if necessary? IMO, making these keywords non-reserved worsens parser error messages...

What changes were proposed in this pull request?

How to reproduce this issue:

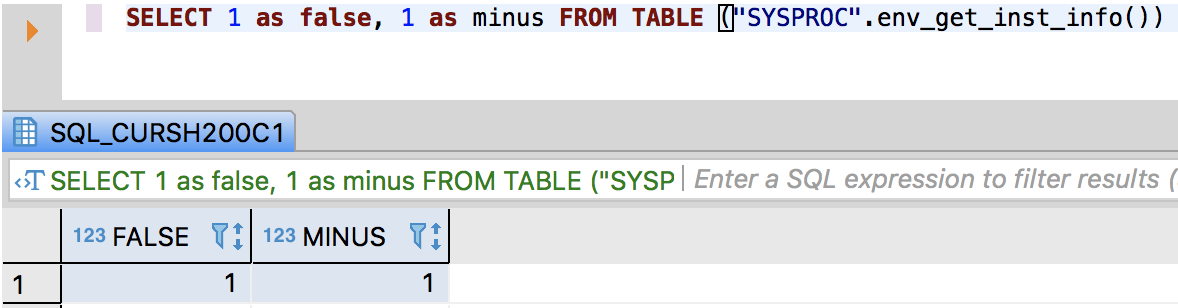

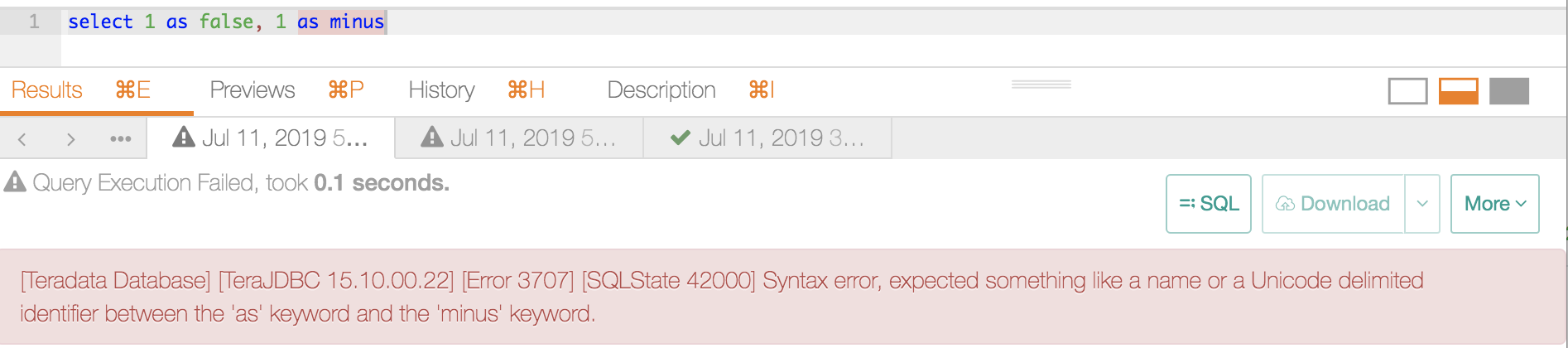

Behaviour of other databases:

PostgreSQL:

Vertica:

SQL Server:

DB2:

Oracle:

Teradata:

This PR adds FALSE and SETMINUS to ansiNonReserved to fix this issue.

How was this patch tested?

unit tests and manual tests: