
[SPARK-20090][PYTHON] Add StructType.fieldNames in PySpark #18618

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

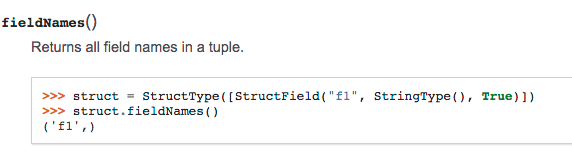

    def fieldNames(self):
        """
        Returns all field names in a tuple.


| This is the data type representing a :class:`Row`. | ||

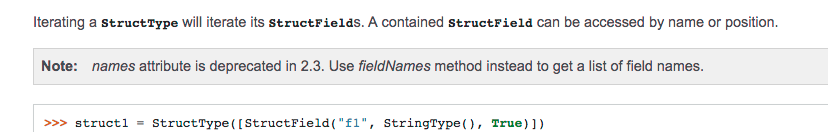

| Iterating a :class:`StructType` will iterate its :class:`StructField`s. | ||

| Iterating a :class:`StructType` will iterate its :class:`StructField`\\s. |


Thanks for fixing the documentation issue while you were here :) +1

Okay, @jkbradley, I tried to find and build some arguments for this API here, although I am actually rather neutral on it (the reasons above might not be strong enough to justify adding an API). Could you take a look, please? I am also fine with closing this PR/JIRA.

Test build #79578 has finished for PR 18618 at commit

Thanks @HyukjinKwon! I'm still in favor of adding this, partly to match Scala and partly to have API docs for it. I just have one question: is there a reason fieldNames should return a tuple in Python rather than a list? (I always think of Python lists as the analogue of Scala Arrays.)

Either way is fine with me. Let me update this to return a list. I was just thinking that struct/row are tuple-like, so the output could be a tuple as well.
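The tuple-versus-list question comes down to mutability. A plain-Python sketch (no Spark involved; `internal_names` is a hypothetical stand-in for a schema's internal name list) shows that both `tuple(...)` and `list(...)` produce independent copies, but only the list can be edited by the caller:

```python
# Plain-Python illustration: tuple(...) and list(...) both copy the underlying
# name list, but only the list copy can be mutated by the caller.
internal_names = ["f1", "f2"]  # hypothetical stand-in for a schema's name list

as_tuple = tuple(internal_names)
as_list = list(internal_names)

# A tuple rejects in-place item assignment outright.
try:
    as_tuple[0] = "x"
except TypeError:
    tuple_is_immutable = True
else:
    tuple_is_immutable = False

# A list copy can be changed freely without touching the original list.
as_list[0] = "x"

print(tuple_is_immutable)  # True
print(as_list)             # ['x', 'f2']
print(internal_names)      # ['f1', 'f2']
```

Either choice protects the schema from accidental edits; a list simply lines up more naturally with Scala's `Array[String]`, which is the argument made in the thread.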

        >>> struct.fieldNames()
        ['f1']
        """
        return list(self.names)


Just to note: this `list` call is required to make a copy, which prevents the unexpected behaviour described in the PR description when `names` is manipulated.

    >>> df = spark.range(1)
    >>> a = df.schema.fieldNames()
    >>> b = df.schema.names
    >>> df.schema.names[0] = "a"
    >>> a
    ['id']
    >>> b
    ['a']
    >>> a[0] = "aaaa"
    >>> a
    ['aaaa']
    >>> b
    ['a']
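To see why the defensive copy matters without starting Spark, here is a minimal sketch built on a hypothetical `MiniStructType` class. It assumes, as with PySpark's `StructType`, that the class keeps a list of fields plus a separately built `names` list; `is_consistent` is an illustrative helper, not a PySpark method.

```python
class MiniStructType:
    """Hypothetical stand-in for a schema class that stores its fields and,
    separately, a precomputed list of field names."""

    def __init__(self, fields):
        self.fields = list(fields)  # e.g. [("f1", "string")]
        self.names = [name for name, _ in self.fields]

    def fieldNames(self):
        # Return a copy so callers cannot mutate the internal name list.
        return list(self.names)

    def is_consistent(self):
        # True while the name list still matches the field list.
        return self.names == [name for name, _ in self.fields]


struct = MiniStructType([("f1", "string")])

safe = struct.fieldNames()
del safe[0]
print(struct.is_consistent())  # True: only the copy changed

unsafe = struct.names  # same list object as the internal one
del unsafe[0]
print(struct.is_consistent())  # False: names no longer match fields
```

The second `del` leaves one field but no names, which is the same kind of mismatch behind the `IllegalStateException` in the PR description.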

Test build #79631 has finished for PR 18618 at commit

@jkbradley, does this make sense to you in general?

holdenk left a comment

This looks like a good improvement. Would it make sense to deprecate the current undocumented API and move our internal usage to `_name`?
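As a hypothetical sketch of what that suggestion could look like (the PR itself settled on a documentation note instead), a read-only property can keep `names` working while emitting a `DeprecationWarning`, with internal code switched to a private attribute. The `Schema` class and the `_names` attribute are assumptions for illustration only, not PySpark code.

```python
import warnings


class Schema:
    """Hypothetical class: deprecate a public attribute while internal code
    moves to a private one (named `_names` here by assumption)."""

    def __init__(self, names):
        self._names = list(names)  # internal storage used by library code

    @property
    def names(self):
        # Still returns the internal list (as before) so legacy code keeps
        # working, but warns the caller that the attribute is deprecated.
        warnings.warn(
            "`names` is deprecated; use fieldNames() instead",
            DeprecationWarning,
            stacklevel=2,
        )
        return self._names

    def fieldNames(self):
        # Documented accessor that returns a defensive copy.
        return list(self._names)


schema = Schema(["id"])
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    legacy = schema.names

print(legacy)                       # ['id']
print(caught[0].category.__name__)  # DeprecationWarning
```

The trade-off is noise: every legacy access warns, which is one reason a plain documentation note can be the gentler first step.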

@holdenk, sure, makes sense but let me just leave a deprecation note for the

    .. note:: `names` attribute is deprecated in 2.3. Use `fieldNames` method instead
       to get a list of field names.


@holdenk, would you maybe still prefer to deprecate it? I am willing to follow your decision.


This is good enough :)

Test build #79814 has finished for PR 18618 at commit

felixcheung left a comment

LGTM

Would you guys mind if I give it a shot as my first merge :)?

retest this please

Oh, I'm sorry, I just merged this to master, but I'll leave the cherry-pick open in case you want to backport it to 2.2.X.

Oh, that's fine. I don't want to backport this. I'll give it a try on another small and safe one. Thank you!

Test build #80036 has finished for PR 18618 at commit

## What changes were proposed in this pull request?

This PR proposes `StructType.fieldNames`, which returns a copy of the field name list, instead of exposing the (undocumented) `StructType.names` list directly.

There are two points here:

- API consistency with Scala/Java

- Provide a safe way to get the field names. Manipulating the list returned by `StructType.names` mutates the schema's own name list and can cause unexpected behaviour, as below:

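```python
# Run in the PySpark shell, where `spark` is the active SparkSession.
from pyspark.sql.types import StructType, StructField, StringType

struct = StructType([StructField("f1", StringType(), True)])

# `names` is the schema's own internal list, not a copy.
names = struct.names
del names[0]  # removes "f1" from the schema's name list; `struct.fields` is unchanged

# The schema still has one field, but its name list is now empty.
spark.createDataFrame([{"f1": 1}], struct).show()
```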

```
...
java.lang.IllegalStateException: Input row doesn't have expected number of values required by the schema. 1 fields are required while 0 values are provided.
    at org.apache.spark.sql.execution.python.EvaluatePython$.fromJava(EvaluatePython.scala:138)
    at org.apache.spark.sql.SparkSession$$anonfun$6.apply(SparkSession.scala:741)
    at org.apache.spark.sql.SparkSession$$anonfun$6.apply(SparkSession.scala:741)
...
```
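The trace above reports 0 values for a one-field schema. A simplified, plain-Python model of the mechanism (an assumption about the conversion, not PySpark's actual code) is that each dict record becomes a row by pairing the schema's names with its fields, and the row length is then checked against the field count. The helpers `dict_to_row` and `check_row` are hypothetical.

```python
# Simplified, hypothetical model of converting a dict record into a row tuple.
fields = ["f1"]       # the schema's fields (represented here just by name)
names = list(fields)  # the schema's separate name list


def dict_to_row(record, names, fields):
    # zip() stops at the shorter input, so missing names silently drop values.
    return tuple(record.get(n) for n, _ in zip(names, fields))


def check_row(row, fields):
    # Reject rows whose length does not match the number of schema fields.
    if len(row) != len(fields):
        raise ValueError(
            f"{len(fields)} fields are required while {len(row)} values are provided."
        )
    return row


record = {"f1": 1}
print(check_row(dict_to_row(record, names, fields), fields))  # (1,)

del names[0]  # models `del struct.names[0]` on the real schema
error_message = None
try:
    check_row(dict_to_row(record, names, fields), fields)
except ValueError as exc:
    error_message = str(exc)
print(error_message)  # 1 fields are required while 0 values are provided.
```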

## How was this patch tested?

Added tests in `python/pyspark/sql/tests.py`.

Author: hyukjinkwon <[email protected]>

Closes #18618 from HyukjinKwon/SPARK-20090.

@holdenk Thanks for merging it! Just wondering: why is the "pushed a commit" notification from hubot? Did you use the

(Yeah, I was wondering too...)
