-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-21169] [core] Make sure to update application status to RUNNING if executors are accepted and RUNNING after recovery #18414

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

… RUNNING SPARK-21169

…utors are accepted and running after recovery

|

Can one of the admins verify this patch? |

| app.state = ApplicationState.RUNNING | ||

| logInfo(s"Application :: ${app.id} status updated to RUNNING state") | ||

| }}) | ||

| apps.foreach(f = appInfo => { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It would probably be more clear to write foreach like this. You don't need the extra parentheses.

apps.foreach { appInfo =>

...

}There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

My Bad, IDE auto corrected few things. Please review the latest update

| }}) | ||

| apps.foreach(f = appInfo => { | ||

| val app = idToApp(appInfo.id) | ||

| if (app.executors.size > 0 && |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It might be more clear to write this condition like this

if (app.executors.nonEmpty && app.executors.forall(_._2.state == ExecutorState.RUNNING))|

Updated logic as per the comments. Please review and let me know your comments. |

|

please fix up the PR title: http://spark.apache.org/contributing.html |

|

@srini-daruna I think I already addressed this issue in SPARK-12552, here is the code. Did you test with SPARK-12552 in or not? Also is that fix not enough to address the problem? |

|

@jerryshao Sorry, i have not used the code with SPARK-12552. I used older one and didn't check the fix. Do you think marking every one as RUNNING OK.? Because, applications may wait for resources sometimes. |

|

From my understanding, only when Master fully recovered, then |

## What changes were proposed in this pull request? This PR proposes to close stale PRs, mostly the same instances with apache#18017 Closes apache#14085 - [SPARK-16408][SQL] SparkSQL Added file get Exception: is a directory … Closes apache#14239 - [SPARK-16593] [CORE] [WIP] Provide a pre-fetch mechanism to accelerate shuffle stage. Closes apache#14567 - [SPARK-16992][PYSPARK] Python Pep8 formatting and import reorganisation Closes apache#14579 - [SPARK-16921][PYSPARK] RDD/DataFrame persist()/cache() should return Python context managers Closes apache#14601 - [SPARK-13979][Core] Killed executor is re spawned without AWS key… Closes apache#14830 - [SPARK-16992][PYSPARK][DOCS] import sort and autopep8 on Pyspark examples Closes apache#14963 - [SPARK-16992][PYSPARK] Virtualenv for Pylint and pep8 in lint-python Closes apache#15227 - [SPARK-17655][SQL]Remove unused variables declarations and definations in a WholeStageCodeGened stage Closes apache#15240 - [SPARK-17556] [CORE] [SQL] Executor side broadcast for broadcast joins Closes apache#15405 - [SPARK-15917][CORE] Added support for number of executors in Standalone [WIP] Closes apache#16099 - [SPARK-18665][SQL] set statement state to "ERROR" after user cancel job Closes apache#16445 - [SPARK-19043][SQL]Make SparkSQLSessionManager more configurable Closes apache#16618 - [SPARK-14409][ML][WIP] Add RankingEvaluator Closes apache#16766 - [SPARK-19426][SQL] Custom coalesce for Dataset Closes apache#16832 - [SPARK-19490][SQL] ignore case sensitivity when filtering hive partition columns Closes apache#17052 - [SPARK-19690][SS] Join a streaming DataFrame with a batch DataFrame which has an aggregation may not work Closes apache#17267 - [SPARK-19926][PYSPARK] Make pyspark exception more user-friendly Closes apache#17371 - [SPARK-19903][PYSPARK][SS] window operator miss the `watermark` metadata of time column Closes apache#17401 - [SPARK-18364][YARN] Expose metrics for YarnShuffleService Closes apache#17519 - [SPARK-15352][Doc] follow-up: add configuration docs for topology-aware block replication Closes apache#17530 - [SPARK-5158] Access kerberized HDFS from Spark standalone Closes apache#17854 - [SPARK-20564][Deploy] Reduce massive executor failures when executor count is large (>2000) Closes apache#17979 - [SPARK-19320][MESOS][WIP]allow specifying a hard limit on number of gpus required in each spark executor when running on mesos Closes apache#18127 - [SPARK-6628][SQL][Branch-2.1] Fix ClassCastException when executing sql statement 'insert into' on hbase table Closes apache#18236 - [SPARK-21015] Check field name is not null and empty in GenericRowWit… Closes apache#18269 - [SPARK-21056][SQL] Use at most one spark job to list files in InMemoryFileIndex Closes apache#18328 - [SPARK-21121][SQL] Support changing storage level via the spark.sql.inMemoryColumnarStorage.level variable Closes apache#18354 - [SPARK-18016][SQL][CATALYST][BRANCH-2.1] Code Generation: Constant Pool Limit - Class Splitting Closes apache#18383 - [SPARK-21167][SS] Set kafka clientId while fetch messages Closes apache#18414 - [SPARK-21169] [core] Make sure to update application status to RUNNING if executors are accepted and RUNNING after recovery Closes apache#18432 - resolve com.esotericsoftware.kryo.KryoException Closes apache#18490 - [SPARK-21269][Core][WIP] Fix FetchFailedException when enable maxReqSizeShuffleToMem and KryoSerializer Closes apache#18585 - SPARK-21359 Closes apache#18609 - Spark SQL merge small files to big files Update InsertIntoHiveTable.scala Added: Closes apache#18308 - [SPARK-21099][Spark Core] INFO Log Message Using Incorrect Executor I… Closes apache#18599 - [SPARK-21372] spark writes one log file even I set the number of spark_rotate_log to 0 Closes apache#18619 - [SPARK-21397][BUILD]Maven shade plugin adding dependency-reduced-pom.xml to … Closes apache#18667 - Fix the simpleString used in error messages Closes apache#18782 - Branch 2.1 Added: Closes apache#17694 - [SPARK-12717][PYSPARK] Resolving race condition with pyspark broadcasts when using multiple threads Added: Closes apache#16456 - [SPARK-18994] clean up the local directories for application in future by annother thread Closes apache#18683 - [SPARK-21474][CORE] Make number of parallel fetches from a reducer configurable Closes apache#18690 - [SPARK-21334][CORE] Add metrics reporting service to External Shuffle Server Added: Closes apache#18827 - Merge pull request 1 from apache/master ## How was this patch tested? N/A Author: hyukjinkwon <[email protected]> Closes apache#18780 from HyukjinKwon/close-prs.

What changes were proposed in this pull request?

In Spark-HA, after active master failure, stand-by mater is choosen and workers,applications will be re-registered with new master.

However, application state is not moving from WAITING state to RUNNING state.

This code change checks applications after recovery and if all executors are RUNNING

(Please fill in changes proposed in this fix)

In the method completeRecovery in org.apache.spark.deploy.master.Master class, where cleanup of the workers and applictions is being done,

i have added code change to move the application to RUNNING state, if application has more than 1 executors and all of them are in RUNNING status.

In some cases, executors will be in LOADING status, but we cannot consider those to change application state to RUNNING, as executors in LOADING status might also happen due to resource unavailability.

How was this patch tested?

To check existing bug.

1) Created a zookeeper cluster

2) I have configured spark with recovery mode zookeeper and updated spark-env.sh with recovery mode settings.

3) Updated spark-defaults in both worker and master with both the masters. spark://:7077,:7077

4) Started spark master1 and spark master 2 and and workers in the order.

5) master1 is ACTIVE and master2 showed as STANDBY.

6) Started a sample streaming application.

7) Killed the spark-master1, and waited for the workers and applications to appear in master2. They appeared and job showed up in WAITING state.

To check implemented fix:

1) I have built spark-core

2) removed spark-core jar in SPARK_HOME/jars folder and replaced with the newly built one.

3) Performed the same steps as above, and checked job status

4) checked spark-master logs to ensure the log message got printed and

(Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)

(If this patch involves UI changes, please attach a screenshot; otherwise, remove this)

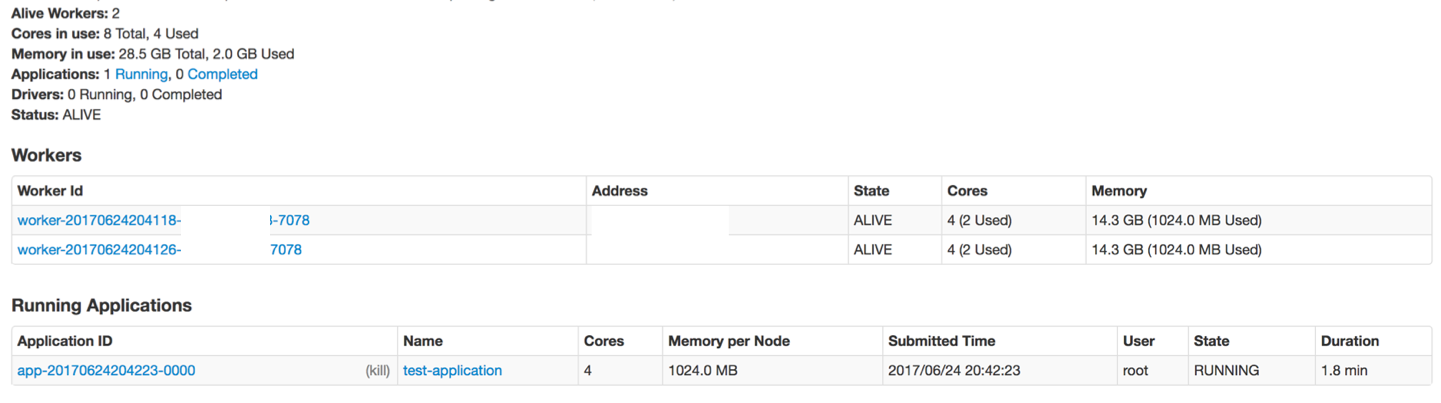

Master 1 UI Before Fix

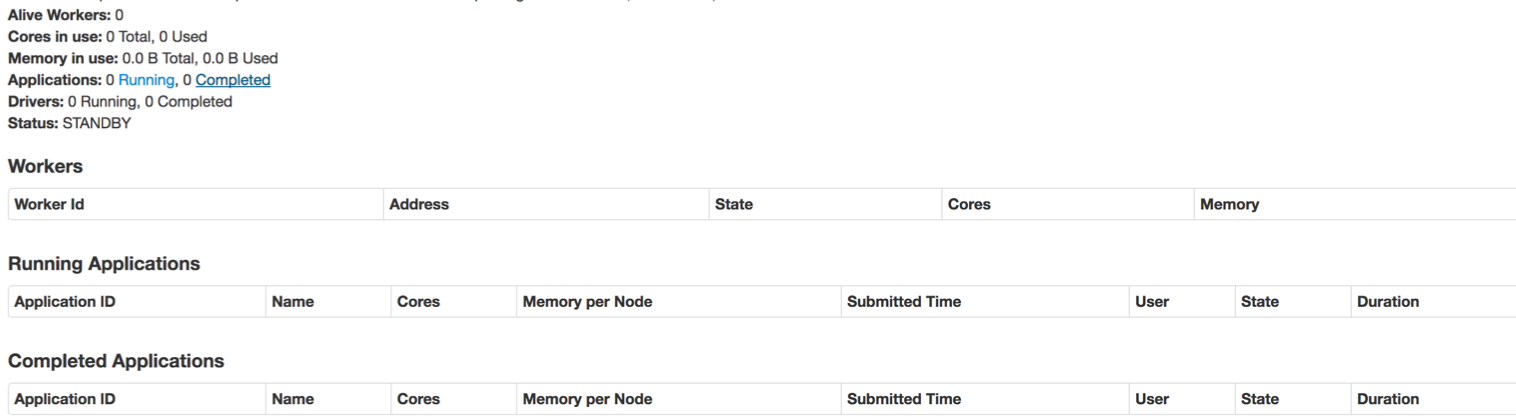

Master 2 UI Before Fix

Master 2 UI (before fix), which became active after Master is killed.

(Application can be seen with WAITING status)

Master 1 UI after fix

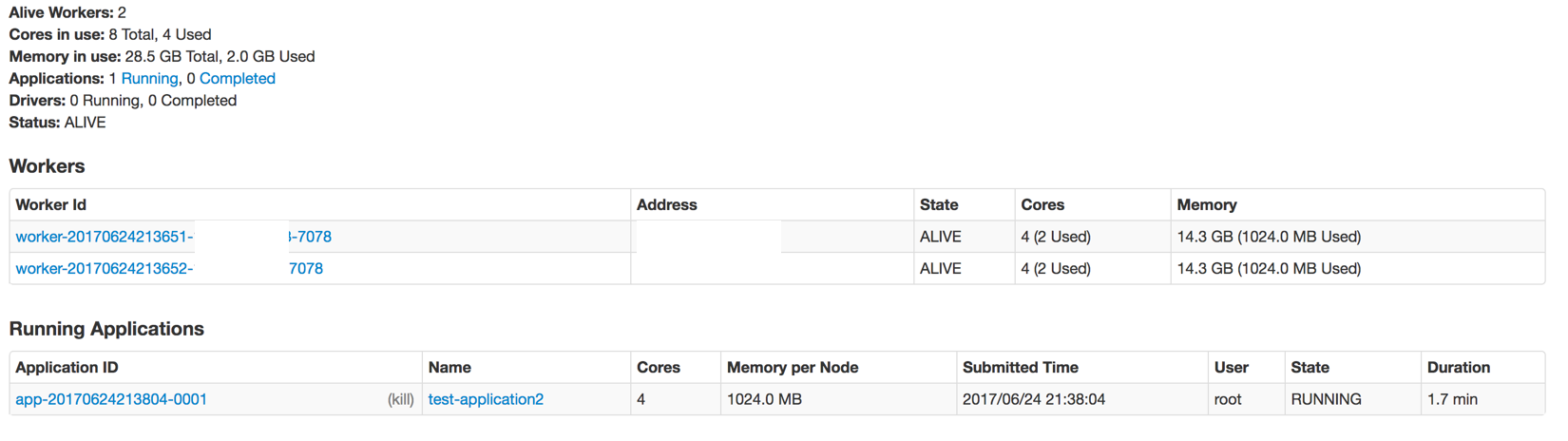

Mater 2 UI (after fix), after master is killed and Master became active.

(Application can be see with RUNNING state)

he.org/contributing.html before opening a pull request.