-

Notifications

You must be signed in to change notification settings - Fork 587

HDDS-3223. Improve s3g read 1GB object efficiency by 100 times #843

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

ced02e6 to

2388d62

Compare

bharatviswa504

left a comment

bharatviswa504

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank You @runzhiwang for the analysis and great improvement in read performance.

I see there is one more usage of IOUtils.copyLarge in createMultipartKey, can we update over there also with a similar change.

L534:

if (range != null) {

RangeHeader rangeHeader =

RangeHeaderParserUtil.parseRangeHeader(range, 0);

IOUtils.copyLarge(sourceObject, ozoneOutputStream,

rangeHeader.getStartOffset(),

rangeHeader.getEndOffset() - rangeHeader.getStartOffset());

}

And also can we add test for S3WrapperInputStream which tests a new method with multiple seek values.

hadoop-ozone/s3gateway/src/main/java/org/apache/hadoop/ozone/s3/io/S3WrapperInputStream.java

Outdated

Show resolved

Hide resolved

|

|

@bharatviswa504 Hi, I move copyLarge into KeyInputStream, and add integration test in TestKeyInputStream for it. And also change all the use of IOUtils.copyLarge. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I delete the following code because the InputStream in unit test is ByteArrayInputStream, it cannot be cast into KeyInputStream when new S3WrapperInputStream(sourceObject.getInputStream())).

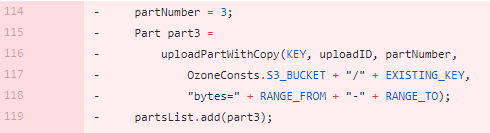

It's ok to delete it, because there are other test to cover the case, such as MultipartUpload.robot as the image shows.

|

+1 LGTM. |

What changes were proposed in this pull request?

What's the problem ?

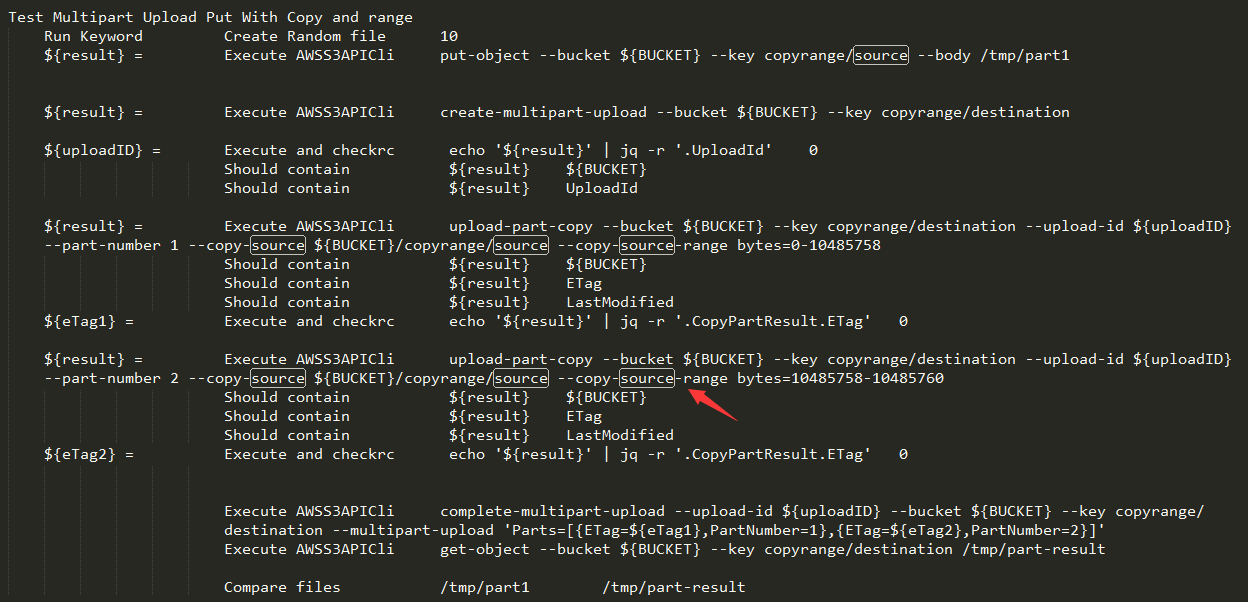

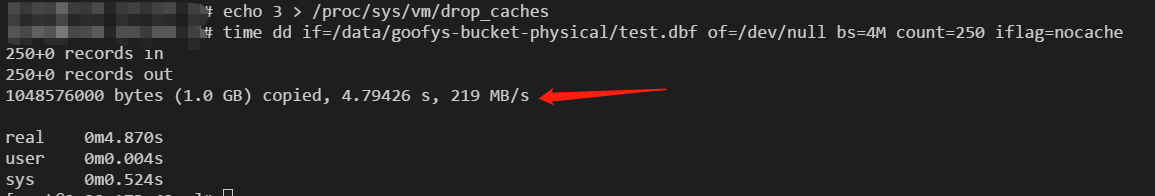

Use dd to read 1000M object from ozone bucket mounted by goofys, it cost about 470 seconds, i.e. 2.2M/s, which is too slow.

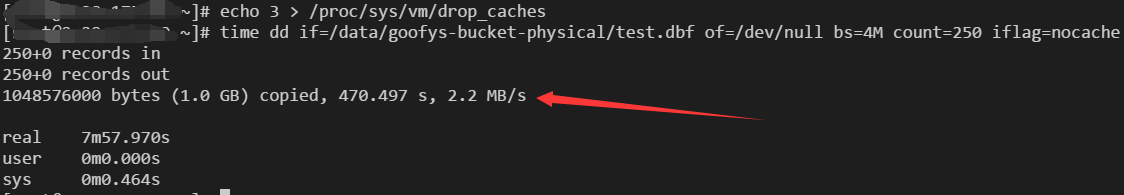

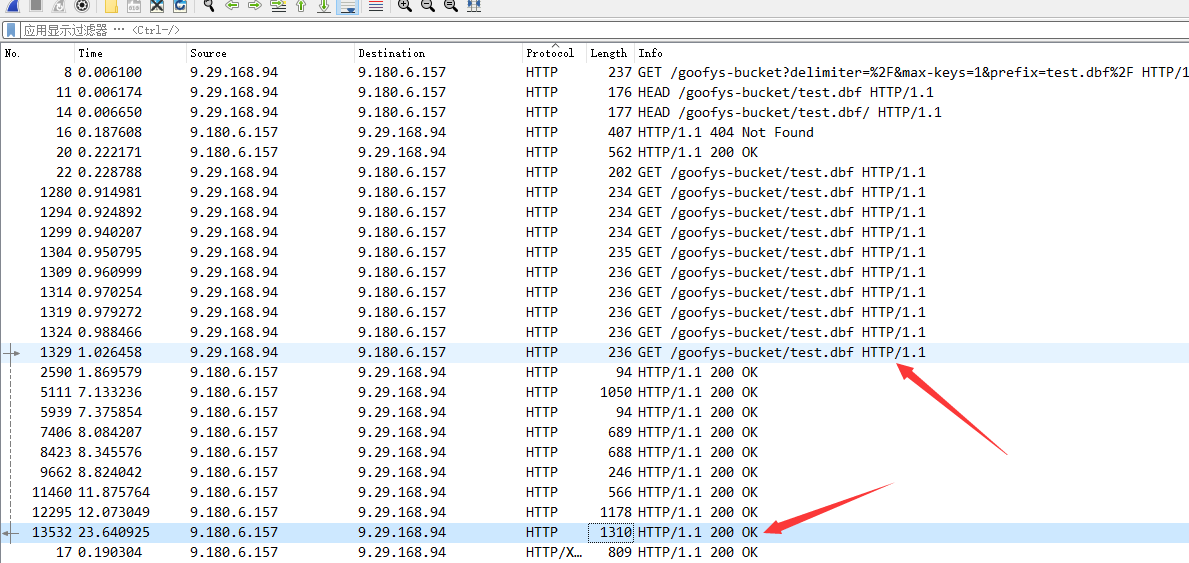

I also use tcpdump to capture the packet when I read 200M object. As the image shows, the 1st GET request cost about 1 second, but the 10th GET request cost about 22 seconds. The GET request become slower and slower.

What's the reason ?

When read 1000M object, there are 50 GET requests, each GET request read 20M. When do GET, the stack is: IOUtils::copyLarge -> IOUtils::skipFully -> IOUtils::skip -> InputStream::read.

It means, the 50th GET request which should read 980M-1000M, but to skip 0-980M, it also InputStream::read 0-980M. So the 1st GET request read 0-20M, the 2nd GET request read 0-40M, the 3rd GET request read 0-60M, ..., the 50th GET request read 0-1000M. So the GET request from 1st-50th become slower and slower.

You can also refer IO-203 and IO-355 why IOUtils implement skip by read rather than real skip, e.g. seek.

How to improve ?

Copy IOUtils::copyLarge to S3WrapperInputStream, and replace IOUtils::skipFully with S3WrapperInputStream::seek. No other changes of IOUtils::copyLarge.

After improving, read 1000M object cost 4.79 seconds, i.e. 219M/s, about 100 times faster.

What is the link to the Apache JIRA

https://issues.apache.org/jira/browse/HDDS-3223

How was this patch tested?

Existed UT and IT.