HDDS-6500. [Ozone-Streaming] Buffer the PutBlockRequest at the end of the stream. #3229

This header is actually included in every Ratis Stream request (see org.apache.ratis.netty.NettyDataStreamUtils#encodeDataStreamRequestHeader). Can we use it?

@guohao-rosicky , that is a Ratis header, not an Ozone header. Later on, Ratis may buffer small requests on the client side before sending them out, for performance; see https://issues.apache.org/jira/browse/RATIS-1157 . That's why we cannot depend on individual packet sizes or empty packets. As an analogy, we use TCP in streaming, but we may not be able to use the TCP header in our code.
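
Since Ratis may merge or split packets, the end-of-stream information has to be framed inside the Ozone payload itself. The sketch below is one hypothetical way to do that, assuming the client appends the serialized request followed by a 4-byte big-endian length; `TrailerFraming`, `encode` and `readRequest` are illustrative names, and the actual Ozone wire format may differ. The receiver locates the request from the last bytes of the complete stream, so packet boundaries never matter.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical framing sketch: [data][request][4-byte big-endian request length].
class TrailerFraming {
  static final int LENGTH_BYTES = Integer.BYTES;

  /** Client side: append the serialized request and its length after the data. */
  static byte[] encode(byte[] data, byte[] request) {
    ByteBuffer out = ByteBuffer.allocate(data.length + request.length + LENGTH_BYTES);
    out.put(data).put(request).putInt(request.length);
    return out.array();
  }

  /** Server side: locate the request using only the trailer of the complete stream. */
  static byte[] readRequest(byte[] stream) {
    if (stream.length < LENGTH_BYTES) {
      throw new IllegalArgumentException("stream shorter than the length trailer");
    }
    int end = stream.length - LENGTH_BYTES;
    int length = ByteBuffer.wrap(stream, end, LENGTH_BYTES).getInt();
    if (length < 0 || length > end) {
      throw new IllegalArgumentException("invalid request length: " + length);
    }
    return Arrays.copyOfRange(stream, end - length, end);
  }

  public static void main(String[] args) {
    byte[] stream = encode("chunk-data".getBytes(StandardCharsets.UTF_8),
        "put-block".getBytes(StandardCharsets.UTF_8));
    // The receiver needs only the complete byte sequence, not packet boundaries.
    System.out.println(new String(readRequest(stream), StandardCharsets.UTF_8));
  }
}
```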

Thanks @szetszwo for updating this PR. Let me test it first, and then we can continue the review.

@captainzmc , sure, please test this. Thanks a lot!

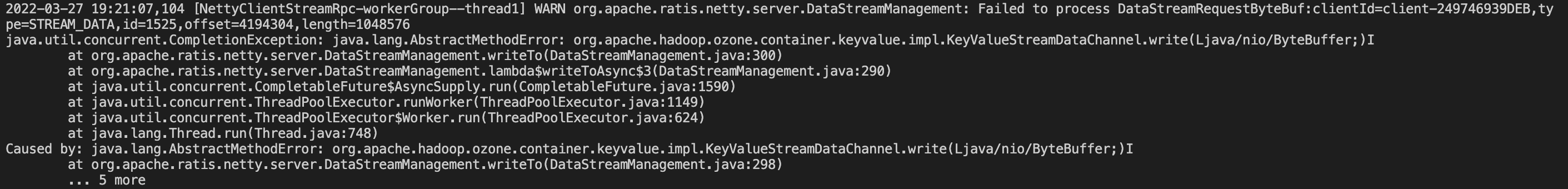

Hi @szetszwo. I just tested the PR and found that the previous test case did not run successfully: there are a lot of AbstractMethodErrors. Here is the datanode log. Can you confirm that?

@captainzmc , I guess you were somehow using an old version of Ratis/Ozone, since you got AbstractMethodError. Please try deleting the Maven cache and then rebuilding everything.

This change should work since it has already passed all the checks in https://github.com/apache/ozone/runs/5687893931

Oops, my mistake, I didn't replace all the jars. Let me recompile and retest.

I recompiled the code and the tests were fine.

Hi, @szetszwo and @captainzmc. The current code does not handle small files separately. Current process:

Is it necessary to continue #2860 after this PR is merged? Its transmission has only two steps:

Hi @guohao-rosicky. I think writing small files using Streaming should continue separately in #2860. @szetszwo, any suggestion here?

If we buffer everything before sending it out, the transmission of a small file is actually a single step. Indeed, writing a small file should take only one transmission; why would it need two? @captainzmc , @guohao-rosicky , I am okay with continuing to work on #2860. As mentioned above, we should just buffer everything on the client side.
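
A minimal sketch of the "buffer everything on the client side" idea, assuming a flush threshold `capacity` and treating the end-of-stream request as opaque bytes. `BufferedStreamWriter` is a hypothetical name, not the Ozone client API. A file smaller than the threshold reaches the transport exactly once, with the request appended to the same buffer.

```java
import java.io.ByteArrayOutputStream;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical client-side buffering sketch; not the Ozone client API.
class BufferedStreamWriter implements AutoCloseable {
  private final int capacity;                 // flush threshold in bytes
  private final byte[] closeRequest;          // stands in for a serialized PutBlock request
  private final Consumer<byte[]> transport;   // stands in for one network transmission
  private final ByteArrayOutputStream buffer = new ByteArrayOutputStream();

  BufferedStreamWriter(int capacity, byte[] closeRequest, Consumer<byte[]> transport) {
    this.capacity = capacity;
    this.closeRequest = closeRequest.clone();
    this.transport = transport;
  }

  /** Buffers data; sends only when the buffer reaches the capacity. */
  void write(byte[] data) {
    buffer.write(data, 0, data.length);
    if (buffer.size() >= capacity) {
      send();
    }
  }

  /** Appends the close request to whatever is still buffered and sends it. */
  @Override
  public void close() {
    buffer.write(closeRequest, 0, closeRequest.length);
    send();
  }

  private void send() {
    transport.accept(buffer.toByteArray());
    buffer.reset();
  }

  public static void main(String[] args) {
    List<byte[]> sent = new ArrayList<>();
    try (BufferedStreamWriter w = new BufferedStreamWriter(4096, new byte[16], sent::add)) {
      w.write(new byte[100]);
      w.write(new byte[200]);
    }
    System.out.println("transmissions: " + sent.size());
  }
}
```

With a 4096-byte threshold, writing 100 and then 200 bytes and closing results in a single transmission of 316 bytes (data plus the 16-byte stand-in request).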

Thanks @szetszwo. There are some conflicts in the current code. I think after resolving them, we can merge this PR first.

+1. Thanks @szetszwo, let's merge this.

@captainzmc , thanks a lot for reviewing and merging this!

Sure.

@captainzmc , I would like to run some benchmarks. Could you show me the detailed steps (including setting up a cluster)?

@szetszwo Sure, I will prepare a test document and update it here.

Hi @szetszwo, here is my test doc. It contains my test results and procedures. I recommend using Freon instead of my test code, because it's easier. Please leave me a message if you have any questions.

@captainzmc , thanks a lot for sharing the test details! Will definitely try it.

Hi @szetszwo, any progress on the streaming tests? Are there performance or stability issues? We can keep discussing if there are any questions.

@captainzmc , I tried running some single-client benchmarks. However, ASYNC performed better than STREAM. I found that we have not called RaftServerConfigKeys.DataStream.setAsyncWriteThreadPoolSize, so I temporarily made the following change:

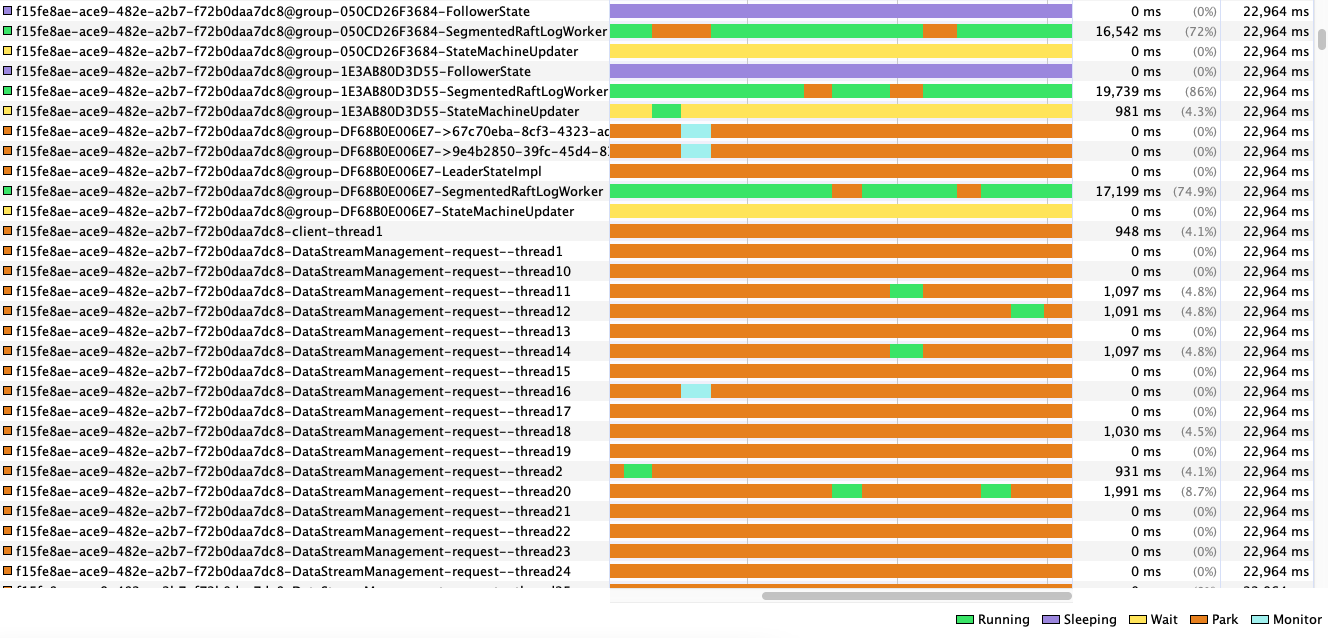

@szetszwo, our previous test results were similar: streaming performance is not as good as async. However, the bottleneck here is probably not the WriteThreadPool. In our tests we looked at the VM monitor, and WriteThreadPool and RequestThreadPool were mostly idle. So we think the problem might be Netty.

@captainzmc , ASYNC also uses Netty (gRPC + Netty). If the slowness in STREAM is due to Netty, then it should just be a configuration problem. BTW, we did not see a similar problem in the FileStore benchmarks we ran earlier.

Agreed. Maybe there are some Netty configurations that we haven't noticed.

@szetszwo Yes, I remember that. runzhiwang and I did the FileStore test together, and FileStore performed well at the time. But recently I noticed that runzhiwang had commented out a line of code in the FileStore test code, so the previous FileStore test had no startTransaction logic. This line is required in the Ozone code. That's the only difference I can think of so far.

I see. Then, if we move the startTransaction to the client side, it may help a lot. Let me try.

@captainzmc , @guohao-rosicky , after some tests, I found that the current client Stream code is slow. The Stream code was mainly copied from the Async code, and the flush and watch handling from Async seems to slow down Streaming since it calls wait().
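
To illustrate why a blocking wait() hurts streaming, here is a hypothetical sketch (not Ozone's actual watch/flush code; `CommitTracker`, `waitFor` and `watchAsync` are illustrative names) contrasting two ways of waiting for a log index to be committed: `waitFor` parks the writer thread in `Object.wait()`, so no more data is sent until replication catches up, whereas `watchAsync` returns a `CompletableFuture` immediately and lets the writer keep pipelining data.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;
import java.util.concurrent.CompletableFuture;

// Hypothetical sketch; not Ozone's actual watch/flush code.
class CommitTracker {
  private long committedIndex = 0;
  private final TreeMap<Long, CompletableFuture<Long>> pending = new TreeMap<>();

  /** Blocking style: parks the writer thread until the index is committed. */
  synchronized void waitFor(long index) throws InterruptedException {
    while (committedIndex < index) {
      wait();
    }
  }

  /** Non-blocking style: returns at once; the writer can keep sending data. */
  synchronized CompletableFuture<Long> watchAsync(long index) {
    if (committedIndex >= index) {
      return CompletableFuture.completedFuture(committedIndex);
    }
    return pending.computeIfAbsent(index, i -> new CompletableFuture<>());
  }

  /** Called when replication progress is reported. */
  void advance(long newIndex) {
    List<CompletableFuture<Long>> ready;
    synchronized (this) {
      if (newIndex <= committedIndex) {
        return;
      }
      committedIndex = newIndex;
      notifyAll(); // wakes threads blocked in waitFor()
      NavigableMap<Long, CompletableFuture<Long>> done = pending.headMap(newIndex, true);
      ready = new ArrayList<>(done.values());
      done.clear(); // clearing the view also removes the entries from pending
    }
    // Complete outside the lock so callbacks never run while holding it.
    for (CompletableFuture<Long> f : ready) {
      f.complete(newIndex);
    }
  }

  public static void main(String[] args) throws InterruptedException {
    CommitTracker tracker = new CommitTracker();
    CompletableFuture<Long> watch = tracker.watchAsync(5);
    System.out.println("pending before commit: " + !watch.isDone());
    tracker.advance(5);
    tracker.waitFor(5); // already committed, returns immediately
    System.out.println("committed at: " + watch.join());
  }
}
```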

Thanks @szetszwo for the test; the performance problem may not be easy to solve. I wonder if we can continue with HDDS-4474 (WriteSmallFile) and HDDS-5869 (S3Gateway)? Streaming will be almost complete once these two are done.

@captainzmc , I am fine with continuing to work on these two JIRAs. For HDDS-4474 (WriteSmallFile), I thought @guohao-rosicky was going to work on RATIS-1157? For HDDS-5869 (S3Gateway), it seems that the pull request is not yet able to pass all the tests.

Hi, @szetszwo

For now, I will focus on HDDS-5869 (S3Gateway), which will help us merge HDDS-4454 into master and include it in the Ozone 1.3.0 release.

As for RATIS-1157 and HDDS-4474 (WriteSmallFile), I think we can continue optimizing them after HDDS-4454 is merged into master.

Thanks @guohao-rosicky. Agreed. Ratis 2.3.0 is currently being released and will not contain RATIS-1157. We can improve the HDDS-4474 WriteSmallFile optimization after merging into master. @szetszwo, any suggestions here?

@captainzmc , @guohao-rosicky , I found out why Ozone-Streaming is slow: the watch requests slow it down. Will submit a PR.

… the stream. (apache#3229) (cherry picked from commit d0407c1)

Hi, @szetszwo . If the client exits abnormally, close() is never called on the stream. Could this leave org.apache.hadoop.ozone.container.keyvalue.impl.KeyValueStreamDataChannel.Buffers unreleased in memory?

@guohao-rosicky , thanks a lot for pointing it out! Would you like to submit a pull request to fix it? Or I can do it.
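
One possible shape for such a fix, sketched with hypothetical classes: `RefCountedBuffer` stands in for a reference-counted buffer, and `RetainedBuffers` is not the real `KeyValueStreamDataChannel.Buffers`; the hook that detects an aborted stream is also assumed. The idea is to release the retained buffers on both the normal `close()` path and an abort path, and to make the release idempotent so the two paths never double-release.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a reference-counted (e.g. Netty-style) buffer.
class RefCountedBuffer {
  private final AtomicInteger refCnt = new AtomicInteger(1);

  void release() {
    if (refCnt.decrementAndGet() < 0) {
      throw new IllegalStateException("released more than once");
    }
  }

  boolean isReleased() {
    return refCnt.get() <= 0;
  }
}

// Hypothetical holder: releases retained buffers on close() AND on cleanUp(),
// the latter meant to be called when a stream is aborted without being closed.
class RetainedBuffers implements AutoCloseable {
  private final Deque<RefCountedBuffer> buffers = new ArrayDeque<>();
  private boolean released = false;

  synchronized void retain(RefCountedBuffer b) {
    if (released) {
      b.release(); // do not leak buffers that arrive after cleanup
      throw new IllegalStateException("already released");
    }
    buffers.addLast(b);
  }

  /** Normal path: the client closed the stream. */
  @Override
  public void close() {
    releaseAll();
  }

  /** Abnormal path: e.g. invoked from a disconnect or timeout handler (assumed). */
  void cleanUp() {
    releaseAll();
  }

  // Idempotent, so calling both close() and cleanUp() never double-releases.
  private synchronized void releaseAll() {
    if (released) {
      return;
    }
    released = true;
    for (RefCountedBuffer b; (b = buffers.pollFirst()) != null; ) {
      b.release();
    }
  }

  public static void main(String[] args) {
    RetainedBuffers holder = new RetainedBuffers();
    RefCountedBuffer a = new RefCountedBuffer();
    holder.retain(a);
    holder.cleanUp(); // client vanished: close() never arrives
    holder.close();   // a late close() is harmless
    System.out.println("released: " + a.isReleased());
  }
}
```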

What changes were proposed in this pull request?

Retain the buffers (using RATIS-1556) so that the PutBlockRequest can be deserialized when the stream is closed.
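
A hypothetical sketch of retaining buffers until close with bounded memory. It assumes an upper bound `maxTailBytes` on the size of the trailing request (an assumption, not stated in this PR) and uses plain `ByteBuffer`s instead of the retained Ratis buffers from RATIS-1556; `TailRetainingBuffers` is an illustrative name. Older chunks are handed back for writing as soon as the newer retained chunks alone cover `maxTailBytes`, so the tail holding the PutBlockRequest is still in memory when the stream is closed.

```java
import java.nio.ByteBuffer;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical sketch; plain ByteBuffers stand in for retained Ratis buffers.
class TailRetainingBuffers {
  private final int maxTailBytes;          // assumed upper bound on the trailing request size
  private final Deque<ByteBuffer> retained = new ArrayDeque<>();
  private long retainedBytes = 0;

  TailRetainingBuffers(int maxTailBytes) {
    this.maxTailBytes = maxTailBytes;
  }

  /** Retains a chunk and returns older chunks no longer needed to cover the tail. */
  List<ByteBuffer> offer(ByteBuffer chunk) {
    retained.addLast(chunk);
    retainedBytes += chunk.remaining();
    List<ByteBuffer> writable = new ArrayList<>();
    // Hand back the oldest chunk only if the newer ones still cover maxTailBytes.
    while (retained.size() > 1
        && retainedBytes - retained.peekFirst().remaining() >= maxTailBytes) {
      ByteBuffer oldest = retained.pollFirst();
      retainedBytes -= oldest.remaining();
      writable.add(oldest);
    }
    return writable;
  }

  /** At close: concatenates the retained chunks, which contain the stream's tail. */
  ByteBuffer drain() {
    ByteBuffer tail = ByteBuffer.allocate(Math.toIntExact(retainedBytes));
    for (ByteBuffer b; (b = retained.pollFirst()) != null; ) {
      tail.put(b);
    }
    retainedBytes = 0;
    tail.flip();
    return tail;
  }

  public static void main(String[] args) {
    TailRetainingBuffers buffers = new TailRetainingBuffers(8);
    for (byte v = 1; v <= 3; v++) {
      List<ByteBuffer> writable = buffers.offer(ByteBuffer.wrap(new byte[] {v, v, v, v, v}));
      System.out.println("chunk " + v + ": " + writable.size() + " chunk(s) ready to write");
    }
    System.out.println("retained tail bytes at close: " + buffers.drain().remaining());
  }
}
```

With `maxTailBytes = 8` and three 5-byte chunks, the third `offer` returns the first chunk, and `drain()` yields the last 10 bytes.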

What is the link to the Apache JIRA

https://issues.apache.org/jira/browse/HDDS-6500

How was this patch tested?

New unit tests.